Jipeng Qiang

Chinese Idiom Paraphrasing

Apr 20, 2022

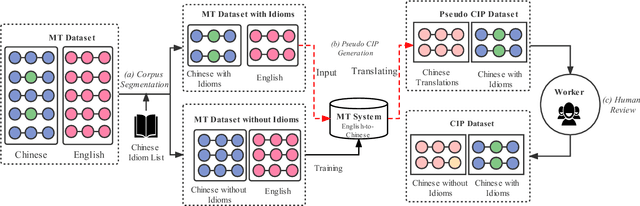

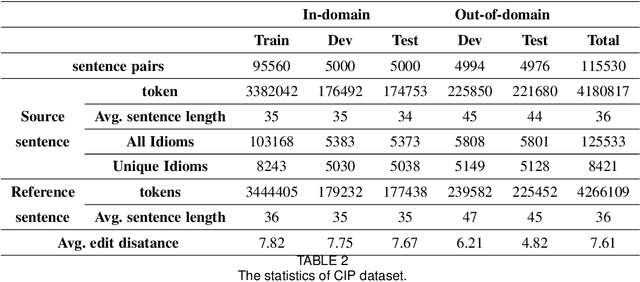

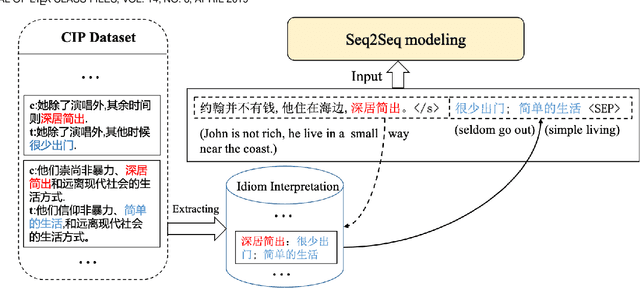

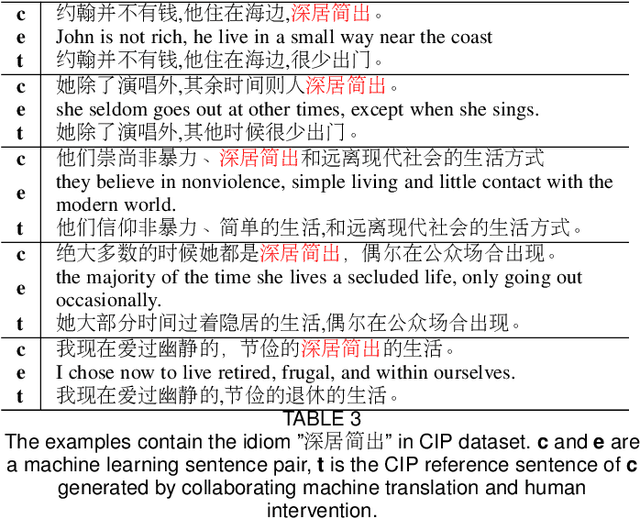

Abstract: Idioms are a kind of idiomatic expression in Chinese, most of which consist of four Chinese characters. Because of their non-compositionality and metaphorical meaning, Chinese idioms are hard for children and non-native speakers to understand. This study proposes a novel task, Chinese Idiom Paraphrasing (CIP). CIP aims to rephrase sentences that contain idioms into non-idiomatic ones while preserving the original sentence's meaning. Since sentences without idioms are easier for Chinese NLP systems to handle, CIP can be used to pre-process Chinese datasets, thereby improving the performance of Chinese NLP tasks such as machine translation, Chinese idiom cloze, and Chinese idiom embeddings. In this study, the CIP task is treated as a special paraphrase generation task. To circumvent difficulties in acquiring annotations, we first establish a large-scale CIP dataset through human and machine collaboration, which consists of 115,530 sentence pairs. We further deploy three baselines and two novel CIP approaches. The results show that the proposed methods outperform the baselines on the established CIP dataset.

Prompt-Learning for Short Text Classification

Mar 31, 2022

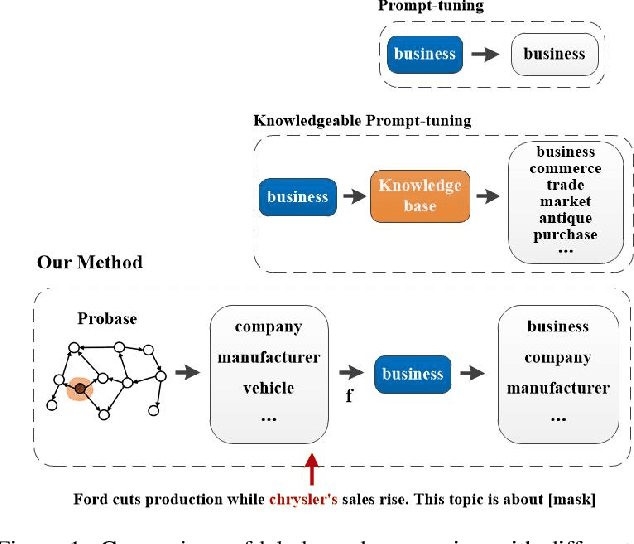

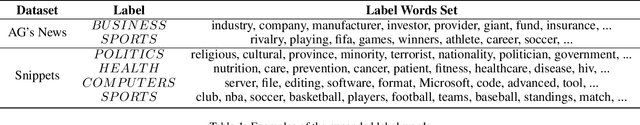

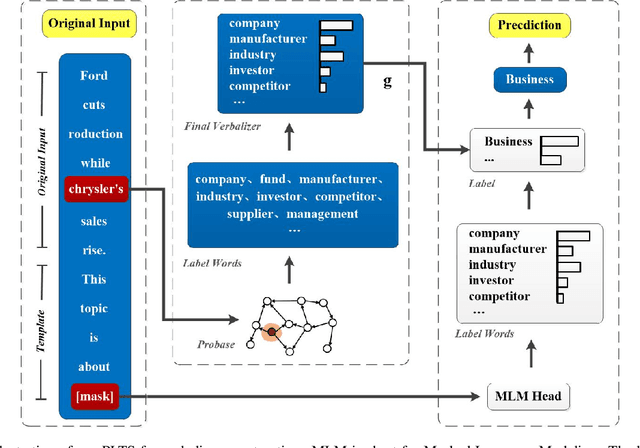

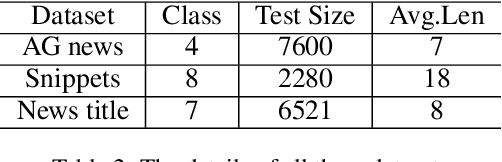

Abstract: In short texts, the extremely short length, feature sparsity, and high ambiguity pose huge challenges to classification tasks. Recently, prompt-learning has attracted a vast amount of attention and research as an effective method for tuning pre-trained language models for specific downstream tasks. The main intuition behind prompt-learning is to insert a template into the input and convert the text classification task into an equivalent cloze-style task. However, most prompt-learning methods expand label words manually or consider only the class name when incorporating knowledge into cloze-style prediction, which inevitably incurs omissions and bias in short text classification tasks. In this paper, we propose a simple short text classification approach that makes use of prompt-learning based on knowledgeable expansion. Taking the special characteristics of short text into consideration, the method considers both the short text itself and the class name when expanding the label word space. Specifically, the top $N$ concepts related to the entity in the short text are retrieved from an open knowledge graph such as Probase, and we further refine the expanded label words by calculating the distance between the selected concepts and the class labels. Experimental results show that our approach obtains a clear improvement over other fine-tuning, prompt-learning, and knowledgeable prompt-tuning methods, outperforming the state-of-the-art by up to 6 Accuracy points on three well-known datasets.
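
The label-word expansion described in this abstract (retrieve the top $N$ concepts for an entity, then keep those close to a class label) can be sketched roughly as follows. This is only an illustration under stated assumptions: the concept scores, the two-dimensional vectors, the cosine measure, the threshold, and the function name `expand_label_words` are all hypothetical and are not taken from the paper, which does not specify these details in the abstract.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def expand_label_words(concepts, concept_vectors, class_vectors, top_n=3, threshold=0.8):
    """Return {class label: [label words]}.

    concepts: list of (concept, relevance score) pairs for the entity,
              standing in for a knowledge-graph lookup (hypothetical data).
    A concept is added to its nearest class only if the similarity
    reaches the threshold (assumed refinement rule).
    """
    top = [c for c, _ in sorted(concepts, key=lambda x: x[1], reverse=True)[:top_n]]
    expanded = {label: [label] for label in class_vectors}
    for concept in top:
        vec = concept_vectors.get(concept)
        if vec is None:
            continue  # no embedding available for this concept
        best = max(class_vectors, key=lambda lab: cosine(vec, class_vectors[lab]))
        if cosine(vec, class_vectors[best]) >= threshold:
            expanded[best].append(concept)
    return expanded


# Toy data (all values are made up for illustration).
class_vectors = {"sports": [1.0, 0.0], "business": [0.0, 1.0]}
concept_vectors = {
    "company": [0.1, 0.9],
    "brand": [0.2, 0.8],
    "athlete": [0.9, 0.1],
    "person": [0.5, 0.5],
}
concepts = [("company", 0.6), ("brand", 0.3), ("athlete", 0.05), ("person", 0.02)]

label_words = expand_label_words(concepts, concept_vectors, class_vectors, top_n=4)
print(label_words)
```

In this toy run the ambiguous concept `person` sits equally far from both classes and is filtered out by the threshold, which is the kind of noise the refinement step is meant to remove.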

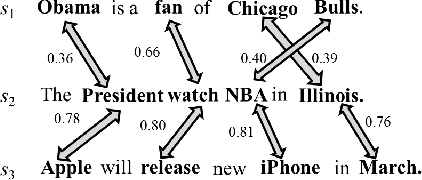

An Unsupervised Method for Building Sentence Simplification Corpora in Multiple Languages

Sep 01, 2021

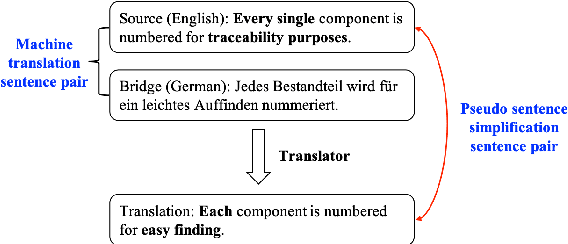

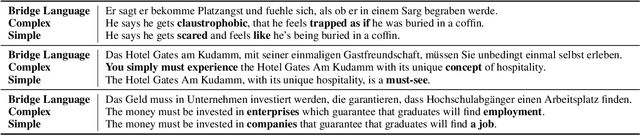

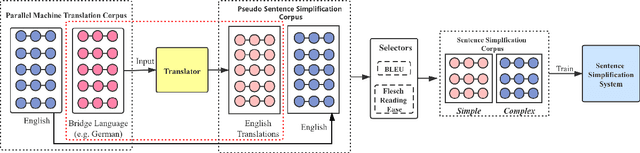

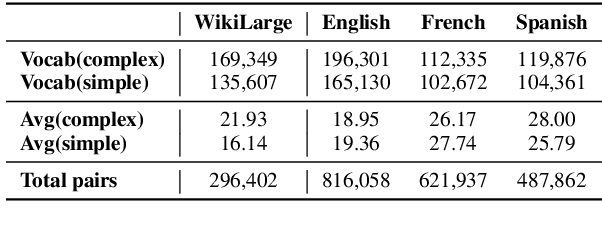

Abstract: Parallel sentence simplification (SS) corpora are scarce for neural SS modeling. We propose an unsupervised method to build SS corpora from large-scale bilingual translation corpora, alleviating the need for supervised SS corpora. Our method is motivated by two findings: neural machine translation models tend to generate more high-frequency tokens, and the source and target languages of a translation corpus differ in text complexity level. By pairing the source sentences of a translation corpus with the translations of their references in a bridge language, we can construct large-scale pseudo-parallel SS data. We then keep the sentence pairs with a higher complexity difference as SS sentence pairs. SS corpora built with this unsupervised approach satisfy the expectations that aligned sentences preserve the same meaning and differ in text complexity level. Experimental results show that SS methods trained on our corpora achieve state-of-the-art results and significantly outperform the results on the English benchmark WikiLarge.
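
The filtering step in this abstract (keep only pairs whose complexity levels differ enough) might look like the minimal sketch below. The complexity measure used here, mean negative log word frequency, is an assumption chosen for illustration; the abstract does not say which measure the authors use. The frequency table, the margin, and the function names are likewise hypothetical.

```python
import math


def complexity(sentence, word_freq):
    """Mean negative log frequency of the tokens; rarer words give a higher score.

    Unseen words are treated as having frequency 1 (assumption).
    """
    tokens = sentence.lower().split()
    if not tokens:
        return 0.0
    return -sum(math.log(word_freq.get(t, 1)) for t in tokens) / len(tokens)


def select_ss_pairs(pairs, word_freq, margin=1.0):
    """Keep pairs whose complexity gap is at least `margin`.

    Each kept pair is returned as (complex sentence, simple sentence).
    """
    kept = []
    for a, b in pairs:
        diff = complexity(a, word_freq) - complexity(b, word_freq)
        if abs(diff) >= margin:
            kept.append((a, b) if diff > 0 else (b, a))
    return kept


# Toy frequency table and pseudo-parallel pairs (made-up values).
word_freq = {"the": 1000, "cat": 100, "sat": 100, "dog": 100, "ran": 100,
             "feline": 2, "reposed": 1}
pairs = [
    ("the feline reposed", "the cat sat"),  # large gap, kept
    ("the dog ran", "the dog ran"),         # no gap, dropped
]

ss_pairs = select_ss_pairs(pairs, word_freq)
print(ss_pairs)
```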

Chinese Lexical Simplification

Oct 14, 2020

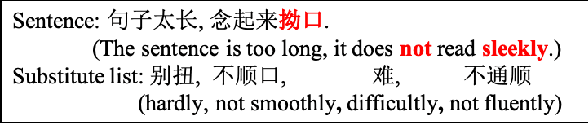

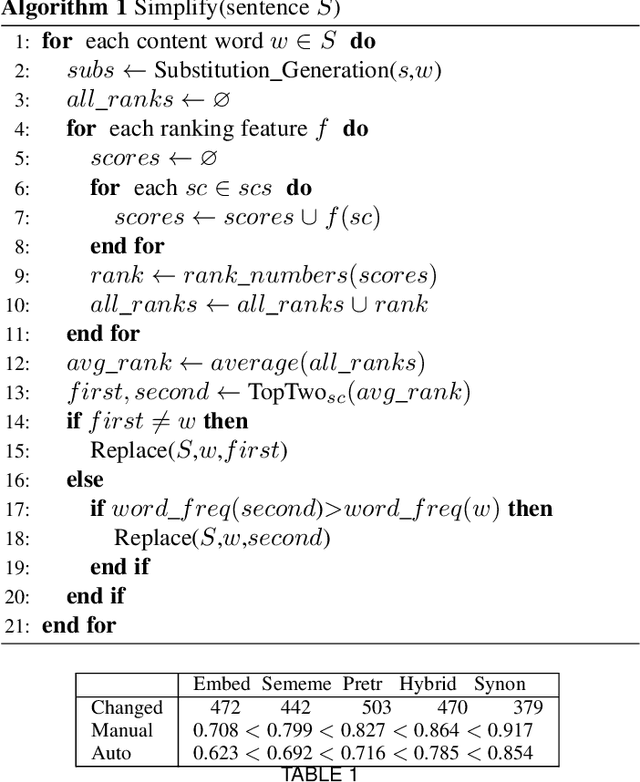

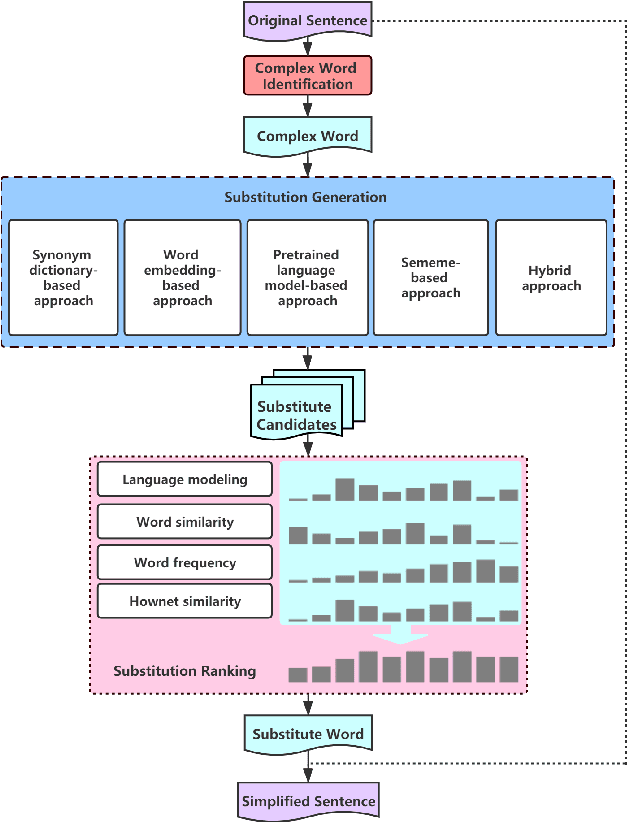

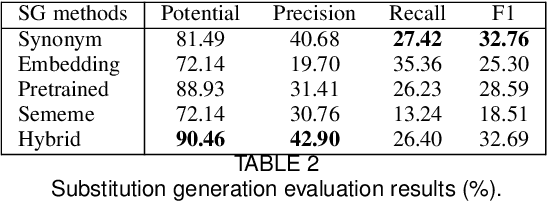

Abstract: Lexical simplification, the process of replacing complex words in a given sentence with simpler alternatives of equivalent meaning, has attracted much attention in many languages. Although the richness of Chinese vocabulary makes text very difficult to read for children and non-native speakers, there has been no research on the Chinese lexical simplification (CLS) task. To circumvent difficulties in acquiring annotations, we manually create the first benchmark dataset for CLS, which can be used to evaluate lexical simplification systems automatically. To enable a more thorough comparison, we present five different types of methods as baselines for generating substitute candidates for a complex word: a synonym-based approach, a word embedding-based approach, a pretrained language model-based approach, a sememe-based approach, and a hybrid approach. Finally, we design an experimental evaluation of these baselines and discuss their advantages and disadvantages. To the best of our knowledge, this is the first study of the CLS task.

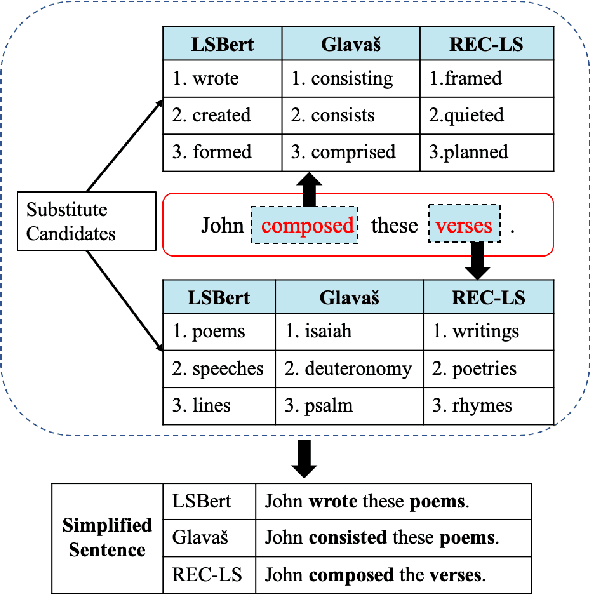

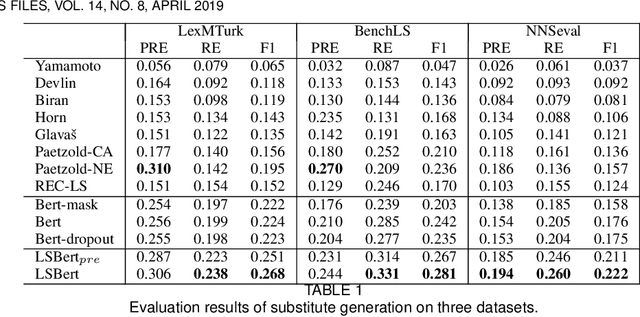

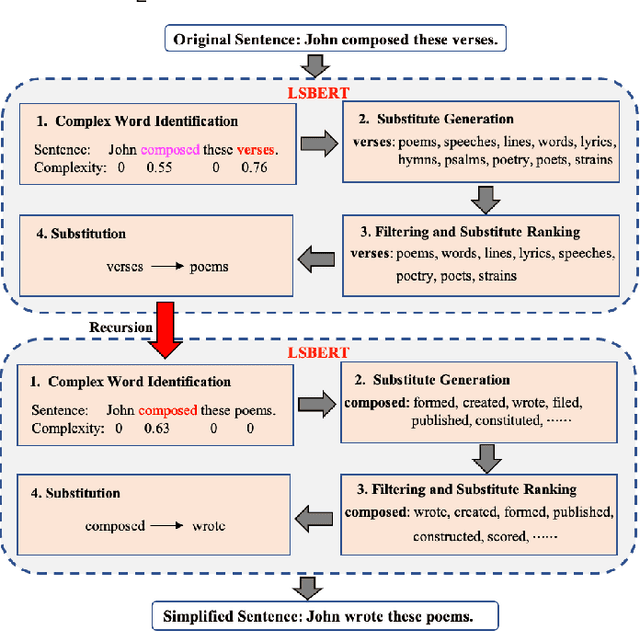

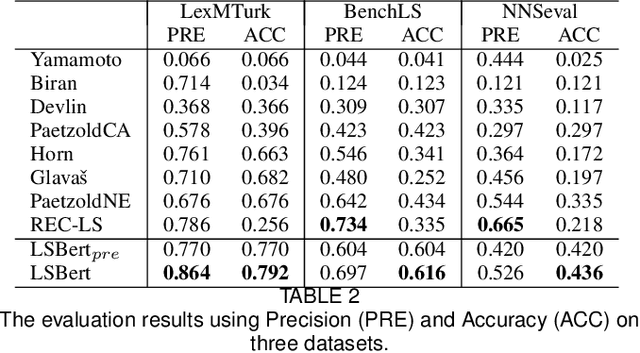

LSBert: A Simple Framework for Lexical Simplification

Jun 25, 2020

Abstract: Lexical simplification (LS) aims to replace complex words in a given sentence with simpler alternatives of equivalent meaning, in order to simplify the sentence. Recent unsupervised lexical simplification approaches rely only on the complex word itself, regardless of the given sentence, to generate candidate substitutions, which inevitably produces a large number of spurious candidates. In this paper, we propose LSBert, a lexical simplification framework based on the pretrained representation model Bert, that is capable of (1) making use of the wider context both when detecting the words in need of simplification and when generating substitute candidates, and (2) taking five high-quality features into account for ranking candidates, including Bert prediction order, a Bert-based language model, and the paraphrase database PPDB, in addition to the word frequency and word similarity commonly used in other LS methods. We show that our system outputs lexical simplifications that are grammatically correct and semantically appropriate, and obtains a clear improvement over these baselines, outperforming the state-of-the-art by 29.8 Accuracy points on three well-known benchmarks.
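
One simple way to combine several ranking features, as this abstract describes, is to rank the candidates under each feature separately and then order them by average rank. The sketch below assumes that combination rule; the abstract itself does not state how the five features are merged. The feature names, scores, and candidate words are invented for illustration.

```python
def rank_candidates(feature_scores):
    """Order candidates by their average rank across features.

    feature_scores: {feature name: {candidate: score}}, where a higher
    score is better for every feature (assumption of this sketch).
    """
    candidates = list(next(iter(feature_scores.values())))
    rank_sum = {c: 0 for c in candidates}
    for scores in feature_scores.values():
        ordered = sorted(candidates, key=lambda c: scores[c], reverse=True)
        for rank, cand in enumerate(ordered, start=1):
            rank_sum[cand] += rank
    n_features = len(feature_scores)
    return sorted(candidates, key=lambda c: rank_sum[c] / n_features)


# Toy scores for substitutes of a complex word (made-up values).
feature_scores = {
    "frequency": {"sat": 0.9, "rested": 0.5, "roosted": 0.1},
    "similarity": {"sat": 0.6, "rested": 0.7, "roosted": 0.8},
    "language_model": {"sat": 0.8, "rested": 0.6, "roosted": 0.3},
}

ranking = rank_candidates(feature_scores)
print(ranking)
```

Averaging ranks rather than raw scores avoids having to put features with different scales, such as frequencies and similarities, on a common scale.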

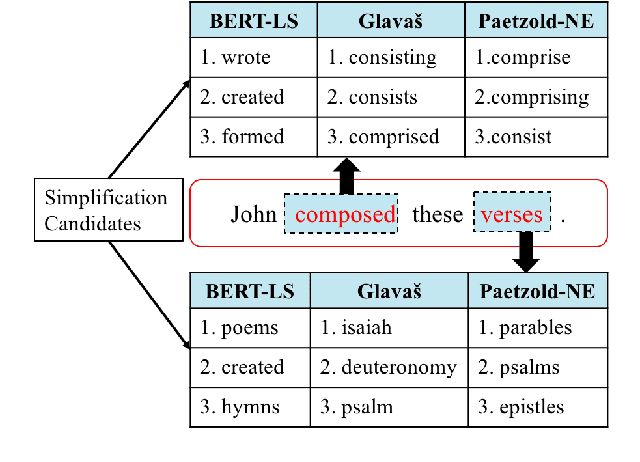

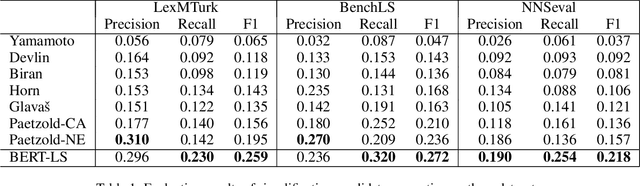

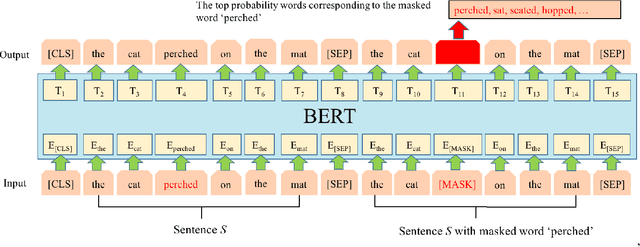

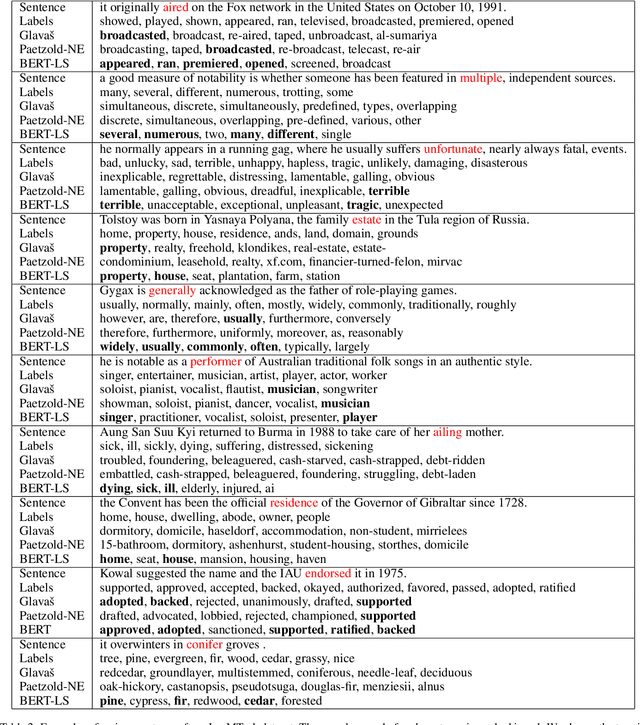

A Simple BERT-Based Approach for Lexical Simplification

Aug 16, 2019

Abstract: Lexical simplification (LS) aims to replace complex words in a given sentence with simpler alternatives of equivalent meaning. Recent unsupervised lexical simplification approaches rely only on the complex word itself, regardless of the given sentence, to generate candidate substitutions, which inevitably produces a large number of spurious candidates. We present a simple BERT-based LS approach that makes use of the pre-trained unsupervised deep bidirectional representations of BERT. Despite being entirely unsupervised, our approach obtains a clear improvement over baselines that leverage linguistic databases and parallel corpora, outperforming the state-of-the-art by more than 11 Accuracy points on three well-known benchmarks.

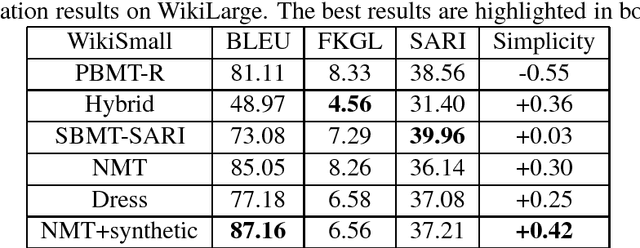

Improving Neural Text Simplification Model with Simplified Corpora

Oct 10, 2018

Abstract: Text simplification (TS) can be viewed as a monolingual translation task, translating between text variations within a single language. Recent neural TS models draw on insights from neural machine translation to learn lexical simplification and content reduction using an encoder-decoder model. Unlike in neural machine translation, however, we cannot obtain enough ordinary and simplified sentence pairs for TS, since they are expensive and time-consuming to build. Target-side simplified sentences play an important role in boosting fluency for statistical TS, and we investigate the use of simplified sentences for training, with no changes to the network architecture. We propose to pair each simple training sentence with a synthetic ordinary sentence obtained via back-translation, and to treat this synthetic data as additional training data. We train an encoder-decoder model on both synthetic and original sentence pairs, which obtains substantial improvements on the available WikiLarge and WikiSmall data compared with state-of-the-art methods.

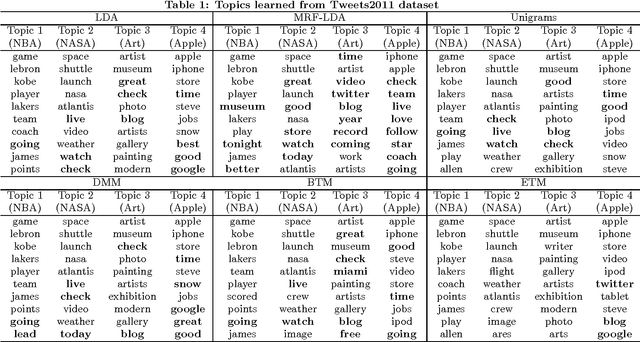

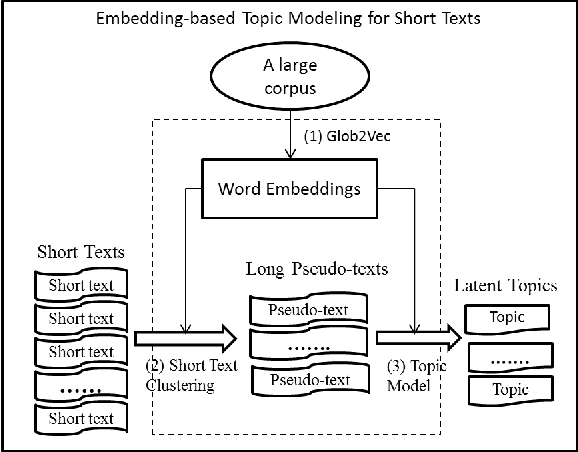

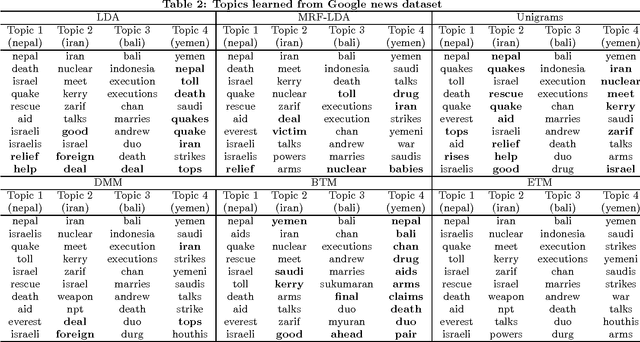

Topic Modeling over Short Texts by Incorporating Word Embeddings

Sep 27, 2016

Abstract: Inferring topics from the overwhelming amount of short texts has become a critical but challenging task for many content analysis applications, such as content characterization, user interest profiling, and emerging topic detection. Existing methods such as probabilistic latent semantic analysis (PLSA) and latent Dirichlet allocation (LDA) cannot solve this problem very well, since only very limited word co-occurrence information is available in short texts. This paper studies how to incorporate external word correlation knowledge into short texts to improve the coherence of topic modeling. Based on recent results in word embeddings that learn semantic representations for words from a large corpus, we introduce a novel method, Embedding-based Topic Model (ETM), to learn latent topics from short texts. ETM not only solves the problem of very limited word co-occurrence information by aggregating short texts into long pseudo-texts, but also utilizes a Markov Random Field regularized model that gives correlated words a better chance of being put into the same topic. Experiments on real-world datasets validate the effectiveness of our model compared with state-of-the-art models.

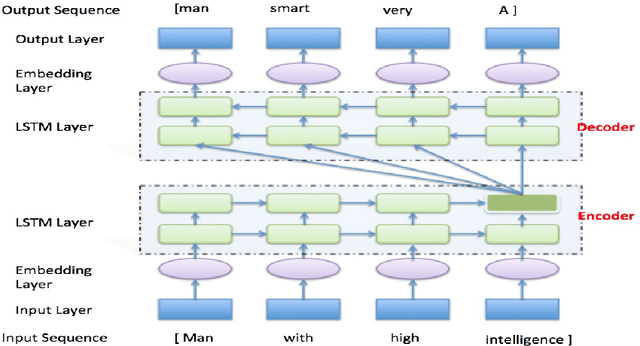

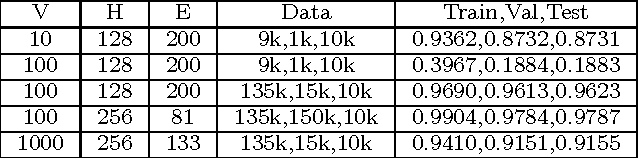

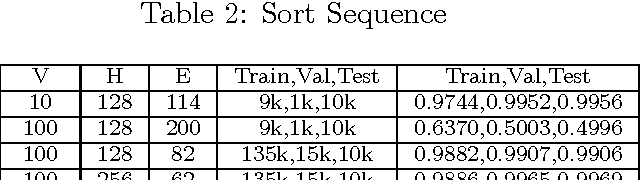

An Experimental Study of LSTM Encoder-Decoder Model for Text Simplification

Sep 13, 2016

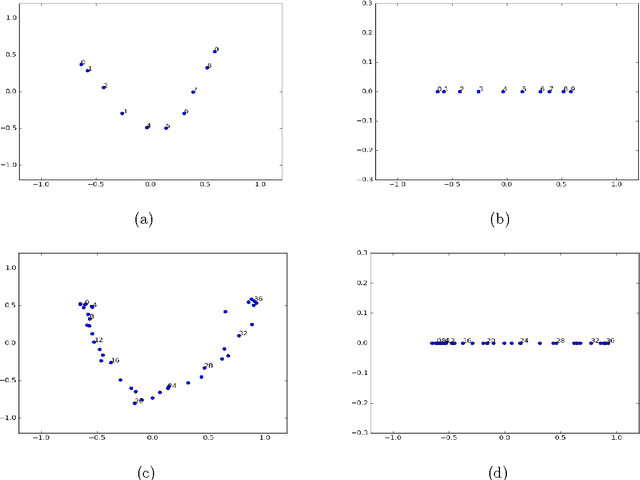

Abstract: Text simplification (TS) aims to reduce the lexical and structural complexity of a text while retaining its semantic meaning. Current automatic TS techniques are limited to either lexical-level applications or a large number of manually defined rules. Since deep neural networks are powerful models that have achieved excellent performance on many difficult tasks, in this paper we propose to use the Long Short-Term Memory (LSTM) Encoder-Decoder model for sentence-level TS, which makes minimal assumptions about word sequence. Our preliminary experiments show that the model is able to learn operation rules such as reversing, sorting, and replacing from sequence pairs, which suggests that the model may potentially discover and apply rules such as modifying sentence structure, substituting words, and removing words for TS.
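
The three operations named in this abstract (reversing, sorting, replacing) can each be expressed as a source and target sequence pair, which is the form an encoder-decoder model would be trained on. The sketch below only generates such toy pairs; it is not the authors' experimental setup, and the integer vocabulary, sequence length, and function names are assumptions made for illustration.

```python
import random


def make_pair(seq, operation, replace_map=None):
    """Return (source, target) for one of the three toy operations."""
    if operation == "reverse":
        return seq, seq[::-1]
    if operation == "sort":
        return seq, sorted(seq)
    if operation == "replace":
        mapping = replace_map or {}
        return seq, [mapping.get(tok, tok) for tok in seq]
    raise ValueError(f"unknown operation: {operation}")


def make_dataset(n, length, vocab_size, operation, seed=0, replace_map=None):
    """Generate n random integer sequences and their transformed targets."""
    rng = random.Random(seed)  # fixed seed so the data is reproducible
    data = []
    for _ in range(n):
        seq = [rng.randrange(vocab_size) for _ in range(length)]
        data.append(make_pair(seq, operation, replace_map))
    return data


reverse_pair = make_pair([3, 1, 2], "reverse")
sort_pair = make_pair([3, 1, 2], "sort")
replace_pair = make_pair([3, 1, 2], "replace", {1: 9})
print(reverse_pair, sort_pair, replace_pair)
```

The analogy drawn in the abstract is that reversing and sorting resemble changes to sentence structure, while replacing resembles word substitution.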
