Jacob Chakareski

ElasticVR: Elastic Task Computing in Multi-User Multi-Connectivity Wireless Virtual Reality (VR) Systems

Dec 13, 2025

Abstract:Diverse emerging VR applications integrate streaming of high fidelity 360 video content that requires ample amounts of computation and data rate. Scalable 360 video tiling enables elastic VR computational tasks that can be scaled adaptively in computation and data rate based on the available user and system resources. We integrate scalable 360 video tiling in an edge-client wireless multi-connectivity architecture, called ElasticVR, for joint elastic task computation offloading across multiple VR users. To balance the trade-offs in communication, computation, energy consumption, and QoE that arise herein, we formulate a constrained QoE and energy optimization problem that integrates the multi-user/multi-connectivity action space with the elasticity of VR computational tasks. The ElasticVR framework introduces two multi-agent deep reinforcement learning solutions, namely CPPG and IPPG. CPPG adopts a centralized training and centralized execution approach to capture the coupling between users' communication and computational demands. This leads to globally coordinated decisions at the cost of increased computational overheads and limited scalability. To address the latter challenges, we also explore an alternative strategy denoted IPPG that adopts a centralized training with decentralized execution paradigm. IPPG leverages shared information and parameter sharing to learn robust policies; however, during execution, each user takes action independently based on its local state information only. The decentralized execution alleviates the communication and computation overhead of centralized decision-making and improves scalability. We show that the ElasticVR framework improves the PSNR by 43.21%, while reducing the response time and energy consumption by 42.35% and 56.83%, respectively, compared with a case where no elasticity is incorporated into VR computations.

Cross-Layer Design for Near-Field mmWave Beam Management and Scheduling under Delay-Sensitive Traffic

Nov 16, 2025

Abstract:Next-generation wireless networks will rely on mmWave/sub-THz spectrum and extremely large antenna arrays (ELAAs). This will push their operation into the near field where far-field beam management degrades and beam training becomes more costly and must be done more frequently. Because ELAA training and data transmission consume energy and training trades off with service time, we pose a cross-layer control problem that couples PHY-layer beam management with MAC-layer service under delay-sensitive traffic. The controller decides when to retrain and how aggressively to train (pilot count and sparsity) while allocating transmit power, explicitly balancing pilot overhead, data-phase rate, and energy to reduce the queueing delay of MAC-layer frames/packets to be transmitted. We model the problem as a partially observable Markov decision process and solve it with deep reinforcement learning. In simulations with a realistic near-field channel and varying mobility and traffic load, the learned policy outperforms strong 5G-NR-style baselines at a comparable energy: it achieves 85.5% higher throughput than DFT sweeping and reduces the overflow rate by 78%. These results indicate a practical path to overhead-aware, traffic-adaptive near-field beam management with implications for emerging low-latency, high-rate next-generation applications such as digital twin, spatial computing, and immersive communication.

Multi-Task Decision-Making for Multi-User 360 Video Processing over Wireless Networks

Jul 03, 2024

Abstract:We study a multi-task decision-making problem for 360 video processing in a wireless multi-user virtual reality (VR) system that includes an edge computing unit (ECU) to deliver 360 videos to VR users and offer computing assistance for decoding/rendering of video frames. However, this computing assistance comes at the expense of increased data volume and required bandwidth. To balance this trade-off, we formulate a constrained quality of experience (QoE) maximization problem in which the rebuffering time and quality variation between video frames are bounded by user and video requirements. To solve the formulated multi-user QoE maximization, we leverage deep reinforcement learning (DRL) for multi-task rate adaptation and computation distribution (MTRC). The proposed MTRC approach does not rely on any predefined assumption about the environment; instead, it uses video playback statistics (i.e., past throughput, decoding time, transmission time, etc.), video information, and the resulting performance to adjust the video bitrate and computation distribution. We train MTRC with real-world wireless network traces and 360 video datasets to obtain evaluation results in terms of the average QoE, peak signal-to-noise ratio (PSNR), rebuffering time, and quality variation. Our results indicate that MTRC improves the users' QoE compared to a state-of-the-art rate adaptation algorithm. Specifically, we show a 5.97 dB to 6.44 dB improvement in PSNR, a 1.66X to 4.23X improvement in rebuffering time, and a 4.21 dB to 4.35 dB improvement in quality variation.

BONES: Near-Optimal Neural-Enhanced Video Streaming

Oct 15, 2023

Abstract:Accessing high-quality video content can be challenging due to insufficient and unstable network bandwidth. Recent advances in neural enhancement have shown promising results in improving the quality of degraded videos through deep learning. Neural-Enhanced Streaming (NES) incorporates this new approach into video streaming, allowing users to download low-quality video segments and then enhance them to obtain high-quality content without violating the playback of the video stream. We introduce BONES, an NES control algorithm that jointly manages the network and computational resources to maximize the quality of experience (QoE) of the user. BONES formulates NES as a Lyapunov optimization problem and solves it in an online manner with near-optimal performance, making it the first NES algorithm to provide a theoretical performance guarantee. Our comprehensive experimental results indicate that BONES increases QoE by 4% to 13% over state-of-the-art algorithms, demonstrating its potential to enhance the video streaming experience for users. Our code and data will be released to the public.
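
The abstract above does not spell out the BONES decision rule, so the following is only a generic illustration of Lyapunov (drift-plus-penalty) online control, not the BONES algorithm itself. It assumes a single virtual queue, and the `Option` fields, the trade-off weight `v`, and the per-slot `service` amount are all hypothetical names introduced for this sketch. In each slot the controller picks the option that minimizes `queue * load - v * utility`, then updates the queue.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Option:
    """One hypothetical per-segment choice (illustrative, not from the paper)."""
    name: str
    utility: float  # quality gained by picking this option
    load: float     # work this option adds to the virtual queue


def choose_option(queue: float, options: list[Option], v: float) -> Option:
    """Drift-plus-penalty rule: minimize queue * load - v * utility."""
    return min(options, key=lambda o: queue * o.load - v * o.utility)


def update_queue(queue: float, load: float, service: float) -> float:
    """Virtual-queue update: Q <- max(Q + load - service, 0)."""
    return max(queue + load - service, 0.0)


def simulate(options: list[Option], v: float, service: float, steps: int):
    """Run the greedy per-slot rule and return the picks and final queue."""
    queue, picks = 0.0, []
    for _ in range(steps):
        choice = choose_option(queue, options, v)
        picks.append(choice.name)
        queue = update_queue(queue, choice.load, service)
    return picks, queue
```

A larger `v` weights utility more heavily and tolerates a longer queue; a smaller `v` keeps the queue short at the cost of utility. That is the usual knob in this family of online algorithms.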

CU-Net: Efficient Point Cloud Color Upsampling Network

Sep 12, 2022

Abstract:Point cloud upsampling is necessary for Augmented Reality, Virtual Reality, and telepresence scenarios. Although the geometry upsampling is well studied to densify point cloud coordinates, the upsampling of colors has been largely overlooked. In this paper, we propose CU-Net, the first deep-learning point cloud color upsampling model. Leveraging a feature extractor based on sparse convolution and a color prediction module based on neural implicit function, CU-Net achieves linear time and space complexity. Therefore, CU-Net is theoretically guaranteed to be more efficient than most existing methods with quadratic complexity. Experimental results demonstrate that CU-Net can colorize a photo-realistic point cloud with nearly a million points in real time, while having better visual quality than baselines. Besides, CU-Net can adapt to an arbitrary upsampling ratio and unseen objects. Our source code will be released to the public soon.

Towards Enabling Next Generation Societal Virtual Reality Applications for Virtual Human Teleportation

Aug 09, 2022

Abstract:Virtual reality (VR) is an emerging technology of great societal potential. Some of its most exciting and promising use cases include remote scene content and untethered lifelike navigation. This article first highlights the relevance of such future societal applications and the challenges ahead towards enabling them. It then provides a broad and contextual high-level perspective of several emerging technologies and unconventional techniques and argues that only by their synergistic integration can the fundamental performance bottlenecks of hyper-intensive computation, ultra-high data rate, and ultra-low latency be overcome to enable untethered and lifelike VR-based remote scene immersion. A novel future system concept is introduced that embodies this holistic integration, unified with a rigorous analysis, to capture the fundamental synergies and interplay between communications, computation, and signal scalability that arise in this context, and advance its performance at the same time. Several representative results highlighting these trade-offs and the benefits of the envisioned system are presented at the end.

Accelerated Structure-Aware Reinforcement Learning for Delay-Sensitive Energy Harvesting Wireless Sensors

Jul 22, 2018

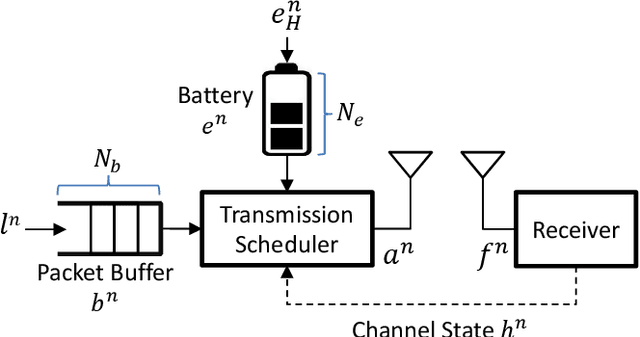

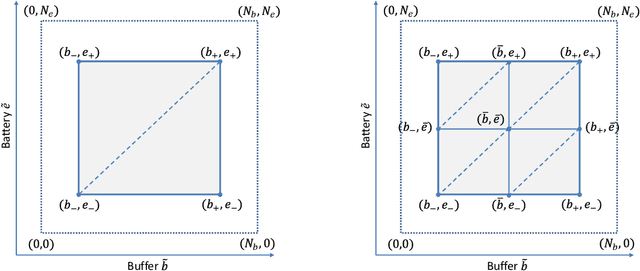

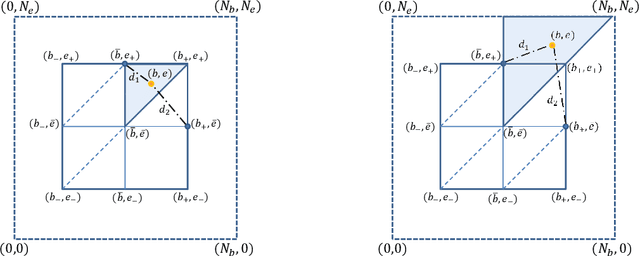

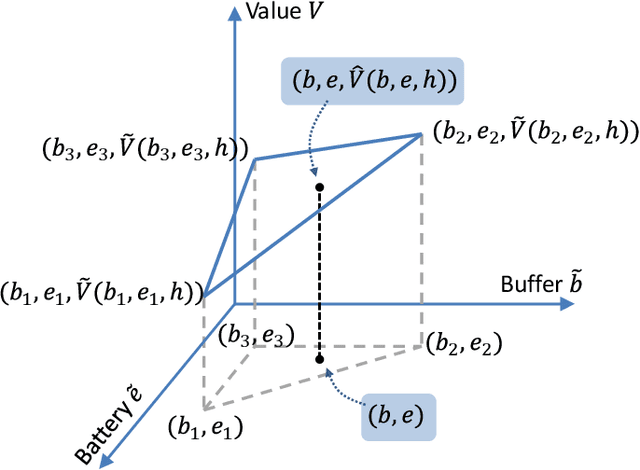

Abstract:We investigate an energy-harvesting wireless sensor transmitting latency-sensitive data over a fading channel. The sensor injects captured data packets into its transmission queue and relies on ambient energy harvested from the environment to transmit them. We aim to find the optimal scheduling policy that decides whether or not to transmit the queue's head-of-line packet at each transmission opportunity such that the expected packet queuing delay is minimized given the available harvested energy. No prior knowledge of the stochastic processes that govern the channel, captured data, or harvested energy dynamics is assumed, thereby necessitating the use of online learning to optimize the scheduling policy. We formulate this scheduling problem as a Markov decision process (MDP) and analyze the structural properties of its optimal value function. In particular, we show that it is non-decreasing and has increasing differences in the queue backlog and that it is non-increasing and has increasing differences in the battery state. We exploit this structure to formulate a novel accelerated reinforcement learning (RL) algorithm to solve the scheduling problem online at a much faster learning rate, while limiting the induced computational complexity. Our experiments demonstrate that the proposed algorithm closely approximates the performance of an optimal offline solution that requires a priori knowledge of the channel, captured data, and harvested energy dynamics. Simultaneously, by leveraging the value function's structure, our approach achieves competitive performance relative to a state-of-the-art RL algorithm, at potentially orders of magnitude lower complexity. Finally, considerable performance gains are demonstrated over the well-known Q-learning algorithm.
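
For orientation, the Q-learning baseline mentioned at the end of this abstract can be sketched on a toy version of the transmit-or-hold problem. This is plain tabular Q-learning, not the accelerated structure-aware algorithm the paper proposes. Everything about the model is an assumption made for illustration: the buffer and battery sizes, the Bernoulli arrival, harvest, and channel probabilities, the energy cost per transmission, and the use of the backlog as a per-slot delay cost.

```python
import random

MAX_QUEUE, MAX_BATTERY = 5, 5    # toy buffer and battery sizes (assumed)
P_ARRIVAL, P_HARVEST = 0.4, 0.5  # assumed Bernoulli arrival/harvest rates
P_GOOD = 0.5                     # assumed probability of a good channel
ACTIONS = (0, 1)                 # 0 = hold, 1 = transmit head-of-line packet


def step(state, action, rng):
    """Advance the toy sensor by one slot; return (next_state, cost)."""
    queue, battery, channel = state
    energy_needed = 1 if channel == 1 else 2  # bad channel costs more energy
    if action == 1 and queue > 0 and battery >= energy_needed:
        queue -= 1
        battery -= energy_needed
    cost = queue  # backlog left after the decision, a proxy for queuing delay
    if rng.random() < P_ARRIVAL:
        queue = min(queue + 1, MAX_QUEUE)
    if rng.random() < P_HARVEST:
        battery = min(battery + 1, MAX_BATTERY)
    channel = 1 if rng.random() < P_GOOD else 0
    return (queue, battery, channel), cost


def q_learning(steps=50_000, alpha=0.1, gamma=0.95, epsilon=0.1, seed=0):
    """Tabular Q-learning that minimizes discounted backlog cost."""
    rng = random.Random(seed)
    q = {((b, e, c), a): 0.0
         for b in range(MAX_QUEUE + 1)
         for e in range(MAX_BATTERY + 1)
         for c in (0, 1)
         for a in ACTIONS}
    state = (0, 0, 1)
    for _ in range(steps):
        if rng.random() < epsilon:  # epsilon-greedy exploration
            action = rng.choice(ACTIONS)
        else:
            action = min(ACTIONS, key=lambda a: q[(state, a)])
        next_state, cost = step(state, action, rng)
        target = cost + gamma * min(q[(next_state, a)] for a in ACTIONS)
        q[(state, action)] += alpha * (target - q[(state, action)])
        state = next_state
    return q


def greedy_action(q, state):
    """Return the action with the lowest learned cost-to-go in this state."""
    return min(ACTIONS, key=lambda a: q[(state, a)])
```

Because the table is learned entry by entry with no knowledge of how neighbouring states relate, convergence is slow. The monotonicity and increasing-differences properties described in the abstract are the kind of structure such a learner ignores.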

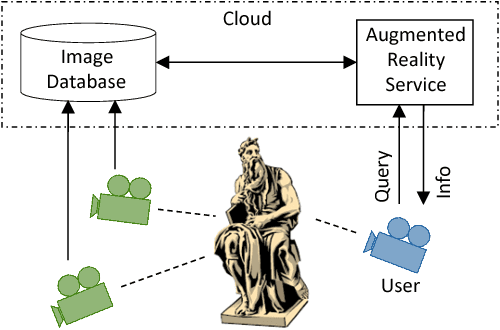

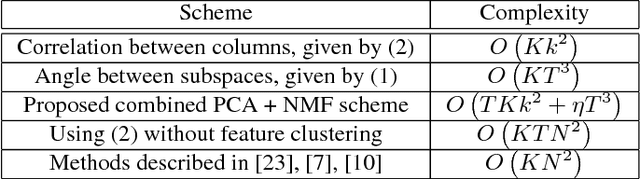

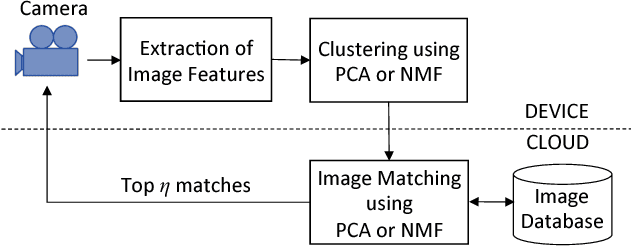

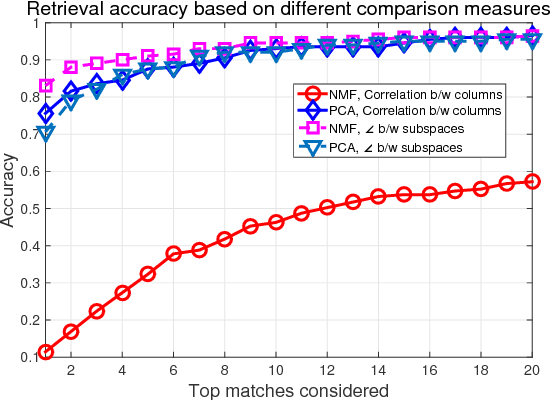

Matrix Factorization-Based Clustering Of Image Features For Bandwidth-Constrained Information Retrieval

May 07, 2016

Abstract:We consider the problem of accurately and efficiently querying a remote server to retrieve information about images captured by a mobile device. In addition to reduced transmission overhead and computational complexity, the retrieval protocol should be robust to variations in the image acquisition process, such as translation, rotation, scaling, and sensor-related differences. We propose to extract scale-invariant image features and then perform clustering to reduce the number of features needed for image matching. Principal Component Analysis (PCA) and Non-negative Matrix Factorization (NMF) are investigated as candidate clustering approaches. The image matching complexity at the database server is quadratic in the (small) number of clusters, not in the (very large) number of image features. We employ an image-dependent information content metric to approximate the model order, i.e., the number of clusters, needed for accurate matching, which is preferable to setting the model order using trial and error. We show how to combine the hypotheses provided by PCA and NMF factor loadings, thereby obtaining more accurate retrieval than using either approach alone. In experiments on a database of urban images, we obtain a top-1 retrieval accuracy of 89% and a top-3 accuracy of 92.5%.
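
As a rough illustration of clustering by factor loadings, the sketch below runs non-negative matrix factorization with the standard Lee-Seung multiplicative updates and assigns each column to the factor with the largest loading. The abstract does not describe the paper's pipeline at this level of detail, so this is a sketch under assumptions: the data layout (rows are descriptor dimensions, columns are image features), the argmax assignment rule, and all function names are hypothetical. The model order `rank` is simply passed in rather than estimated from an information content metric.

```python
import random


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)]
            for row in a]


def transpose(m):
    return [list(col) for col in zip(*m)]


def frobenius_error(v, w, h):
    """Squared Frobenius norm of V - W H."""
    wh = matmul(w, h)
    return sum((v[i][j] - wh[i][j]) ** 2
               for i in range(len(v)) for j in range(len(v[0])))


def nmf(v, rank, iterations=200, seed=0, eps=1e-9):
    """Multiplicative updates for V ~ W H with W, H kept non-negative."""
    rng = random.Random(seed)
    n, m = len(v), len(v[0])
    w = [[rng.random() + 0.1 for _ in range(rank)] for _ in range(n)]
    h = [[rng.random() + 0.1 for _ in range(m)] for _ in range(rank)]
    for _ in range(iterations):
        wt = transpose(w)
        num, den = matmul(wt, v), matmul(matmul(wt, w), h)
        h = [[h[k][j] * num[k][j] / (den[k][j] + eps) for j in range(m)]
             for k in range(rank)]
        ht = transpose(h)
        num, den = matmul(v, ht), matmul(matmul(w, h), ht)
        w = [[w[i][k] * num[i][k] / (den[i][k] + eps) for k in range(rank)]
             for i in range(n)]
    return w, h


def assign_clusters(h):
    """Label each column by the factor with the largest loading."""
    return [max(range(len(h)), key=lambda k: h[k][j])
            for j in range(len(h[0]))]
```

With `rank` much smaller than the number of columns, matching can then operate on the `rank` cluster representatives instead of on every feature, which is the complexity saving the abstract refers to.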
