Nicholas Mastronarde

BenchLink: An SoC-Based Benchmark for Resilient Communication Links in GPS-Denied Environments

Dec 24, 2025

Abstract: Accurate timing and synchronization, typically enabled by GPS, are essential for modern wireless communication systems. However, many emerging applications must operate in GPS-denied environments where GPS signals are unreliable or disrupted, resulting in oscillator drift and carrier frequency impairments. To address these challenges, we present BenchLink, a System-on-Chip (SoC)-based benchmark for resilient communication links that functions without GPS and supports adaptive pilot density and modulation. Unlike traditional General Purpose Processor (GPP)-based software-defined radios (e.g., USRPs), the SoC-based design allows for more precise latency control. We implement and evaluate BenchLink on Zynq UltraScale+ MPSoCs and demonstrate its effectiveness in both ground and aerial environments. We have also collected a comprehensive dataset under various conditions. We will make both the SoC-based link design and the dataset available to the wireless community. BenchLink is expected to facilitate future research on data-driven link adaptation, resilient synchronization in GPS-denied scenarios, and emerging applications that require precise latency control, such as integrated radar sensing and communication.
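
The abstract attributes the impairments to oscillator drift, which appears at the receiver as a carrier frequency offset (CFO). As a minimal sketch of one standard pilot-based remedy (a Moose-style correlation between two repeated pilot copies), and not necessarily the estimator BenchLink uses, the Python below estimates the CFO from the phase rotation between the copies. The pilot shape, sample rate, and the function names `apply_cfo` and `estimate_cfo` are illustrative assumptions.

```python
import cmath
import math


def apply_cfo(samples, cfo_hz, sample_rate):
    """Rotate complex baseband samples as a carrier frequency offset of cfo_hz would."""
    return [s * cmath.exp(2j * math.pi * cfo_hz * n / sample_rate)
            for n, s in enumerate(samples)]


def estimate_cfo(rx, period, sample_rate):
    """Estimate the CFO (Hz) from two back-to-back copies of a pilot of `period` samples.

    A CFO of f Hz advances the phase by 2*pi*f*period/sample_rate between the
    two copies, so the angle of their correlation reveals f. The estimate is
    unambiguous only for |f| < sample_rate / (2 * period).
    """
    corr = sum(rx[n + period] * rx[n].conjugate() for n in range(period))
    return cmath.phase(corr) * sample_rate / (2 * math.pi * period)


if __name__ == "__main__":
    # Hypothetical 16-sample chirp pilot, sent twice, with a 1.5 kHz offset at 1 MS/s.
    pilot = [cmath.exp(1j * math.pi * k * k / 16) for k in range(16)]
    rx = apply_cfo(pilot + pilot, 1500.0, 1e6)
    cfo = estimate_cfo(rx, 16, 1e6)
    # De-rotate with the estimate before demodulation; the residual should be near zero.
    corrected = apply_cfo(rx, -cfo, 1e6)
    print(f"estimated CFO: {cfo:.1f} Hz, residual: {estimate_cfo(corrected, 16, 1e6):.3g} Hz")
```

The pilot period sets a trade-off: a longer period averages more samples but narrows the unambiguous range to |f| < sample_rate / (2 * period), while repeating pilots more often lets a receiver track drift as it evolves, which is one plausible reason adaptive pilot density helps when no GPS-disciplined reference is available.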

Cross-Layer Design for Near-Field mmWave Beam Management and Scheduling under Delay-Sensitive Traffic

Nov 16, 2025

Abstract: Next-generation wireless networks will rely on mmWave/sub-THz spectrum and extremely large antenna arrays (ELAAs). This will push their operation into the near field, where far-field beam management degrades and beam training becomes more costly and must be performed more frequently. Because ELAA training and data transmission consume energy, and time spent training reduces the time available for service, we pose a cross-layer control problem that couples PHY-layer beam management with MAC-layer service under delay-sensitive traffic. The controller decides when to retrain and how aggressively to train (pilot count and sparsity) while allocating transmit power, explicitly balancing pilot overhead, data-phase rate, and energy to reduce the queueing delay of MAC-layer frames/packets awaiting transmission. We model the problem as a partially observable Markov decision process and solve it with deep reinforcement learning. In simulations with a realistic near-field channel and varying mobility and traffic load, the learned policy outperforms strong 5G NR-style baselines at comparable energy consumption: it achieves 85.5% higher throughput than DFT sweeping and reduces the overflow rate by 78%. These results indicate a practical path to overhead-aware, traffic-adaptive near-field beam management, with implications for emerging low-latency, high-rate next-generation applications such as digital twins, spatial computing, and immersive communication.

On the Effects of Modeling on the Sim-to-Real Transfer Gap in Twinning the POWDER Platform

Aug 26, 2024

Abstract: Digital Twin (DT) technology is expected to play a pivotal role in NextG wireless systems. However, a key challenge remains in evaluating data-driven algorithms within DTs, particularly in transferring what is learned in simulation to real-world environments. In this work, we investigate the sim-to-real gap in developing a digital twin for the NSF PAWR Platform, POWDER. We first develop a 3D model of the University of Utah campus, incorporating geographical measurements and all rooftop POWDER nodes. We then assess the accuracy of various path loss models used to train modeling and control policies, examining the impact of each model on sim-to-real link performance predictions. Finally, we discuss the lessons learned from model selection and simulation design, offering guidance for the implementation of DT-enabled wireless networks.
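
The abstract does not list which path loss models were compared. As a hedged illustration of what such a comparison involves, the sketch below implements two textbook models, free-space path loss and a log-distance model anchored to free space at a reference distance, plus an RMSE score against measured losses. The model choice, the 1 m reference distance, and the function names are assumptions, not the paper's setup.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s


def fspl_db(d_m, f_hz):
    """Free-space path loss in dB: 20*log10(4*pi*d*f/c)."""
    return 20.0 * math.log10(4.0 * math.pi * d_m * f_hz / SPEED_OF_LIGHT)


def log_distance_db(d_m, f_hz, exponent, d0_m=1.0):
    """Log-distance model anchored to free-space loss at reference distance d0_m.

    With exponent = 2 this reduces exactly to free-space path loss.
    """
    return fspl_db(d0_m, f_hz) + 10.0 * exponent * math.log10(d_m / d0_m)


def rmse_db(predicted, measured):
    """Root-mean-square error (dB) between predicted and measured path loss."""
    return math.sqrt(sum((p - m) ** 2 for p, m in zip(predicted, measured)) / len(measured))
```

Calling `rmse_db([log_distance_db(d, f, n) for d in distances], measured)` for several candidate exponents `n` ranks the models against one set of measurements; the distances, frequency, and measured losses would come from the deployment being twinned.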

Cloud-Based Federation Framework and Prototype for Open, Scalable, and Shared Access to NextG and IoT Testbeds

Aug 26, 2024

Abstract: In this work, we present a new federation framework for UnionLabs, an innovative cloud-based resource-sharing infrastructure designed for next-generation (NextG) and Internet of Things (IoT) over-the-air (OTA) experiments. The framework aims to reduce federation complexity for testbed developers by automating tedious backend operations, thereby providing scalable federation and remote access to various wireless testbeds. We first describe the key components of the new federation framework, including the Systems Manager Integration Engine (SMIE), the Automated Script Generator (ASG), and the Database Context Manager (DCM). We then prototype and deploy the new Federation Plane on the Amazon Web Services (AWS) public cloud and demonstrate its effectiveness by federating two wireless testbeds: i) UB NeXT, a 5G-and-beyond (5G+) testbed at the University at Buffalo, and ii) UT IoT, an IoT testbed at the University of Utah. Through this work, we aim to initiate a grassroots campaign to democratize access to wireless research testbeds with heterogeneous hardware resources and network environments, and to accelerate the establishment of a mature, open experimental ecosystem for the wireless community. The API of the new Federation Plane will be released to the community after internal testing is completed.

Spectrum Coexistence of Satellite-borne Passive Radiometry and Terrestrial Next-G Networks

Feb 12, 2024

Abstract: Spectrum coexistence between terrestrial Next-G cellular networks and space-borne remote sensing (RS) is gaining attention. One major question is how such coexistence would affect RS equipment. In this study, we develop a framework based on stochastic geometry to evaluate the statistical characteristics of radio frequency interference (RFI) originating from a large-scale terrestrial Next-G network operating in the same frequency band as an RS satellite. For illustration, we consider a network operating in the restricted L-band (1400-1427 MHz) alongside NASA's Soil Moisture Active Passive (SMAP) satellite, one of the latest RS satellites active in this band. We use the Thomas Cluster Process (TCP) to model RFI from clusters of cellular base stations on the main and side lobes of SMAP's antenna. We show that a large number of active clusters can operate in the restricted L-band without compromising SMAP's mission if they avoid interfering with the main lobe of its antenna. This is possible thanks to the extremely low side-lobe gains of SMAP's antenna.
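
A Thomas cluster process places cluster centres as a homogeneous Poisson point process and scatters a Poisson number of points around each centre with an isotropic Gaussian offset. The sketch below samples base-station locations this way using only the Python standard library; the densities, cluster spread, and window size in the example are hypothetical, the function names are illustrative, and the step of aggregating RFI through SMAP's antenna pattern is not shown.

```python
import math
import random


def poisson(rng, mean):
    """Draw a Poisson(mean) variate with Knuth's multiplication method.

    Adequate for the small means used here; exp(-mean) underflows once the
    mean approaches about 745, so use a library sampler for large means.
    """
    limit = math.exp(-mean)
    k, p = 0, rng.random()
    while p > limit:
        k += 1
        p *= rng.random()
    return k


def thomas_cluster_process(rng, parent_density, mean_daughters, sigma, side):
    """Sample a Thomas cluster process on the square window [0, side]^2.

    parent_density: cluster centres per unit area (homogeneous Poisson point process).
    mean_daughters: mean number of points (e.g., base stations) per cluster.
    sigma: standard deviation of the isotropic Gaussian scatter around a centre.
    Daughters may fall outside the window; no edge correction is applied.
    """
    n_parents = poisson(rng, parent_density * side * side)
    parents = [(rng.uniform(0.0, side), rng.uniform(0.0, side)) for _ in range(n_parents)]
    daughters = []
    for cx, cy in parents:
        for _ in range(poisson(rng, mean_daughters)):
            daughters.append((rng.gauss(cx, sigma), rng.gauss(cy, sigma)))
    return parents, daughters


if __name__ == "__main__":
    rng = random.Random(42)
    # Hypothetical numbers (metres): 1 cluster per km^2 over a 5 km x 5 km window,
    # 5 base stations per cluster on average, 100 m cluster spread.
    parents, bss = thomas_cluster_process(rng, 1e-6, 5.0, 100.0, 5000.0)
    print(len(parents), "clusters,", len(bss), "base stations")
```

With these example numbers the expected number of base stations is 1e-6 * 5000^2 * 5 = 125, which is a quick sanity check on any sampled realization averaged over many runs.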

Coexistence of Satellite-borne Passive Radiometry and Terrestrial NextG Wireless Networks in the 1400-1427 MHz Restricted L-Band

Dec 13, 2023

Abstract: The rapid growth of wireless technologies has fostered research on spectrum coexistence worldwide. One idea that is gaining attention is using frequency bands solely devoted to passive applications, such as passive remote sensing. One such option is the 27 MHz of L-band spectrum from 1.400 GHz to 1.427 GHz. Active wireless transmissions are prohibited in this passive band by radio regulations aimed at preventing Radio Frequency Interference (RFI) on highly sensitive passive radiometry instruments. The Soil Moisture Active Passive (SMAP) satellite, launched by the National Aeronautics and Space Administration (NASA), is a recent space-based remote sensing mission that passively scans the Earth's electromagnetic emissions in this 27 MHz band to periodically assess soil moisture on a global scale. This paper evaluates the use of the restricted L-band for active terrestrial wireless communications in two ways. First, we investigate opportunistic temporal use of this passive band within a terrestrial wireless network, such as a cluster of cells, during periods when there is no Line of Sight (LoS) between SMAP and the terrestrial network. Second, leveraging stochastic geometry, we assess the feasibility of using this passive band within a large-scale network in LoS of SMAP while ensuring that the error induced in SMAP's measurements by RFI remains below a given threshold. The methodology established here, although based on SMAP's specifications, can be adapted to other passive sensing satellites regardless of their orbits or operating frequencies.

Minimizing Estimation Error Variance Using a Weighted Sum of Samples from the Soil Moisture Active Passive (SMAP) Satellite

Jun 18, 2023

Abstract: The National Aeronautics and Space Administration's (NASA) Soil Moisture Active Passive (SMAP) is the latest passive remote sensing satellite operating in the protected L-band spectrum from 1.400 to 1.427 GHz. SMAP provides global-scale soil moisture images through point-wise passive scanning of the Earth's thermal radiation. SMAP takes multiple samples in frequency and time from each antenna footprint to increase the likelihood of capturing samples free of radio frequency interference (RFI). SMAP's current RFI detection and mitigation algorithm excludes samples detected as RFI-contaminated and averages the remaining samples. However, this approach can be less effective in harsh RFI environments, where all or most samples are RFI-contaminated. In this paper, we investigate a bias-free weighted-sum-of-samples estimator, where the weights can be computed based on the RFI's statistical properties.
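
For independent, unbiased samples x_i with variances σ_i², minimizing the variance Σ w_i² σ_i² of the weighted sum subject to Σ w_i = 1 (which keeps the sum unbiased) gives, via a Lagrange multiplier, w_i = (1/σ_i²) / Σ_j (1/σ_j²), with resulting variance 1 / Σ_j (1/σ_j²). The sketch below is this textbook inverse-variance weighting under the simplifying assumption that RFI only inflates a sample's variance. Real RFI adds power and hence bias, and the paper derives its weights from the RFI's statistical properties, so this is not the paper's estimator; the function names and numbers are illustrative.

```python
def min_variance_weights(variances):
    """Weights minimizing Var(sum_i w_i x_i) subject to sum_i w_i = 1.

    Assumes independent, unbiased samples x_i with known variances; the
    sum-to-one constraint keeps the weighted sum unbiased.
    """
    inverse = [1.0 / v for v in variances]
    total = sum(inverse)
    return [x / total for x in inverse]


def weighted_estimate(samples, weights):
    """Weighted sum of samples."""
    return sum(w * x for w, x in zip(weights, samples))


def estimator_variance(weights, variances):
    """Variance of the weighted sum, valid for independent samples."""
    return sum(w * w * v for w, v in zip(weights, variances))


if __name__ == "__main__":
    # Hypothetical variances: three clean samples and one with inflated variance.
    variances = [1.0, 1.0, 1.0, 10.0]
    samples = [0.30, 0.31, 0.29, 0.45]  # hypothetical sample values
    w = min_variance_weights(variances)
    equal = [0.25] * 4
    print("optimal weights:", w)
    print("estimate:", weighted_estimate(samples, w))
    print("variance, optimal:", estimator_variance(w, variances))  # 1/3.1 up to rounding
    print("variance, plain average:", estimator_variance(equal, variances))  # 13/16
```

In this example the optimal weights cut the estimator variance from 0.8125 for a plain average to about 0.323, which illustrates why weighting, rather than discarding or equally averaging samples, can help when contamination is widespread.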

What is Interpretable? Using Machine Learning to Design Interpretable Decision-Support Systems

Nov 27, 2018

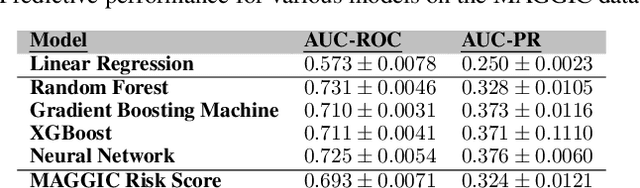

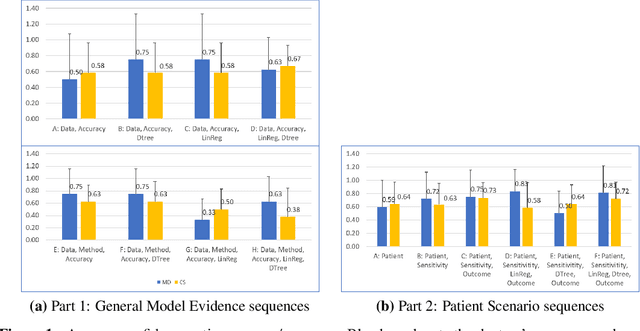

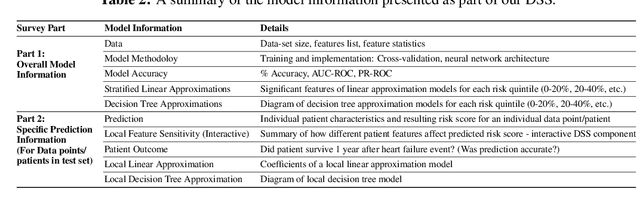

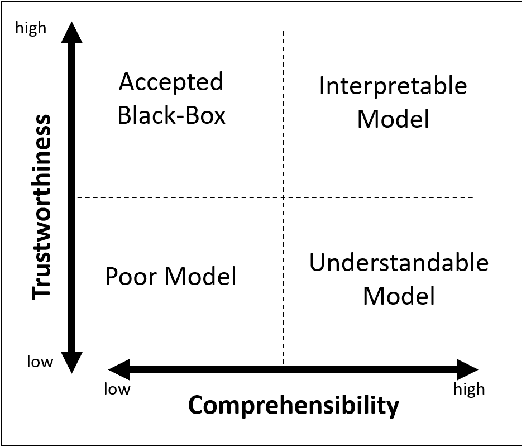

Abstract: Recent efforts in Machine Learning (ML) interpretability have focused on creating methods for explaining black-box ML models. However, these methods rely on the assumption that simple approximations, such as linear models or decision trees, are inherently human-interpretable, an assumption that has not been empirically tested. Additionally, past efforts have focused exclusively on comprehension, neglecting the trust component needed to convince non-technical experts, such as clinicians, to use ML models in practice. In this paper, we posit that reinforcement learning (RL) can be used to learn what is interpretable to different users and, consequently, to build their trust in ML models. To validate this idea, we first train a neural network to provide risk assessments for heart failure patients. We then design an RL-based clinical decision-support system (DSS) around the neural network model, which can learn from its interactions with users. We conduct an experiment involving a diverse set of clinicians from multiple institutions in three different countries. Our results demonstrate that ML experts cannot accurately predict which system outputs will maximize clinicians' confidence in the underlying neural network model, and suggest additional findings with broad implications for future research into ML interpretability and the use of ML in medicine.

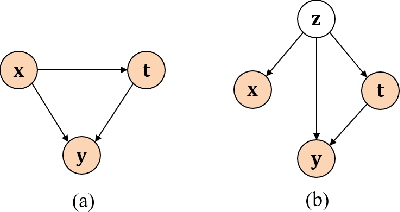

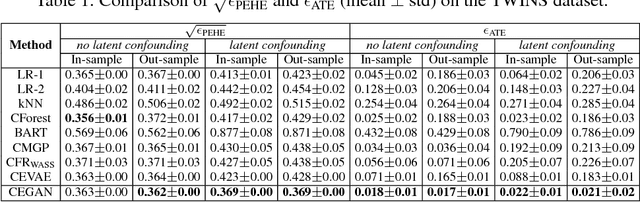

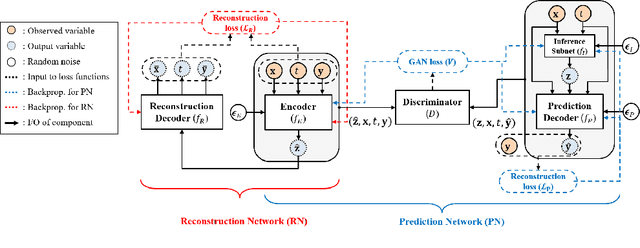

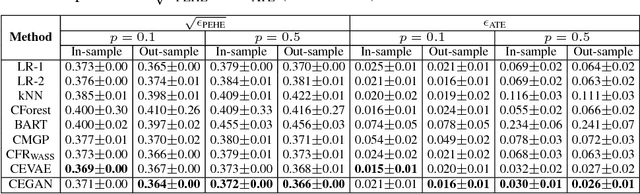

Estimation of Individual Treatment Effect in Latent Confounder Models via Adversarial Learning

Nov 21, 2018

Abstract: Estimating the individual treatment effect (ITE) from observational data is essential in medicine. A central challenge in estimating the ITE is handling confounders, which are factors that affect both an intervention and its outcome. Most previous work relies on the unconfoundedness assumption, which posits that all the confounders are measured in the observational data. However, if there are unmeasurable (latent) confounders, then confounding bias is introduced. Fortunately, noisy proxies for the latent confounders are often available and can be used to make an unbiased estimate of the ITE. In this paper, we develop a novel adversarial learning framework to make unbiased estimates of the ITE using noisy proxies.

Accelerated Structure-Aware Reinforcement Learning for Delay-Sensitive Energy Harvesting Wireless Sensors

Jul 22, 2018

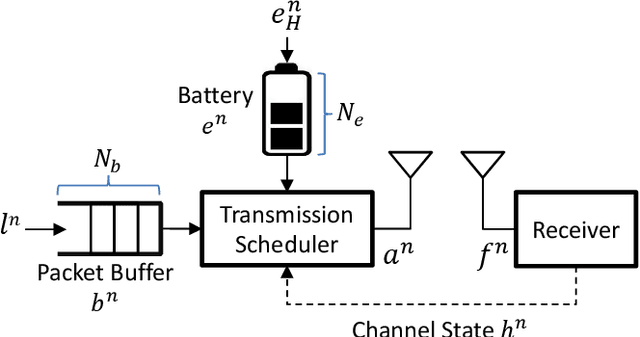

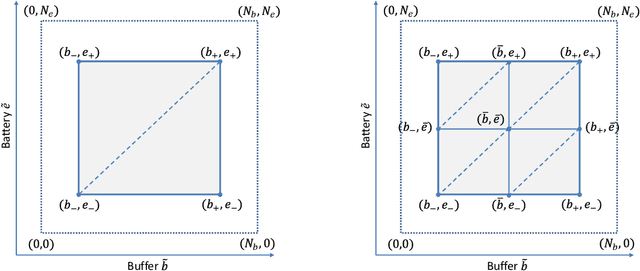

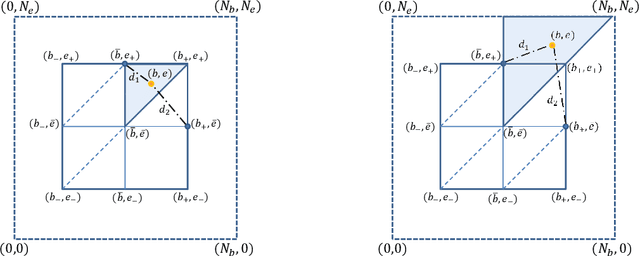

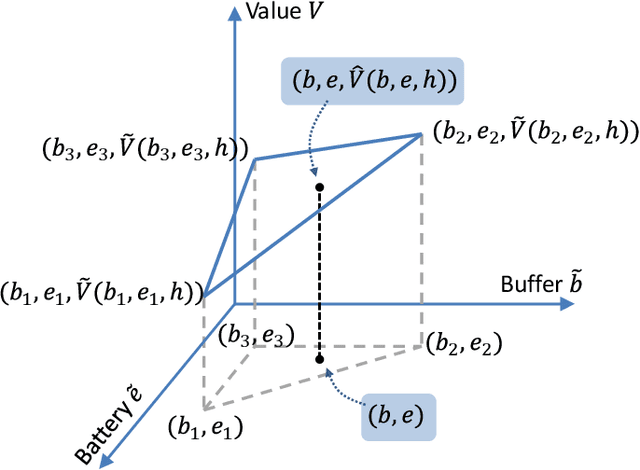

Abstract: We investigate an energy-harvesting wireless sensor transmitting latency-sensitive data over a fading channel. The sensor injects captured data packets into its transmission queue and relies on ambient energy harvested from the environment to transmit them. We aim to find the optimal scheduling policy that decides whether or not to transmit the queue's head-of-line packet at each transmission opportunity such that the expected packet queuing delay is minimized given the available harvested energy. No prior knowledge of the stochastic processes that govern the channel, captured data, or harvested energy dynamics is assumed, thereby necessitating online learning to optimize the scheduling policy. We formulate this scheduling problem as a Markov decision process (MDP) and analyze the structural properties of its optimal value function. In particular, we show that it is non-decreasing and has increasing differences in the queue backlog, and that it is non-increasing and has increasing differences in the battery state. We exploit this structure to formulate a novel accelerated reinforcement learning (RL) algorithm that solves the scheduling problem online at a much faster learning rate while limiting the induced computational complexity. Our experiments demonstrate that the proposed algorithm closely approximates the performance of an optimal offline solution that requires a priori knowledge of the channel, captured data, and harvested energy dynamics. Simultaneously, by leveraging the value function's structure, our approach achieves competitive performance relative to a state-of-the-art RL algorithm at potentially orders of magnitude lower complexity. Finally, we demonstrate considerable performance gains over the well-known Q-learning algorithm.
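
The monotonicity properties named in the abstract can be checked numerically on a small model. The sketch below runs discounted value iteration on a hypothetical toy version of the problem (Bernoulli packet arrivals and energy harvesting, a fixed transmission success probability standing in for the fading channel, and backlog as the per-slot cost) and tests that the value function is non-decreasing in backlog and non-increasing in battery. It does not implement the paper's accelerated RL algorithm or check increasing differences, and all parameter values and function names are assumptions.

```python
def value_iteration(B=10, E=5, lam=0.4, eta=0.5, p_succ=0.8, gamma=0.95, tol=1e-9):
    """Discounted value iteration for a toy energy-harvesting sensor.

    State (b, e): queue backlog 0..B and battery level 0..E (energy units).
    Action 1 sends the head-of-line packet (costs one energy unit, succeeds
    with probability p_succ); action 0 waits. The per-slot cost is the backlog
    b, a proxy for queuing delay via Little's law. Packets arrive and energy is
    harvested as independent Bernoulli(lam) and Bernoulli(eta) events.
    """
    def q_value(V, b, e, a):
        departures = ((1, p_succ), (0, 1.0 - p_succ)) if a else ((0, 1.0),)
        total = float(b)
        for d, pd in departures:
            for arr, pa in ((1, lam), (0, 1.0 - lam)):
                for h, ph in ((1, eta), (0, 1.0 - eta)):
                    nb = min(b - d + arr, B)  # arrivals beyond B are dropped
                    ne = min(e - a + h, E)    # harvested energy beyond E is lost
                    total += gamma * pd * pa * ph * V[nb][ne]
        return total

    V = [[0.0] * (E + 1) for _ in range(B + 1)]
    while True:
        new_V = [[0.0] * (E + 1) for _ in range(B + 1)]
        delta = 0.0
        for b in range(B + 1):
            for e in range(E + 1):
                # Transmitting requires a queued packet and at least one energy unit.
                actions = (0, 1) if b >= 1 and e >= 1 else (0,)
                new_V[b][e] = min(q_value(V, b, e, a) for a in actions)
                delta = max(delta, abs(new_V[b][e] - V[b][e]))
        V = new_V
        if delta < tol:
            return V


def check_monotone(V, atol=1e-9):
    """True if V is non-decreasing in backlog and non-increasing in battery."""
    B, E = len(V) - 1, len(V[0]) - 1
    up_in_b = all(V[b + 1][e] >= V[b][e] - atol for b in range(B) for e in range(E + 1))
    down_in_e = all(V[b][e + 1] <= V[b][e] + atol for b in range(B + 1) for e in range(E))
    return up_in_b and down_in_e


if __name__ == "__main__":
    V = value_iteration()
    print("monotone structure holds:", check_monotone(V))
```

Because value iteration uses the transition probabilities directly, it corresponds to the kind of offline solution the abstract describes as requiring a priori knowledge of the dynamics; the online learning algorithms must instead learn without that knowledge.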