J. Diego Caporale

Effect of Gait Design on Proprioceptive Sensing of Terrain Properties in a Quadrupedal Robot

Sep 26, 2025

Abstract: In-situ robotic exploration is an important tool for advancing knowledge of the geological processes that shape the Earth and other planetary bodies. To inform and enhance operations for these roving laboratories, it is imperative to understand the terramechanical properties of their environments, especially when traversing loose, deformable substrates. Recent research suggested that legged robots with direct-drive and low-gear-ratio actuators can sensitively detect external forces, and therefore have the potential to measure terrain properties with their legs during locomotion, providing unprecedented sampling speed and density while accessing terrains previously too risky to sample. This paper explores these ideas by investigating the impact of gait on proprioceptive terrain sensing accuracy, comparing in particular a sensing-oriented gait, Crawl N' Sense, with a locomotion-oriented gait, Trot-Walk. Each gait's ability to measure the strength and texture of deformable substrate is quantified as the robot locomotes over a laboratory transect consisting of a rigid surface, loose sand, and loose sand with synthetic surface crusts. Our results suggest that with both the sensing-oriented crawling gait and the locomotion-oriented trot gait, the robot can measure a consistent difference in strength (in terms of penetration resistance) between the low- and high-resistance substrates; however, measurements from the locomotion-oriented trot gait have larger magnitude and variance. Furthermore, the slower crawl gait can detect brittle ruptures of the surface crusts with significantly higher accuracy than the faster trot gait. Our results offer new insights that inform legged robot "sensing during locomotion" gait design and planning for scouting terrain and producing scientific measurements on other worlds to advance our understanding of their geology and formation.

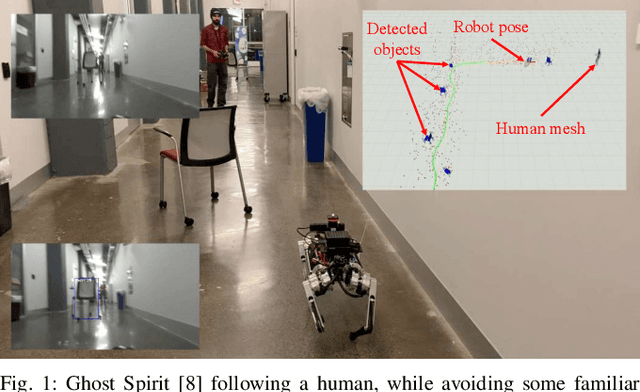

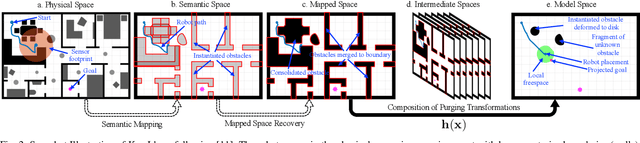

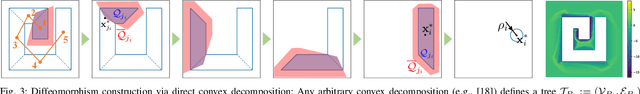

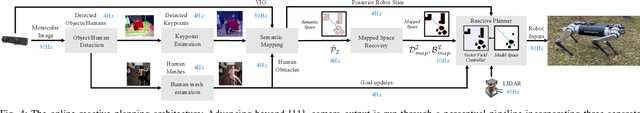

Technical Report: Reactive Semantic Planning in Unexplored Semantic Environments Using Deep Perceptual Feedback

Feb 28, 2020

Abstract: This paper presents a reactive planning system that enriches the topological representation of an environment with a tightly integrated semantic representation, achieved by incorporating and exploiting advances in deep perceptual learning and probabilistic semantic reasoning. Our architecture combines object detection with semantic SLAM, affording robust, reactive logical as well as geometric planning in unexplored environments. Moreover, by incorporating a human mesh estimation algorithm, our system is capable of reacting and responding in real time to semantically labeled human motions and gestures. New formal results allow tracking of suitably non-adversarial moving targets, while maintaining the same collision avoidance guarantees. We suggest the empirical utility of the proposed control architecture with a numerical study including comparisons with a state-of-the-art dynamic replanning algorithm, and physical implementation on both a wheeled and a legged platform in different settings with both geometric and semantic goals.
