Huiliang Zhang

STEMS: Spatial-Temporal Enhanced Safe Multi-Agent Coordination for Building Energy Management

Oct 15, 2025

Abstract: Building energy management is essential for achieving carbon reduction goals, improving occupant comfort, and reducing energy costs. Coordinated building energy management faces critical challenges in exploiting spatial-temporal dependencies while ensuring operational safety across multi-building systems. Current multi-building energy systems face three key challenges: insufficient spatial-temporal information exploitation, lack of rigorous safety guarantees, and system complexity. This paper proposes Spatial-Temporal Enhanced Safe Multi-Agent Coordination (STEMS), a novel safety-constrained multi-agent reinforcement learning framework for coordinated building energy management. STEMS integrates two core components: (1) a spatial-temporal graph representation learning framework using a GCN-Transformer fusion architecture to capture inter-building relationships and temporal patterns, and (2) a safety-constrained multi-agent RL algorithm incorporating Control Barrier Functions to provide mathematical safety guarantees. Extensive experiments on real-world building datasets demonstrate STEMS's superior performance over existing methods: STEMS achieves a 21% cost reduction and an 18% emission reduction, and reduces safety violations from 35.1% to 5.6%, while maintaining optimal comfort with a discomfort proportion of only 0.13. The framework also demonstrates strong robustness during extreme weather conditions and maintains effectiveness across different building types.
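To illustrate the general idea of a Control Barrier Function acting as a safety filter, here is a minimal sketch; it is not the STEMS algorithm, whose details the abstract does not give. It assumes a hypothetical one-dimensional state `x` (for example, a normalized storage level) with dynamics `x_next = x + u`, and enforces the discrete-time barrier condition `h(x_next) >= (1 - alpha) * h(x)` for the two barriers `h_hi(x) = x_max - x` and `h_lo(x) = x - x_min`. The function names, dynamics, and `alpha` value are all assumptions made for illustration.

```python
def cbf_safe_action(x, u_nominal, x_min, x_max, alpha=0.5):
    """Clip a nominal action so a discrete-time barrier condition holds.

    Assumed (hypothetical) dynamics: x_next = x + u.
    Barriers: h_hi(x) = x_max - x and h_lo(x) = x - x_min (>= 0 when safe).
    Enforced condition: h(x_next) >= (1 - alpha) * h(x), with 0 < alpha <= 1.
    """
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must be in (0, 1]")
    # h_hi(x + u) >= (1 - alpha) * h_hi(x)  <=>  u <= alpha * (x_max - x)
    u_upper = alpha * (x_max - x)
    # h_lo(x + u) >= (1 - alpha) * h_lo(x)  <=>  u >= -alpha * (x - x_min)
    u_lower = -alpha * (x - x_min)
    return min(max(u_nominal, u_lower), u_upper)


def rollout(x0, nominal_actions, x_min=0.0, x_max=1.0, alpha=0.5):
    """Apply the filter step by step and return the visited states."""
    x = x0
    states = [x]
    for u in nominal_actions:
        x = x + cbf_safe_action(x, u, x_min, x_max, alpha)
        states.append(x)
    return states


if __name__ == "__main__":
    # An aggressive nominal policy (e.g. from an RL agent) is kept in [0, 1].
    print(rollout(0.5, [0.4, 0.4, 0.4, -2.0, -2.0]))
```

Because the filter only clips the nominal action, actions that already satisfy the barrier condition pass through unchanged; a smaller `alpha` makes the state approach the bounds more slowly.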

Leveraging Multivariate Long-Term History Representation for Time Series Forecasting

May 20, 2025

Abstract: Multivariate Time Series (MTS) forecasting has a wide range of applications in both industry and academia. Recent advances in Spatial-Temporal Graph Neural Networks (STGNNs) have achieved great progress in modelling spatial-temporal correlations. Limited by computational complexity, most STGNNs for MTS forecasting focus primarily on short-term and local spatial-temporal dependencies. Although some recent methods attempt to incorporate univariate history into modelling, they still overlook crucial long-term spatial-temporal similarities and correlations across MTS, which are essential for accurate forecasting. To fill this gap, we propose a framework called the Long-term Multivariate History Representation (LMHR) Enhanced STGNN for MTS forecasting. Specifically, a Long-term History Encoder (LHEncoder) is adopted to effectively encode the long-term history into segment-level contextual representations and reduce point-level noise. A non-parametric Hierarchical Representation Retriever (HRetriever) is designed to include the spatial information in the long-term spatial-temporal dependency modelling and pick out the most valuable representations with no additional training. A Transformer-based Aggregator (TAggregator) efficiently and selectively fuses the sparsely retrieved contextual representations based on ranking positional embeddings. Experimental results on several real-world datasets demonstrate that LMHR outperforms typical STGNNs by 10.72% on average across prediction horizons and state-of-the-art methods by 4.12%. Additionally, it consistently improves prediction accuracy by 9.8% on the top 10% of rapidly changing patterns across the datasets.

Nearest Neighbor Multivariate Time Series Forecasting

May 16, 2025

Abstract: Multivariate time series (MTS) forecasting has a wide range of applications in both industry and academia. Recently, spatial-temporal graph neural networks (STGNNs) have gained popularity as MTS forecasting methods. However, current STGNNs can only use a finite length of MTS input data due to computational complexity. Moreover, they lack the ability to identify similar patterns throughout the entire dataset and struggle with data that exhibit sparsely and discontinuously distributed correlations among variables over an extensive historical period, resulting in only marginal improvements. In this article, we introduce a simple yet effective k-nearest neighbor MTS forecasting (kNN-MTS) framework, which forecasts with a nearest neighbor retrieval mechanism over a large datastore of cached series, using representations from the MTS model for similarity search. This approach requires no additional training and scales to give the MTS model direct access to the whole dataset at test time, resulting in a highly expressive model that consistently improves performance and can extract sparsely distributed but similar patterns spanning multiple variables from the entire dataset. Furthermore, a hybrid spatial-temporal encoder (HSTEncoder) is designed for kNN-MTS; it captures both long-term temporal and short-term spatial-temporal dependencies and is shown to provide accurate representations for kNN-MTS, leading to better forecasting. Experimental results on several real-world datasets show a significant improvement in the forecasting performance of kNN-MTS. The quantitative analysis also illustrates the interpretability and efficiency of kNN-MTS, showing better application prospects and opening up a new path for efficiently using large datasets in MTS models.
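The retrieval mechanism described in this abstract can be sketched roughly as follows. This is a simplified illustration under stated assumptions, not the authors' implementation: the abstract does not say how retrieved neighbors are combined with the model's own output, so the distance-weighted average, the mixing weight `lam`, the `temperature`, and all function and variable names here are hypothetical. The datastore is assumed to hold pairs of (representation vector, future values) cached from the training data.

```python
import math


def knn_forecast(query, datastore, model_forecast, k=2, lam=0.5, temperature=1.0):
    """Blend a model forecast with a forecast retrieved from cached series.

    query:          representation of the current input window (list of floats)
    datastore:      list of (key_vector, future_values) pairs (assumed layout)
    model_forecast: the base model's forecast (list of floats)
    lam:            hypothetical mixing weight given to the retrieved forecast
    """
    if not datastore:
        raise ValueError("datastore must not be empty")
    # Rank cached entries by Euclidean distance to the query representation.
    scored = sorted(
        ((math.dist(query, key), value) for key, value in datastore),
        key=lambda pair: pair[0],
    )
    neighbors = scored[:k]
    # Softmax over negative distances; subtract the minimum for stability.
    d_min = neighbors[0][0]
    raw = [math.exp(-(d - d_min) / temperature) for d, _ in neighbors]
    total = sum(raw)
    weights = [r / total for r in raw]
    # Weighted average of the neighbors' future values, step by step.
    horizon = len(model_forecast)
    retrieved = [
        sum(w * value[i] for w, (_, value) in zip(weights, neighbors))
        for i in range(horizon)
    ]
    # Interpolate between the retrieved and the model forecasts.
    return [lam * r + (1.0 - lam) * m for r, m in zip(retrieved, model_forecast)]


if __name__ == "__main__":
    store = [([0.0, 0.0], [1.0, 1.0]), ([10.0, 10.0], [5.0, 5.0])]
    print(knn_forecast([0.1, 0.0], store, model_forecast=[2.0, 2.0], k=1))
```

No parameters are trained here: the datastore is built once from cached representations, which is consistent with the abstract's statement that the approach requires no additional training. A real system would likely replace the linear scan with an approximate nearest neighbor index.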

Adaptive Aggregation for Safety-Critical Control

Feb 07, 2023

Abstract: Safety has been recognized as the central obstacle preventing the use of reinforcement learning (RL) in real-world applications. Different methods have been developed to deal with safety concerns in RL. However, learning reliable RL-based solutions usually requires a large number of interactions with the environment. Likewise, how to improve learning efficiency, and specifically how to utilize transfer learning for safe reinforcement learning, has not been well studied. In this work, we propose an adaptive aggregation framework for safety-critical control. Our method comprises two key techniques: 1) we learn to transfer safety knowledge by aggregating multiple source tasks and a target task through an attention network; 2) we separate the goal of improving task performance from that of reducing constraint violations by utilizing a safeguard. Experimental results demonstrate that our algorithm achieves fewer safety violations while showing better data efficiency compared with several baselines.

MetaEMS: A Meta Reinforcement Learning-based Control Framework for Building Energy Management System

Oct 23, 2022

Abstract: The building sector has been recognized as one of the primary sectors for worldwide energy consumption. Improving the energy efficiency of the building sector can help reduce operating costs and greenhouse gas emissions. The energy management system (EMS) can monitor and control the operations of built-in appliances in buildings, so an efficient EMS is of crucial importance for improving building operation efficiency and maintaining safe operations. With the growing penetration of renewable energy and electrical appliances, increasing attention has been paid to the development of intelligent building EMS. Recently, reinforcement learning (RL) has been applied to building EMS and has shown promising potential. However, most current RL-based EMS solutions need a large amount of data to learn a reliable control policy, which limits their applicability in the real world. In this work, we propose MetaEMS, which can help achieve better energy management performance with the benefits of RL and meta-learning. Experimental results show that MetaEMS adapts faster to environment changes and performs better in most situations compared with other baselines.

Anticipating the Unseen Discrepancy for Vision and Language Navigation

Sep 10, 2022

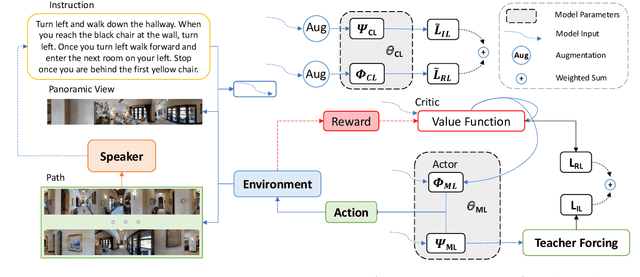

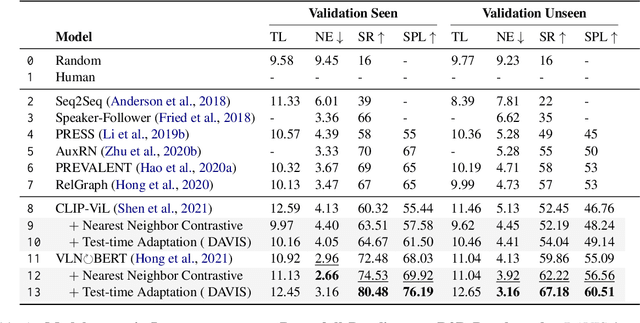

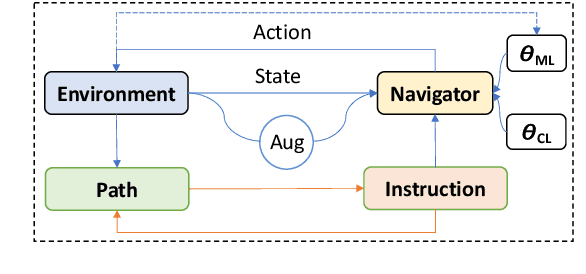

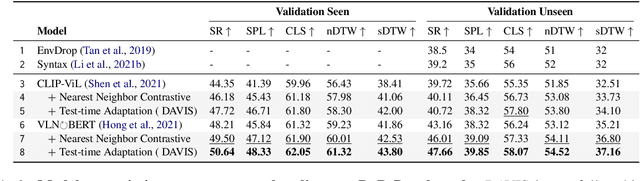

Abstract: Vision-Language Navigation requires the agent to follow natural language instructions to reach a specific target. The large discrepancy between seen and unseen environments makes it challenging for the agent to generalize well. Previous studies propose data augmentation methods to mitigate the data bias explicitly or implicitly and provide improvements in generalization. However, they try to memorize augmented trajectories and ignore the distribution shifts under unseen environments at test time. In this paper, we propose an Unseen Discrepancy Anticipating Vision and Language Navigation (DAVIS) that learns to generalize to unseen environments by encouraging test-time visual consistency. Specifically, we devise: 1) a semi-supervised framework, DAVIS, that leverages visual consistency signals across similar semantic observations; 2) a two-stage learning procedure that encourages adaptation to the test-time distribution. The framework enhances the basic mixture of imitation and reinforcement learning with Momentum Contrast to encourage stable decision-making on similar observations under a joint training stage and a test-time adaptation stage. Extensive experiments show that DAVIS achieves model-agnostic improvement over previous state-of-the-art VLN baselines on the R2R and RxR benchmarks. Our source code and data are in supplemental materials.
