Hiroshi Saruwatari

UTMOS: UTokyo-SaruLab System for VoiceMOS Challenge 2022

Apr 05, 2022

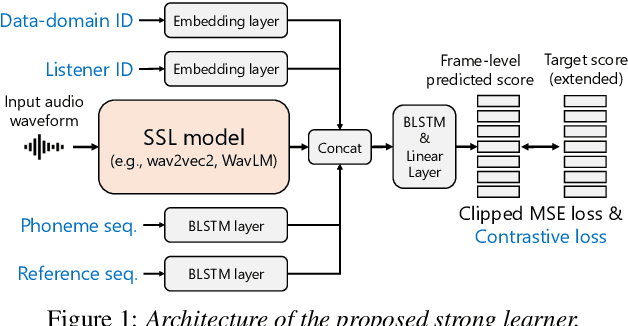

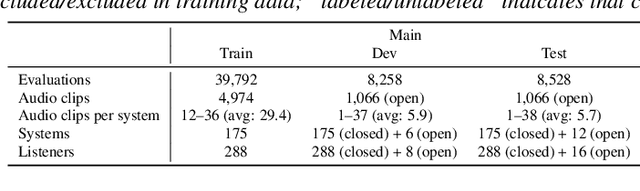

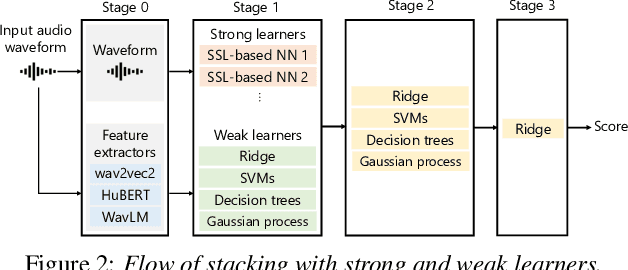

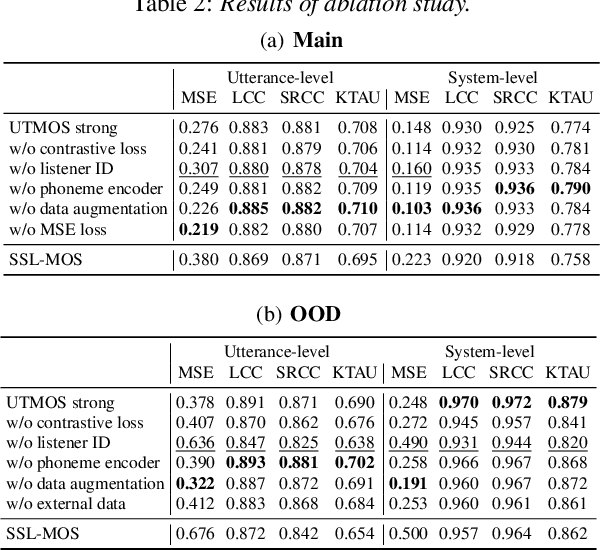

Abstract: We present the UTokyo-SaruLab mean opinion score (MOS) prediction system submitted to VoiceMOS Challenge 2022. The challenge is to predict the MOS values of speech samples collected from previous Blizzard Challenges and Voice Conversion Challenges for two tracks: a main track for in-domain prediction and an out-of-domain (OOD) track for which there is less labeled data from different listening tests. Our system is based on ensemble learning of strong and weak learners. Strong learners incorporate several improvements to previous fine-tuned self-supervised learning (SSL) models, while weak learners use basic machine-learning methods to predict scores from SSL features. In the Challenge, our system had the highest score on several metrics for both the main and OOD tracks. In addition, we conducted ablation studies to investigate the effectiveness of our proposed methods.
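The idea of combining a strong and a weak learner can be illustrated with a toy blend. This is only a minimal sketch, not the submitted system: the two prediction lists are synthetic stand-ins for learner outputs, and the names `blend` and `fit_blend_weight` as well as the grid search over a single convex weight are assumptions made for illustration.

```python
import random


def mse(pred, target):
    """Mean squared error between two equal-length lists."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(target)


def blend(strong, weak, w):
    """Convex combination of two predictors: w * strong + (1 - w) * weak."""
    return [w * s + (1.0 - w) * k for s, k in zip(strong, weak)]


def fit_blend_weight(strong, weak, target, steps=100):
    """Grid-search the blend weight in [0, 1] that minimizes MSE (hypothetical helper)."""
    candidates = [i / steps for i in range(steps + 1)]
    return min(candidates, key=lambda w: mse(blend(strong, weak, w), target))


random.seed(0)
# Synthetic MOS labels and two noisy predictors (assumed data, not challenge data).
true_mos = [random.uniform(1.0, 5.0) for _ in range(200)]
strong_pred = [t + random.gauss(0.0, 0.3) for t in true_mos]
weak_pred = [t + random.gauss(0.0, 0.6) for t in true_mos]

# The weight is fit and evaluated on the same data here for brevity;
# a real setup would fit it on held-out predictions.
w = fit_blend_weight(strong_pred, weak_pred, true_mos)
ensemble_pred = blend(strong_pred, weak_pred, w)
print("blend weight:", w)
print("ensemble MSE:", mse(ensemble_pred, true_mos))
```

Because the candidate weights include 0 and 1, the blended prediction can never score worse on the fitting data than either learner alone.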

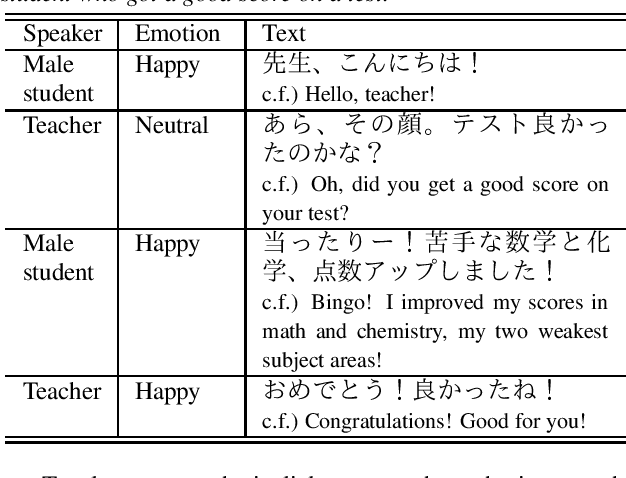

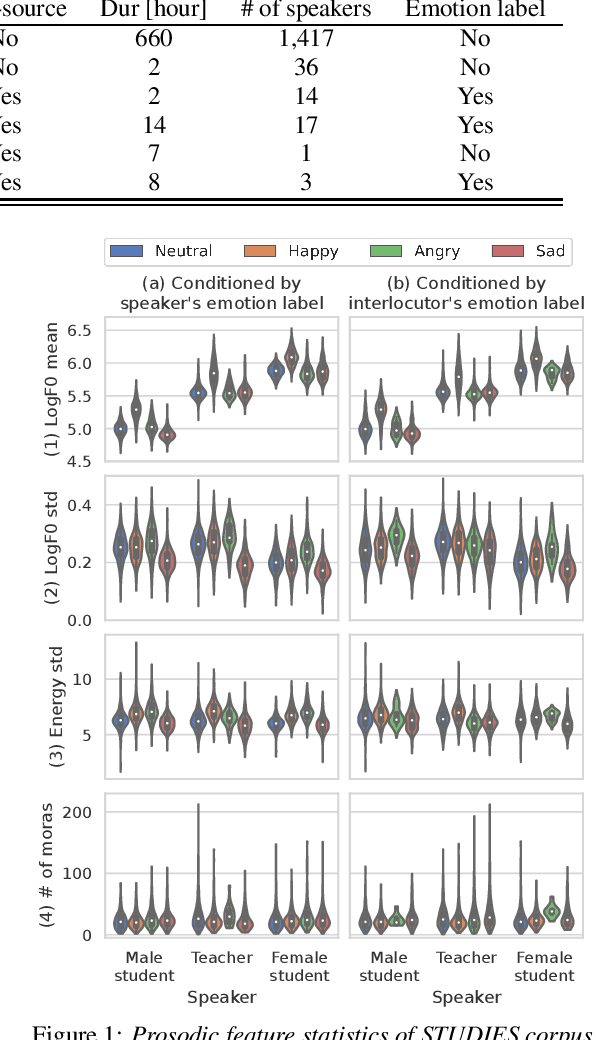

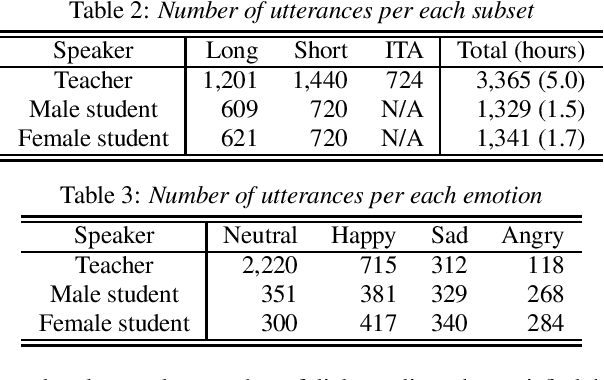

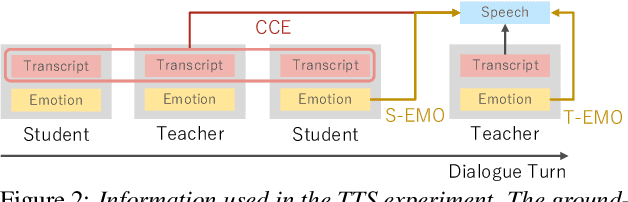

STUDIES: Corpus of Japanese Empathetic Dialogue Speech Towards Friendly Voice Agent

Mar 28, 2022

Abstract: We present STUDIES, a new speech corpus for developing a voice agent that can speak in a friendly manner. Humans naturally control their speech prosody to empathize with each other. By incorporating this "empathetic dialogue" behavior into a spoken dialogue system, we can develop a voice agent that can respond to a user more naturally. We designed the STUDIES corpus to include a speaker who explicitly speaks with empathy for the interlocutor's emotion. We describe our methodology for constructing an empathetic dialogue speech corpus and report the analysis results of the STUDIES corpus. We conducted a text-to-speech experiment as an initial investigation of how to develop a more natural voice agent that can tune its speaking style to the interlocutor's emotion. The results show that using the interlocutor's emotion label and conversational context embedding can produce speech with the same degree of naturalness as that synthesized by using the agent's emotion label. The project page of the STUDIES corpus is http://sython.org/Corpus/STUDIES.

SelfRemaster: Self-Supervised Speech Restoration with Analysis-by-Synthesis Approach Using Channel Modeling

Mar 24, 2022

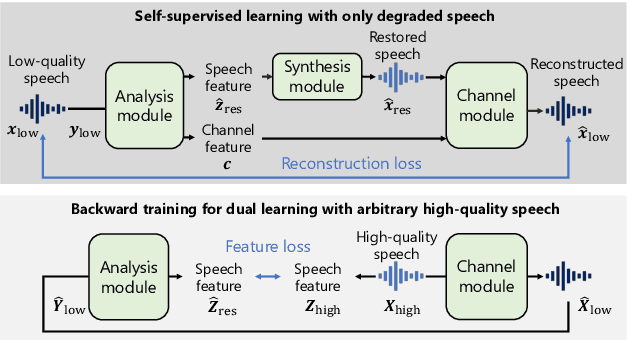

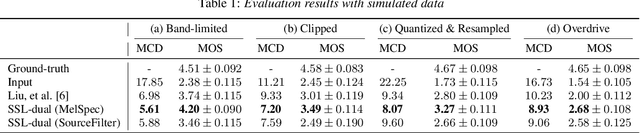

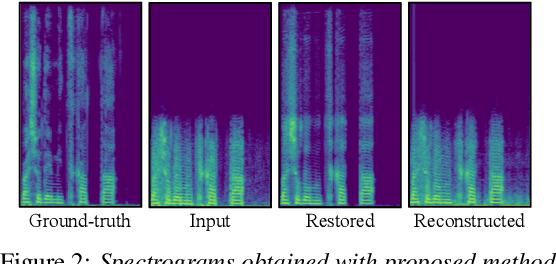

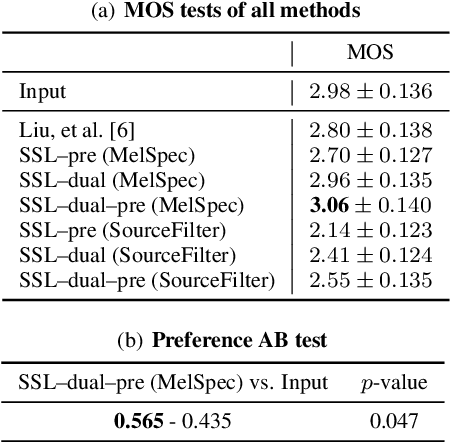

Abstract: We present a self-supervised speech restoration method that does not require paired speech corpora. Because the previous general speech restoration method uses artificial paired data created by applying various distortions to high-quality speech corpora, it cannot sufficiently represent the acoustic distortions of real data, which limits its applicability. Our model consists of analysis, synthesis, and channel modules that simulate the recording process of degraded speech and is trained with real degraded speech data in a self-supervised manner. The analysis module extracts distortionless speech features and distortion features from degraded speech, the synthesis module synthesizes the restored speech waveform, and the channel module adds distortions to the speech waveform. Our model also enables audio effect transfer, in which only acoustic distortions are extracted from degraded speech and added to arbitrary high-quality audio. Experimental evaluations with both simulated and real data show that our method achieves significantly higher-quality speech restoration than the previous supervised method, suggesting its applicability to real degraded speech materials.

Personalized filled-pause generation with group-wise prediction models

Mar 18, 2022

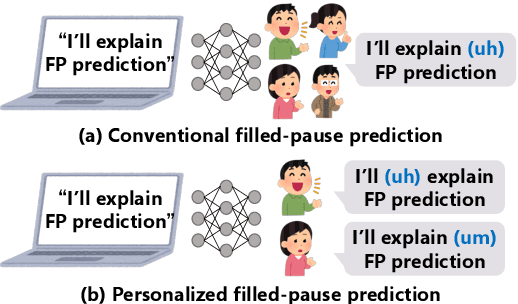

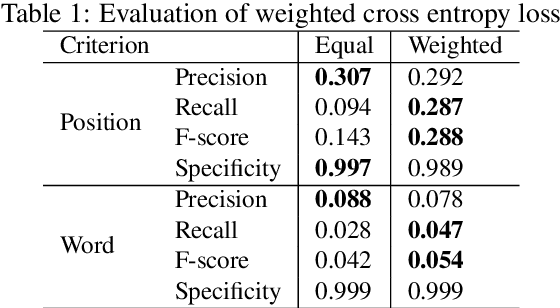

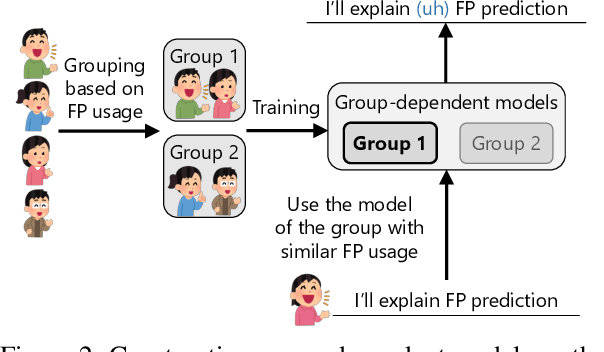

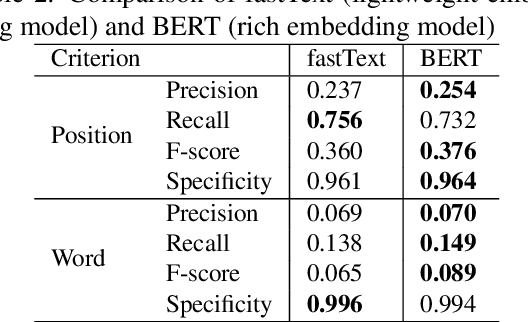

Abstract: In this paper, we propose a method to generate personalized filled pauses (FPs) with group-wise prediction models. Compared with fluent text generation, disfluent text generation has not been widely explored. To generate more human-like texts, we addressed disfluent text generation. The usage of disfluency, such as FPs, rephrases, and word fragments, differs from speaker to speaker, and thus the generation of personalized FPs is required. However, it is difficult to predict them because of the sparsity of their positions and the frequency difference between more and less frequently used FPs. Moreover, it is sometimes difficult to adapt FP prediction models to each speaker because of the large variation of the tendency within each speaker. To address these issues, we propose a method to build group-dependent prediction models by grouping speakers on the basis of their tendency to use FPs. This method does not require a large amount of data and time to train each speaker model. We further introduce a loss function and a word embedding model suitable for FP prediction. Our experimental results demonstrate that group-dependent models can predict FPs with higher scores than a non-personalized one, and that the introduced loss function and word embedding model improve the prediction performance.

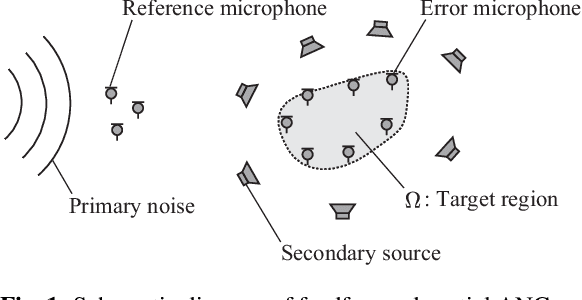

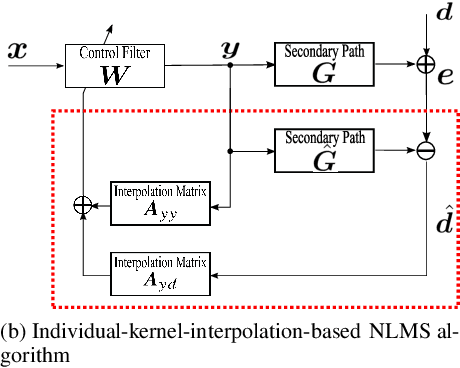

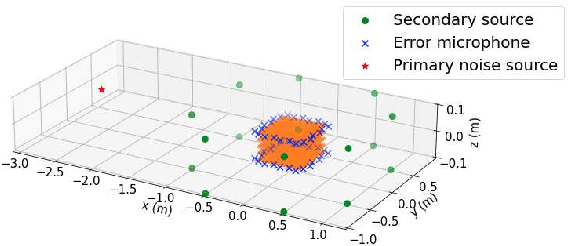

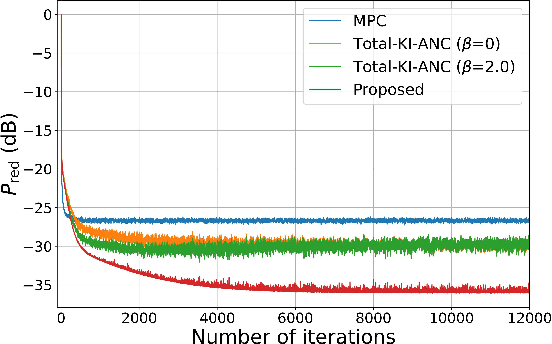

Spatial active noise control based on individual kernel interpolation of primary and secondary sound fields

Feb 10, 2022

Abstract: A spatial active noise control (ANC) method based on the individual kernel interpolation of primary and secondary sound fields is proposed. Spatial ANC is aimed at cancelling unwanted primary noise within a continuous region by using multiple secondary sources and microphones. A method based on the kernel interpolation of a sound field makes it possible to attenuate noise over the target region with flexible array geometry. Furthermore, by using the kernel function with directional weighting, prior information on primary noise source directions can be taken into consideration. However, whereas the sound field to be interpolated is a superposition of primary and secondary sound fields, the directional weight for the primary noise source was applied to the total sound field in previous work; therefore, the performance improvement was limited. We propose a method of individually interpolating the primary and secondary sound fields and formulate a normalized least-mean-square algorithm based on this interpolation method. Experimental results indicate that the proposed method outperforms the method based on total kernel interpolation.
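For readers unfamiliar with the normalized least-mean-square (NLMS) update mentioned above, the following is a minimal sketch of the textbook single-channel, time-domain version applied to a toy system-identification problem. It is not the kernel-interpolation-based spatial formulation of the paper; the function name `nlms_identify`, the step size, and the three-tap `true_path` are all assumptions chosen for illustration.

```python
import random


def nlms_identify(x, d, num_taps, mu=0.5, eps=1e-8):
    """Textbook NLMS: adapt FIR weights w so that w * x tracks the desired signal d."""
    w = [0.0] * num_taps
    buf = [0.0] * num_taps  # most recent input sample first
    errors = []
    for x_n, d_n in zip(x, d):
        buf = [x_n] + buf[:-1]
        y_n = sum(wi * xi for wi, xi in zip(w, buf))   # filter output
        e_n = d_n - y_n                                # instantaneous error
        norm = sum(xi * xi for xi in buf) + eps        # input power normalization
        w = [wi + mu * e_n * xi / norm for wi, xi in zip(w, buf)]
        errors.append(e_n)
    return w, errors


random.seed(0)
true_path = [0.6, -0.3, 0.1]  # hypothetical unknown impulse response
x = [random.gauss(0.0, 1.0) for _ in range(2000)]
# Desired signal: the input filtered by the unknown path (noise-free toy case).
d = [
    sum(h * x[n - k] for k, h in enumerate(true_path) if n - k >= 0)
    for n in range(len(x))
]

w, errors = nlms_identify(x, d, num_taps=len(true_path))
print("estimated weights:", [round(wi, 4) for wi in w])
```

Dividing the update by the input power is what distinguishes NLMS from plain LMS: it makes the effective step size insensitive to the input signal level.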

Differentiable Digital Signal Processing Mixture Model for Synthesis Parameter Extraction from Mixture of Harmonic Sounds

Feb 01, 2022

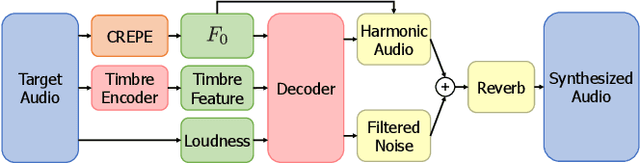

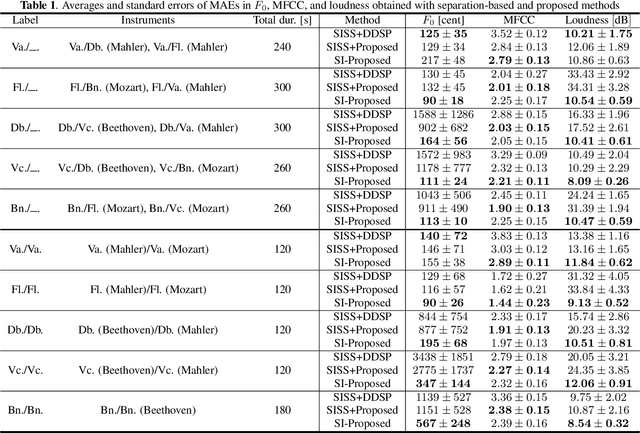

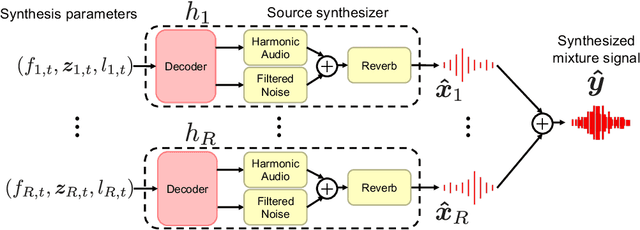

Abstract: A differentiable digital signal processing (DDSP) autoencoder is a musical sound synthesizer that combines a deep neural network (DNN) and spectral modeling synthesis. It allows us to flexibly edit sounds by changing the fundamental frequency, timbre feature, and loudness (synthesis parameters) extracted from an input sound. However, it is designed for a monophonic harmonic sound and cannot handle mixtures of harmonic sounds. In this paper, we propose a model (DDSP mixture model) that represents a mixture as the sum of the outputs of multiple pretrained DDSP autoencoders. By fitting the output of the proposed model to the observed mixture, we can directly estimate the synthesis parameters of each source. Through synthesis parameter extraction experiments, we show that the proposed method has high and stable performance compared with a straightforward method that applies the DDSP autoencoder to the signals separated by an audio source separation method.
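The core idea of modeling a mixture as a sum of per-source synthesizer outputs and fitting the parameters to the observed mixture can be sketched with a toy example. This sketch makes strong simplifying assumptions that are not from the paper: a fixed additive harmonic synthesizer (`harmonic_basis`) stands in for each pretrained DDSP autoencoder, the fundamental frequencies are taken as known, and only one loudness-like amplitude per source is estimated by gradient descent.

```python
import math

SR = 8000           # sample rate in Hz (assumed)
N = 400             # number of samples (50 ms)
NUM_HARMONICS = 4   # harmonics per source, with 1/h amplitude decay (assumed)


def harmonic_basis(f0):
    """Unit-amplitude harmonic tone at fundamental f0."""
    return [
        sum(math.sin(2 * math.pi * h * f0 * n / SR) / h
            for h in range(1, NUM_HARMONICS + 1))
        for n in range(N)
    ]


def fit_amplitudes(observed, f0s, lr=0.5, steps=100):
    """Gradient descent on the MSE between the summed model output and the mixture."""
    bases = [harmonic_basis(f0) for f0 in f0s]
    amps = [0.0] * len(f0s)
    for _ in range(steps):
        pred = [sum(a * b[n] for a, b in zip(amps, bases)) for n in range(N)]
        resid = [p - o for p, o in zip(pred, observed)]
        grads = [2.0 / N * sum(r * bn for r, bn in zip(resid, b)) for b in bases]
        amps = [a - lr * g for a, g in zip(amps, grads)]
    return amps


# Build a synthetic two-source mixture with hypothetical parameters.
f0s = [220.0, 280.0]
true_amps = [0.8, 0.3]
sources = [harmonic_basis(f0) for f0 in f0s]
observed = [sum(a * s[n] for a, s in zip(true_amps, sources)) for n in range(N)]

est_amps = fit_amplitudes(observed, f0s)
print("estimated amplitudes:", [round(a, 4) for a in est_amps])
```

Note that the parameters of both sources are estimated jointly from the mixture itself, without first separating the signals.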

J-MAC: Japanese multi-speaker audiobook corpus for speech synthesis

Jan 26, 2022

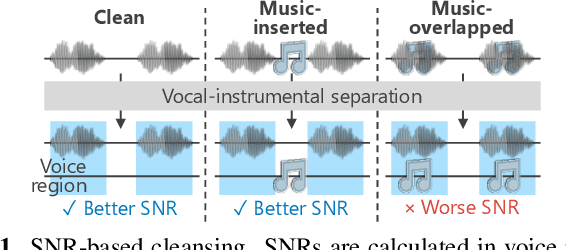

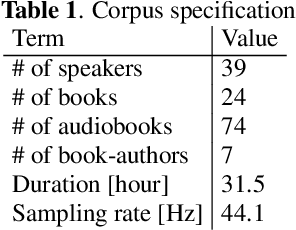

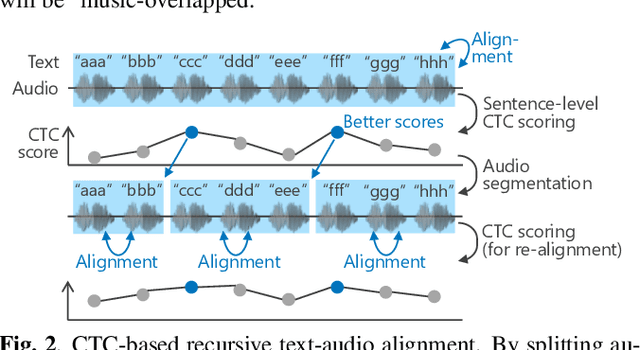

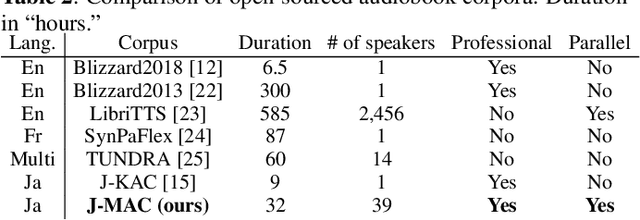

Abstract: In this paper, we construct a Japanese audiobook speech corpus called "J-MAC" for speech synthesis research. With the success of reading-style speech synthesis, the research target is shifting to tasks that use complicated contexts. Audiobook speech synthesis is a good example, as it requires cross-sentence context and expressiveness, among other factors. Unlike reading-style speech, speaker-specific expressiveness in audiobook speech also becomes part of the context. To advance this research, we propose a method of constructing a corpus from audiobooks read by professional speakers. From many audiobooks and their texts, our method can automatically extract and refine the data without any language dependency. Specifically, we use vocal-instrumental separation to extract clean data, connectionist temporal classification to roughly align text and audio, and voice activity detection to refine the alignment. J-MAC is open-sourced on our project page. We also conduct audiobook speech synthesis evaluations, and the results give insights into audiobook speech synthesis.

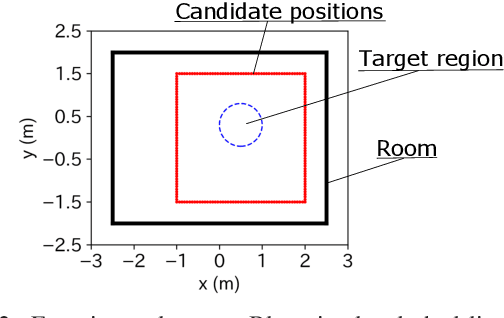

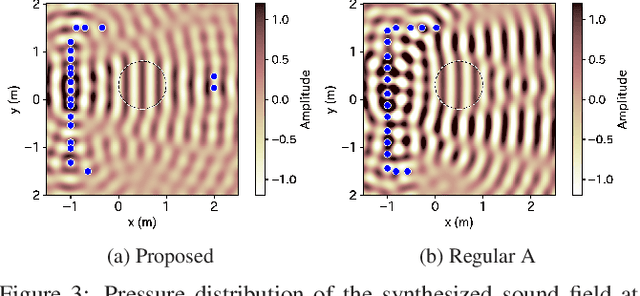

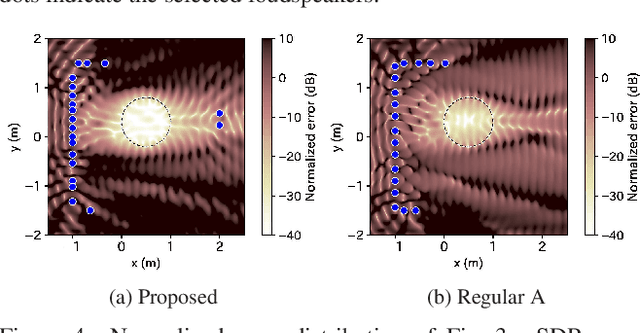

Mean-square-error-based secondary source placement in sound field synthesis with prior information on desired field

Dec 10, 2021

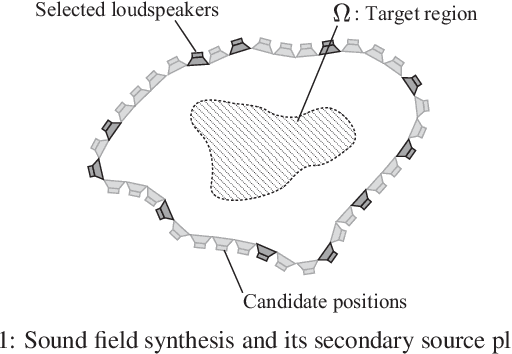

Abstract: A method of optimizing secondary source placement in sound field synthesis is proposed. Such an optimization method will be useful when the allowable placement region and available number of loudspeakers are limited. We formulate a mean-square-error-based cost function, incorporating the statistical properties of possible desired sound fields, for general linear-least-squares-based sound field synthesis methods, including pressure matching and (weighted) mode matching, whereas most of the current methods are applicable only to the pressure-matching method. An efficient greedy algorithm for minimizing the proposed cost function is also derived. Numerical experiments indicated that the placement optimized by the proposed method achieves higher reproduction accuracy than the empirically used regular placement.

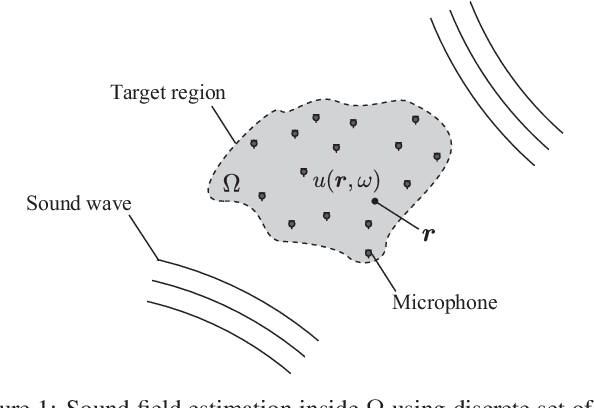

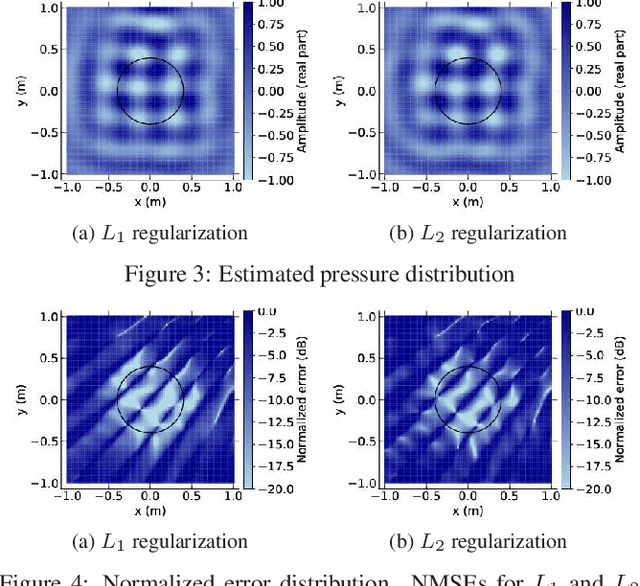

Kernel Learning For Sound Field Estimation With L1 and L2 Regularizations

Oct 12, 2021

Abstract: A method to estimate an acoustic field from discrete microphone measurements is proposed. A kernel-interpolation-based method using the kernel function formulated for sound field interpolation has been used in various applications. The kernel function with directional weighting makes it possible to incorporate prior information on source directions to improve estimation accuracy. However, in prior studies, parameters for directional weighting have been empirically determined. We propose a method to optimize these parameters using observation values, which is particularly useful when prior information on source directions is uncertain. The proposed algorithm is based on discretization of the parameters and representation of the kernel function as a weighted sum of sub-kernels. Two types of regularization for the weights, $L_1$ and $L_2$, are investigated. Experimental results indicate that the proposed method achieves higher estimation accuracy than the method without kernel learning.

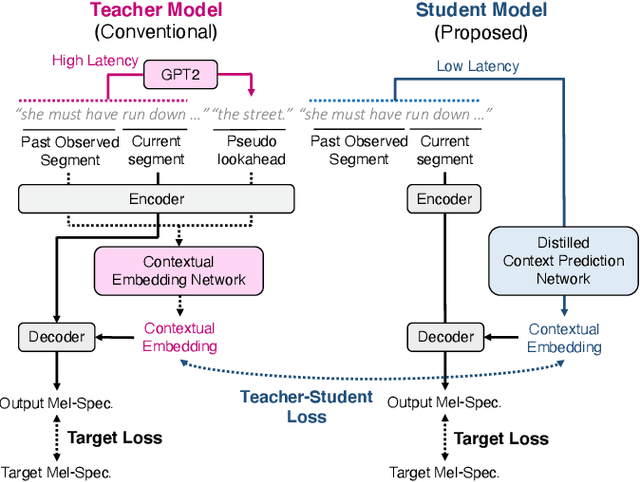

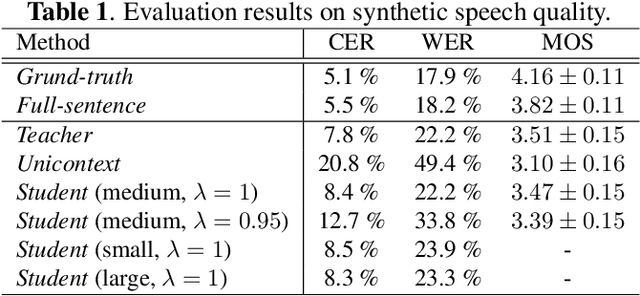

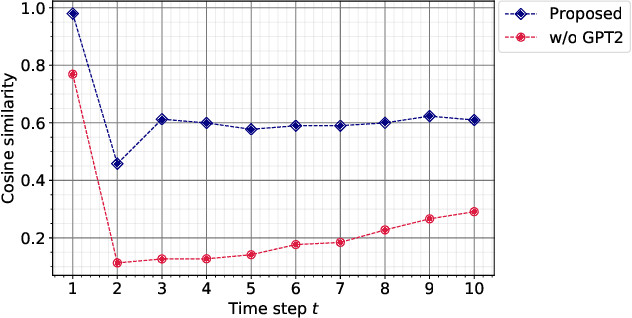

Low-Latency Incremental Text-to-Speech Synthesis with Distilled Context Prediction Network

Sep 22, 2021

Abstract: Incremental text-to-speech (TTS) synthesis generates utterances in small linguistic units for the sake of real-time and low-latency applications. We previously proposed an incremental TTS method that leverages a large pre-trained language model to take unobserved future context into account without waiting for the subsequent segment. Although this method achieves speech quality comparable to that of a method that waits for the future context, it entails a huge amount of processing for sampling from the language model at each time step. In this paper, we propose an incremental TTS method that directly predicts the unobserved future context with a lightweight model, instead of sampling words from the large-scale language model. We perform knowledge distillation from a GPT2-based context prediction network into a simple recurrent model by minimizing a teacher-student loss defined between the context embedding vectors of those models. Experimental results show that the proposed method requires about ten times less inference time to achieve synthetic speech quality comparable to that of our previous method, and it can perform incremental synthesis much faster than the average speaking speed of human English speakers, demonstrating the applicability of our method to real-time applications.
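A teacher-student loss defined between embedding vectors can be sketched in a few lines. This is a toy illustration under stated assumptions, not the paper's setup: the loss is assumed to be the mean squared error between embeddings, a fixed random linear map (`teacher_map`) stands in for the GPT2-based context prediction network, and a linear student trained by stochastic gradient descent stands in for the recurrent model.

```python
import random


def embedding_mse(student_vec, teacher_vec):
    """Assumed teacher-student loss: MSE between two embedding vectors."""
    return sum((s - t) ** 2 for s, t in zip(student_vec, teacher_vec)) / len(teacher_vec)


def linear_forward(weights, x):
    """Apply a weight matrix (list of rows) to an input vector."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


def average_loss(weights, inputs, teacher_embs):
    return sum(
        embedding_mse(linear_forward(weights, x), t)
        for x, t in zip(inputs, teacher_embs)
    ) / len(inputs)


def distill(inputs, teacher_embs, dim_in, dim_out, lr=0.1, epochs=100):
    """Train a linear student with SGD to reproduce the teacher embeddings."""
    weights = [[0.0] * dim_in for _ in range(dim_out)]
    for _ in range(epochs):
        for x, t in zip(inputs, teacher_embs):
            s = linear_forward(weights, x)
            for i in range(dim_out):
                grad_i = 2.0 * (s[i] - t[i]) / dim_out  # d(loss)/d(s[i])
                for j in range(dim_in):
                    weights[i][j] -= lr * grad_i * x[j]
    return weights


random.seed(0)
DIM_IN, DIM_OUT = 3, 2
# Hypothetical teacher: a fixed random linear map producing "context embeddings".
teacher_map = [[random.uniform(-1, 1) for _ in range(DIM_IN)] for _ in range(DIM_OUT)]
inputs = [[random.uniform(-1, 1) for _ in range(DIM_IN)] for _ in range(50)]
teacher_embs = [linear_forward(teacher_map, x) for x in inputs]

untrained = [[0.0] * DIM_IN for _ in range(DIM_OUT)]
initial_loss = average_loss(untrained, inputs, teacher_embs)
student = distill(inputs, teacher_embs, DIM_IN, DIM_OUT)
final_loss = average_loss(student, inputs, teacher_embs)
print("loss before:", initial_loss, "after:", final_loss)
```

At inference time only the lightweight student is evaluated, which is where the speed-up of a distilled model comes from.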
