Adversarial Training for Improving Model Robustness? Look at Both Prediction and Interpretation

Mar 23, 2022 · Hanjie Chen, Yangfeng Ji

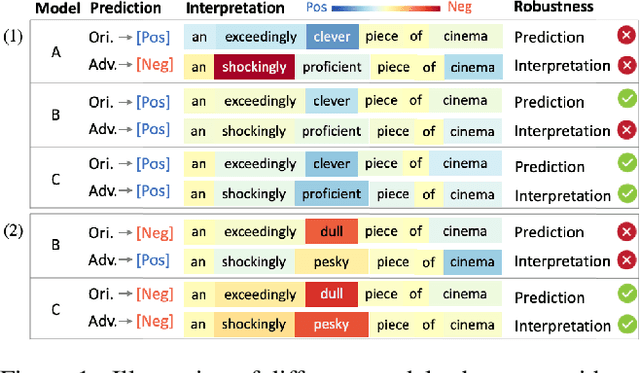

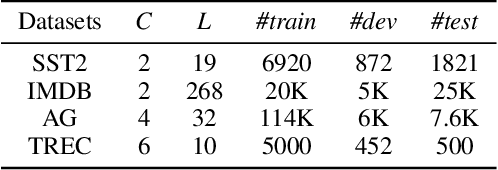

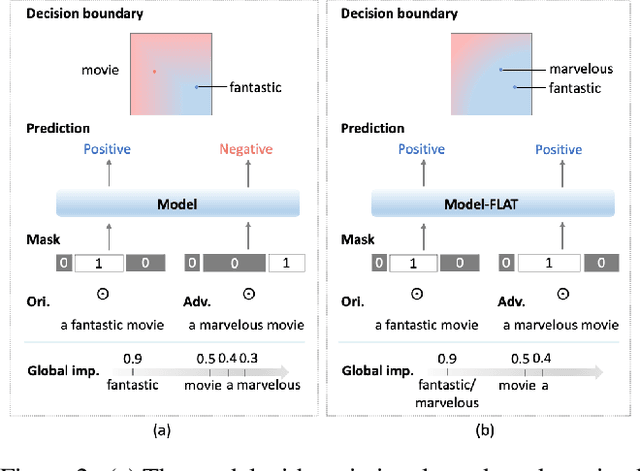

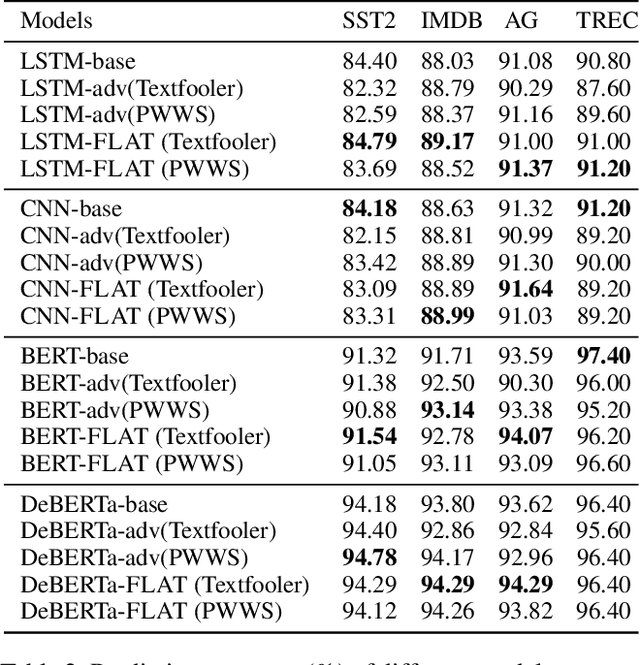

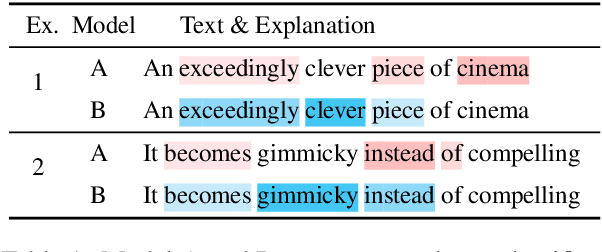

Neural language models are vulnerable to adversarial examples, which are semantically similar to the original inputs but have a few words replaced by synonyms. A common way to improve model robustness is adversarial training, which follows two steps: collecting adversarial examples by attacking a target model, and fine-tuning the model on the dataset augmented with these adversarial examples. The objective of traditional adversarial training is to make a model produce the same correct predictions on an original/adversarial example pair. However, it ignores whether the model's decision-making is consistent across the two similar texts. We argue that a robust model should behave consistently on original/adversarial example pairs, that is, make the same predictions (what) for the same reasons (how), which can be reflected by consistent interpretations. In this work, we propose a novel feature-level adversarial training method named FLAT, which aims to improve model robustness in terms of both predictions and interpretations. FLAT incorporates variational word masks in neural networks to learn global word importance; the masks act as a bottleneck that teaches the model to make predictions based on important words. FLAT explicitly targets the vulnerability caused by the mismatch between the model's understanding of the replaced words and of their synonyms in original/adversarial example pairs by regularizing the corresponding global word importance scores. Experiments show that FLAT improves the robustness, with respect to both predictions and interpretations, of four neural network models (LSTM, CNN, BERT, and DeBERTa) against two adversarial attacks on four text classification tasks. Models trained via FLAT also show better robustness than baseline models on unforeseen adversarial examples across different attacks.
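To make the regularization idea concrete, here is a minimal sketch, not the authors' implementation. It assumes the penalty is the sum of absolute gaps between the global importance scores of each replaced word and its substituted synonym, weighted by a hyperparameter. The scores, the word pairs, the `beta` weight, and both function names are hypothetical and chosen only for illustration.

```python
# Hypothetical global importance scores in [0, 1], one per vocabulary word.
# In FLAT these would be learned by the variational word masks; here they are made up.
importance = {"good": 0.90, "great": 0.40, "movie": 0.10, "film": 0.12}


def importance_gap_penalty(pairs, importance):
    """Sum of absolute importance gaps over (original word, synonym) pairs."""
    return sum(abs(importance[w] - importance[s]) for w, s in pairs)


def total_loss(task_loss, pairs, importance, beta=0.1):
    """Task loss plus the weighted gap penalty (beta is an assumed hyperparameter)."""
    return task_loss + beta * importance_gap_penalty(pairs, importance)


# Word substitutions observed in original/adversarial example pairs (assumed).
pairs = [("good", "great"), ("movie", "film")]
penalty = importance_gap_penalty(pairs, importance)
```

In this toy setup the pair ("good", "great") dominates the penalty because the model weighs the two synonyms very differently, which is the kind of mismatch the abstract describes.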

Explaining Prediction Uncertainty of Pre-trained Language Models by Detecting Uncertain Words in Inputs

Jan 11, 2022 · Hanjie Chen, Yangfeng Ji

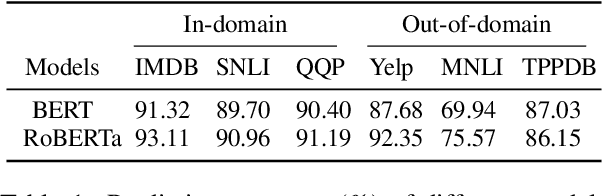

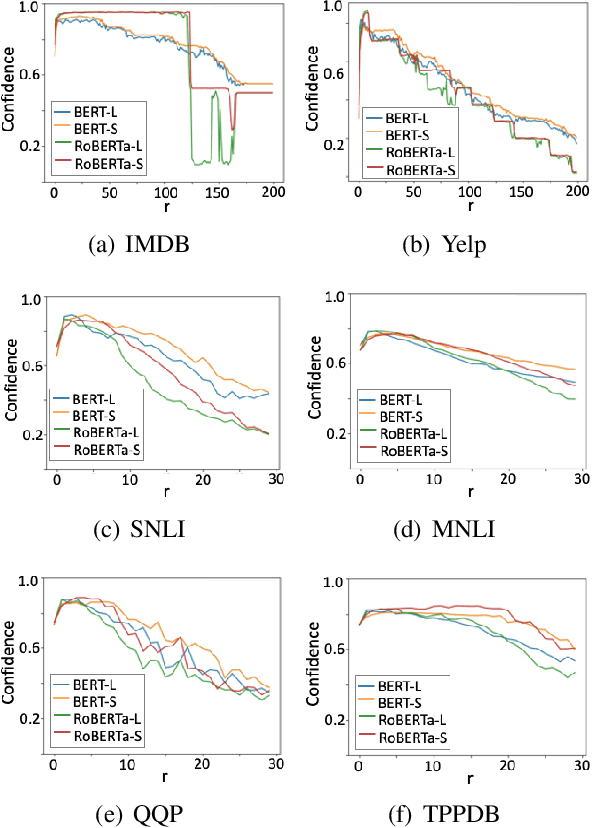

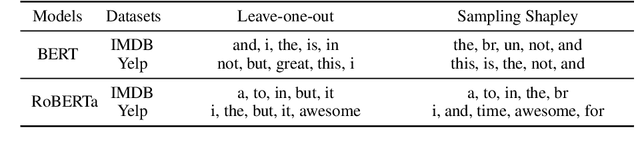

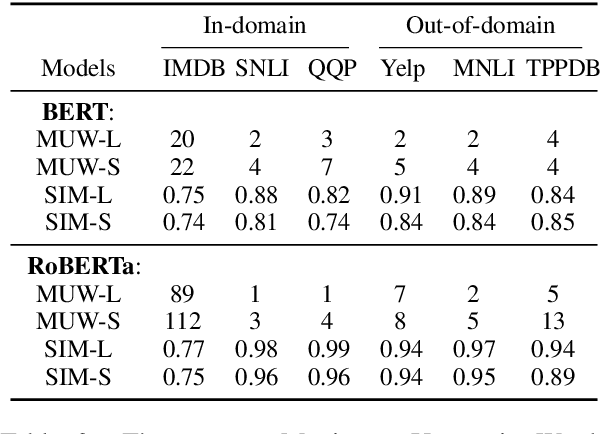

Estimating the predictive uncertainty of pre-trained language models is important for increasing their trustworthiness in NLP. Although much previous work focuses on quantifying prediction uncertainty, there is little work on explaining it. This paper goes a step further and explains uncertain predictions of post-calibrated pre-trained language models. We adapt two perturbation-based post-hoc interpretation methods, Leave-one-out and Sampling Shapley, to identify the words in inputs that cause uncertainty in predictions. We test the proposed methods on BERT and RoBERTa with three tasks (sentiment classification, natural language inference, and paraphrase identification) in both in-domain and out-of-domain settings. Experiments show that both methods consistently capture words in inputs that cause prediction uncertainty.
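The leave-one-out idea can be sketched in a few lines. This is an illustration under stated assumptions, not the paper's code: the classifier is a toy logistic model over made-up word weights (`WEIGHTS`), and uncertainty is measured with Shannon entropy, which may differ from the measure the authors use on calibrated models. A word gets a positive score when removing it makes the prediction less uncertain.

```python
import math

# Toy stand-in for a calibrated classifier: hypothetical per-word sentiment weights.
WEIGHTS = {"great": 2.0, "boring": -2.0, "plot": 0.0, "but": 0.0, "acting": 0.0}


def predict_proba(words):
    """Return [P(negative), P(positive)] from a logistic over summed word weights."""
    score = sum(WEIGHTS.get(w, 0.0) for w in words)
    p_pos = 1.0 / (1.0 + math.exp(-score))
    return [1.0 - p_pos, p_pos]


def entropy(probs):
    """Shannon entropy in nats, used here as the uncertainty measure (an assumption)."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def leave_one_out_uncertainty(words):
    """Score each word by how much removing it lowers predictive entropy."""
    base = entropy(predict_proba(words))
    scores = []
    for i, w in enumerate(words):
        reduced = words[:i] + words[i + 1:]
        scores.append((w, base - entropy(predict_proba(reduced))))
    return scores


sentence = ["great", "acting", "but", "boring", "plot"]
scores = leave_one_out_uncertainty(sentence)
```

Here "great" and "boring" pull the toy model in opposite directions, so the full sentence sits at maximum entropy; removing either one resolves the conflict, and both receive positive scores while the neutral words score zero.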

Perturbing Inputs for Fragile Interpretations in Deep Natural Language Processing

Aug 11, 2021 · Sanchit Sinha, Hanjie Chen, Arshdeep Sekhon, Yangfeng Ji, Yanjun Qi

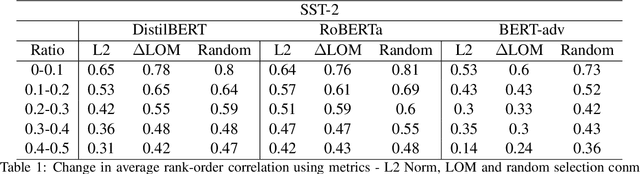

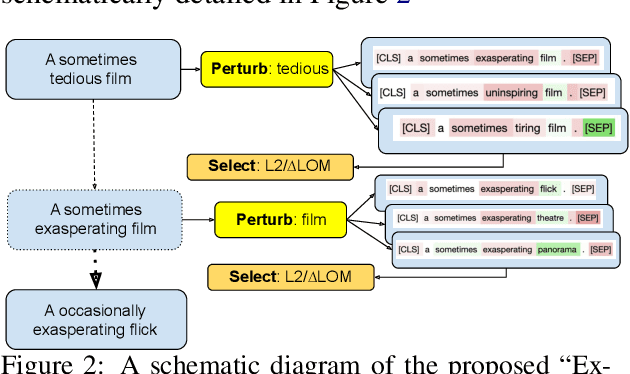

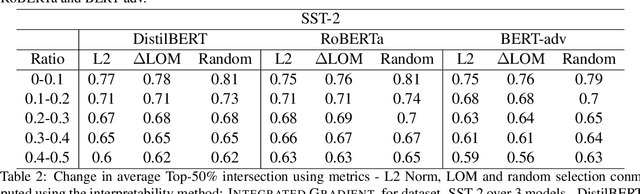

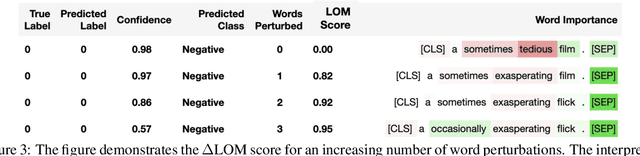

Interpretability methods like Integrated Gradients and LIME are popular choices for explaining natural language model predictions with relative word importance scores. These interpretations need to be robust for trustworthy NLP applications in high-stakes areas like medicine or finance. Our paper demonstrates how interpretations can be manipulated by making simple word perturbations on an input text. Using a small number of word-level swaps, these adversarial perturbations aim to keep the resulting text semantically and spatially similar to its seed input (so that the two should share similar interpretations). At the same time, the generated examples receive the same prediction label as the seed yet are given a substantially different explanation by the interpretation methods. Our experiments generate fragile interpretations to attack two SOTA interpretation methods, across three popular Transformer models and on two different NLP datasets. We observe that the rank-order correlation drops by over 20% when less than 10% of words are perturbed on average, and that it keeps decreasing as more words are perturbed. Furthermore, we demonstrate that candidates generated by our method score well on quality metrics.
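The rank-order correlation between two interpretations can be computed as a Spearman correlation over word importance scores. The sketch below is a plain-Python illustration, assuming the seed and perturbed texts have the same number of tokens (true for word-level swaps) so that the two score vectors align position by position. The attribution values are made up.

```python
def ranks(values):
    """Rank values from 1 (smallest) upward, averaging ranks over ties."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with the one at position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2.0 + 1.0
        for k in range(i, j + 1):
            result[order[k]] = avg
        i = j + 1
    return result


def spearman(a, b):
    """Spearman correlation: Pearson correlation of the two rank vectors.

    Undefined (division by zero) if either vector is constant.
    """
    ra, rb = ranks(a), ranks(b)
    n = len(a)
    ma, mb = sum(ra) / n, sum(rb) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(ra, rb))
    va = sum((x - ma) ** 2 for x in ra)
    vb = sum((y - mb) ** 2 for y in rb)
    return cov / (va * vb) ** 0.5


# Hypothetical word importance scores for a seed text and its perturbed version.
seed_attr = [0.50, 0.10, 0.30, 0.05]
perturbed_attr = [0.10, 0.40, 0.30, 0.05]
rho = spearman(seed_attr, perturbed_attr)
```

For these made-up scores `rho` comes out at about 0.2: the two most important words have swapped places, so the rankings agree only weakly even though the prediction label could be unchanged.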

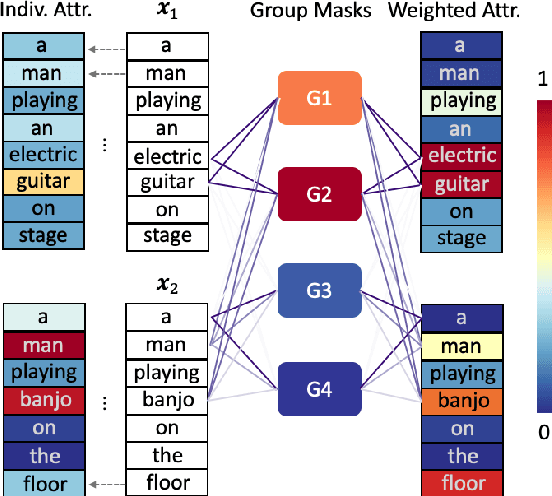

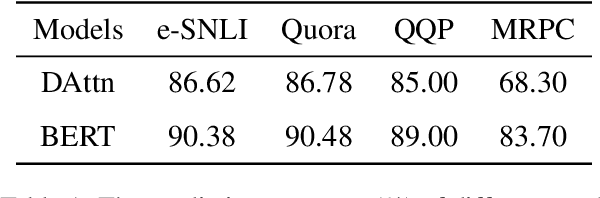

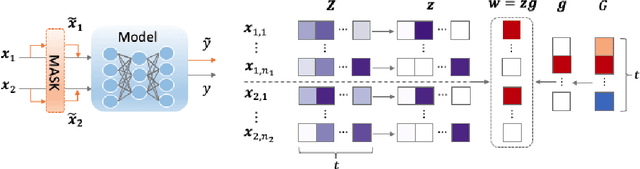

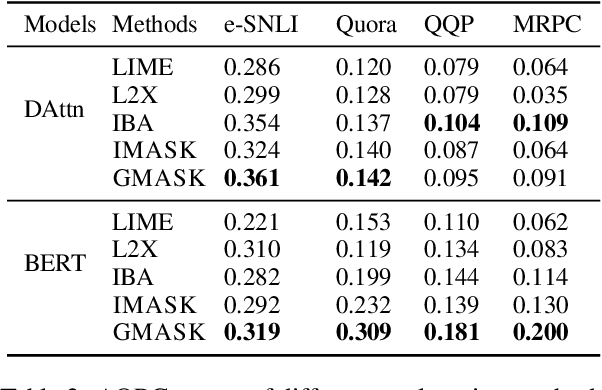

Explaining Neural Network Predictions on Sentence Pairs via Learning Word-Group Masks

Apr 13, 2021 · Hanjie Chen, Song Feng, Jatin Ganhotra, Hui Wan, Chulaka Gunasekara, Sachindra Joshi, Yangfeng Ji

Explaining neural network models is important for increasing their trustworthiness in real-world applications. Most existing methods generate post-hoc explanations for neural network models by identifying individual feature attributions or detecting interactions between adjacent features. However, for models that take text pairs as inputs (e.g., paraphrase identification), existing methods are not sufficient to capture feature interactions between the two texts, and the simple extension of computing all word-pair interactions between the two texts is computationally inefficient. In this work, we propose the Group Mask (GMASK) method, which implicitly detects word correlations by grouping correlated words from the input text pair together and measuring their contribution to the corresponding NLP task as a whole. The proposed method is evaluated with two different model architectures (decomposable attention model and BERT) across four datasets, covering natural language inference and paraphrase identification tasks. Experiments show the effectiveness of GMASK in providing faithful explanations of these models.

Learning Variational Word Masks to Improve the Interpretability of Neural Text Classifiers

Oct 01, 2020 · Hanjie Chen, Yangfeng Ji

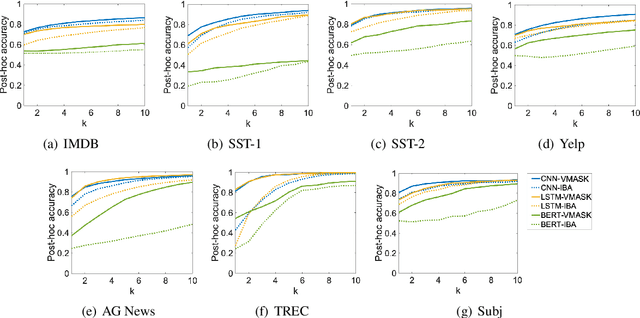

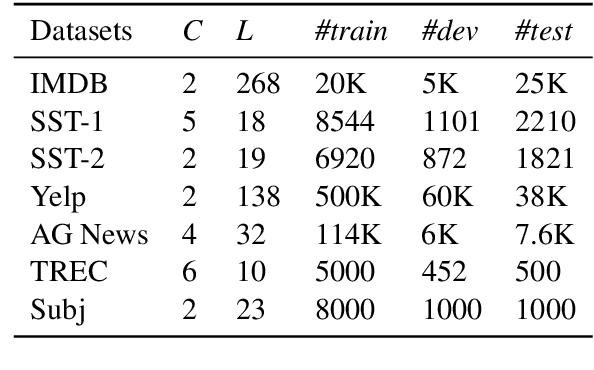

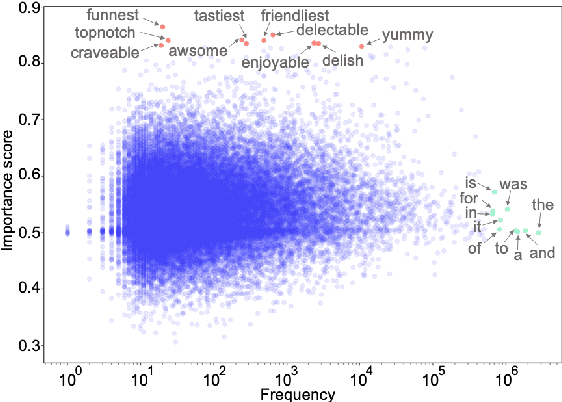

To build an interpretable neural text classifier, most prior work has focused on designing inherently interpretable models or finding faithful explanations. A new line of work on improving model interpretability has just started, and many existing methods require either prior information or human annotations as additional inputs in training. To address this limitation, we propose the variational word mask (VMASK) method, which automatically learns task-specific important words and reduces the influence of irrelevant information on classification, ultimately improving the interpretability of model predictions. The proposed method is evaluated with three neural text classifiers (CNN, LSTM, and BERT) on seven benchmark text classification datasets. Experiments show the effectiveness of VMASK in improving both model prediction accuracy and interpretability.

Generating Hierarchical Explanations on Text Classification via Feature Interaction Detection

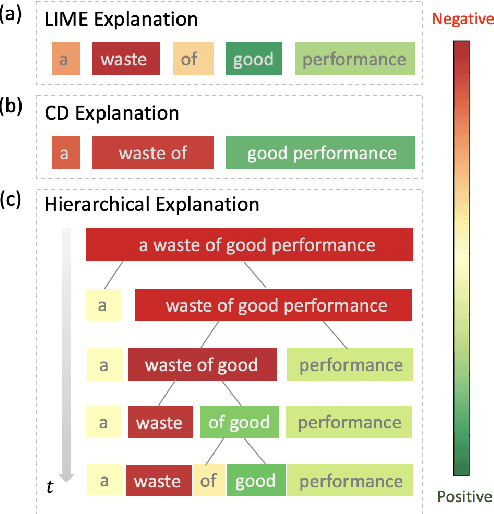

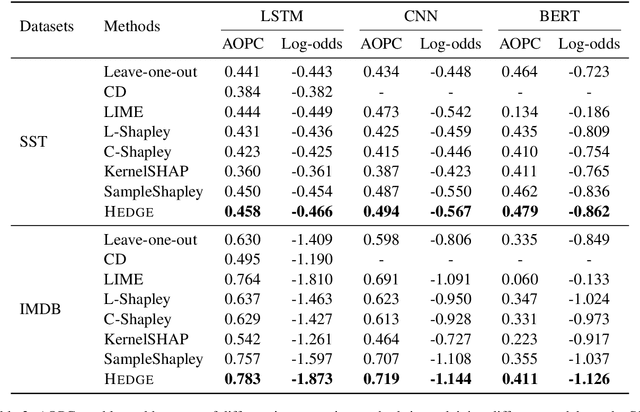

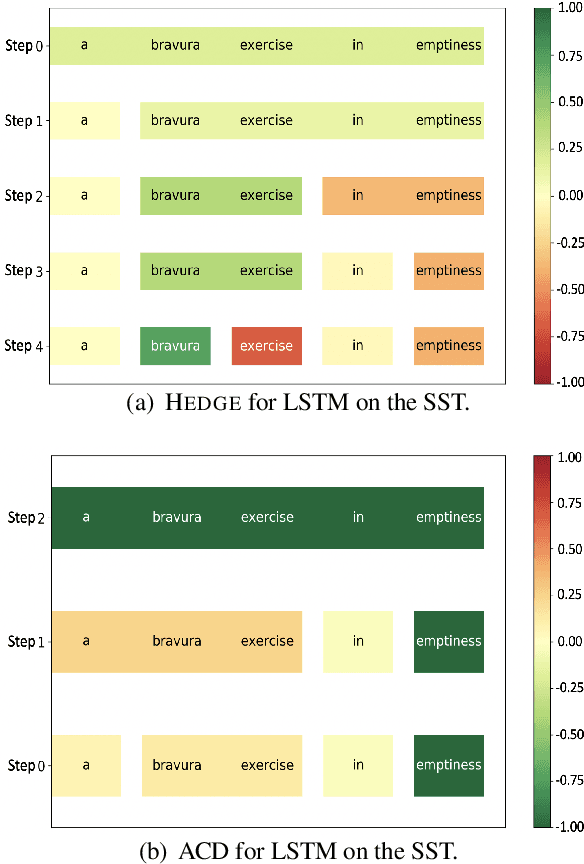

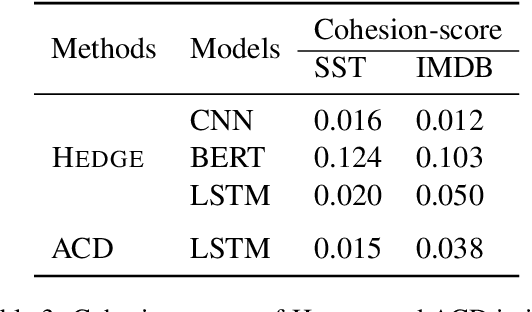

Apr 04, 2020 · Hanjie Chen, Guangtao Zheng, Yangfeng Ji

Generating explanations for neural networks has become crucial for their reliability and trustworthiness in real-world applications. In natural language processing, existing methods usually provide important features, that is, words or phrases selected from an input text, as an explanation, but ignore the interactions between them. This makes it hard for humans to interpret an explanation and connect it to the model prediction. In this work, we build hierarchical explanations by detecting feature interactions. Such explanations visualize how words and phrases are combined at different levels of the hierarchy, which can help users understand the decision-making of black-box models. The proposed method is evaluated with three neural text classifiers (LSTM, CNN, and BERT) on two benchmark datasets, via both automatic and human evaluations. Experiments show the effectiveness of the proposed method in providing explanations that are both faithful to models and interpretable to humans.

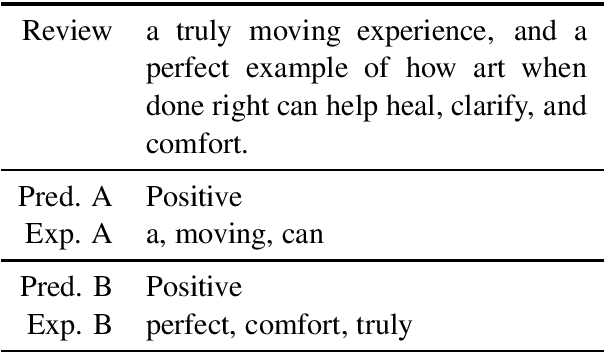

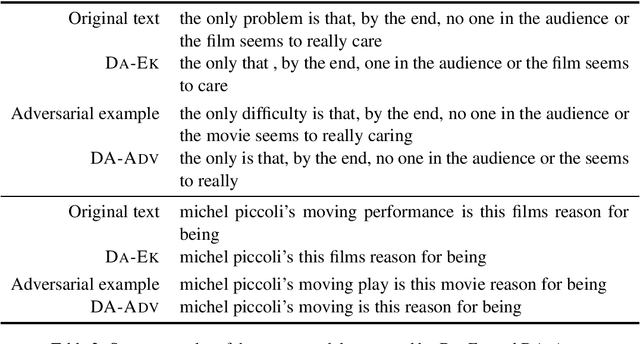

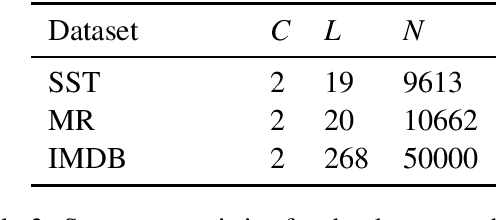

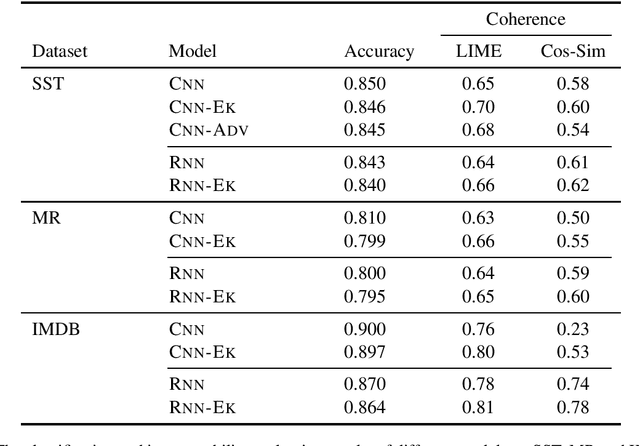

Improving the Explainability of Neural Sentiment Classifiers via Data Augmentation

Oct 09, 2019 · Hanjie Chen, Yangfeng Ji

Sentiment analysis has been widely used by businesses for social media opinion mining, especially in the financial services industry, where customer feedback is critical for companies. Recent neural network models have achieved remarkable performance on sentiment classification, but their lack of interpretable classifications may raise trustworthiness and other issues in practice. In this work, we study the problem of improving the explainability of existing sentiment classifiers. We propose two data augmentation methods that create additional training examples to help improve model explainability: one uses a predefined sentiment word list as external knowledge, and the other uses adversarial examples. We test the proposed methods on both CNN and RNN classifiers with three benchmark sentiment datasets. Model explainability is assessed by both human evaluators and a simple automatic evaluation measure. Experiments show that the proposed data augmentation methods significantly improve the explainability of both neural classifiers.
