Haiwei Wu

Mask Detection and Breath Monitoring from Speech: on Data Augmentation, Feature Representation and Modeling

Aug 14, 2020

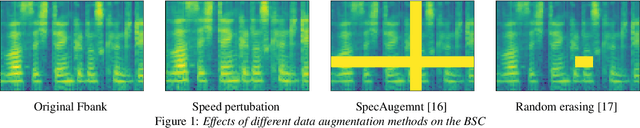

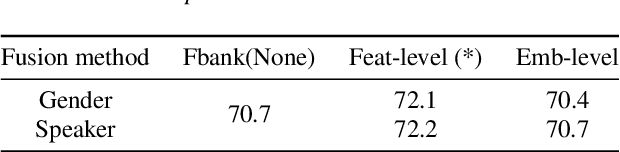

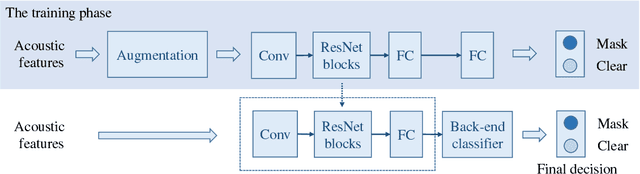

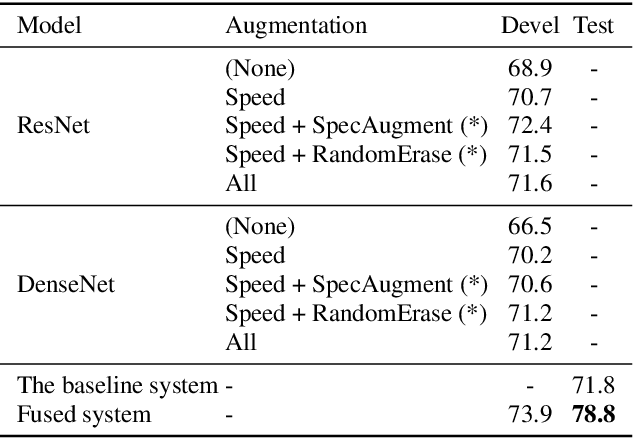

Abstract: This paper introduces our approaches for the Mask and Breathing Sub-Challenges in the Interspeech ComParE Challenge 2020. For the mask detection task, we train deep convolutional neural networks with filter-bank energies, gender-aware features, and speaker-aware features. Support Vector Machines then serve as back-end classifiers for binary prediction on the extracted deep embeddings. Several data augmentation schemes are used to increase the quantity of training data and improve our models' robustness, including speed perturbation, SpecAugment, and random erasing. For the speech breath monitoring task, we investigate different bottleneck features based on the Bi-LSTM structure. Experimental results show that our proposed methods outperform the baselines and achieve 0.746 PCC and 78.8% UAR on the Breathing and Mask evaluation sets, respectively.
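The masking-style augmentations named in the abstract can be illustrated with a minimal sketch. It assumes filter-bank features stored as a list of frames (frames x bins), zero as the fill value, and a single time band plus a single frequency band per call; the function name `mask_time_freq` and its parameters are hypothetical, and the abstract does not state the authors' actual settings. Time warping, which SpecAugment also defines, is omitted.

```python
import random

def mask_time_freq(feats, max_t=2, max_f=2, rng=None):
    """Return a copy of feats (frames x bins) with one time band and
    one frequency band set to zero (simplified SpecAugment-style masking)."""
    rng = rng or random.Random()
    n_frames, n_bins = len(feats), len(feats[0])
    out = [row[:] for row in feats]  # copy so the input stays untouched

    # Band widths are drawn uniformly from 0..max (assumed policy).
    t_width = rng.randint(0, min(max_t, n_frames))
    t_start = rng.randint(0, n_frames - t_width)
    f_width = rng.randint(0, min(max_f, n_bins))
    f_start = rng.randint(0, n_bins - f_width)

    for t in range(n_frames):
        for f in range(n_bins):
            in_time_band = t_start <= t < t_start + t_width
            in_freq_band = f_start <= f < f_start + f_width
            if in_time_band or in_freq_band:
                out[t][f] = 0.0
    return out
```

Applying a fresh random mask each time an utterance is drawn gives the network a different view of the same data on every epoch, which is the usual motivation for this family of augmentations.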

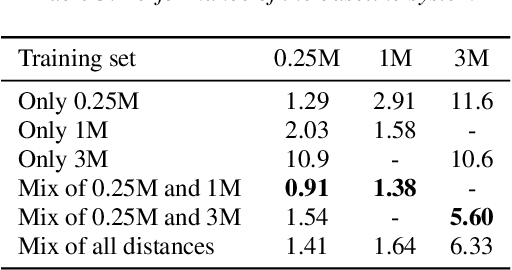

Acoustic Word Embedding System for Code-Switching Query-by-example Spoken Term Detection

May 24, 2020

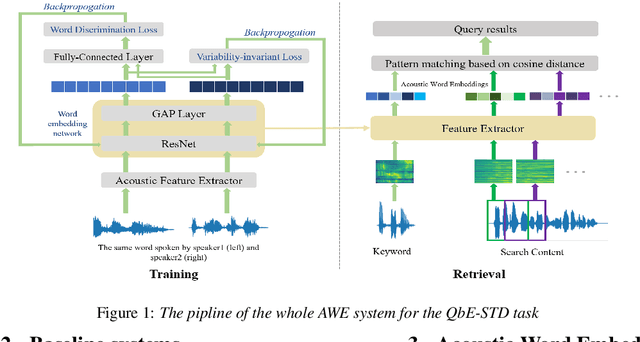

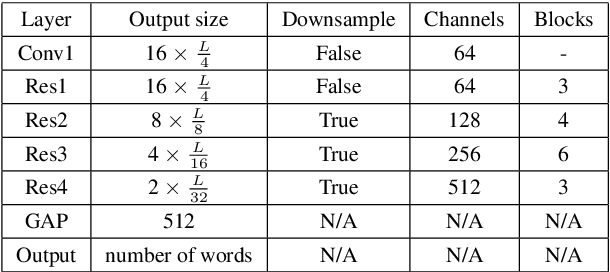

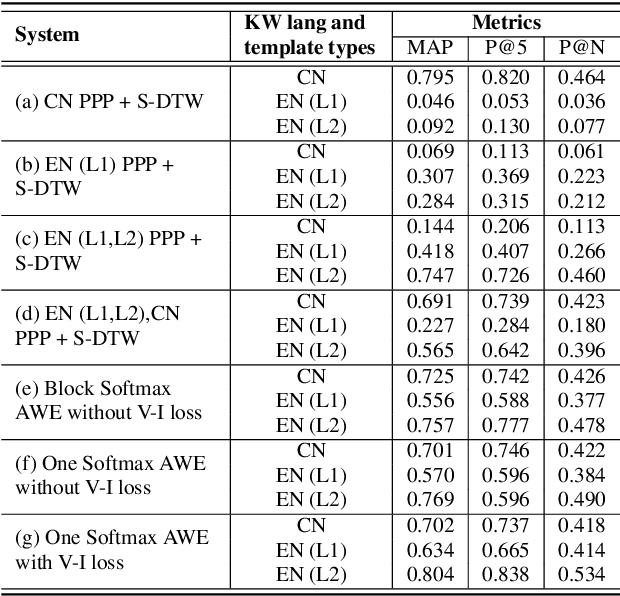

Abstract: In this paper, we propose a deep convolutional neural network-based acoustic word embedding system for code-switching query-by-example spoken term detection. Different from previous configurations, we combine audio data in two languages for training instead of using only a single language. We transform the acoustic features of keyword templates and search content into fixed-dimensional vectors and calculate the distances between keyword segments and search content segments obtained in a sliding manner. An auxiliary variability-invariant loss is also applied to training samples of the same word spoken by different speakers. This strategy is used to prevent the extractor from encoding undesired speaker- or accent-related information into the acoustic word embeddings. Experimental results show that our proposed system produces promising search results in the code-switching test scenario. With an increased number of templates and the use of the variability-invariant loss, the search performance is further enhanced.
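The sliding search described in the abstract can be sketched as follows. This is an illustration under stated assumptions, not the authors' system: `mean_pool` is a stand-in for the trained CNN embedding extractor, cosine distance is an assumed metric (the abstract only says "distances"), and the names `sliding_search`, `win`, and `hop` are hypothetical.

```python
import math

def cosine_distance(a, b):
    """1 - cosine similarity; returns 1.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 1.0
    return 1.0 - dot / (norm_a * norm_b)

def mean_pool(frames):
    """Stand-in embedding: average the frames into one fixed-size vector."""
    n = len(frames)
    return [sum(col) / n for col in zip(*frames)]

def sliding_search(template, content, win, hop, embed=mean_pool):
    """Embed the keyword template once, then score every window of the
    search content; return (start_frame, distance) of the closest window."""
    query = embed(template)
    hits = []
    for start in range(0, len(content) - win + 1, hop):
        segment = embed(content[start:start + win])
        hits.append((start, cosine_distance(query, segment)))
    return min(hits, key=lambda h: h[1]) if hits else None
```

Because every segment maps to a vector of the same size, each comparison is a single distance computation regardless of segment length, which is what makes the fixed-dimensional embedding attractive for search.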

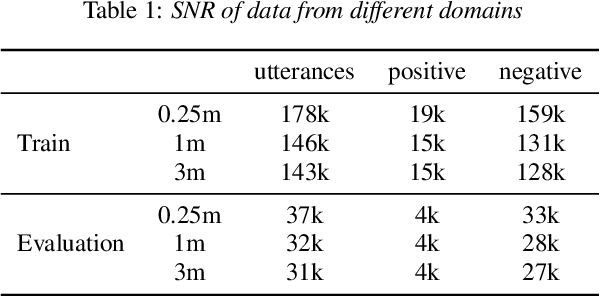

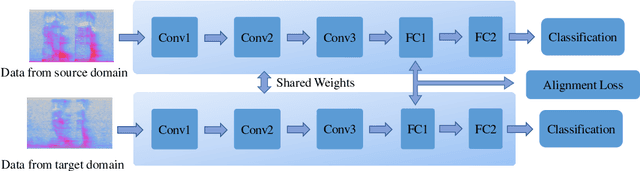

Domain Aware Training for Far-field Small-footprint Keyword Spotting

May 16, 2020

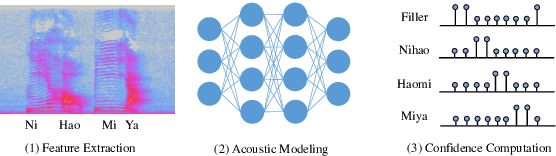

Abstract: In this paper, we focus on the task of small-footprint keyword spotting under the far-field scenario. Far-field environments are commonly encountered in real-life speech applications and cause severe performance degradation due to room reverberation and various kinds of noise. Our baseline system is built on a convolutional neural network trained with pooled far-field and close-talking speech. To cope with the distortions, we develop three domain-aware training systems: the domain embedding system, the deep CORAL system, and the multi-task learning system. These methods incorporate domain knowledge into network training and improve the performance of the keyword classifier in far-field conditions. Experimental results show that our proposed methods maintain the performance on close-talking speech and achieve significant improvement on the far-field test set.
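For readers unfamiliar with deep CORAL, the sketch below computes the standard CORAL loss: the squared Frobenius distance between the feature covariances of two domains, scaled by 1/(4d²). Treating close-talking features as the source and far-field features as the target is an assumption made here for illustration; the abstract does not say which layer the loss is attached to or how it is weighted against the keyword classification loss.

```python
def covariance(feats):
    """Sample covariance (d x d) of feats given as n rows of d values, n >= 2."""
    n, d = len(feats), len(feats[0])
    means = [sum(col) / n for col in zip(*feats)]
    centered = [[x - m for x, m in zip(row, means)] for row in feats]
    return [
        [sum(r[i] * r[j] for r in centered) / (n - 1) for j in range(d)]
        for i in range(d)
    ]

def coral_loss(source, target):
    """Squared Frobenius distance between covariances, divided by 4 * d^2."""
    d = len(source[0])
    cov_s, cov_t = covariance(source), covariance(target)
    sq_diff = sum(
        (cov_s[i][j] - cov_t[i][j]) ** 2 for i in range(d) for j in range(d)
    )
    return sq_diff / (4.0 * d * d)
```

The loss depends only on second-order statistics, so shifting every feature in one domain by a constant leaves it unchanged, while a change in feature scale or correlation is penalized.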

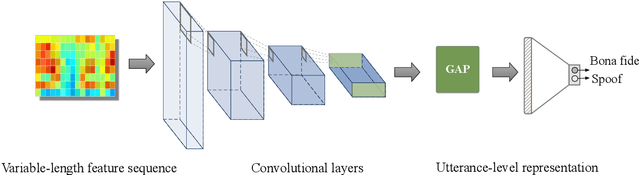

The DKU Replay Detection System for the ASVspoof 2019 Challenge: On Data Augmentation, Feature Representation, Classification, and Fusion

Jul 05, 2019

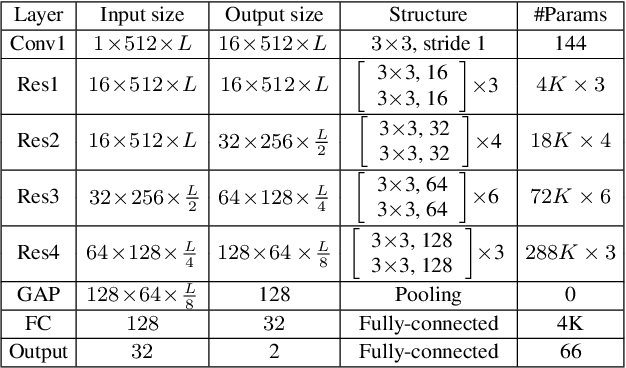

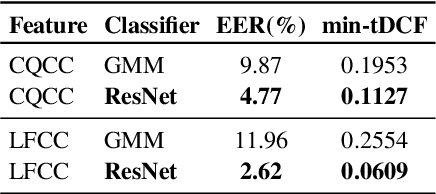

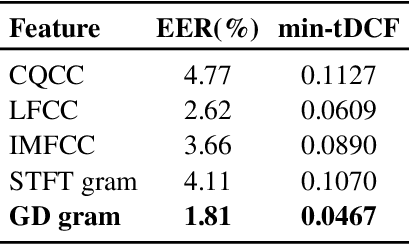

Abstract: This paper describes our DKU replay detection system for the ASVspoof 2019 challenge. The goal is to develop spoofing countermeasures for automatic speaker recognition in the physical access scenario. We approach the countermeasure pipeline from four aspects: data augmentation, feature representation, classification, and fusion. First, we introduce an utterance-level deep learning framework for anti-spoofing. It receives a variable-length feature sequence and outputs utterance-level scores directly. Based on this framework, we try out various input feature representations extracted from either the magnitude spectrum or the phase spectrum. We also augment the data by applying speed perturbation to the raw waveform. Our best single system employs a residual neural network trained on the speed-perturbed group delay gram. It achieves an EER of 1.04% on the development set and an EER of 1.08% on the evaluation set. Finally, taking the simple average of the scores from several single systems further improves the performance. Our primary system obtains an EER of 0.24% on the development set and 0.66% on the evaluation set.
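The two evaluation steps mentioned in the abstract, averaging scores across systems and measuring the equal error rate (EER), can be sketched as below. The assumptions are that a higher score means "genuine", that all systems score the same trials in the same order, and that the EER is approximated at the threshold where false acceptance and false rejection rates are closest; the official challenge scoring may interpolate differently. The function names are hypothetical.

```python
def average_scores(systems):
    """Fuse systems by averaging their scores trial by trial.
    `systems` is a list of score lists, one list per single system."""
    return [sum(trial) / len(trial) for trial in zip(*systems)]

def equal_error_rate(genuine, spoof):
    """Approximate EER: sweep thresholds over the observed scores and
    report the mean of FAR and FRR where the two rates are closest."""
    best = None
    for thr in sorted(set(genuine) | set(spoof)):
        far = sum(s >= thr for s in spoof) / len(spoof)      # spoof accepted
        frr = sum(g < thr for g in genuine) / len(genuine)   # genuine rejected
        gap = abs(far - frr)
        if best is None or gap < best[0]:
            best = (gap, (far + frr) / 2.0)
    return best[1]
```

Simple averaging presumes the single systems produce scores on comparable scales; if they do not, the scores would normally be normalized before fusion.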
