Guihua Shan

TemporalFlowViz: Parameter-Aware Visual Analytics for Interpreting Scramjet Combustion Evolution

Sep 05, 2025

Abstract: Understanding the complex combustion dynamics within scramjet engines is critical for advancing high-speed propulsion technologies. However, the large scale and high dimensionality of simulation-generated temporal flow field data present significant challenges for visual interpretation, feature differentiation, and cross-case comparison. In this paper, we present TemporalFlowViz, a parameter-aware visual analytics workflow and system designed to support expert-driven clustering, visualization, and interpretation of temporal flow fields from scramjet combustion simulations. Our approach leverages hundreds of simulated combustion cases with varying initial conditions, each producing time-sequenced flow field images. We use pretrained Vision Transformers to extract high-dimensional embeddings from these frames, apply dimensionality reduction and density-based clustering to uncover latent combustion modes, and construct temporal trajectories in the embedding space to track the evolution of each simulation over time. To bridge the gap between latent representations and expert reasoning, domain specialists annotate representative cluster centroids with descriptive labels. These annotations are used as contextual prompts for a vision-language model, which generates natural-language summaries for individual frames and full simulation cases. The system also supports parameter-based filtering, similarity-based case retrieval, and coordinated multi-view exploration to facilitate in-depth analysis. We demonstrate the effectiveness of TemporalFlowViz through two expert-informed case studies and expert feedback, showing that TemporalFlowViz enhances hypothesis generation, supports interpretable pattern discovery, and facilitates knowledge discovery in large-scale scramjet combustion analysis.
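The clustering-and-trajectory step described above can be illustrated with a small sketch. It assumes each frame has already been embedded (for example, by a pretrained Vision Transformer) and reduced to 2D. DBSCAN stands in for the density-based clustering method, and the per-time-step mean distance used for case retrieval is a hypothetical similarity measure, not necessarily the one TemporalFlowViz uses.

```python
import math
from typing import Dict, List, Optional, Sequence, Tuple

Point = Tuple[float, float]  # assumed: a frame embedding already reduced to 2D


def dbscan(points: Sequence[Point], eps: float, min_pts: int) -> List[int]:
    """Minimal DBSCAN: returns a cluster id per point, or -1 for noise."""
    labels: List[Optional[int]] = [None] * len(points)  # None = not yet visited

    def neighbors(i: int) -> List[int]:
        # Brute-force eps-neighborhood; includes the point itself.
        return [j for j, q in enumerate(points) if math.dist(points[i], q) <= eps]

    cluster = -1
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        seeds = neighbors(i)
        if len(seeds) < min_pts:
            labels[i] = -1  # noise for now; may later become a border point
            continue
        cluster += 1
        labels[i] = cluster
        queue = [j for j in seeds if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == -1:
                labels[j] = cluster  # former noise reached from a core point: border
            if labels[j] is not None:
                continue
            labels[j] = cluster
            j_seeds = neighbors(j)
            if len(j_seeds) >= min_pts:  # j is a core point: keep expanding
                queue.extend(j_seeds)
    return [label if label is not None else -1 for label in labels]


def mode_sequences(cases: Dict[str, List[Point]], eps: float, min_pts: int) -> Dict[str, List[int]]:
    """Cluster all frames jointly, then collapse each case's time-ordered labels
    into a sequence of mode transitions (repeated consecutive labels merged)."""
    owners: List[str] = []
    frames: List[Point] = []
    for name, trajectory in cases.items():
        owners.extend([name] * len(trajectory))
        frames.extend(trajectory)
    labels = dbscan(frames, eps, min_pts)
    sequences: Dict[str, List[int]] = {name: [] for name in cases}
    for name, label in zip(owners, labels):
        seq = sequences[name]
        if not seq or seq[-1] != label:
            seq.append(label)
    return sequences


def trajectory_distance(a: List[Point], b: List[Point]) -> float:
    """Hypothetical similarity measure: mean distance between frames at the
    same time step (assumes both trajectories are non-empty)."""
    n = min(len(a), len(b))
    return sum(math.dist(a[t], b[t]) for t in range(n)) / n


def retrieve_similar(query: str, cases: Dict[str, List[Point]], k: int) -> List[str]:
    """Return the names of the k cases whose trajectories are closest to `query`."""
    ranked = sorted(
        (trajectory_distance(cases[query], traj), name)
        for name, traj in cases.items()
        if name != query
    )
    return [name for _, name in ranked[:k]]


if __name__ == "__main__":
    toy_cases = {  # made-up 2D trajectories, four frames per case
        "A": [(0.0, 0.0), (0.1, 0.0), (5.0, 5.0), (5.1, 5.0)],
        "B": [(0.0, 0.1), (0.1, 0.1), (5.0, 5.1), (5.1, 5.1)],
        "C": [(0.0, 0.0), (0.05, 0.0), (0.1, 0.05), (0.0, 0.1)],
    }
    print(mode_sequences(toy_cases, eps=0.5, min_pts=3))
    print(retrieve_similar("A", toy_cases, k=1))
```

In this toy data, cases A and B both move from the mode near the origin to the mode near (5, 5), so both collapse to the mode sequence [0, 1], while C never leaves the first mode; querying A returns B.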

ParamsDrag: Interactive Parameter Space Exploration via Image-Space Dragging

Jul 19, 2024

Abstract: Numerical simulation serves as a cornerstone of scientific modeling, yet fine-tuning simulation parameters poses significant challenges. Conventionally, parameter adjustment relies on extensive numerical simulations, data analysis, and expert insight, resulting in substantial computational cost and low efficiency. The emergence of deep learning in recent years has opened promising avenues for more efficient exploration of parameter spaces. However, existing approaches often lack intuitive methods for precise parameter adjustment and optimization. To tackle these challenges, we introduce ParamsDrag, a model that facilitates parameter space exploration through direct interaction with visualizations. Inspired by DragGAN, our ParamsDrag model operates in three steps. First, the generative component of ParamsDrag generates visualizations based on the input simulation parameters. Second, by directly dragging structure-related features in the visualizations, users can intuitively understand the controlling effect of different parameters. Third, with the understanding gained in the previous step, users can steer ParamsDrag to produce dynamic visual outcomes. Through experiments conducted on real-world simulations and comparisons with state-of-the-art deep learning-based approaches, we demonstrate the efficacy of our solution.
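In a simplified form, the drag interaction can be framed as an inverse problem: find parameters whose generated visualization places a chosen structural feature at the user's target position. The sketch below is a toy illustration under that assumption, not the ParamsDrag model. `toy_feature_position` is a hypothetical analytic stand-in for the generator plus a feature tracker, and the parameters are updated by plain gradient descent using central finite differences.

```python
from typing import Callable, List, Sequence, Tuple

Position = Tuple[float, float]


def toy_feature_position(params: Sequence[float]) -> Position:
    """Hypothetical stand-in for 'generate a visualization, then locate one
    structural feature': maps two simulation parameters to an (x, y) position."""
    a, b = params
    return (10.0 + 4.0 * a, 20.0 - 3.0 * b + 0.5 * a * b)


def drag_to_target(
    position_fn: Callable[[Sequence[float]], Position],
    params: Sequence[float],
    target: Position,
    lr: float = 0.02,
    steps: int = 500,
    h: float = 1e-5,
) -> List[float]:
    """Adjust parameters so the tracked feature moves toward `target`."""
    current = list(params)

    def loss(p: Sequence[float]) -> float:
        # Squared distance between the feature's position and the drag target.
        x, y = position_fn(p)
        return (x - target[0]) ** 2 + (y - target[1]) ** 2

    for _ in range(steps):
        grad = []
        for i in range(len(current)):
            plus, minus = list(current), list(current)
            plus[i] += h
            minus[i] -= h
            grad.append((loss(plus) - loss(minus)) / (2.0 * h))  # central difference
        current = [p - lr * g for p, g in zip(current, grad)]
    return current


if __name__ == "__main__":
    start = [0.0, 0.0]  # feature initially at (10, 20)
    new_params = drag_to_target(toy_feature_position, start, target=(14.0, 17.0))
    print(new_params, toy_feature_position(new_params))
```

Finite differences keep the sketch dependency-free; with a differentiable learned generator, automatic differentiation would be the more natural way to obtain the gradient.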

CNNPruner: Pruning Convolutional Neural Networks with Visual Analytics

Sep 08, 2020

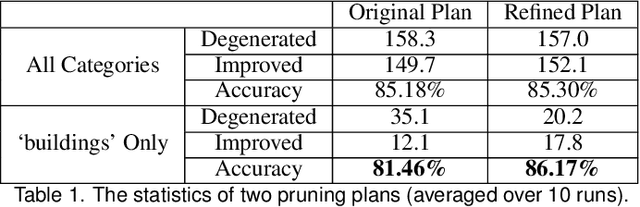

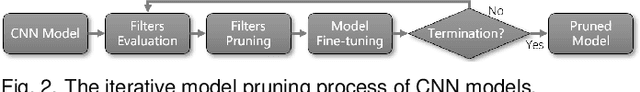

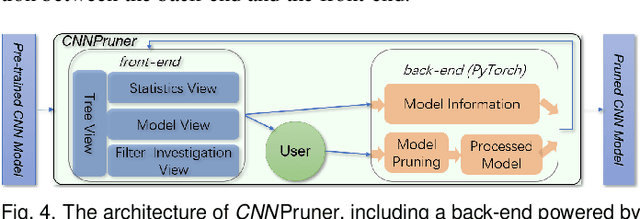

Abstract: Convolutional neural networks (CNNs) have demonstrated remarkably strong performance in many computer vision tasks. The increasing size of CNN models, however, prevents them from being widely deployed on devices with limited computational resources, e.g., mobile/embedded devices. The emerging topic of model pruning strives to address this problem by removing less important neurons and fine-tuning the pruned networks to minimize the accuracy loss. Nevertheless, existing automated pruning solutions often rely on a numerical threshold for the pruning criteria, lacking the flexibility to optimally balance the trade-off between model size and accuracy. Moreover, the complicated interplay between the stages of neuron pruning and model fine-tuning makes this process opaque and therefore difficult to optimize. In this paper, we address these challenges through a visual analytics approach named CNNPruner. It assesses the importance of convolutional filters in terms of both instability and sensitivity, and allows users to interactively create pruning plans according to a desired goal on model size or accuracy. CNNPruner also integrates state-of-the-art filter visualization techniques to help users understand the roles that different filters play and refine their pruning plans. Through comprehensive case studies on CNNs with real-world sizes, we validate the effectiveness of CNNPruner.
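A pruning plan aimed at a model-size goal can be sketched as a greedy selection over per-filter scores. In this sketch the `sensitivity` and `instability` values are assumed to be precomputed and oriented so that larger means more important; the weighted sum in `importance`, the `alpha` weight, and the one-filter-per-layer floor are hypothetical choices, not CNNPruner's definitions. The sketch also ignores the fact that removing a filter shrinks the next layer's input channels.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class FilterStats:
    layer: str
    index: int
    params: int         # weights owned by this filter
    sensitivity: float  # assumed precomputed; larger = more important
    instability: float  # assumed precomputed; larger = more important


def importance(f: FilterStats, alpha: float = 0.5) -> float:
    # Hypothetical combination of the two criteria into one score.
    return alpha * f.sensitivity + (1.0 - alpha) * f.instability


def pruning_plan(
    filters: List[FilterStats],
    target_ratio: float,
    alpha: float = 0.5,
    min_per_layer: int = 1,
) -> Tuple[List[Tuple[str, int]], float]:
    """Greedily prune the least important filters until the remaining parameter
    count is at most `target_ratio` of the original. Returns the plan and the
    achieved size ratio."""
    total = sum(f.params for f in filters)
    budget = target_ratio * total
    remaining = total
    per_layer: Dict[str, int] = {}
    for f in filters:
        per_layer[f.layer] = per_layer.get(f.layer, 0) + 1

    plan: List[Tuple[str, int]] = []
    for f in sorted(filters, key=lambda x: importance(x, alpha)):
        if remaining <= budget:
            break  # size goal reached
        if per_layer[f.layer] <= min_per_layer:
            continue  # never empty a layer entirely
        plan.append((f.layer, f.index))
        per_layer[f.layer] -= 1
        remaining -= f.params
    return plan, remaining / total


if __name__ == "__main__":
    stats = [  # made-up scores for a two-layer toy network
        FilterStats("conv1", 0, 100, 0.9, 0.8),
        FilterStats("conv1", 1, 100, 0.1, 0.2),
        FilterStats("conv2", 0, 200, 0.5, 0.4),
        FilterStats("conv2", 1, 200, 0.05, 0.1),
        FilterStats("conv2", 2, 200, 0.7, 0.6),
    ]
    print(pruning_plan(stats, target_ratio=0.7))
```

With these made-up scores and a 70% size target, the sketch prunes `conv2` filter 1 and then `conv1` filter 1, leaving 62.5% of the parameters; an interactive tool would let the user inspect and refine such a plan before fine-tuning.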