Fadel Adib

Wave-Former: Through-Occlusion 3D Reconstruction via Wireless Shape Completion

Nov 19, 2025

Abstract: We present Wave-Former, a novel method capable of high-accuracy 3D shape reconstruction for completely occluded, diverse, everyday objects. This capability can open new applications spanning robotics, augmented reality, and logistics. Our approach leverages millimeter-wave (mmWave) wireless signals, which can penetrate common occlusions and reflect off hidden objects. In contrast to past mmWave reconstruction methods, which suffer from limited coverage and high noise, Wave-Former introduces a physics-aware shape completion model capable of inferring full 3D geometry. At the heart of Wave-Former's design is a novel three-stage pipeline which bridges raw wireless signals with recent advancements in vision-based shape completion by incorporating physical properties of mmWave signals. The pipeline proposes candidate geometric surfaces, employs a transformer-based shape completion model designed specifically for mmWave signals, and finally performs entropy-guided surface selection. This enables Wave-Former to be trained using entirely synthetic point-clouds, while demonstrating impressive generalization to real-world data. In head-to-head comparisons with state-of-the-art baselines, Wave-Former raises recall from 54% to 72% while maintaining a high precision of 85%.
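For readers unfamiliar with how precision and recall apply to reconstructed geometry, the following is a minimal sketch of one common convention for point clouds. The abstract does not define how Wave-Former computes these metrics, so the distance threshold `tau`, the function name `precision_recall`, and the nearest-neighbor matching rule are all assumptions made for illustration.

```python
import math

def precision_recall(pred, truth, tau):
    """Threshold-based precision/recall between two non-empty 3D point sets.

    Assumed convention (not taken from the paper): a point counts as
    matched if its nearest neighbor in the other set lies within tau.
    """
    def covered(src, dst):
        # Fraction of points in src that have a neighbor in dst within tau.
        hits = sum(1 for p in src if min(math.dist(p, q) for q in dst) <= tau)
        return hits / len(src)

    precision = covered(pred, truth)  # how much of the prediction is correct
    recall = covered(truth, pred)     # how much of the true shape is recovered
    return precision, recall
```

Under this convention, low recall corresponds to the limited coverage the abstract attributes to earlier mmWave methods: the predicted points may be accurate, but large parts of the true surface have no nearby prediction.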

RISE: Single Static Radar-based Indoor Scene Understanding

Nov 18, 2025

Abstract: Robust and privacy-preserving indoor scene understanding remains a fundamental open problem. While optical sensors such as RGB and LiDAR offer high spatial fidelity, they suffer from severe occlusions and introduce privacy risks in indoor environments. In contrast, millimeter-wave (mmWave) radar preserves privacy and penetrates obstacles, but its inherently low spatial resolution makes reliable geometric reasoning difficult. We introduce RISE, the first benchmark and system for single-static-radar indoor scene understanding, jointly targeting layout reconstruction and object detection. RISE is built upon the key insight that multipath reflections, traditionally treated as noise, encode rich geometric cues. To exploit this, we propose a Bi-Angular Multipath Enhancement that explicitly models Angle-of-Arrival and Angle-of-Departure to recover secondary (ghost) reflections and reveal invisible structures. On top of these enhanced observations, a simulation-to-reality Hierarchical Diffusion framework transforms fragmented radar responses into complete layout reconstruction and object detection. Our benchmark contains 50,000 frames collected across 100 real indoor trajectories, forming the first large-scale dataset dedicated to radar-based indoor scene understanding. Extensive experiments show that RISE reduces the Chamfer Distance by 60% (down to 16 cm) compared to the state of the art in layout reconstruction, and delivers the first mmWave-based object detection, achieving 58% IoU. These results establish RISE as a new foundation for geometry-aware and privacy-preserving indoor scene understanding using a single static radar.
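The Chamfer Distance cited above measures how far two point sets are from each other on average. The sketch below shows one common symmetric, unsquared formulation. The abstract does not state which variant RISE uses (squared versus unsquared distances, summed versus averaged directions), so this particular form and the name `chamfer_distance` are assumptions for illustration only.

```python
import math

def chamfer_distance(a, b):
    """Symmetric Chamfer Distance between two non-empty 3D point sets.

    Assumed variant: mean nearest-neighbor Euclidean distance in each
    direction, then the average of the two directions.
    """
    def mean_nn(src, dst):
        # Average distance from each point in src to its closest point in dst.
        return sum(min(math.dist(p, q) for q in dst) for p in src) / len(src)

    return 0.5 * (mean_nn(a, b) + mean_nn(b, a))
```

With coordinates in meters, a result of 0.16 under this variant would correspond to the 16 cm figure only if the paper uses the same formulation, which the abstract does not confirm. The brute-force nearest-neighbor search is quadratic in the number of points; a spatial index would be the usual choice for large layouts.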

3D Self-Localization of Drones using a Single Millimeter-Wave Anchor

Oct 12, 2023

Abstract: We present the design, implementation, and evaluation of MiFly, a self-localization system for autonomous drones that works across indoor and outdoor environments, including low-visibility, dark, and GPS-denied settings. MiFly performs 6DoF self-localization by leveraging a single millimeter-wave (mmWave) anchor in its vicinity - even if that anchor is visually occluded. MmWave signals are used in radar and 5G systems and can operate in the dark and through occlusions. MiFly introduces a new mmWave anchor design and mounts light-weight high-resolution mmWave radars on a drone. By jointly designing the localization algorithms and the novel low-power mmWave anchor hardware (including its polarization and modulation), the drone is capable of high-speed 3D localization. Furthermore, by intelligently fusing the location estimates from its mmWave radars and its IMUs, it can accurately and robustly track its 6DoF trajectory. We implemented and evaluated MiFly on a DJI drone. We demonstrate a median localization error of 7 cm and a 90th-percentile error below 15 cm, even when the anchor is fully occluded (visually) from the drone.

Towards Battery-Free Machine Learning and Inference in Underwater Environments

Feb 16, 2022

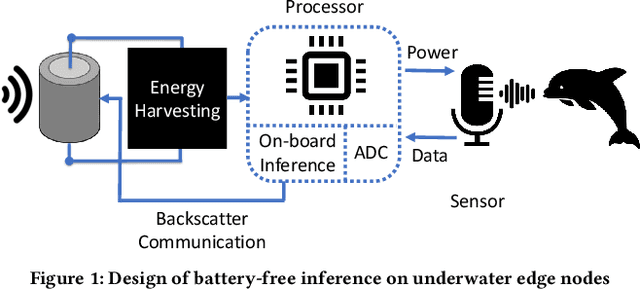

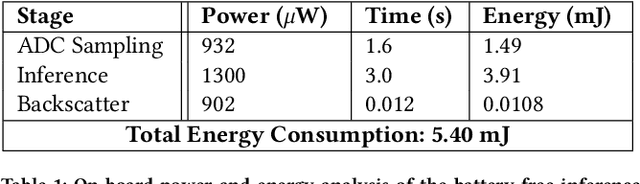

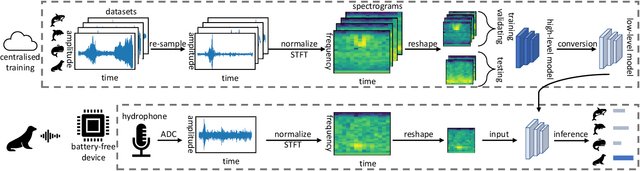

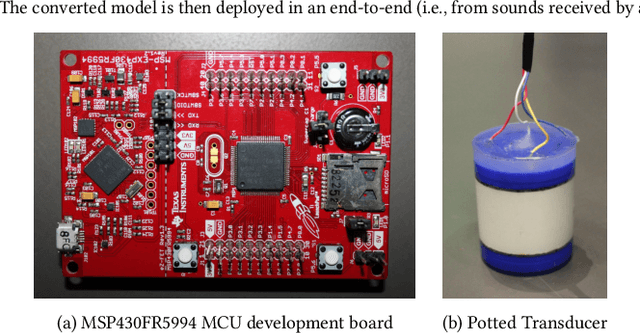

Abstract: This paper is motivated by a simple question: Can we design and build battery-free devices capable of machine learning and inference in underwater environments? An affirmative answer to this question would have significant implications for a new generation of underwater sensing and monitoring applications for environmental monitoring, scientific exploration, and climate/weather prediction. To answer this question, we explore the feasibility of bridging advances from the past decade in two fields: battery-free networking and low-power machine learning. Our exploration demonstrates that it is indeed possible to enable battery-free inference in underwater environments. We designed a device that can harvest energy from underwater sound, power up an ultra-low-power microcontroller and on-board sensor, perform local inference on sensed measurements using a lightweight Deep Neural Network, and communicate the inference result via backscatter to a receiver. We tested our prototype in an emulated marine bioacoustics application, demonstrating the potential to recognize underwater animal sounds without batteries. Through this exploration, we highlight the challenges and opportunities for making underwater battery-free inference and machine learning ubiquitous.

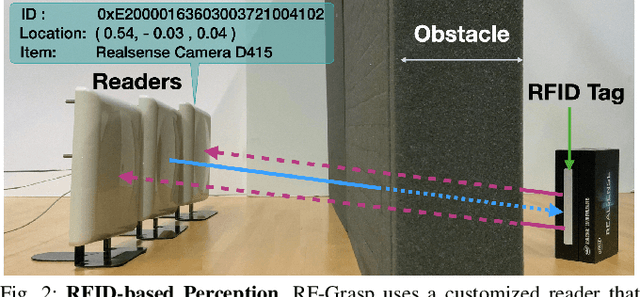

Robotic Grasping of Fully-Occluded Objects using RF Perception

Dec 31, 2020

Abstract: We present the design, implementation, and evaluation of RF-Grasp, a robotic system that can grasp fully-occluded objects in unknown and unstructured environments. Unlike prior systems that are constrained by the line-of-sight perception of vision and infrared sensors, RF-Grasp employs RF (Radio Frequency) perception to identify and locate target objects through occlusions, and perform efficient exploration and complex manipulation tasks in non-line-of-sight settings. RF-Grasp relies on an eye-in-hand camera and batteryless RFID tags attached to objects of interest. It introduces two main innovations: (1) an RF-visual servoing controller that uses the RFID's location to selectively explore the environment and plan an efficient trajectory toward an occluded target, and (2) an RF-visual deep reinforcement learning network that can learn and execute efficient, complex policies for decluttering and grasping. We implemented and evaluated an end-to-end physical prototype of RF-Grasp and a state-of-the-art baseline. We demonstrate that it improves success rate and efficiency by up to 40-50% in cluttered settings. We also demonstrate RF-Grasp in novel tasks such as mechanical search of fully-occluded objects behind obstacles, opening up new possibilities for robotic manipulation.
