Emmanouil Angelis

Do Depth-Grown Models Overcome the Curse of Depth? An In-Depth Analysis

Dec 09, 2025Abstract:Gradually growing the depth of Transformers during training can not only reduce training cost but also lead to improved reasoning performance, as shown by MIDAS (Saunshi et al., 2024). Thus far, however, a mechanistic understanding of these gains has been missing. In this work, we establish a connection to recent work showing that layers in the second half of non-grown, pre-layernorm Transformers contribute much less to the final output distribution than those in the first half - also known as the Curse of Depth (Sun et al., 2025, Csordás et al., 2025). Using depth-wise analyses, we demonstrate that growth via gradual middle stacking yields more effective utilization of model depth, alters the residual stream structure, and facilitates the formation of permutable computational blocks. In addition, we propose a lightweight modification of MIDAS that yields further improvements in downstream reasoning benchmarks. Overall, this work highlights how the gradual growth of model depth can lead to the formation of distinct computational circuits and overcome the limited depth utilization seen in standard non-grown models.

Minimum-Excess-Work Guidance

May 19, 2025Abstract:We propose a regularization framework inspired by thermodynamic work for guiding pre-trained probability flow generative models (e.g., continuous normalizing flows or diffusion models) by minimizing excess work, a concept rooted in statistical mechanics and with strong conceptual connections to optimal transport. Our approach enables efficient guidance in sparse-data regimes common to scientific applications, where only limited target samples or partial density constraints are available. We introduce two strategies: Path Guidance for sampling rare transition states by concentrating probability mass on user-defined subsets, and Observable Guidance for aligning generated distributions with experimental observables while preserving entropy. We demonstrate the framework's versatility on a coarse-grained protein model, guiding it to sample transition configurations between folded/unfolded states and correct systematic biases using experimental data. The method bridges thermodynamic principles with modern generative architectures, offering a principled, efficient, and physics-inspired alternative to standard fine-tuning in data-scarce domains. Empirical results highlight improved sample efficiency and bias reduction, underscoring its applicability to molecular simulations and beyond.

Doubly Robust Structure Identification from Temporal Data

Nov 10, 2023Abstract:Learning the causes of time-series data is a fundamental task in many applications, spanning from finance to earth sciences or bio-medical applications. Common approaches for this task are based on vector auto-regression, and they do not take into account unknown confounding between potential causes. However, in settings with many potential causes and noisy data, these approaches may be substantially biased. Furthermore, potential causes may be correlated in practical applications. Moreover, existing algorithms often do not work with cyclic data. To address these challenges, we propose a new doubly robust method for Structure Identification from Temporal Data ( SITD ). We provide theoretical guarantees, showing that our method asymptotically recovers the true underlying causal structure. Our analysis extends to cases where the potential causes have cycles and they may be confounded. We further perform extensive experiments to showcase the superior performance of our method.

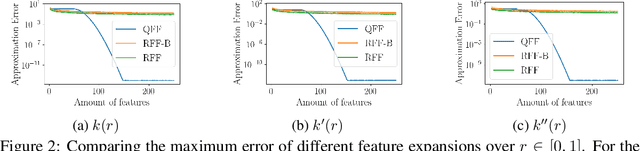

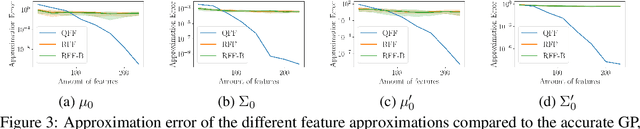

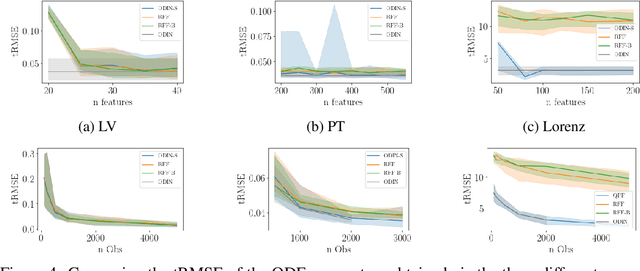

SLEIPNIR: Deterministic and Provably Accurate Feature Expansion for Gaussian Process Regression with Derivatives

Mar 05, 2020

Abstract:Gaussian processes are an important regression tool with excellent analytic properties which allow for direct integration of derivative observations. However, vanilla GP methods scale cubically in the amount of observations. In this work, we propose a novel approach for scaling GP regression with derivatives based on quadrature Fourier features. We then prove deterministic, non-asymptotic and exponentially fast decaying error bounds which apply for both the approximated kernel as well as the approximated posterior. To furthermore illustrate the practical applicability of our method, we then apply it to ODIN, a recently developed algorithm for ODE parameter inference. In an extensive experiments section, all results are empirically validated, demonstrating the speed, accuracy, and practical applicability of this approach.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge