Eduard Alarcón

Quantum Computing for Large-scale Network Optimization: Opportunities and Challenges

Sep 09, 2025

Abstract:The complexity of large-scale 6G-and-beyond networks demands innovative approaches for multi-objective optimization over vast search spaces, a task that is often intractable. Quantum computing (QC) emerges as a promising technology for efficient large-scale optimization. We present our vision of leveraging QC to tackle key classes of problems in future mobile networks. By analyzing and identifying common features, particularly their graph-centric representation, we propose a unified strategy involving QC algorithms. Specifically, we outline a methodology for optimization using quantum annealing as well as quantum reinforcement learning. Additionally, we discuss the main challenges that QC algorithms and hardware must overcome to effectively optimize future networks.
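The abstract frames network problems as graphs and names quantum annealing as one solver. Annealers typically accept problems in QUBO (quadratic unconstrained binary optimization) form, so a minimal sketch of that mapping follows. Max-Cut is used purely as an assumed stand-in graph problem (the abstract does not name specific formulations), and an exhaustive search replaces the annealer so the example runs classically on tiny graphs.

```python
from itertools import product


def maxcut_qubo(edges):
    """Upper-triangular QUBO {(i, j): coeff} whose minimum energy is -(max cut weight).

    Uses x_i in {0, 1}; edge (i, j, w) is cut when x_i + x_j - 2*x_i*x_j == 1.
    """
    Q = {}
    for i, j, w in edges:
        a, b = min(i, j), max(i, j)
        Q[(a, a)] = Q.get((a, a), 0) - w  # linear terms sit on the diagonal (x*x == x)
        Q[(b, b)] = Q.get((b, b), 0) - w
        Q[(a, b)] = Q.get((a, b), 0) + 2 * w
    return Q


def energy(Q, x):
    """QUBO energy x^T Q x for a binary tuple x."""
    return sum(c * x[i] * x[j] for (i, j), c in Q.items())


def brute_force_minimum(Q, n):
    """Exhaustive stand-in for the annealer: only feasible for very small n."""
    best = min(product((0, 1), repeat=n), key=lambda x: energy(Q, x))
    return best, energy(Q, best)


if __name__ == "__main__":
    ring = [(0, 1, 1), (1, 2, 1), (2, 3, 1), (3, 0, 1)]  # 4-node cycle, unit weights
    print(*brute_force_minimum(maxcut_qubo(ring), 4))  # -> (0, 1, 0, 1) -4
```

On hardware, the dictionary `Q` is what would be handed to the annealer; the exhaustive search grows as 2**n, which is exactly the regime where a quantum sampler is meant to help.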

Guided Graph Compression for Quantum Graph Neural Networks

Jun 11, 2025

Abstract:Graph Neural Networks (GNNs) are effective for processing graph-structured data but face challenges with large graphs due to high memory requirements and inefficient sparse matrix operations on GPUs. Quantum Computing (QC) offers a promising avenue to address these issues and inspires new algorithmic approaches. In particular, Quantum Graph Neural Networks (QGNNs) have been explored in recent literature. However, current quantum hardware limits the dimension of the data that can be effectively encoded. Existing approaches either simplify datasets manually or use artificial graph datasets. This work introduces the Guided Graph Compression (GGC) framework, which uses a graph autoencoder to reduce both the number of nodes and the dimensionality of node features. The compression is guided to enhance the performance of a downstream classification task, which can be applied either with a quantum or a classical classifier. The framework is evaluated on the Jet Tagging task, a classification problem of fundamental importance in high energy physics that involves distinguishing particle jets initiated by quarks from those by gluons. The GGC is compared against using the autoencoder as a standalone preprocessing step and against a baseline classical GNN classifier. Our numerical results demonstrate that GGC outperforms both alternatives, while also facilitating the testing of novel QGNN ansatzes on realistic datasets.

Frequency-Dependent Power Consumption Modeling of CMOS Transmitters for WNoC Architectures

May 19, 2025

Abstract:Wireless Network-on-Chip (WNoC) systems, which wirelessly interconnect the chips of a computing system, have been proposed as a complement to existing chip-to-chip wired links. However, their feasibility depends on the availability of custom-designed high-speed, tiny, ultra-efficient transceivers. This represents a challenge due to the tradeoffs between bandwidth, area, and energy efficiency that are found as frequency increases, which suggests that there is an optimal frequency region. To aid in the search for such an optimal design point, this paper presents a behavioral model that quantifies the expected power consumption of oscillators, mixers, and power amplifiers as a function of frequency. The model is built on extensive surveys of the respective sub-blocks, all based on experimental data. By putting together the models of the three sub-blocks, a comprehensive power model is obtained, which will aid in selecting the optimal operating frequency for WNoC systems.

Towards Scalable Multi-Chip Wireless Networks with Near-Field Time Reversal

Apr 26, 2024

Abstract:The concept of Wireless Network-on-Chip (WNoC) has emerged as a potential solution to the escalating communication demands of modern computing systems, owing to its low latency, versatility, and reconfigurability. However, for WNoC to fulfill its potential, it is essential to establish multiple high-speed wireless links across chips. Unfortunately, the compact and enclosed nature of computing packages introduces significant challenges in the form of Co-Channel Interference (CCI) and Inter-Symbol Interference (ISI), which not only hinder the deployment of multiple spatial channels but also severely restrict the symbol rate of each individual channel. In this paper, we posit that Time Reversal (TR) could be effective in addressing both impairments in this static scenario thanks to its spatiotemporal focusing capabilities, even in the near field. Through comprehensive full-wave simulations and bit error rate analysis in multiple scenarios and at multiple frequency bands, we provide evidence that TR can increase the symbol rate by an order of magnitude, enabling the deployment of multiple concurrent links and achieving aggregate speeds exceeding 100 Gb/s. Finally, we evaluate the impact of reducing the sampling rate of the TR filter on the achievable speeds, paving the way to practical TR-based wireless communications at the chip scale.

Circuit Partitioning for Multi-Core Quantum Architectures with Deep Reinforcement Learning

Jan 31, 2024

Abstract:Quantum computing holds immense potential for solving classically intractable problems by leveraging the unique properties of quantum mechanics. However, the scalability of quantum architectures remains a significant challenge. Multi-core quantum architectures have been proposed to address this scalability problem, giving rise to a new set of challenges in hardware, communications, and compilation, among others. One of these challenges is adapting a quantum algorithm to fit within the different cores of the quantum computer. This paper presents a novel approach to circuit partitioning using Deep Reinforcement Learning, contributing to the advancement of both quantum computing and graph partitioning. This work is the first step in integrating Deep Reinforcement Learning techniques into Quantum Circuit Mapping, opening the door to a new paradigm of solutions to such problems.
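A reinforcement-learning partitioner needs a signal telling it how good a qubit-to-core assignment is. A minimal sketch, assuming the cost is simply the number of two-qubit gates whose operands sit on different cores (each such gate would need inter-core communication), subject to a per-core capacity limit; the paper's actual reward is not given in the abstract. The exhaustive baseline illustrates why a learned policy is attractive: it enumerates `n_cores ** n_qubits` assignments.

```python
from collections import Counter
from itertools import product


def partition_cost(gates, assignment, core_capacity):
    """Count two-qubit gates whose qubits are mapped to different cores.

    gates: list of (qubit_a, qubit_b); assignment: {qubit: core}.
    Raises ValueError if any core holds more qubits than core_capacity.
    """
    load = Counter(assignment.values())
    if any(count > core_capacity for count in load.values()):
        raise ValueError("core capacity exceeded")
    return sum(1 for q0, q1 in gates if assignment[q0] != assignment[q1])


def brute_force_partition(gates, n_qubits, n_cores, core_capacity):
    """Exhaustive search over all capacity-respecting assignments (tiny circuits only)."""
    best = None
    for cores in product(range(n_cores), repeat=n_qubits):
        if max(Counter(cores).values()) > core_capacity:
            continue  # skip assignments that overload a core
        assignment = dict(enumerate(cores))
        cost = partition_cost(gates, assignment, core_capacity)
        if best is None or cost < best[0]:
            best = (cost, assignment)
    return best


if __name__ == "__main__":
    circuit = [(0, 1), (1, 2), (2, 3), (0, 1)]  # hypothetical 4-qubit gate list
    print(brute_force_partition(circuit, n_qubits=4, n_cores=2, core_capacity=2))
```

In an RL setting, the negative of `partition_cost` (or its change after each placement action) is one plausible reward shape.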

Topological Graph Signal Compression

Aug 21, 2023

Abstract:Recently emerged Topological Deep Learning (TDL) methods aim to extend current Graph Neural Networks (GNNs) by naturally processing higher-order interactions, going beyond the pairwise relations and local neighborhoods defined by graph representations. In this paper, we propose a novel TDL-based method for compressing signals over graphs, consisting of two main steps: first, disjoint sets of higher-order structures are inferred from the original signal by clustering $N$ datapoints into $K\ll N$ collections; then, a topologically inspired message-passing step obtains a compressed representation of the signal within those multi-element sets. Our results show that our framework outperforms both standard GNN and feed-forward architectures in compressing temporal link-based signals from two real-world Internet Service Provider network datasets, improving reconstruction error by $30\%$ up to $90\%$ across all evaluation scenarios. This suggests that it better captures and exploits spatial and temporal correlations over the whole graph-based network structure.

Exploration of Time Reversal for Wireless Communications within Computing Packages

Aug 11, 2023

Abstract:Wireless Network-on-Chip (WNoC) is a promising paradigm to overcome the versatility and scalability issues of conventional on-chip networks for current processor chips. However, the chip environment suffers from delay spread, which leads to intense Inter-Symbol Interference (ISI). This degrades the transmitted signal and makes it difficult to achieve the desired Bit Error Rate (BER) in this constraint-driven scenario. Time reversal (TR) is a technique that uses the multipath richness of the channel to overcome the undesired effects of the delay spread. As the flip-chip channel is static and can be characterized beforehand, in this paper we propose to apply TR to the wireless in-package channel. We evaluate the effects of this technique in time and space from an electromagnetic point of view. Furthermore, we study the effectiveness of TR in modulated data communications in terms of BER as a function of transmission rate and power. Our results show not only the spatiotemporal focusing effect of TR in a chip, which could lead to multiple spatial channels, but also that transmissions using TR outperform non-TR transmissions, BER-wise, by an order of magnitude.
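Time reversal works because the transmitter pre-filters each symbol with the time-reversed (conjugated) channel impulse response, so the end-to-end response becomes the channel's autocorrelation, whose central tap collects all multipath energy while the remaining taps (the ISI) stay comparatively small. A minimal sketch, assuming a known, static, real-valued impulse response (for complex taps the prefilter would also conjugate them); the tap values are illustrative only, not measured in-package data.

```python
def convolve(a, b):
    """Full linear convolution of two tap lists."""
    out = [0.0] * (len(a) + len(b) - 1)
    for i, x in enumerate(a):
        for j, y in enumerate(b):
            out[i + j] += x * y
    return out


def time_reversal_prefilter(h):
    """TR prefilter for a real-valued impulse response: h reversed in time.

    For complex taps this would be [c.conjugate() for c in reversed(h)].
    """
    return h[::-1]


def peak_to_rest_ratio(g):
    """Energy of the strongest tap over the energy of all others (higher means less ISI)."""
    energies = [x * x for x in g]
    peak = max(energies)
    return peak / (sum(energies) - peak)


if __name__ == "__main__":
    h = [0.5, 1.0, -0.7, 0.4, 0.3]  # illustrative multipath taps, not measured data
    g = convolve(time_reversal_prefilter(h), h)  # end-to-end response at the receiver
    print(peak_to_rest_ratio(h), peak_to_rest_ratio(g))
```

The ratio is scale-invariant, so the usual power normalization of the prefilter does not change the comparison; the temporal compression shown here is what allows shorter symbol periods.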

Toward Autonomous Reconfigurable Intelligent Surfaces Through Wireless Energy Harvesting

Aug 18, 2021

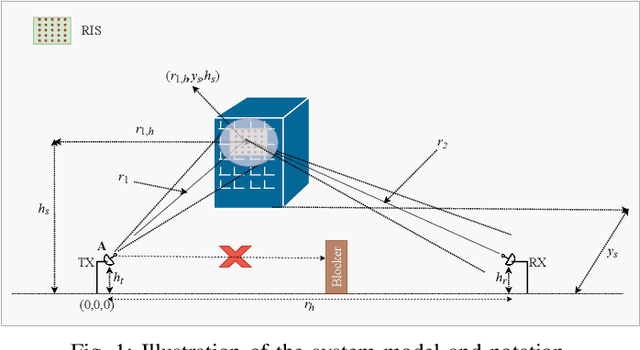

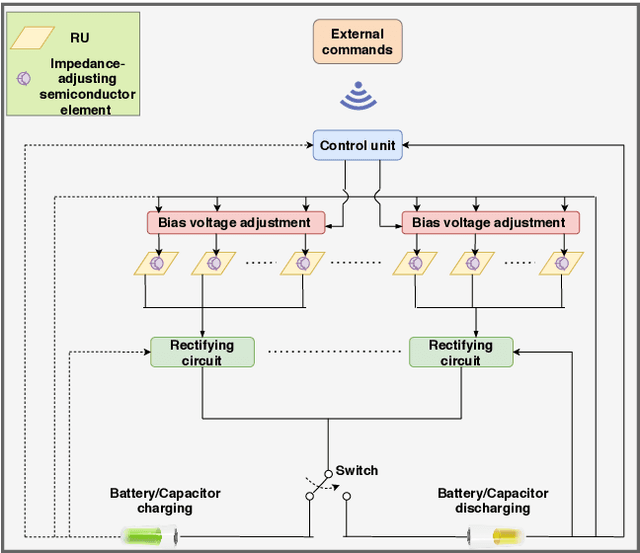

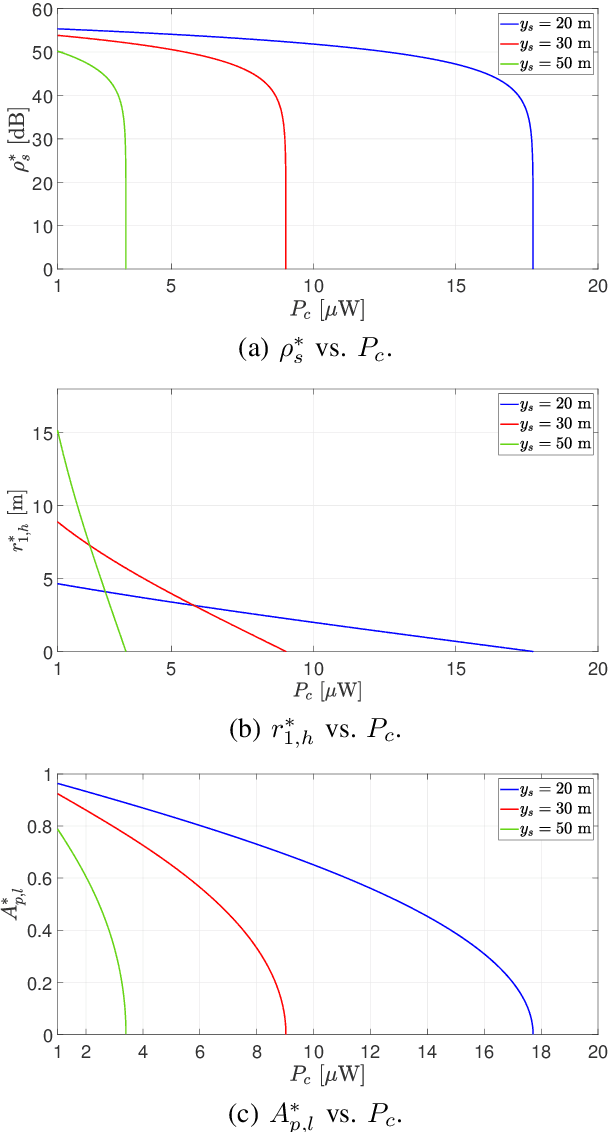

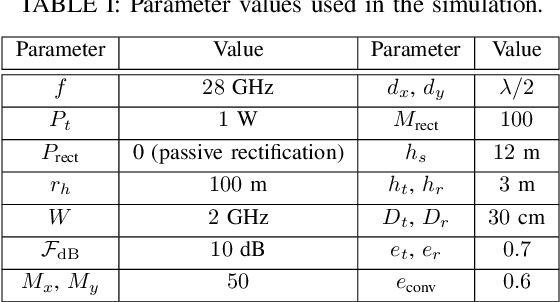

Abstract:In this work, we examine the potential of autonomous operation of a reconfigurable intelligent surface (RIS) using wireless energy harvesting from information signals. To this end, we first identify the main RIS power-consuming components and introduce a suitable power-consumption model. Subsequently, we introduce a novel RIS power-splitting architecture that enables simultaneous energy harvesting and beamsteering. Specifically, a subset of the RIS unit cells (UCs) is used for beamsteering while the remaining ones absorb energy. For the subset allocation, we propose policies obtained as solutions to two optimization problems. The first problem aims at maximizing the signal-to-noise ratio (SNR) at the receiver without violating the RIS's energy harvesting demands. The second problem aims at maximizing the RIS harvested power while ensuring an acceptable SNR at the receiver. We prove that under particular propagation conditions, some of the proposed policies deliver the optimal solution of the two problems. Furthermore, we report numerical results that reveal the efficiency of the policies relative to the optimal but very high-complexity brute-force design approach. Finally, through a case study of user tracking, we showcase that the RIS power-consumption demands can be secured by harvesting energy from information signals.
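The first optimization problem (maximize receiver SNR while meeting the harvesting demand) can be illustrated with a toy model. Assumptions, not taken from the paper: each steering unit cell adds a fixed amplitude coherently at the receiver, so SNR grows as the square of the summed amplitudes, and each absorbing cell contributes a fixed, strictly positive harvested power. `greedy_split` is a hypothetical heuristic, not one of the paper's policies; `brute_force_split` is the kind of exhaustive baseline the abstract mentions, feasible only for a handful of cells.

```python
from itertools import combinations


def snr(amplitudes, steer, noise_power=1.0):
    """Toy SNR: steering cells add coherently (phases assumed aligned at the receiver)."""
    return sum(amplitudes[i] for i in steer) ** 2 / noise_power


def harvested(powers, steer):
    """Power collected by every cell that is NOT steering."""
    return sum(p for i, p in enumerate(powers) if i not in steer)


def greedy_split(amplitudes, powers, demand):
    """Hypothetical heuristic: move cells to harvesting, lowest SNR loss per watt first.

    Returns the steering set, or None if harvesting with every cell cannot meet demand.
    """
    steer = set(range(len(amplitudes)))
    for i in sorted(steer, key=lambda c: amplitudes[c] / powers[c]):
        if harvested(powers, steer) >= demand:
            break
        steer.remove(i)
    return steer if harvested(powers, steer) >= demand else None


def brute_force_split(amplitudes, powers, demand):
    """Exhaustive search over all 2**N steering subsets (tiny N only)."""
    n = len(amplitudes)
    best = None
    for r in range(n + 1):
        for subset in combinations(range(n), r):
            steer = set(subset)
            if harvested(powers, steer) >= demand and (
                best is None or snr(amplitudes, steer) > snr(amplitudes, best)
            ):
                best = steer
    return best


if __name__ == "__main__":
    amps = [1.0, 0.8, 0.3, 0.2]    # hypothetical per-cell received amplitudes
    power = [1.0, 1.0, 0.9, 0.8]   # hypothetical per-cell harvestable power
    print(greedy_split(amps, power, 1.5), brute_force_split(amps, power, 1.5))  # -> {0, 1} {0, 1}
```

The greedy rule is not guaranteed optimal (the problem has a knapsack-like structure), which is why comparing it against the exhaustive search on small instances is informative.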

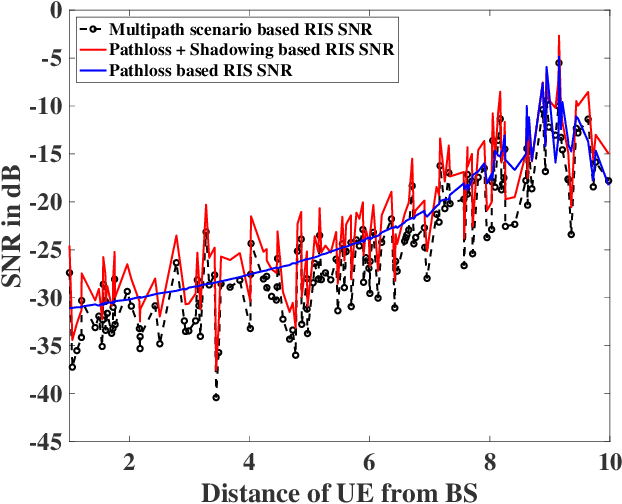

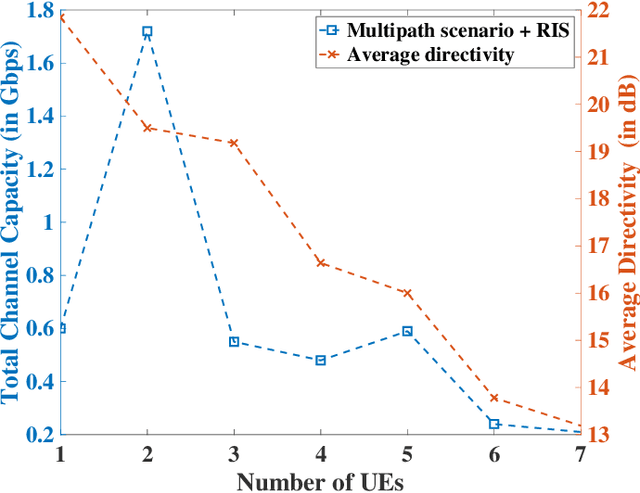

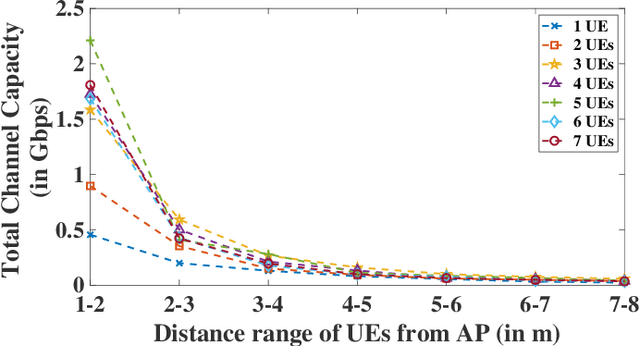

On the Enabling of Multi-user Communications with Reconfigurable Intelligent Surfaces

Jun 12, 2021

Abstract:A Reconfigurable Intelligent Surface (RIS) composed of programmable actuators is a promising technology, thanks to its capability to manipulate Electromagnetic (EM) wavefronts. In particular, RISs have the potential to provide significant performance improvements for wireless networks. However, to do so, a proper configuration of the reflection coefficients of the unit cells in the RIS is required. RISs are sophisticated platforms, so their design and fabrication complexity might be uneconomical for single-user scenarios, whereas a RIS that can serve multiple users justifies the costs. For the first time, we propose an efficient reconfiguration technique that provides a multi-beam radiation pattern. Thanks to an analytical model, the reconfiguration profile is obtained directly, in contrast to time-consuming optimization techniques. The outcome can pave the way for commercial use of multi-user communication in beyond-5G networks. We analyze the performance of our proposed RIS technology for indoor and outdoor scenarios, given the broadcast mode of operation. These scenarios encompass some of the most challenging conditions that wireless networks encounter. We show that our proposed technique provides sufficient gains in the observed channel capacity when the users are close to the RIS in the indoor office environment scenario. Further, we report more than one order of magnitude increase in the system throughput in the outdoor environment. The results show that a RIS able to communicate with multiple users can empower wireless networks with great capacity.
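The abstract does not spell out its analytical model, but one textbook way to obtain a multi-beam configuration without iterative optimization is to superpose the linear phase gradients that would each steer a single beam toward one user, and keep only the phase of the sum. The sketch below assumes a one-dimensional array of unit-amplitude cells, half-wavelength spacing, and normal incidence; it is illustrative, not the authors' method.

```python
import cmath
import math


def multibeam_phases(n_cells, beam_angles_deg, spacing_wl=0.5):
    """Phase-only profile from superposing one linear phase gradient per beam."""
    k_d = 2 * math.pi * spacing_wl  # k * d in radians (d given in wavelengths)
    phases = []
    for n in range(n_cells):
        total = sum(
            cmath.exp(-1j * k_d * n * math.sin(math.radians(a))) for a in beam_angles_deg
        )
        phases.append(cmath.phase(total))  # unit-amplitude cells: keep the phase only
    return phases


def array_factor(phases, angle_deg, spacing_wl=0.5):
    """Magnitude of the far-field array factor of the configured cells at angle_deg."""
    k_d = 2 * math.pi * spacing_wl
    s = math.sin(math.radians(angle_deg))
    return abs(sum(cmath.exp(1j * (p + k_d * n * s)) for n, p in enumerate(phases)))


if __name__ == "__main__":
    phases = multibeam_phases(32, [20.0, -30.0])  # hypothetical user directions
    for angle in (20.0, -30.0, 0.0):
        print(angle, round(array_factor(phases, angle), 1))
```

With 32 cells steering toward +20° and -30°, each beam keeps roughly 2/π of the single-beam peak (which would be 32), while broadside stays low; discarding the amplitude of the superposition also produces weaker spurious lobes at other angles.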

Computing Graph Neural Networks: A Survey from Algorithms to Accelerators

Sep 30, 2020

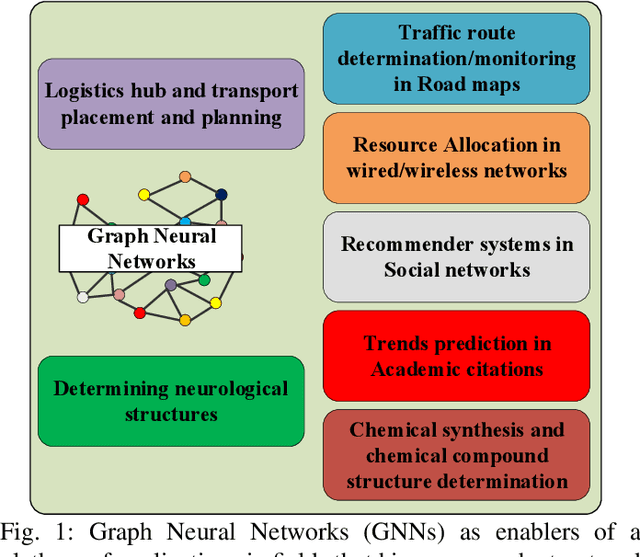

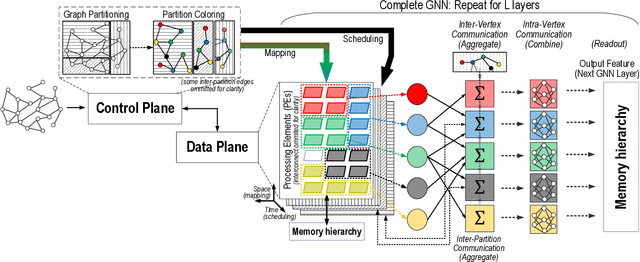

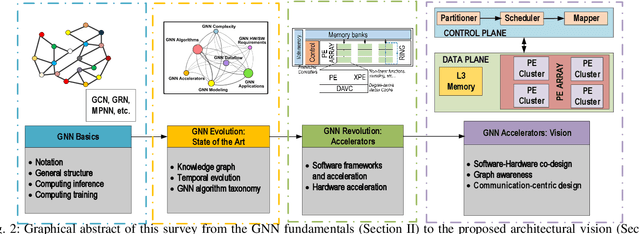

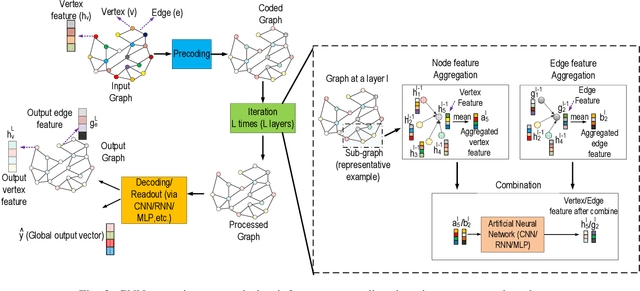

Abstract:Graph Neural Networks (GNNs) have exploded onto the machine learning scene in recent years owing to their capability to model and learn from graph-structured data. Such an ability has strong implications in a wide variety of fields whose data is inherently relational, for which conventional neural networks do not perform well. Indeed, as recent reviews can attest, research in the area of GNNs has grown rapidly and has led to the development of a variety of GNN algorithm variants as well as to the exploration of groundbreaking applications in chemistry, neurology, electronics, or communication networks, among others. At the current stage of research, however, the efficient processing of GNNs is still an open challenge for several reasons. Besides their novelty, GNNs are hard to compute due to their dependence on the input graph, their combination of dense and very sparse operations, or the need to scale to huge graphs in some applications. In this context, this paper aims to make two main contributions. On the one hand, a review of the field of GNNs is presented from the perspective of computing. This includes a brief tutorial on the GNN fundamentals, an overview of the evolution of the field in the last decade, and a summary of operations carried out in the multiple phases of different GNN algorithm variants. On the other hand, an in-depth analysis of current software and hardware acceleration schemes is provided, from which a hardware-software, graph-aware, and communication-centric vision for GNN accelerators is distilled.
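The mix of dense and very sparse operations is visible in a single layer: aggregation gathers neighbor features through a sparse adjacency structure (irregular, graph-dependent memory access), and combination multiplies by a dense weight matrix. A minimal pure-Python sketch, assuming mean aggregation with self-loops followed by a ReLU (a simplified GCN-style layer, not a specific variant from the survey); CSR is used because it is a common storage format for sparse adjacency.

```python
def csr_from_edges(n, edges):
    """CSR (indptr, indices) of an undirected graph with self-loops added."""
    neighbours = [{i} for i in range(n)]
    for u, v in edges:
        neighbours[u].add(v)
        neighbours[v].add(u)
    indptr, indices = [0], []
    for nbrs in neighbours:
        indices.extend(sorted(nbrs))
        indptr.append(len(indices))
    return indptr, indices


def aggregate(indptr, indices, X):
    """Sparse phase: mean of neighbor feature vectors (irregular, graph-dependent reads)."""
    out = []
    for i in range(len(indptr) - 1):
        nbrs = indices[indptr[i]:indptr[i + 1]]
        out.append([sum(X[j][f] for j in nbrs) / len(nbrs) for f in range(len(X[0]))])
    return out


def combine(H, W):
    """Dense phase: H @ W followed by ReLU (regular, compute-bound)."""
    return [
        [max(0.0, sum(h[k] * W[k][c] for k in range(len(W)))) for c in range(len(W[0]))]
        for h in H
    ]


def gcn_layer(indptr, indices, X, W):
    """One layer = sparse aggregation, then dense combination."""
    return combine(aggregate(indptr, indices, X), W)


if __name__ == "__main__":
    indptr, indices = csr_from_edges(3, [(0, 1), (1, 2)])  # path graph 0-1-2
    X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]               # toy node features
    W = [[1.0, 0.0], [0.0, 1.0]]                           # identity weights
    print(gcn_layer(indptr, indices, X, W))
```

The aggregation loop's cost and access pattern depend entirely on the graph, while the combination is a regular matrix product; this asymmetry is what makes a single accelerator design hard to balance.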
