Denis Repin

Automated Deep Abstractions for Stochastic Chemical Reaction Networks

Jan 30, 2020

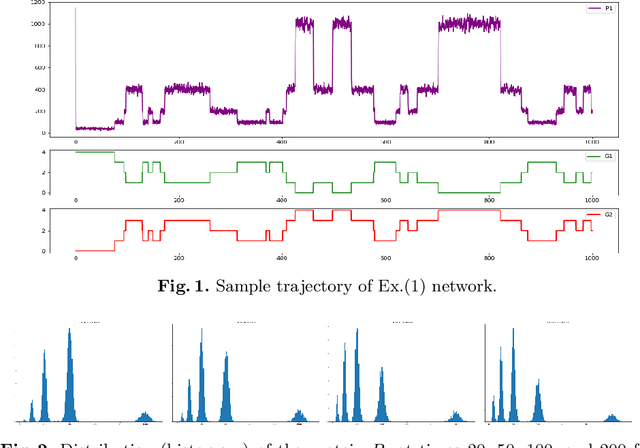

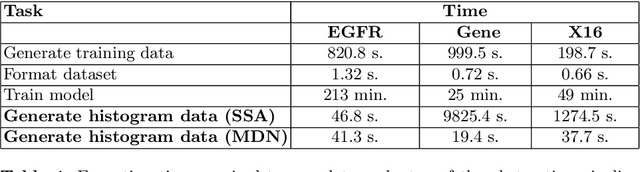

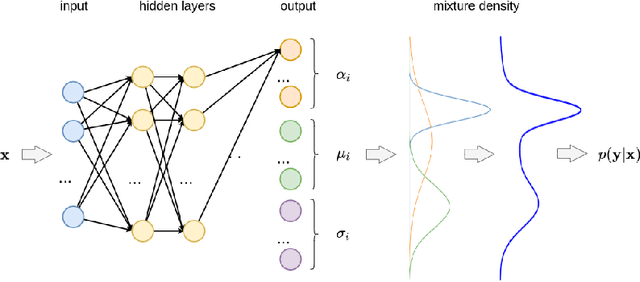

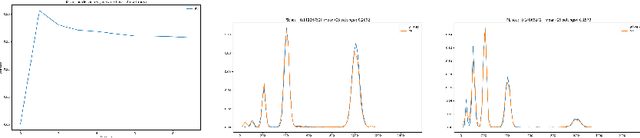

Abstract: Predicting stochastic cellular dynamics as emerging from the mechanistic models of molecular interactions is a long-standing challenge in systems biology: low-level chemical reaction network (CRN) models give rise to a high-dimensional continuous-time Markov chain (CTMC) which is computationally demanding and often prohibitive to analyse in practice. A recently proposed abstraction method uses deep learning to replace this CTMC with a discrete-time continuous-space process, by training a mixture density deep neural network with traces sampled at regular time intervals (which can be obtained either by simulating a given CRN or as time-series data from experiment). The major advantage of such an abstraction is that it produces a computational model that is dramatically cheaper to execute, while preserving the statistical features of the training data. In general, the abstraction accuracy improves with the amount of training data. However, depending on the CRN, the overall quality of the method (the efficiency gain and abstraction accuracy) will also depend on the choice of neural network architecture, given by hyper-parameters such as the layer types and the connections between them. As a consequence, in practice, the modeller would have to find a suitable architecture manually, for each given CRN, through a tedious and time-consuming trial-and-error cycle. In this paper, we propose to further automatise deep abstractions for stochastic CRNs, through learning the optimal neural network architecture along with learning the transition kernel of the abstract process. Automated search of the architecture makes the method applicable directly to any given CRN, which is time-saving for deep learning experts and crucial for non-specialists. We implement the method and demonstrate its performance on a number of representative CRNs with multi-modal emergent phenotypes.

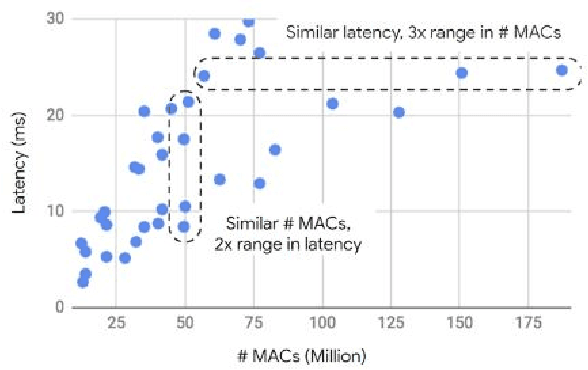

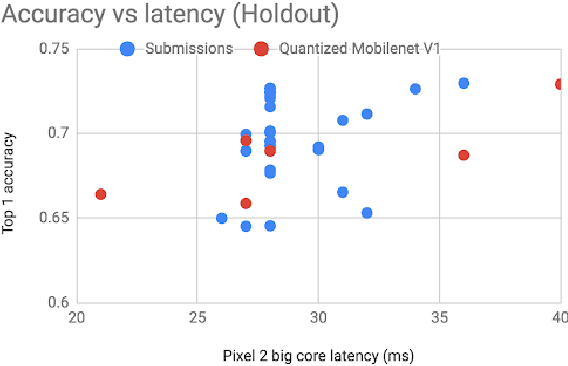

Low-Power Computer Vision: Status, Challenges, Opportunities

Apr 15, 2019

Abstract: Computer vision has achieved impressive progress in recent years. Meanwhile, mobile phones have become the primary computing platforms for millions of people. In addition to mobile phones, many autonomous systems rely on visual data for making decisions, and some of these systems have limited energy (such as unmanned aerial vehicles, also called drones, and mobile robots). These systems rely on batteries, so energy efficiency is critical. This article serves two main purposes: (1) Examine the state of the art in low-power solutions for detecting objects in images. Since 2015, the IEEE Annual International Low-Power Image Recognition Challenge (LPIRC) has been held to identify the most energy-efficient computer vision solutions. This article summarizes the 2018 winners' solutions. (2) Suggest directions for research as well as opportunities for low-power computer vision.

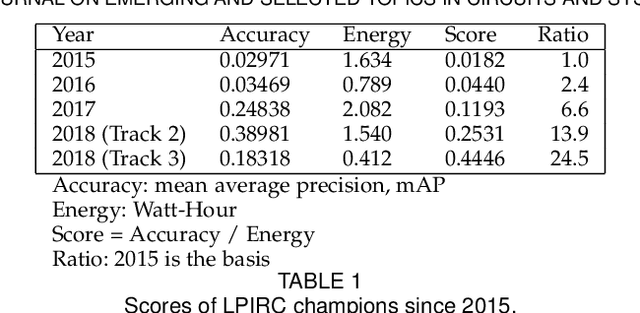

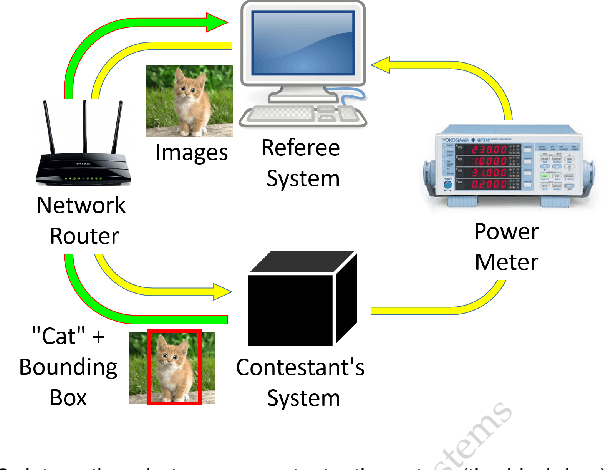

2018 Low-Power Image Recognition Challenge

Oct 03, 2018

Abstract: The Low-Power Image Recognition Challenge (LPIRC, https://rebootingcomputing.ieee.org/lpirc) is an annual competition started in 2015. The competition identifies the best technologies that can classify and detect objects in images efficiently (short execution time and low energy consumption) and accurately (high precision). Over the four years, the winners' scores have improved more than 24 times. As computer vision is widely used in many battery-powered systems (such as drones and mobile phones), the need for low-power computer vision will become increasingly important. This paper summarizes LPIRC 2018 by describing the three different tracks and the winners' solutions.
