Chenyu Hu

FAROS: Robust Federated Learning with Adaptive Scaling against Backdoor Attacks

Jan 05, 2026

Abstract:Federated Learning (FL) enables multiple clients to collaboratively train a shared model without exposing local data. However, backdoor attacks pose a significant threat to FL. These attacks aim to implant a stealthy trigger into the global model, causing it to misclassify inputs that contain the trigger while behaving normally on benign data. Although pre-aggregation detection is a main defense direction, existing state-of-the-art defenses often rely on fixed defense parameters. This reliance exposes them to single-point-of-failure risks and makes them less effective against sophisticated attackers. To address these limitations, we propose FAROS, an enhanced FL framework that incorporates Adaptive Differential Scaling (ADS) and Robust Core-set Computing (RCC). The ADS mechanism dynamically adjusts the defense's sensitivity based on the dispersion of the gradients uploaded by clients in each round. This allows it to counter attackers who strategically shift between stealthiness and effectiveness. Furthermore, RCC mitigates the risk of single-point failure by computing the centroid of a core set comprising the clients with the highest confidence. We conducted extensive experiments across various datasets, models, and attack scenarios. The results demonstrate that our method outperforms current defenses in both attack success rate and main task accuracy.
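The abstract does not give formulas for ADS or RCC, so the following is only a toy sketch of the general idea: filter client updates against a centroid computed from a small "core set", with a threshold that depends on how dispersed the updates are in the current round. Everything in it is an assumption made for illustration and not the FAROS algorithm: the function name `filter_updates`, the parameters `core_frac` and `base_scale`, the use of distance to the coordinate-wise median as a stand-in for "confidence", and the rule that tightens the threshold as dispersion grows.

```python
import math
from statistics import median


def l2(a, b):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def mean_vec(vectors):
    """Coordinate-wise mean of a list of vectors."""
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]


def filter_updates(updates, core_frac=0.5, base_scale=3.0):
    """Hypothetical pre-aggregation filter (illustrative, not FAROS itself).

    Returns (accepted_indices, centroid, threshold).
    """
    # Provisional reference point: coordinate-wise median of all updates.
    ref = [median(col) for col in zip(*updates)]
    d_ref = [l2(u, ref) for u in updates]

    # Core set (assumption): the clients closest to the reference,
    # used here as a proxy for "highest confidence".
    k = max(1, int(len(updates) * core_frac))
    core = sorted(range(len(updates)), key=lambda i: d_ref[i])[:k]
    centroid = mean_vec([updates[i] for i in core])

    # Distances to the core-set centroid and their robust spread (MAD).
    d = [l2(u, centroid) for u in updates]
    med = median(d)
    mad = median(abs(x - med) for x in d)

    # Adaptive scale (assumption): relative dispersion shrinks the margin.
    dispersion = mad / med if med > 0 else 0.0
    scale = base_scale / (1.0 + dispersion)
    threshold = med + scale * mad

    accepted = [i for i, x in enumerate(d) if x <= threshold]
    return accepted, centroid, threshold


# Four similar updates and one outlier standing in for a malicious client.
updates = [[1.0, 1.0], [1.1, 0.9], [0.9, 1.1], [1.0, 1.2], [10.0, -10.0]]
accepted, centroid, threshold = filter_updates(updates)
```

In this toy round the four similar updates pass the filter and the outlier at index 4 is dropped. A real defense would operate on high-dimensional model updates and would need the confidence measure and scaling rule defined in the paper.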

Hierarchical Direction Perception via Atomic Dot-Product Operators for Rotation-Invariant Point Clouds Learning

Nov 11, 2025

Abstract:Point cloud processing has become a cornerstone technology in many 3D vision tasks. However, arbitrary rotations introduce variations in point cloud orientations, posing a long-standing challenge for effective representation learning. The core of this issue is the disruption of the point cloud's intrinsic directional characteristics caused by rotational perturbations. Recent methods attempt to implicitly model rotational equivariance and invariance, preserving directional information and propagating it into deep semantic spaces. Yet, they often fall short of fully exploiting the multiscale directional nature of point clouds to enhance feature representations. To address this, we propose the Direction-Perceptive Vector Network (DiPVNet). At its core is an atomic dot-product operator that simultaneously encodes directional selectivity and rotation invariance--endowing the network with both rotational symmetry modeling and adaptive directional perception. At the local level, we introduce a Learnable Local Dot-Product (L2DP) Operator, which enables interactions between a center point and its neighbors to adaptively capture the non-uniform local structures of point clouds. At the global level, we leverage generalized harmonic analysis to prove that the dot-product between point clouds and spherical sampling vectors is equivalent to a direction-aware spherical Fourier transform (DASFT). This leads to the construction of a global directional response spectrum for modeling holistic directional structures. We rigorously prove the rotation invariance of both operators. Extensive experiments on challenging scenarios involving noise and large-angle rotations demonstrate that DiPVNet achieves state-of-the-art performance on point cloud classification and segmentation tasks. Our code is available at https://github.com/wxszreal0/DiPVNet.
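The elementary fact behind dot-product based rotation invariance can be checked numerically: if a point neighborhood and a reference direction are rotated by the same rotation, the dot products between neighbor offsets and that direction do not change. The sketch below only illustrates this property. It is not the L2DP operator or the DASFT from the paper, and the helper `local_dot_features` and the assumption that the direction vector co-rotates with the cloud are hypothetical choices made for the example.

```python
import math


def matmul(a, b):
    """Product of two 3x3 matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def matvec(m, v):
    """Apply a 3x3 matrix to a 3-vector."""
    return [sum(m[i][k] * v[k] for k in range(3)) for i in range(3)]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def rot_z(t):
    c, s = math.cos(t), math.sin(t)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def rot_x(t):
    c, s = math.cos(t), math.sin(t)
    return [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]


def local_dot_features(center, neighbors, direction):
    """Dot product of each neighbor offset (neighbor - center) with a direction."""
    return [dot([n - c for n, c in zip(nb, center)], direction)
            for nb in neighbors]


center = [0.2, -0.1, 0.5]
neighbors = [[0.3, 0.0, 0.4], [0.1, -0.3, 0.9], [0.6, 0.2, 0.5]]
direction = [0.0, 0.6, 0.8]  # assumed to rotate together with the cloud

# A large-angle rotation composed from two axis rotations.
R = matmul(rot_z(2.1), rot_x(-1.3))

before = local_dot_features(center, neighbors, direction)
after = local_dot_features(matvec(R, center),
                           [matvec(R, nb) for nb in neighbors],
                           matvec(R, direction))
max_diff = max(abs(a - b) for a, b in zip(before, after))
```

Here `max_diff` is at the level of floating-point rounding, because a rotation matrix R satisfies (Ra)·(Rb) = a·b. If `direction` were held fixed instead of rotated, the features would change with the rotation.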

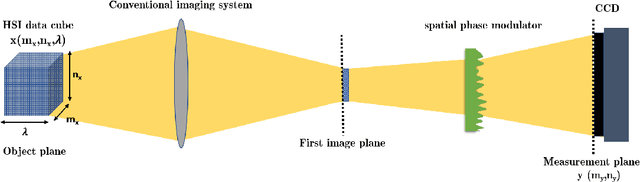

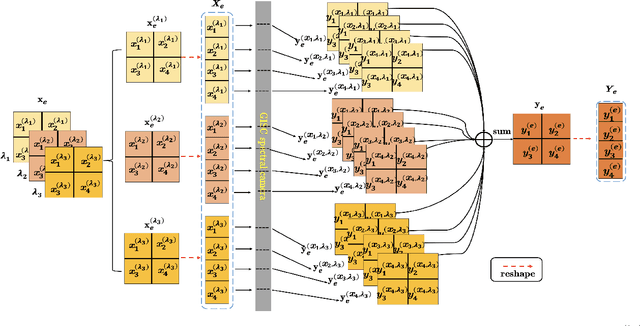

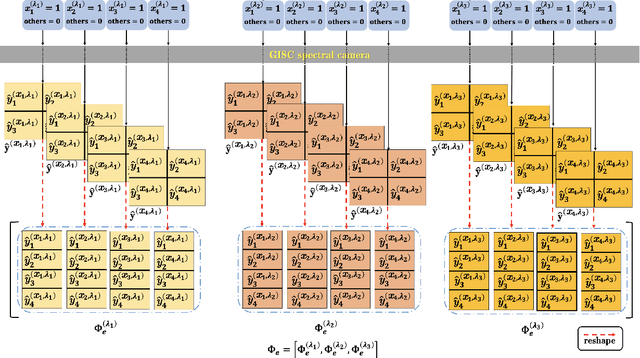

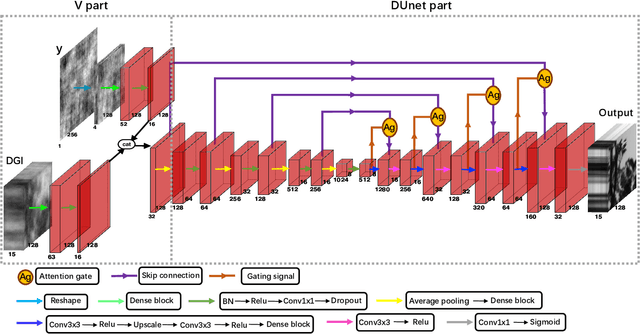

Hyperspectral image reconstruction for spectral camera based on ghost imaging via sparsity constraints using V-DUnet

Jun 28, 2022

Abstract:The spectral camera based on ghost imaging via sparsity constraints (GISC spectral camera) obtains three-dimensional (3D) hyperspectral information from two-dimensional (2D) compressive measurements in a single shot, and has attracted much attention in recent years. However, its imaging quality and the real-time performance of its reconstruction still need to be improved. Recently, deep learning has shown great potential for improving both reconstruction quality and reconstruction speed in computational imaging. When applying deep learning to the GISC spectral camera, several challenges need to be solved: 1) how to deal with the large amount of 3D hyperspectral data, 2) how to reduce the influence of the uncertainty in the random reference measurements, and 3) how to improve the reconstructed image quality as far as possible. In this paper, we present an end-to-end V-DUnet for the reconstruction of 3D hyperspectral data in the GISC spectral camera. To reduce the influence of the uncertainty in the measurement matrix and to enhance the reconstructed image quality, both the differential ghost imaging results and the detected measurements are fed into the network as inputs. Compared with compressive sensing algorithms such as PICHCS and TwIST, it not only significantly improves the imaging quality with high noise immunity, but also reduces the reconstruction time by more than two orders of magnitude.
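The abstract mentions differential ghost imaging (DGI) results as one of the network inputs. As a rough illustration of what such a result is, the sketch below applies the textbook DGI correlation, ⟨B·I(x)⟩ − (⟨B⟩/⟨R⟩)·⟨R·I(x)⟩, to a one-dimensional toy scene, where B is the bucket signal and R is the total intensity of each reference pattern. The scene, the uniform random patterns, the noiseless bucket model, and the function name `dgi_reconstruct` are assumptions for this example. It does not model the GISC spectral camera's calibrated measurement matrix or the V-DUnet itself.

```python
import random


def dgi_reconstruct(patterns, bucket):
    """Differential ghost imaging estimate for a 1D scene.

    patterns: list of reference intensity patterns I_i(x)
    bucket:   list of bucket signals B_i, one per pattern
    """
    m = len(patterns)
    n = len(patterns[0])
    totals = [sum(p) for p in patterns]  # R_i: total reference intensity
    ratio = (sum(bucket) / m) / (sum(totals) / m)  # <B> / <R>

    estimate = []
    for x in range(n):
        mean_bi = sum(b * p[x] for b, p in zip(bucket, patterns)) / m
        mean_ri = sum(r * p[x] for r, p in zip(totals, patterns)) / m
        estimate.append(mean_bi - ratio * mean_ri)
    return estimate


rng = random.Random(0)            # fixed seed for reproducibility
scene = [0, 1, 1, 0, 0, 1, 0, 0]  # toy transmission profile (assumed)

# Random reference patterns and the corresponding noiseless bucket signals.
patterns = [[rng.random() for _ in scene] for _ in range(2000)]
bucket = [sum(t * i for t, i in zip(scene, p)) for p in patterns]

estimate = dgi_reconstruct(patterns, bucket)
```

With enough patterns, the entries of `estimate` at transmitting pixels are clearly larger than those at opaque pixels, up to a constant offset and scale. A learned reconstruction would take such a coarse estimate as input rather than as its final output.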
