Axel Thielscher

End-to-end Cortical Surface Reconstruction from Clinical Magnetic Resonance Images

May 20, 2025

Abstract: Surface-based cortical analysis is valuable for a variety of neuroimaging tasks, such as spatial normalization, parcellation, and gray matter (GM) thickness estimation. However, most tools for estimating cortical surfaces work exclusively on scans with at least 1 mm isotropic resolution and are tuned to a specific magnetic resonance (MR) contrast, often T1-weighted (T1w). This precludes application to most clinical MR scans, which are very heterogeneous in terms of contrast and resolution. Here, we use synthetic domain-randomized data to train the first neural network for explicit estimation of cortical surfaces from scans of any contrast and resolution, without retraining. Our method deforms a template mesh to the white matter (WM) surface, which guarantees topological correctness. This mesh is further deformed to estimate the GM surface. We compare our method to recon-all-clinical (RAC), an implicit surface reconstruction method which is currently the only other tool capable of processing heterogeneous clinical MR scans, on ADNI and a large clinical dataset (n=1,332). We show an approximately 50% reduction in cortical thickness error (from 0.50 to 0.24 mm) with respect to RAC and better recovery of the aging-related cortical thinning patterns detected by FreeSurfer on high-resolution T1w scans. Our method enables fast and accurate surface reconstruction of clinical scans, allowing studies (1) with sample sizes far beyond what is feasible in a research setting, and (2) of clinical populations that are difficult to enroll in research studies. The code is publicly available at https://github.com/simnibs/brainnet.
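The template-deformation idea in this abstract can be illustrated with a toy sketch. It is not the brainnet implementation: the octahedron template, the random displacement standing in for a network prediction, the fixed 0.25 outward offset, and the helper `euler_characteristic` are all assumptions made for illustration. The point it shows is that moving vertices while leaving the face list untouched cannot change the mesh connectivity, so the Euler characteristic of the template is carried over to both surfaces. It does not by itself rule out self-intersections.

```python
import numpy as np

def euler_characteristic(n_vertices, faces):
    """Return V - E + F for a triangle mesh given its face index array."""
    edges = set()
    for a, b, c in faces:
        for u, v in ((a, b), (b, c), (c, a)):
            edges.add((min(u, v), max(u, v)))
    return n_vertices - len(edges) + len(faces)

# Hypothetical template: an octahedron, which is topologically a sphere.
template = np.array(
    [[1, 0, 0], [-1, 0, 0], [0, 1, 0], [0, -1, 0], [0, 0, 1], [0, 0, -1]],
    dtype=float,
)
faces = np.array(
    [[0, 2, 4], [2, 1, 4], [1, 3, 4], [3, 0, 4],
     [2, 0, 5], [1, 2, 5], [3, 1, 5], [0, 3, 5]]
)

rng = np.random.default_rng(0)

# Stand-in for a predicted displacement field: small random vertex offsets.
white = template + 0.1 * rng.standard_normal(template.shape)

# Second deformation (assumed here: a fixed 0.25 push away from the origin).
directions = white / np.linalg.norm(white, axis=1, keepdims=True)
pial = white + 0.25 * directions

# Vertex-wise distance as a simplified thickness measure; this is possible
# because both surfaces share the same vertex indexing.
thickness = np.linalg.norm(pial - white, axis=1)

chi_template = euler_characteristic(len(template), faces)
chi_white = euler_characteristic(len(white), faces)
chi_pial = euler_characteristic(len(pial), faces)
```

Because the two surfaces share vertices and faces, a per-vertex thickness estimate falls out of the correspondence directly, which is one practical benefit of an explicit mesh over an implicit surface representation.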

SynthSeg: Domain Randomisation for Segmentation of Brain MRI Scans of any Contrast and Resolution

Jul 20, 2021

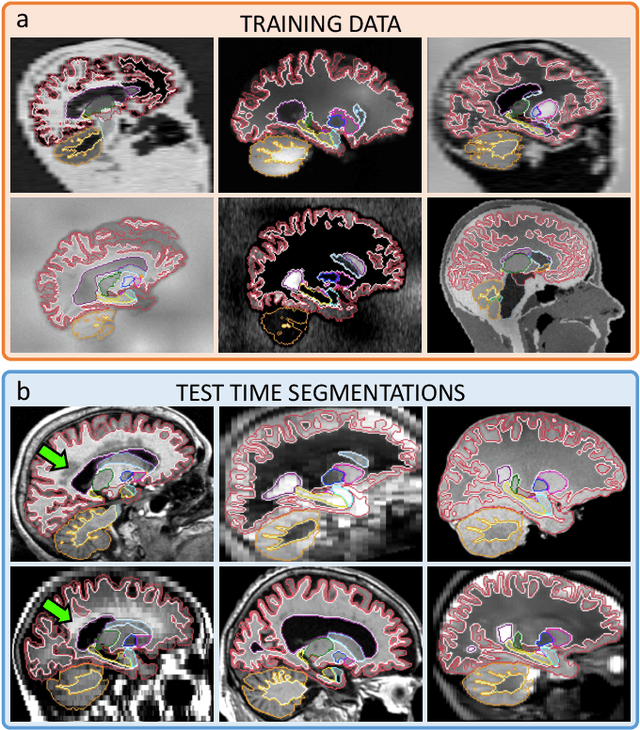

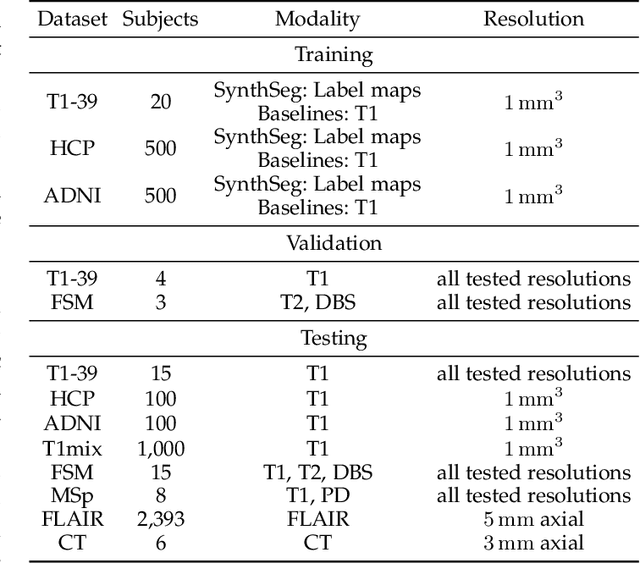

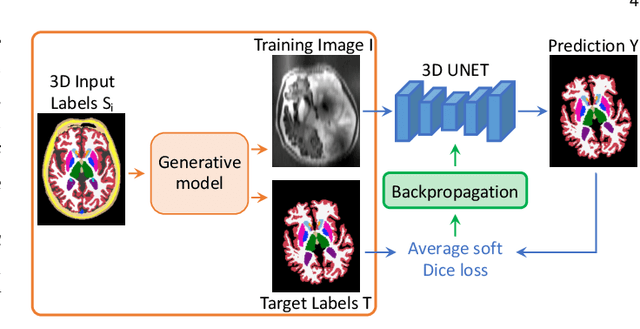

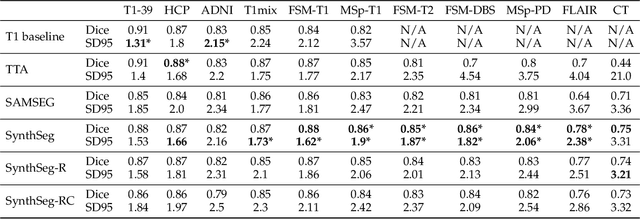

Abstract: Despite advances in data augmentation and transfer learning, convolutional neural networks (CNNs) have difficulties generalising to unseen target domains. When applied to segmentation of brain MRI scans, CNNs are highly sensitive to changes in resolution and contrast: even within the same MR modality, decreases in performance can be observed across datasets. We introduce SynthSeg, the first segmentation CNN agnostic to brain MRI scans of any contrast and resolution. SynthSeg is trained with synthetic data sampled from a generative model inspired by Bayesian segmentation. Crucially, we adopt a domain randomisation strategy where we fully randomise the generation parameters to maximise the variability of the training data. Consequently, SynthSeg can segment preprocessed and unpreprocessed real scans of any target domain, without retraining or fine-tuning. Because SynthSeg only requires segmentations to be trained (no images), it can learn from label maps obtained automatically from existing datasets of different populations (e.g., with atrophy and lesions), thus achieving robustness to a wide range of morphological variability. We demonstrate SynthSeg on 5,500 scans of 6 modalities and 10 resolutions, where it exhibits unparalleled generalisation compared to supervised CNNs, test time adaptation, and Bayesian segmentation. The code and trained model are available at https://github.com/BBillot/SynthSeg.
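A minimal sketch of the domain randomisation idea described in this abstract, assuming a heavily simplified generative model: each label gets a Gaussian intensity distribution whose mean and standard deviation are drawn at random, followed by a random gamma transform. This is not the SynthSeg code; the function name `synthesize_image`, the sampling ranges, and the toy label map are hypothetical, and the real pipeline includes further steps (for example spatial deformation and resolution simulation) that are omitted here.

```python
import numpy as np

def synthesize_image(label_map, rng):
    """Draw one synthetic image from a label map with randomised contrast."""
    labels = np.unique(label_map)

    # Randomise the per-label intensity model (ranges are assumptions).
    means = rng.uniform(0.0, 255.0, size=labels.size)
    stds = rng.uniform(1.0, 25.0, size=labels.size)

    image = np.zeros(label_map.shape, dtype=float)
    for lab, mu, sigma in zip(labels, means, stds):
        mask = label_map == lab
        image[mask] = rng.normal(mu, sigma, size=int(mask.sum()))

    # Rescale to [0, 1], then apply a random gamma to vary the contrast curve.
    image = (image - image.min()) / (image.max() - image.min())
    gamma = np.exp(rng.normal(0.0, 0.5))
    return image ** gamma

# Toy label map with three labels: background, an outer block, an inner block.
label_map = np.zeros((16, 16, 16), dtype=int)
label_map[4:12, 4:12, 4:12] = 1
label_map[6:10, 6:10, 6:10] = 2

rng = np.random.default_rng(0)
image_a = synthesize_image(label_map, rng)
image_b = synthesize_image(label_map, rng)
```

Each call yields a different contrast for the same anatomy, while the label map stays fixed and serves as the training target. That pairing is what allows training from segmentations alone, without real images.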
