Anuva Agarwal

Grounding Complex Navigational Instructions Using Scene Graphs

Jun 03, 2021

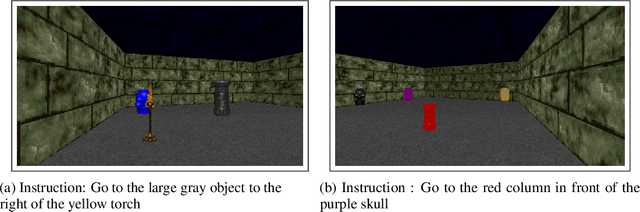

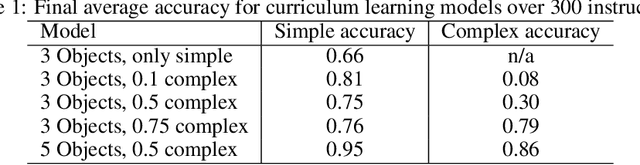

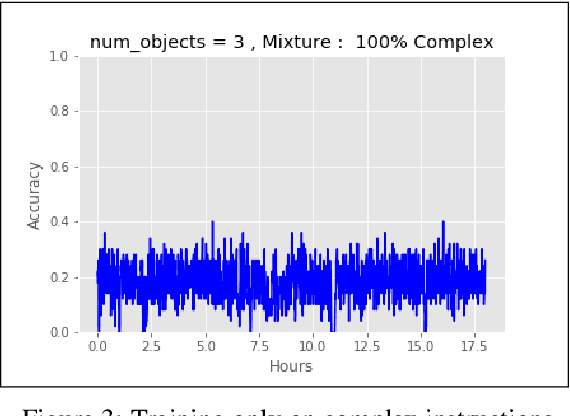

Abstract: Training a reinforcement learning agent to carry out natural language instructions is limited by the available supervision, i.e., knowing when the instruction has been carried out. We adapt the CLEVR visual question answering dataset to generate complex natural language navigation instructions and accompanying scene graphs, yielding an environment-agnostic supervised dataset. To demonstrate the use of this dataset, we map the scenes to the VizDoom environment and use the architecture in \citet{gatedattention} to train an agent to carry out these more complex language instructions.

Interactive Image Generation Using Scene Graphs

May 09, 2019

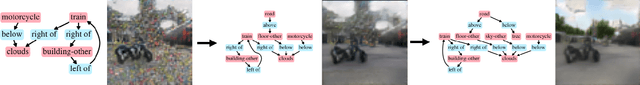

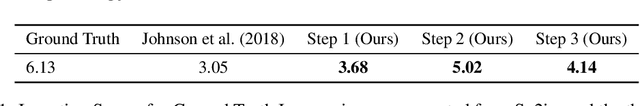

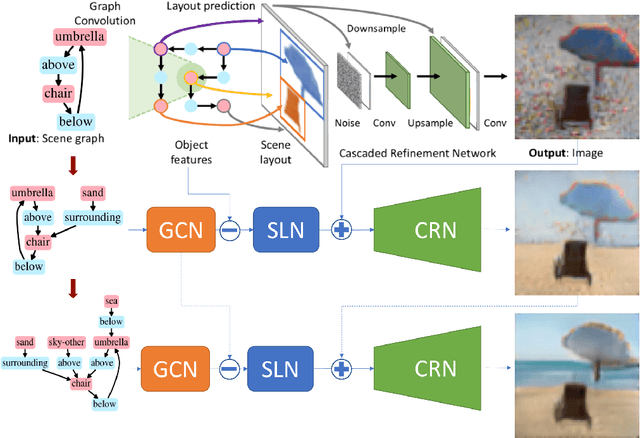

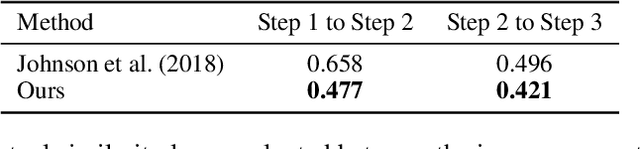

Abstract: Recent years have witnessed exciting developments in generating images from scene-based text descriptions. These approaches have primarily focused on generating images from a static text description and are limited to generating images in a single pass. They are unable to generate an image interactively from an incrementally additive text description, which is a more intuitive process and closer to the way we describe an image. We propose a method to generate an image incrementally based on a sequence of graphs of scene descriptions (scene graphs). We propose a recurrent network architecture that preserves the image content generated in previous steps and modifies the cumulative image according to the newly provided scene information. Our model uses Graph Convolutional Networks (GCN) to handle variable-sized scene graphs, along with generative adversarial image translation networks to generate realistic multi-object images without needing any intermediate supervision during training. We experiment with the COCO-Stuff dataset, which has multi-object images along with annotations describing the visual scene, and show that our model significantly outperforms other approaches on the same dataset in generating visually consistent images for incrementally growing scene graphs.

ExCL: Extractive Clip Localization Using Natural Language Descriptions

Apr 04, 2019

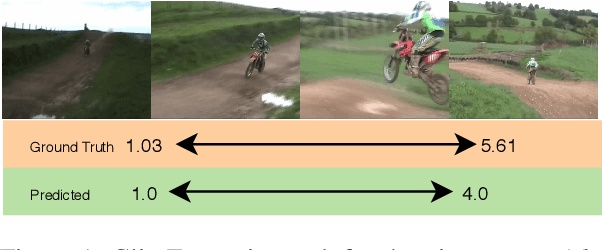

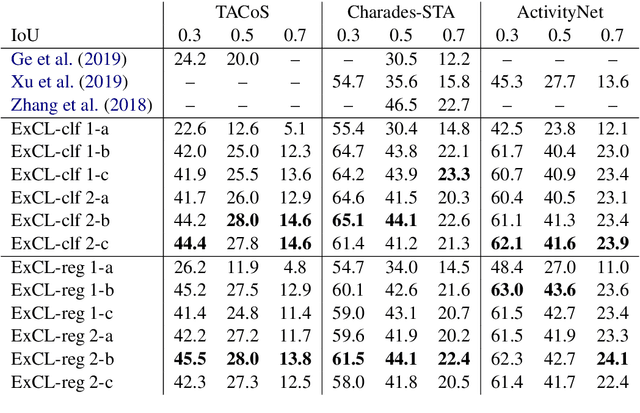

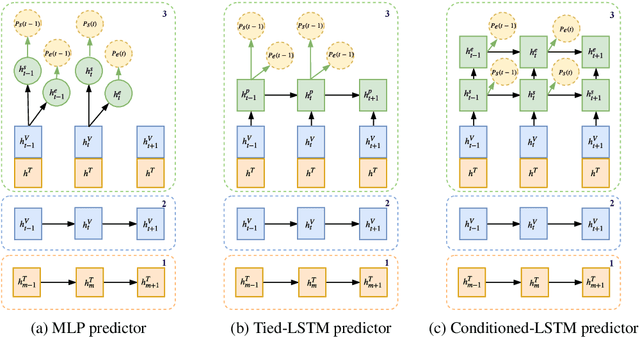

Abstract: The task of retrieving clips within videos based on a given natural language query requires cross-modal reasoning over multiple frames. Prior approaches such as sliding window classifiers are inefficient, while text-clip similarity driven ranking-based approaches such as segment proposal networks are far more complicated. To select the most relevant video clip corresponding to the given text description, we propose a novel extractive approach that predicts the start and end frames by leveraging cross-modal interactions between the text and video, which removes the need to retrieve and re-rank multiple proposal segments. Using recurrent networks, we encode the two modalities into a joint representation, which is then used in different variants of start-end frame predictor networks. Through extensive experimentation and ablative analysis, we demonstrate that our simple and elegant approach significantly outperforms the state of the art on two datasets and has comparable performance on a third.
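
To make the extractive idea concrete, here is a minimal sketch of one plausible decoding step: given per-frame start and end scores, pick the clip whose combined score is highest, subject to the start not coming after the end. This is an illustration only, not the paper's implementation. The function name `best_span` and the score lists are hypothetical, and the predictor networks that would produce such scores are not reproduced here.

```python
from typing import List, Tuple


def best_span(start_scores: List[float], end_scores: List[float]) -> Tuple[int, int]:
    """Return (start, end) maximizing start_scores[s] + end_scores[e] with s <= e.

    Hypothetical helper: the scores stand in for the outputs of a
    start-end frame predictor, one value per video frame.
    """
    if not start_scores or len(start_scores) != len(end_scores):
        raise ValueError("score lists must be non-empty and of equal length")

    best = (0, 0)
    best_score = float("-inf")
    best_start = 0  # index of the highest start score seen up to frame e

    # Single pass: for each candidate end frame, pair it with the best
    # start frame at or before it, so the constraint s <= e always holds.
    for e in range(len(end_scores)):
        if start_scores[e] > start_scores[best_start]:
            best_start = e
        score = start_scores[best_start] + end_scores[e]
        if score > best_score:
            best_score = score
            best = (best_start, e)
    return best


if __name__ == "__main__":
    # Toy scores for a four-frame video (made-up values).
    print(best_span([0.1, 2.0, 0.3, 0.0], [0.0, 0.1, 1.5, 0.2]))
```

Because a single start and end are selected directly from frame-level scores, no set of candidate segments has to be generated and re-ranked, which is the contrast with proposal-based methods that the abstract draws.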
