Multimodal Integration of Human-Like Attention in Visual Question Answering

Sep 27, 2021 · Ekta Sood, Fabian Kögel, Philipp Müller, Dominike Thomas, Mihai Bâce, Andreas Bulling

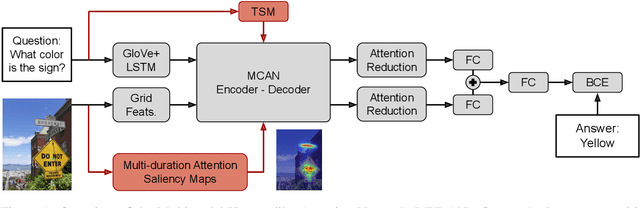

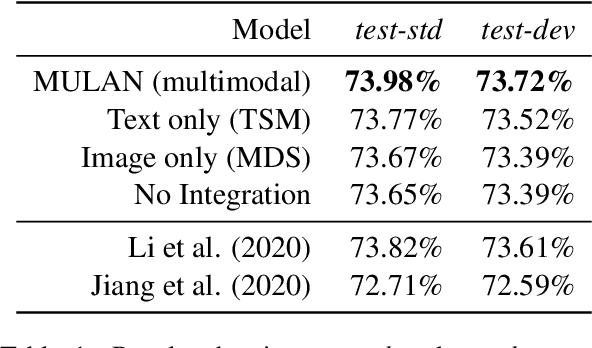

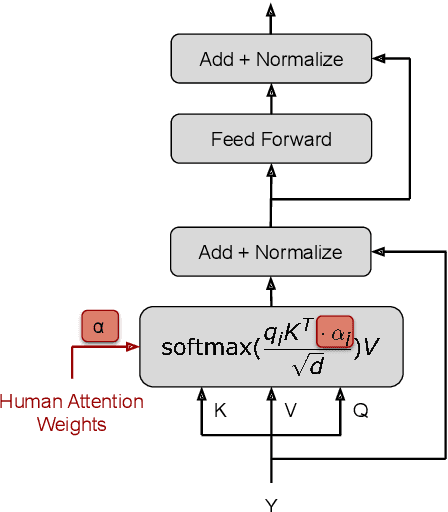

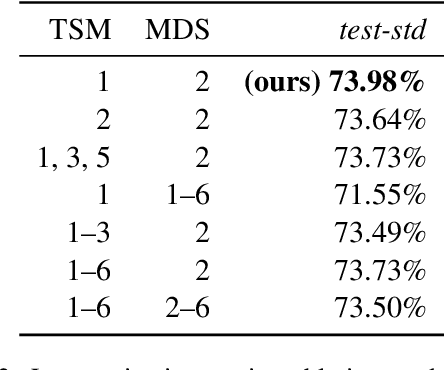

Human-like attention as a supervisory signal to guide neural attention has shown significant promise but is currently limited to uni-modal integration - even for inherently multimodal tasks such as visual question answering (VQA). We present the Multimodal Human-like Attention Network (MULAN) - the first method for multimodal integration of human-like attention on image and text during training of VQA models. MULAN integrates attention predictions from two state-of-the-art text and image saliency models into neural self-attention layers of a recent transformer-based VQA model. Through evaluations on the challenging VQAv2 dataset, we show that MULAN achieves a new state-of-the-art performance of 73.98% accuracy on test-std and 73.72% on test-dev and, at the same time, has approximately 80% fewer trainable parameters than prior work. Overall, our work underlines the potential of integrating multimodal human-like and neural attention for VQA.
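As a rough illustration of what feeding a saliency prediction into an attention layer can look like, the sketch below rescales softmax attention weights by a per-token saliency score and renormalises them. The abstract does not state MULAN's actual integration rule, so the multiplicative form and the names `softmax` and `saliency_weighted_attention` are assumptions made purely for illustration.

```python
import math
from typing import List, Sequence


def softmax(scores: Sequence[float]) -> List[float]:
    """Numerically stable softmax over a list of attention logits."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def saliency_weighted_attention(
    scores: Sequence[float], saliency: Sequence[float]
) -> List[float]:
    """Rescale attention weights by saliency and renormalise to sum to 1.

    Assumption: multiplicative integration after the softmax. This is one
    plausible scheme, not necessarily the one used by MULAN.
    """
    if len(scores) != len(saliency):
        raise ValueError("scores and saliency must have the same length")
    weights = softmax(scores)
    scaled = [w * s for w, s in zip(weights, saliency)]
    total = sum(scaled)
    if total <= 0.0:
        raise ValueError("saliency must give positive mass to at least one token")
    return [v / total for v in scaled]
```

With uniform logits the output simply follows the saliency distribution, which makes the effect of the human-like signal easy to inspect in isolation.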

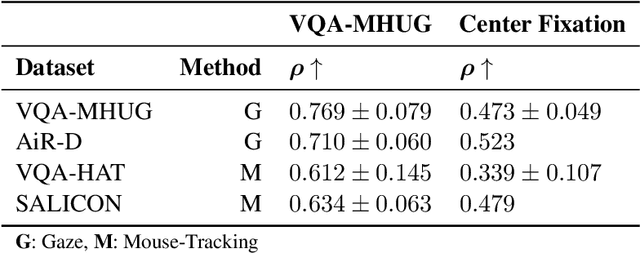

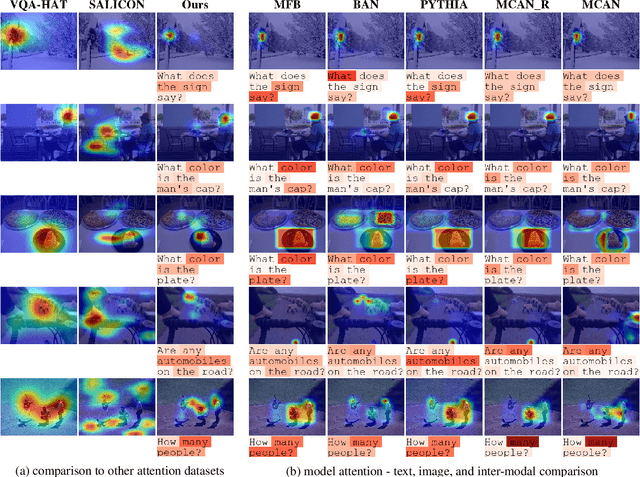

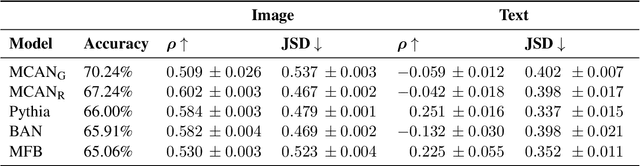

VQA-MHUG: A Gaze Dataset to Study Multimodal Neural Attention in Visual Question Answering

Sep 27, 2021 · Ekta Sood, Fabian Kögel, Florian Strohm, Prajit Dhar, Andreas Bulling

We present VQA-MHUG - a novel 49-participant dataset of multimodal human gaze on both images and questions during visual question answering (VQA), collected using a high-speed eye tracker. We use our dataset to analyze the similarity between human and neural attentive strategies learned by five state-of-the-art VQA models: Modular Co-Attention Network (MCAN) with either grid or region features, Pythia, Bilinear Attention Network (BAN), and the Multimodal Factorized Bilinear Pooling Network (MFB). While prior work has focused on studying the image modality, our analyses show - for the first time - that for all models, higher correlation with human attention on text is a significant predictor of VQA performance. This finding points to a potential for improving VQA performance and, at the same time, calls for further research on neural text attention mechanisms and their integration into architectures for vision and language tasks, including but potentially also beyond VQA.
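To make "correlation with human attention" concrete, the sketch below compares a human attention vector over question tokens with a model's attention vector. The abstract does not name the metric, so Spearman's rank correlation is an assumption here, and the function names are hypothetical.

```python
import math
from typing import List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """Return 1-based ranks, giving tied values the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        shared = (start + end) / 2.0 + 1.0  # mean rank of the tied group
        for k in range(start, end + 1):
            ranks[order[k]] = shared
        start = end + 1
    return ranks


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation of two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0.0 or var_y == 0.0:
        raise ValueError("correlation is undefined for constant input")
    return cov / math.sqrt(var_x * var_y)


def spearman(human: Sequence[float], neural: Sequence[float]) -> float:
    """Spearman rank correlation between human and neural attention."""
    if len(human) != len(neural) or len(human) < 2:
        raise ValueError("inputs must have equal length of at least 2")
    return pearson(average_ranks(human), average_ranks(neural))
```

A rank-based measure only asks whether the model and the human order the tokens similarly, so it is insensitive to how peaked either distribution is.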

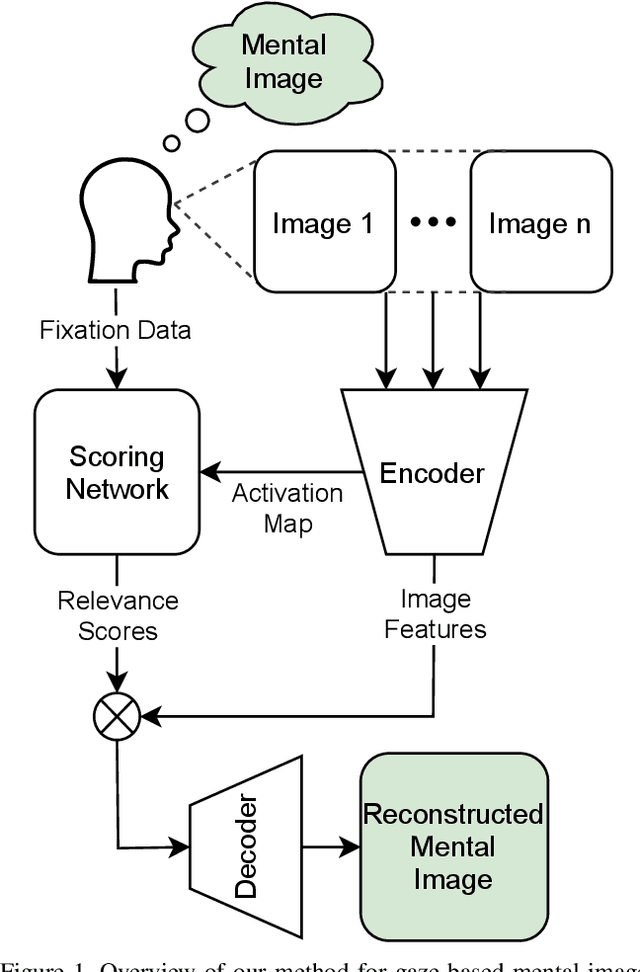

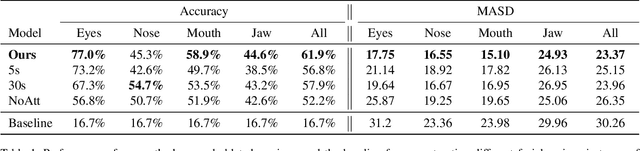

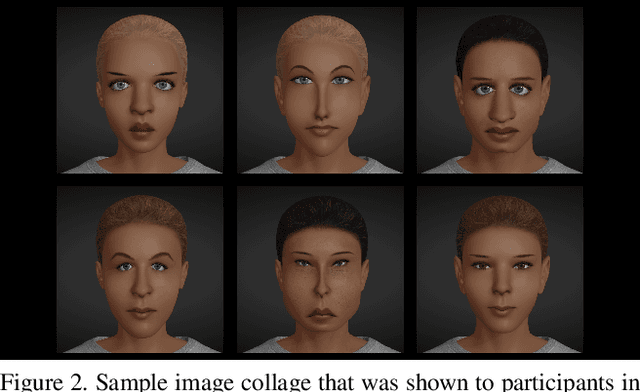

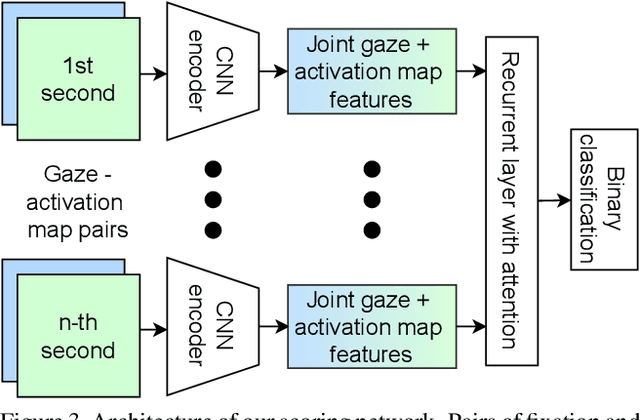

Neural Photofit: Gaze-based Mental Image Reconstruction

Aug 17, 2021 · Florian Strohm, Ekta Sood, Sven Mayer, Philipp Müller, Mihai Bâce, Andreas Bulling

We propose a novel method that leverages human fixations to visually decode the image a person has in mind into a photofit (facial composite). Our method combines three neural networks: an encoder, a scoring network, and a decoder. The encoder extracts image features and predicts a neural activation map for each face looked at by a human observer. A neural scoring network compares the human and neural attention and predicts a relevance score for each extracted image feature. Finally, image features are aggregated into a single feature vector as a linear combination of all features weighted by relevance, which a decoder then decodes into the final photofit. We train the neural scoring network on a novel dataset containing gaze data of 19 participants looking at collages of synthetic faces. We show that our method significantly outperforms a mean baseline predictor and report on a human study that shows that we can decode photofits that are visually plausible and close to the observer's mental image.
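The aggregation step described above, a linear combination of per-face features weighted by relevance, can be sketched as follows. Normalising the relevance scores so they sum to 1 is an assumption, as is the function name `aggregate_features`; the abstract only states that the combination is weighted by relevance.

```python
from typing import List, Sequence


def aggregate_features(
    features: Sequence[Sequence[float]], relevance: Sequence[float]
) -> List[float]:
    """Combine feature vectors into one vector: sum_i w_i * f_i.

    Assumption: the weights w_i are the relevance scores divided by
    their sum, so the result is a weighted average of the inputs.
    """
    if not features or len(features) != len(relevance):
        raise ValueError("need one relevance score per feature vector")
    total = sum(relevance)
    if total <= 0.0:
        raise ValueError("relevance scores must have a positive sum")
    dim = len(features[0])
    combined = [0.0] * dim
    for vector, score in zip(features, relevance):
        if len(vector) != dim:
            raise ValueError("all feature vectors must share one dimension")
        weight = score / total
        for j, value in enumerate(vector):
            combined[j] += weight * value
    return combined
```

For example, two orthogonal unit features with relevance 3 and 1 yield a vector that leans three times as strongly towards the first feature.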

Improving Natural Language Processing Tasks with Human Gaze-Guided Neural Attention

Oct 27, 2020 · Ekta Sood, Simon Tannert, Philipp Mueller, Andreas Bulling

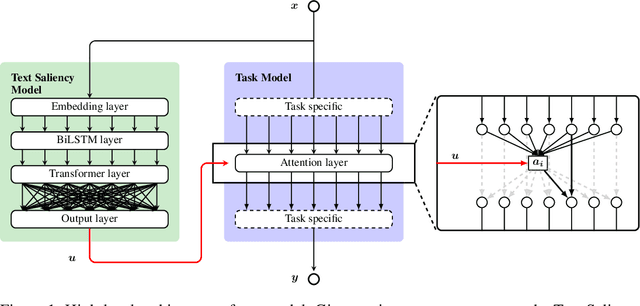

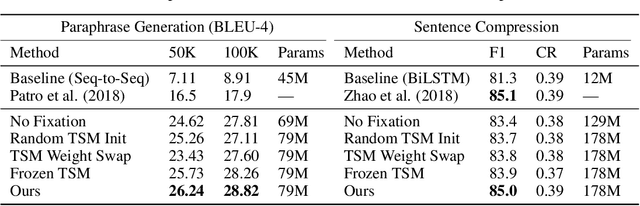

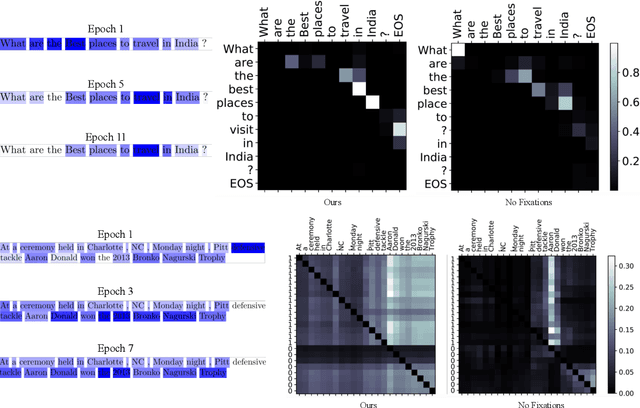

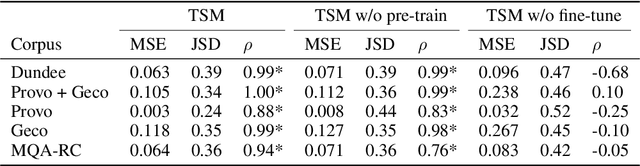

A lack of corpora has so far limited advances in integrating human gaze data as a supervisory signal in neural attention mechanisms for natural language processing (NLP). We propose a novel hybrid text saliency model (TSM) that, for the first time, combines a cognitive model of reading with explicit human gaze supervision in a single machine learning framework. On four different corpora we demonstrate that our hybrid TSM duration predictions are highly correlated with human gaze ground truth. We further propose a novel joint modeling approach to integrate TSM predictions into the attention layer of a network designed for a specific upstream NLP task without the need for any task-specific human gaze data. We demonstrate that our joint model outperforms the state of the art in paraphrase generation on the Quora Question Pairs corpus by more than 10% in BLEU-4 and achieves state-of-the-art performance for sentence compression on the challenging Google Sentence Compression corpus. As such, our work introduces a practical approach for bridging between data-driven and cognitive models and demonstrates a new way to integrate human gaze-guided neural attention into NLP tasks.

Interpreting Attention Models with Human Visual Attention in Machine Reading Comprehension

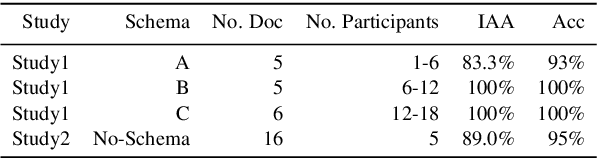

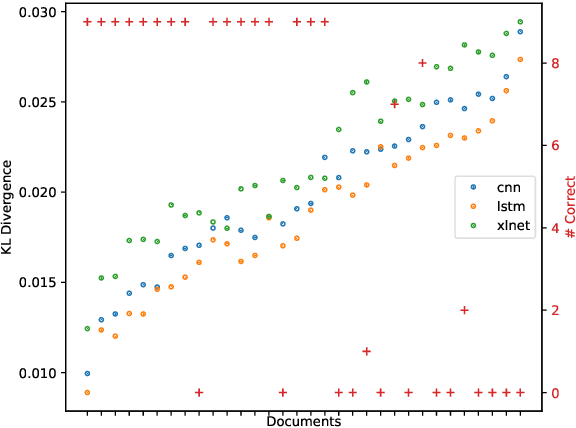

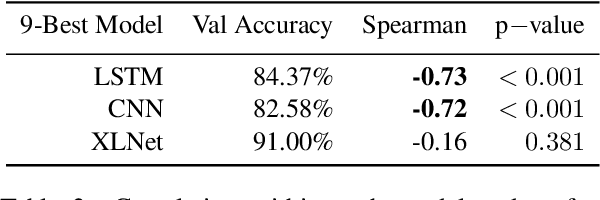

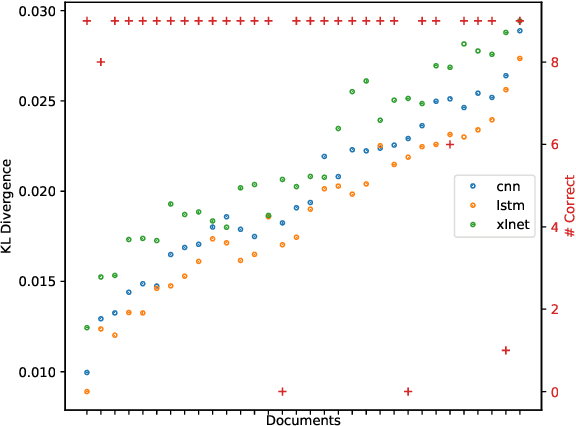

Oct 27, 2020 · Ekta Sood, Simon Tannert, Diego Frassinelli, Andreas Bulling, Ngoc Thang Vu

While neural networks with attention mechanisms have achieved superior performance on many natural language processing tasks, it remains unclear to what extent learned attention resembles human visual attention. In this paper, we propose a new method that leverages eye-tracking data to investigate the relationship between human visual attention and neural attention in machine reading comprehension. To this end, we introduce a novel 23-participant eye-tracking dataset - MQA-RC, in which participants read movie plots and answered pre-defined questions. We compare state-of-the-art networks based on long short-term memory (LSTM), convolutional neural network (CNN), and XLNet Transformer architectures. We find that higher similarity to human attention correlates significantly with performance for the LSTM and CNN models. However, we show that this relationship does not hold for the XLNet models - despite the fact that XLNet performs best on this challenging task. Our results suggest that different architectures seem to learn rather different neural attention strategies and that similarity of neural to human attention does not guarantee best performance.

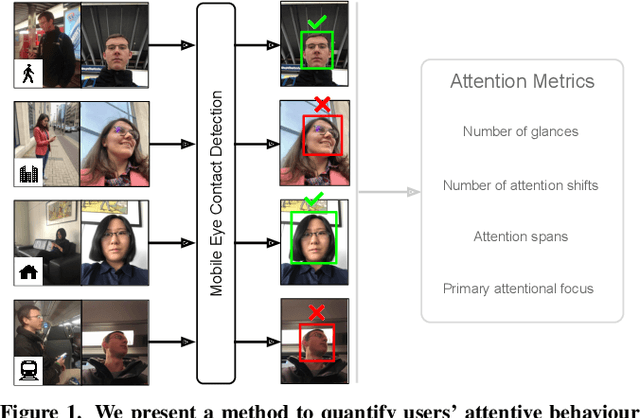

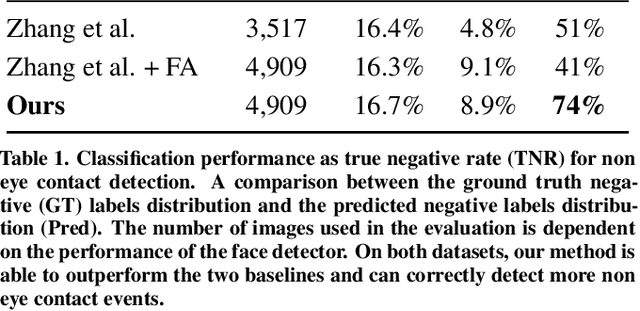

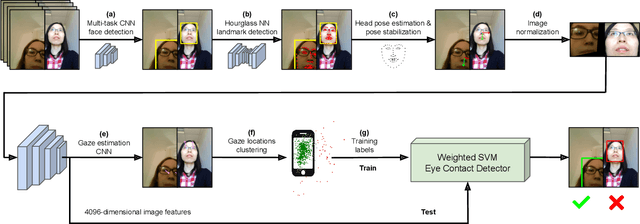

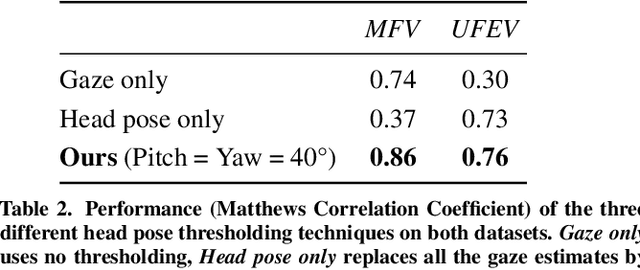

Accurate and Robust Eye Contact Detection During Everyday Mobile Device Interactions

Jul 25, 2019 · Mihai Bâce, Sander Staal, Andreas Bulling

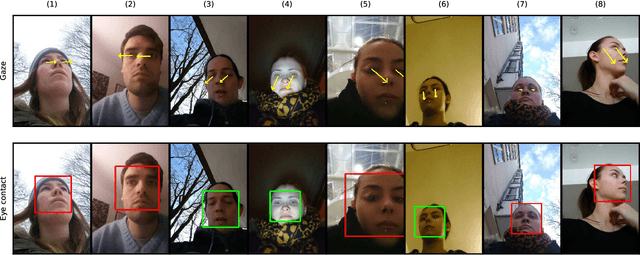

Quantification of human attention is key to several tasks in mobile human-computer interaction (HCI), such as predicting user interruptibility, estimating noticeability of user interface content, or measuring user engagement. Previous work studying mobile attentive behaviour required special-purpose eye tracking equipment or constrained users' mobility. We propose a novel method to sense and analyse visual attention on mobile devices during everyday interactions. We demonstrate the capabilities of our method on the sample task of eye contact detection, which has recently attracted increasing research interest in mobile HCI. Our method builds on a state-of-the-art method for unsupervised eye contact detection and extends it to address challenges specific to mobile interactive scenarios. Through evaluation on two current datasets, we demonstrate significant performance improvements for eye contact detection across mobile devices, users, or environmental conditions. Moreover, we discuss how our method enables the calculation of additional attention metrics that, for the first time, enable researchers from different domains to study and quantify attention allocation during mobile interactions in the wild.

How far are we from quantifying visual attention in mobile HCI?

Jul 25, 2019 · Mihai Bâce, Sander Staal, Andreas Bulling

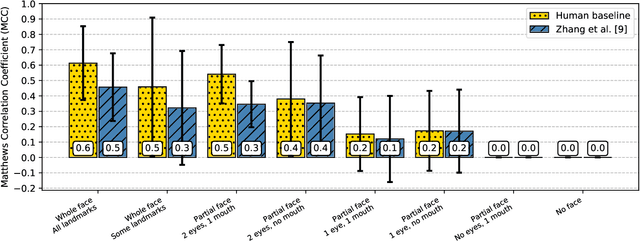

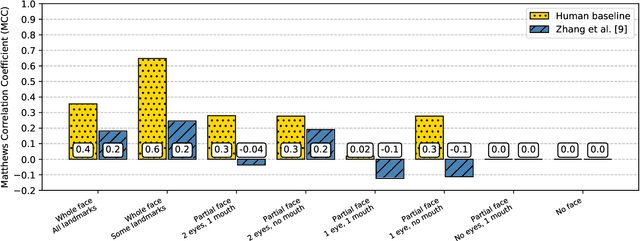

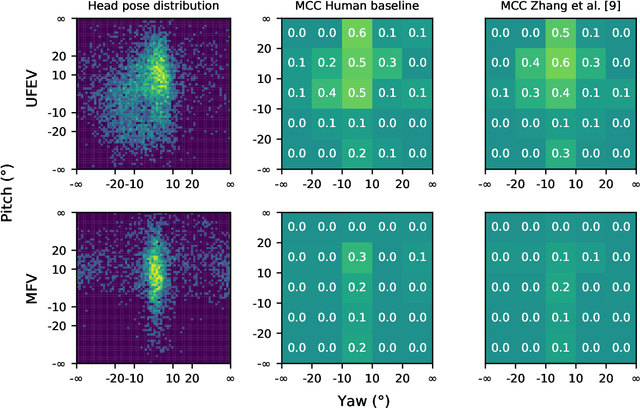

With an ever-increasing number of mobile devices competing for our attention, quantifying when, how often, or for how long users visually attend to their devices has emerged as a core challenge in mobile human-computer interaction. Encouraged by recent advances in automatic eye contact detection using machine learning and device-integrated cameras, we provide a fundamental investigation into the feasibility of quantifying visual attention during everyday mobile interactions. We identify core challenges and sources of errors associated with sensing attention on mobile devices in the wild, including the impact of face and eye visibility, the importance of robust head pose estimation, and the need for accurate gaze estimation. Based on this analysis, we propose future research directions and discuss how eye contact detection represents the foundation for exciting new applications towards next-generation pervasive attentive user interfaces.

Learning to Find Eye Region Landmarks for Remote Gaze Estimation in Unconstrained Settings

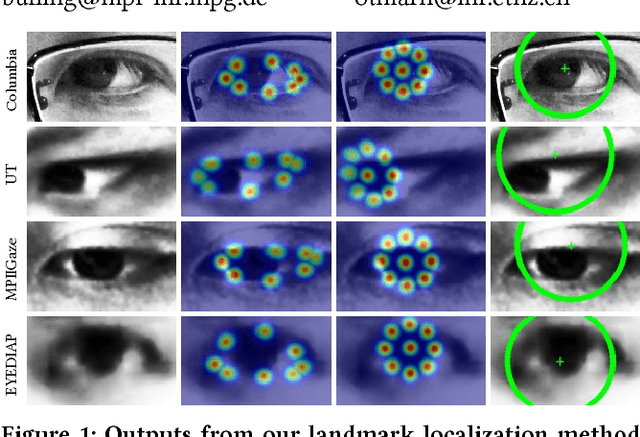

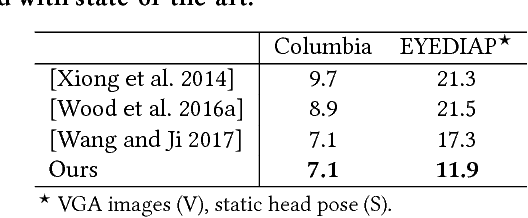

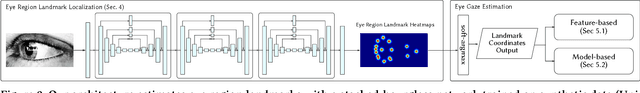

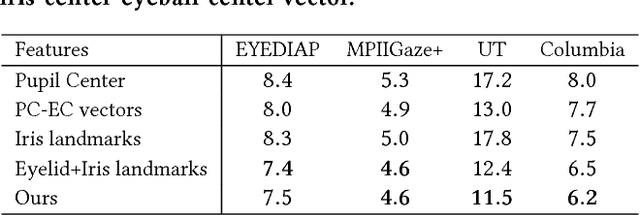

May 12, 2018 · Seonwook Park, Xucong Zhang, Andreas Bulling, Otmar Hilliges

Conventional feature-based and model-based gaze estimation methods have proven to perform well in settings with controlled illumination and specialized cameras. In unconstrained real-world settings, however, such methods are surpassed by recent appearance-based methods due to difficulties in modeling factors such as illumination changes and other visual artifacts. We present a novel learning-based method for eye region landmark localization that enables conventional methods to be competitive with the latest appearance-based methods. Despite having been trained exclusively on synthetic data, our method exceeds the state of the art for iris localization and eye shape registration on real-world imagery. We then use the detected landmarks as input to iterative model-fitting and lightweight learning-based gaze estimation methods. Our approach outperforms existing model-fitting and appearance-based methods in the context of person-independent and personalized gaze estimation.
