Aleksandr I. Panov

Instruction Following with Goal-Conditioned Reinforcement Learning in Virtual Environments

Jul 12, 2024

Abstract:In this study, we address the issue of enabling an artificial intelligence agent to execute complex language instructions within virtual environments. In our framework, we assume that these instructions involve intricate linguistic structures and multiple interdependent tasks that must be navigated successfully to achieve the desired outcomes. To effectively manage these complexities, we propose a hierarchical framework that combines the deep language comprehension of large language models with the adaptive action-execution capabilities of reinforcement learning agents. The language module (based on LLM) translates the language instruction into a high-level action plan, which is then executed by a pre-trained reinforcement learning agent. We have demonstrated the effectiveness of our approach in two different environments: in IGLU, where agents are instructed to build structures, and in Crafter, where agents perform tasks and interact with objects in the surrounding environment according to language commands.

Object-Centric Learning with Slot Mixture Module

Nov 08, 2023

Abstract:Object-centric architectures usually apply a differentiable module to the entire feature map to decompose it into sets of entity representations called slots. Some of these methods structurally resemble clustering algorithms, where the cluster's center in latent space serves as a slot representation. Slot Attention is an example of such a method, acting as a learnable analog of the soft k-means algorithm. Our work employs a learnable clustering method based on the Gaussian Mixture Model. Unlike other approaches, we represent slots not only as centers of clusters but also incorporate information about the distance between clusters and assigned vectors, leading to more expressive slot representations. Our experiments demonstrate that using this approach instead of Slot Attention improves performance in object-centric scenarios, achieving state-of-the-art results in the set property prediction task.
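To make the clustering view concrete, here is a minimal sketch of Gaussian-mixture EM on 1-D toy data, in which each "slot" is returned as a (mean, variance) pair rather than a center alone. This is not the paper's Slot Mixture Module: the function names, the toy data, and the scalar-variance simplification are all assumptions made for illustration.

```python
import math

def gmm_em_step(xs, means, variances, weights, var_floor=1e-6):
    """One EM step for a 1-D Gaussian mixture (illustrative, not the paper's module)."""
    k = len(means)
    # E-step: soft assignment (responsibility) of every point to every component.
    resp = []
    for x in xs:
        dens = [
            weights[j]
            * math.exp(-0.5 * (x - means[j]) ** 2 / variances[j])
            / math.sqrt(2.0 * math.pi * variances[j])
            for j in range(k)
        ]
        total = sum(dens)
        resp.append([d / total for d in dens])
    # M-step: re-estimate mixing weight, mean, and variance of each component.
    new_means, new_vars, new_weights = [], [], []
    for j in range(k):
        nj = sum(r[j] for r in resp)
        mu = sum(r[j] * x for r, x in zip(resp, xs)) / nj
        var = sum(r[j] * (x - mu) ** 2 for r, x in zip(resp, xs)) / nj + var_floor
        new_means.append(mu)
        new_vars.append(var)
        new_weights.append(nj / len(xs))
    return new_means, new_vars, new_weights

def fit_slots(xs, init_means, n_iters=10):
    """Run EM and return one (mean, variance) pair per component as a toy 'slot'."""
    k = len(init_means)
    means = list(init_means)
    variances = [1.0] * k
    weights = [1.0 / k] * k
    for _ in range(n_iters):
        means, variances, weights = gmm_em_step(xs, means, variances, weights)
    return list(zip(means, variances))

# Hypothetical toy data: two well-separated groups of points.
toy_points = [0.0, 0.2, -0.2, 5.0, 5.2, 4.8]
slots = fit_slots(toy_points, init_means=[0.5, 4.0])
```

The variance term carries the spread of the vectors assigned to each cluster, which a center-only representation such as soft k-means discards.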

Gradual Optimization Learning for Conformational Energy Minimization

Nov 05, 2023

Abstract:Molecular conformation optimization is crucial to computer-aided drug discovery and materials design. Traditional energy minimization techniques rely on iterative optimization methods that use molecular forces calculated by a physical simulator (oracle) as anti-gradients. However, this is a computationally expensive approach that requires many interactions with a physical simulator. One way to accelerate this procedure is to replace the physical simulator with a neural network. Despite recent progress in neural networks for molecular conformation energy prediction, such models are prone to distribution shift, leading to inaccurate energy minimization. We find that the quality of energy minimization with neural networks can be improved by providing optimization trajectories as additional training data. Still, it takes around $5 \times 10^5$ additional conformations to match the physical simulator's optimization quality. In this work, we present the Gradual Optimization Learning Framework (GOLF) for energy minimization with neural networks that significantly reduces the required additional data. The framework consists of an efficient data-collecting scheme and an external optimizer. The external optimizer utilizes gradients from the energy prediction model to generate optimization trajectories, and the data-collecting scheme selects additional training data to be processed by the physical simulator. Our results demonstrate that the neural network trained with GOLF performs on par with the oracle on a benchmark of diverse drug-like molecules using $50\times$ less additional data.
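The idea of using forces as anti-gradients can be sketched on a toy problem. The example below is not GOLF and involves no neural network or physical simulator: it assumes a hypothetical harmonic energy with a known equilibrium, and the names `energy`, `forces`, and `minimize` are illustrative only.

```python
def energy(x, x_eq, k=1.0):
    """Toy harmonic energy: E(x) = 0.5 * k * sum_i (x_i - x_eq_i)^2."""
    return 0.5 * k * sum((xi - ei) ** 2 for xi, ei in zip(x, x_eq))

def forces(x, x_eq, k=1.0):
    """Force is the negative gradient of the energy: F = -dE/dx."""
    return [-k * (xi - ei) for xi, ei in zip(x, x_eq)]

def minimize(x, x_eq, step=0.1, n_steps=200, k=1.0):
    """Plain gradient descent: move along the force at every iteration."""
    x = list(x)
    trajectory = [list(x)]  # intermediate conformations visited by the optimizer
    for _ in range(n_steps):
        f = forces(x, x_eq, k)
        x = [xi + step * fi for xi, fi in zip(x, f)]
        trajectory.append(list(x))
    return x, trajectory

# Hypothetical starting coordinates and equilibrium.
start = [1.0, -2.0, 0.5]
equilibrium = [0.0, 0.0, 0.0]
final, trajectory = minimize(start, equilibrium)
```

In the setting the abstract describes, `forces` would come from an energy model or the oracle, and states along `trajectory` are the kind of optimization-trajectory data that can be added to training.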

Graphical Object-Centric Actor-Critic

Oct 26, 2023

Abstract:There have recently been significant advances in the problem of unsupervised object-centric representation learning and its application to downstream tasks. The latest works support the argument that employing disentangled object representations in image-based object-centric reinforcement learning tasks facilitates policy learning. We propose a novel object-centric reinforcement learning algorithm combining actor-critic and model-based approaches to utilize these representations effectively. In our approach, we use a transformer encoder to extract object representations and graph neural networks to approximate the dynamics of an environment. The proposed method fills a research gap in developing efficient object-centric world models for reinforcement learning settings that can be used for environments with discrete or continuous action spaces. Our algorithm performs better in a visually complex 3D robotic environment and a 2D environment with compositional structure than the state-of-the-art model-free actor-critic algorithm built upon transformer architecture and the state-of-the-art monolithic model-based algorithm.

Learning Successor Representations with Distributed Hebbian Temporal Memory

Oct 20, 2023

Abstract:This paper presents a novel approach to address the challenge of online hidden representation learning for decision-making under uncertainty in non-stationary, partially observable environments. The proposed algorithm, Distributed Hebbian Temporal Memory (DHTM), is based on factor graph formalism and a multicomponent neuron model. DHTM aims to capture sequential data relationships and make cumulative predictions about future observations, forming Successor Representation (SR). Inspired by neurophysiological models of the neocortex, the algorithm utilizes distributed representations, sparse transition matrices, and local Hebbian-like learning rules to overcome the instability and slow learning process of traditional temporal memory algorithms like RNN and HMM. Experimental results demonstrate that DHTM outperforms classical LSTM and performs comparably to more advanced RNN-like algorithms, speeding up Temporal Difference learning for SR in changing environments. Additionally, we compare the SRs produced by DHTM to another biologically inspired HMM-like algorithm, CSCG. Our findings suggest that DHTM is a promising approach for addressing the challenges of online hidden representation learning in dynamic environments.
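For readers unfamiliar with the Successor Representation, the following is a minimal sketch of the standard tabular Temporal Difference update for SR, the baseline learning rule the abstract says DHTM speeds up. It is not DHTM itself; the three-state deterministic cycle and the function name `learn_sr` are assumptions chosen so the result can be checked in closed form.

```python
def learn_sr(transitions, n_states, gamma=0.9, alpha=0.1, n_steps=20000):
    """Tabular TD(0) for the successor representation M.

    M[s][j] estimates the expected discounted number of future visits to
    state j when starting from state s (counting the current step).
    `transitions[s]` gives the deterministic successor of state s.
    """
    M = [[0.0] * n_states for _ in range(n_states)]
    s = 0
    for _ in range(n_steps):
        s_next = transitions[s]
        for j in range(n_states):
            indicator = 1.0 if j == s else 0.0
            # TD target: one-hot of the current state plus the discounted SR of the successor.
            target = indicator + gamma * M[s_next][j]
            M[s][j] += alpha * (target - M[s][j])
        s = s_next
    return M

# Hypothetical environment: a cycle 0 -> 1 -> 2 -> 0.
gamma = 0.9
sr = learn_sr(transitions=[1, 2, 0], n_states=3, gamma=gamma)
```

For this cycle the exact SR is $M[i][j] = \gamma^{(j-i) \bmod 3} / (1 - \gamma^3)$, so each row sums to $1/(1-\gamma)$.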

Recurrent Memory Decision Transformer

Jul 05, 2023

Abstract:Originally developed for natural language problems, transformer models have recently been widely used in offline reinforcement learning tasks. This is because the agent's history can be represented as a sequence, and the whole task can be reduced to the sequence modeling task. However, the quadratic complexity of the transformer operation limits the potential increase in context. Therefore, different versions of the memory mechanism are used to work with long sequences in natural language. This paper proposes the Recurrent Memory Decision Transformer (RMDT), a model that uses a recurrent memory mechanism for reinforcement learning problems. We conduct thorough experiments on Atari games and MuJoCo control problems and show that our proposed model is significantly superior to its counterparts without the recurrent memory mechanism on Atari games. We also carefully study the effect of memory on the performance of the proposed model. These findings shed light on the potential of incorporating recurrent memory mechanisms to improve the performance of large-scale transformer models in offline reinforcement learning tasks. The Recurrent Memory Decision Transformer code is publicly available in the repository https://anonymous.4open.science/r/RMDT-4FE4.
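The reduction of an agent's history to a sequence can be illustrated with the return-to-go encoding used by Decision Transformer-style models, where each timestep contributes a (return-to-go, state, action) triple. The sketch below only builds that sequence; it does not implement RMDT or its memory mechanism, and the token tags and function names are hypothetical.

```python
def returns_to_go(rewards):
    """Undiscounted return-to-go: rtg[t] = sum of rewards from step t to the end."""
    rtg = []
    running = 0.0
    for r in reversed(rewards):
        running += r
        rtg.append(running)
    rtg.reverse()
    return rtg

def to_token_sequence(states, actions, rewards):
    """Interleave (return-to-go, state, action) per timestep into one flat sequence."""
    sequence = []
    for g, s, a in zip(returns_to_go(rewards), states, actions):
        sequence.extend([("rtg", g), ("state", s), ("action", a)])
    return sequence

# Hypothetical three-step episode.
tokens = to_token_sequence(
    states=["s0", "s1", "s2"],
    actions=["a0", "a1", "a2"],
    rewards=[1.0, 0.0, 2.0],
)
```

Because every timestep adds three tokens, the sequence grows quickly with episode length, which is where the quadratic attention cost mentioned in the abstract becomes a constraint.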

Intrinsic Motivation in Model-based Reinforcement Learning: A Brief Review

Jan 24, 2023

Abstract:The reinforcement learning research area contains a wide range of methods for solving the problems of intelligent agent control. Despite the progress that has been made, the task of creating a highly autonomous agent is still a significant challenge. One potential solution to this problem is intrinsic motivation, a concept derived from developmental psychology. This review considers the existing methods for determining intrinsic motivation based on the world model obtained by the agent. We propose a systematic approach to current research in this field, which consists of three categories of methods, distinguished by the way they utilize a world model in the agent's components: complementary intrinsic reward, exploration policy, and intrinsically motivated goals. The proposed unified framework describes the architecture of agents using a world model and intrinsic motivation to improve learning. The potential for developing new techniques in this area of research is also examined.

HPointLoc: Point-based Indoor Place Recognition using Synthetic RGB-D Images

Dec 30, 2022

Abstract:We present a novel dataset named HPointLoc, specially designed for exploring the capabilities of visual place recognition in indoor environments and loop detection in simultaneous localization and mapping. The loop detection sub-task is especially relevant when a robot with an on-board RGB-D camera can drive past the same place ("Point") at different angles. The dataset is based on the popular Habitat simulator, in which it is possible to generate photorealistic indoor scenes using both one's own sensor data and open datasets, such as Matterport3D. To study the main stages of solving the place recognition problem on the HPointLoc dataset, we propose a new modular approach named PNTR. It first performs image retrieval with the Patch-NetVLAD method, then extracts keypoints and matches them using R2D2, LoFTR, or SuperPoint with SuperGlue, and finally performs a camera pose optimization step with TEASER++. Such a solution to the place recognition problem has not been previously studied in existing publications. The PNTR approach has shown the best quality metrics on the HPointLoc dataset and has a high potential for real use in localization systems for unmanned vehicles. The proposed dataset and framework are publicly available: https://github.com/metra4ok/HPointLoc.

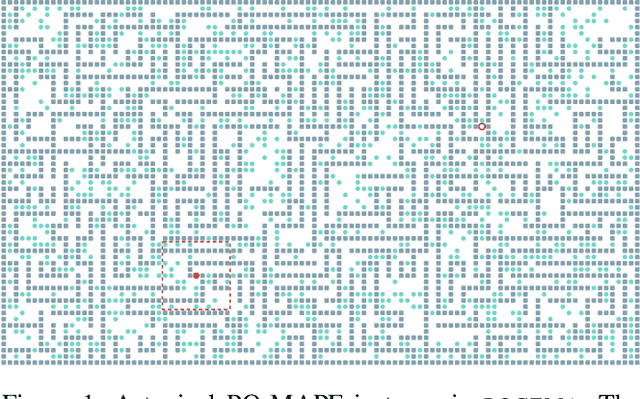

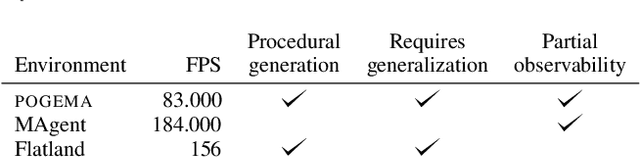

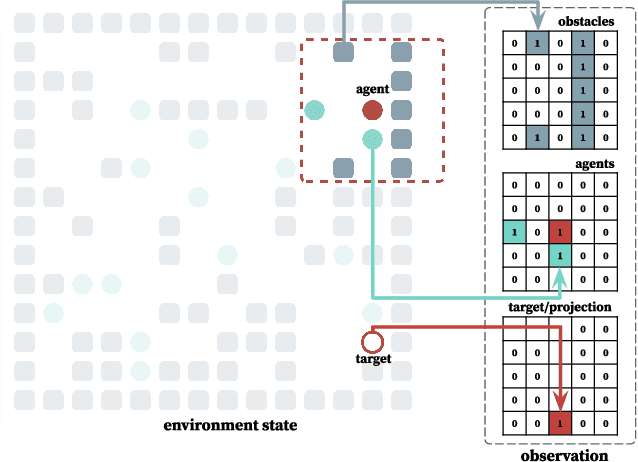

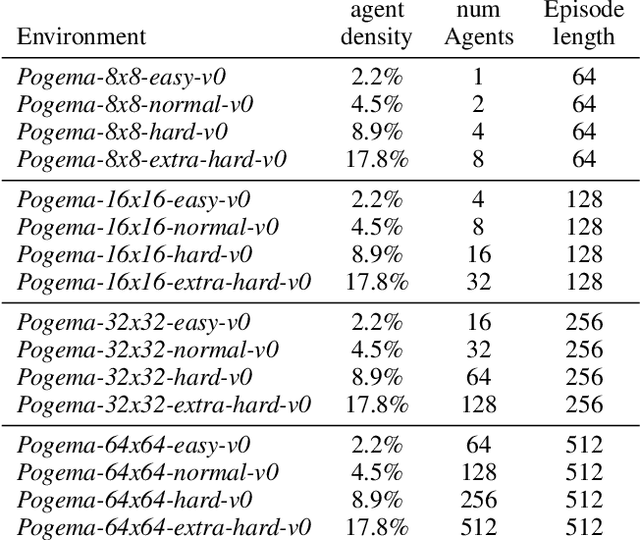

POGEMA: Partially Observable Grid Environment for Multiple Agents

Jun 22, 2022

Abstract:We introduce POGEMA (https://github.com/AIRI-Institute/pogema), a sandbox for challenging partially observable multi-agent pathfinding (PO-MAPF) problems. This is a grid-based environment that was specifically designed to be a flexible, tunable, and scalable benchmark. It can be tailored to a variety of PO-MAPF problems, serving as a testing ground for planning methods, learning methods, and their combination, which will allow us to move towards filling the gap between AI planning and learning.

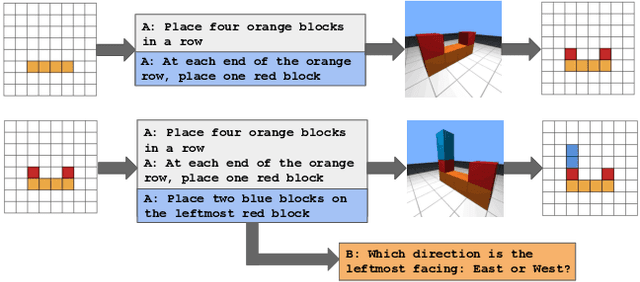

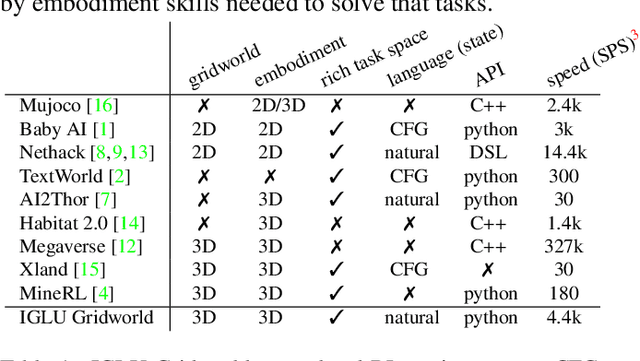

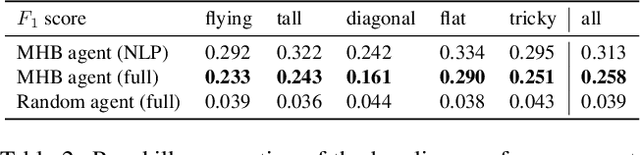

IGLU Gridworld: Simple and Fast Environment for Embodied Dialog Agents

May 31, 2022

Abstract:We present the IGLU Gridworld: a reinforcement learning environment for building and evaluating language-conditioned embodied agents in a scalable way. The environment features visual agent embodiment, interactive learning through collaboration, language-conditioned RL, and a combinatorially hard task space (3D block building).
