Adnan Aijaz

Goal-oriented Communication for Fast and Robust Robotic Fault Detection and Recovery

Jan 26, 2026

Abstract:Autonomous robotic systems are widely deployed in smart factories and operate in dynamic, uncertain, and human-involved environments that require low-latency and robust fault detection and recovery (FDR). However, existing FDR frameworks exhibit various limitations, such as significant delays in communication and computation, and unreliability in robot motion/trajectory generation, mainly because the communication-computation-control (3C) loop is designed without considering the downstream FDR goal. To address this, we propose a novel Goal-oriented Communication (GoC) framework that jointly designs the 3C loop tailored for fast and robust robotic FDR, with the goal of minimising the FDR time while maximising the robotic task (e.g., workpiece sorting) success rate. For fault detection, our GoC framework innovatively defines and extracts the 3D scene graph (3D-SG) as the semantic representation via our designed representation extractor, and detects faults by monitoring spatial relationship changes in the 3D-SG. For fault recovery, we fine-tune a small language model (SLM) via Low-Rank Adaptation (LoRA) and enhance its reasoning and generalization capabilities via knowledge distillation to generate recovery motions for robots. We also design a lightweight goal-oriented digital twin reconstruction module to refine the recovery motions generated by the SLM when fine-grained robotic control is required, using only task-relevant object contours for digital twin reconstruction. Extensive simulations demonstrate that our GoC framework reduces the FDR time by up to 82.6% and improves the task success rate by up to 76%, compared to the state-of-the-art frameworks that rely on vision language models for fault detection and large language models for fault recovery.
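As a rough illustration of detecting faults by monitoring spatial relationship changes in a scene graph, the sketch below compares an expected set of relations against an observed one. It is not the authors' method: the triple representation, the relation names (`held_by`, `on`), and the functions `changed_relations` and `fault_detected` are all assumptions made for this example.

```python
# A scene graph is modelled here as a set of (subject, predicate, object)
# triples. This representation and every name below are hypothetical.
Relation = tuple[str, str, str]


def changed_relations(
    expected: set[Relation], observed: set[Relation]
) -> dict[str, set[Relation]]:
    """Return the relations that disappeared and the ones that newly appeared."""
    return {
        "missing": expected - observed,
        "unexpected": observed - expected,
    }


def fault_detected(expected: set[Relation], observed: set[Relation]) -> bool:
    """Flag a fault whenever the observed relations differ from the expected ones."""
    diff = changed_relations(expected, observed)
    return bool(diff["missing"] or diff["unexpected"])


# Expected state during a sorting task: the gripper holds the workpiece.
expected = {("workpiece", "held_by", "gripper"), ("bin", "on", "table")}
# Observed state after a slip: the workpiece is now on the floor.
observed = {("workpiece", "on", "floor"), ("bin", "on", "table")}

print(fault_detected(expected, observed))
print(changed_relations(expected, observed))
```

Comparing compact relation sets rather than raw images is one way such a representation could keep the monitored data small, in line with the goal-oriented motivation described in the abstract.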

A Multi-Year Urban Streetlight Imagery Dataset for Visual Monitoring and Spatio-Temporal Drift Detection

Dec 13, 2025

Abstract:We present a large-scale, longitudinal visual dataset of urban streetlights captured by 22 fixed-angle cameras deployed across Bristol, U.K., from 2021 to 2025. The dataset contains over 526,000 images, collected hourly under diverse lighting, weather, and seasonal conditions. Each image is accompanied by rich metadata, including timestamps, GPS coordinates, and device identifiers. This unique real-world dataset enables detailed investigation of visual drift, anomaly detection, and MLOps strategies in smart city deployments. To promote secondary analysis, we additionally provide a self-supervised framework based on convolutional variational autoencoders (CNN-VAEs). Models are trained separately for each camera node and for day/night image sets. We define two per-sample drift metrics: relative centroid drift, capturing latent space deviation from a baseline quarter, and relative reconstruction error, measuring normalized image-domain degradation. This dataset provides a realistic, fine-grained benchmark for evaluating long-term model stability, drift-aware learning, and deployment-ready vision systems. The images and structured metadata are publicly released in JPEG and CSV formats, supporting reproducibility and downstream applications such as streetlight monitoring, weather inference, and urban scene understanding. The dataset can be found at https://doi.org/10.5281/zenodo.17781192 and https://doi.org/10.5281/zenodo.17859120.
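The abstract names the two drift metrics but does not give their formulas. The sketch below shows one plausible reading, assuming that each metric is a per-sample quantity divided by its mean over the baseline quarter. The normalization choice and the function names `relative_centroid_drift` and `relative_reconstruction_error` are assumptions for illustration, not the paper's definitions.

```python
import math


def centroid(vectors: list[list[float]]) -> list[float]:
    """Component-wise mean of a list of equal-length latent vectors."""
    n = len(vectors)
    return [sum(v[i] for v in vectors) / n for i in range(len(vectors[0]))]


def relative_centroid_drift(z: list[float], baseline_latents: list[list[float]]) -> float:
    """Distance of latent z from the baseline centroid, divided by the mean
    distance of the baseline samples to that centroid (assumed normalization)."""
    c = centroid(baseline_latents)
    spread = sum(math.dist(b, c) for b in baseline_latents) / len(baseline_latents)
    return math.dist(z, c) / spread


def relative_reconstruction_error(error: float, baseline_errors: list[float]) -> float:
    """Reconstruction error of one image divided by the mean baseline error
    (assumed normalization)."""
    return error / (sum(baseline_errors) / len(baseline_errors))


# Toy 2-D latents for a baseline quarter; real VAE latents would be higher-dimensional.
baseline = [[0.0, 0.0], [2.0, 0.0], [0.0, 2.0], [2.0, 2.0]]
print(relative_centroid_drift([3.0, 3.0], baseline))
print(relative_reconstruction_error(0.3, [0.1, 0.2, 0.3]))
```

Under this reading, a value near 1 means a sample looks like a typical baseline sample, and larger values indicate growing deviation from the baseline quarter.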

Flying Base Stations for Offshore Wind Farm Monitoring and Control: Holistic Performance Evaluation and Optimization

Jul 10, 2025

Abstract:Ensuring reliable and low-latency communication in offshore wind farms is critical for efficient monitoring and control, yet remains challenging due to the harsh environment and lack of infrastructure. This paper investigates a flying base station (FBS) approach for wide-area monitoring and control in the UK Hornsea offshore wind farm project. By leveraging mobile, flexible FBS platforms in the remote and harsh offshore environment, the proposed system offers real-time connectivity for turbines without the need for deploying permanent infrastructure at the sea. We develop a detailed and practical end-to-end latency model accounting for five key factors: flight duration, connection establishment, turbine state information upload, computational delay, and control transmission, to provide a holistic perspective often missing in prior studies. Furthermore, we combine trajectory planning, beamforming, and resource allocation into a multi-objective optimization framework for the overall latency minimization, specifically designed for large-scale offshore wind farm deployments. Simulation results verify the effectiveness of our proposed method in minimizing latency and enhancing efficiency in FBS-assisted offshore monitoring across various power levels, while consistently outperforming baseline designs.
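The five latency factors listed in the abstract can be pictured as a simple budget. The sketch below assumes the stages occur one after another, so the end-to-end latency is their sum. That additive form, the `LatencyBudget` class, its field names, and the numbers are illustrative assumptions; the paper's actual model may differ.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LatencyBudget:
    """Hypothetical per-turbine latency components, all in seconds."""

    flight_s: float      # flight duration to reach the turbine
    connection_s: float  # connection establishment
    upload_s: float      # turbine state information upload
    compute_s: float     # computational delay
    control_s: float     # control transmission back to the turbine

    def total(self) -> float:
        """End-to-end latency, assuming the five stages run sequentially."""
        return (
            self.flight_s
            + self.connection_s
            + self.upload_s
            + self.compute_s
            + self.control_s
        )


# Made-up numbers for illustration only.
budget = LatencyBudget(
    flight_s=10.0, connection_s=0.5, upload_s=1.0, compute_s=0.25, control_s=0.25
)
print(budget.total())
```

Keeping the components separate makes it easy to see which stage dominates before deciding what to optimize, for example the flight time through trajectory planning.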

Task-Oriented Connectivity for Networked Robotics with Generative AI and Semantic Communications

Mar 09, 2025

Abstract:The convergence of robotics, advanced communication networks, and artificial intelligence (AI) holds the promise of transforming industries through fully automated and intelligent operations. In this work, we introduce a novel co-working framework for robots that unifies goal-oriented semantic communication (SemCom) with a Generative AI (GenAI)-agent under a semantic-aware network. SemCom prioritizes the exchange of meaningful information among robots and the network, thereby reducing overhead and latency. Meanwhile, the GenAI-agent leverages generative AI models to interpret high-level task instructions, allocate resources, and adapt to dynamic changes in both network and robotic environments. This agent-driven paradigm ushers in a new level of autonomy and intelligence, enabling complex tasks of networked robots to be conducted with minimal human intervention. We validate our approach through a multi-robot anomaly detection use-case simulation, where robots detect, compress, and transmit relevant information for classification. Simulation results confirm that SemCom significantly reduces data traffic while preserving critical semantic details, and the GenAI-agent ensures task coordination and network adaptation. This synergy provides a robust, efficient, and scalable solution for modern industrial environments.

Building the Self-Improvement Loop: Error Detection and Correction in Goal-Oriented Semantic Communications

Nov 03, 2024

Abstract:Error detection and correction are essential for ensuring robust and reliable operation in modern communication systems, particularly in complex transmission environments. However, discussions on these topics have largely been overlooked in semantic communication (SemCom), which focuses on transmitting meaning rather than symbols, leading to significant improvements in communication efficiency. Despite these advantages, semantic errors -- stemming from discrepancies between transmitted and received meanings -- present a major challenge to system reliability. This paper addresses this gap by proposing a comprehensive framework for detecting and correcting semantic errors in SemCom systems. We formally define semantic error, detection, and correction mechanisms, and identify key sources of semantic errors. To address these challenges, we develop a Gaussian process (GP)-based method for latent space monitoring to detect errors, alongside a human-in-the-loop reinforcement learning (HITL-RL) approach to optimize semantic model configurations using user feedback. Experimental results validate the effectiveness of the proposed methods in mitigating semantic errors under various conditions, including adversarial attacks, input feature changes, physical channel variations, and user preference shifts. This work lays the foundation for more reliable and adaptive SemCom systems with robust semantic error management techniques.

Adapting MLOps for Diverse In-Network Intelligence in 6G Era: Challenges and Solutions

Oct 24, 2024

Abstract:Seamless integration of artificial intelligence (AI) and machine learning (ML) techniques with wireless systems is a crucial step for 6G AInization. However, such integration faces challenges in terms of model functionality and lifecycle management. ML operations (MLOps) offer a systematic approach to tackle these challenges. Existing approaches toward implementing MLOps in a centralized platform often overlook the challenges posed by diverse learning paradigms and network heterogeneity. This article provides a new approach to MLOps targeting the intricacies of future wireless networks. Considering unique aspects of the future radio access network (RAN), we formulate three operational pipelines, namely reinforcement learning operations (RLOps), federated learning operations (FedOps), and generative AI operations (GenOps). These pipelines form the foundation for seamlessly integrating various learning/inference capabilities into networks. We outline the specific challenges and proposed solutions for each operation, facilitating large-scale deployment of AI-Native 6G networks.

Goal-Oriented and Semantic Communication in 6G AI-Native Networks: The 6G-GOALS Approach

Feb 12, 2024

Abstract:Recent advances in AI technologies have notably expanded device intelligence, fostering federation and cooperation among distributed AI agents. These advancements impose new requirements on future 6G mobile network architectures. To meet these demands, it is essential to transcend classical boundaries and integrate communication, computation, control, and intelligence. This paper presents the 6G-GOALS approach to goal-oriented and semantic communications for AI-Native 6G Networks. The proposed approach incorporates semantic, pragmatic, and goal-oriented communication into AI-native technologies, aiming to facilitate information exchange between intelligent agents in a more relevant, effective, and timely manner, thereby optimizing bandwidth, latency, energy, and electromagnetic field (EMF) radiation. The focus is on distilling data to its most relevant form and terse representation, aligning with the source's intent or the destination's objectives and context, or serving a specific goal. 6G-GOALS builds on three fundamental pillars: i) AI-enhanced semantic data representation, sensing, compression, and communication, ii) foundational AI reasoning and causal semantic data representation, contextual relevance, and value for goal-oriented effectiveness, and iii) sustainability enabled by more efficient wireless services. Finally, we illustrate two proof-of-concepts implementing semantic, goal-oriented, and pragmatic communication principles in near-future use cases. Our study covers the project's vision, methodologies, and potential impact.

Demo: A Digital Twin of the 5G Radio Access Network for Anomaly Detection Functionality

Aug 30, 2023

Abstract:Recently, the concept of digital twins (DTs) has received significant attention within the realm of 5G/6G. This demonstration shows an innovative DT design and implementation framework tailored toward integration within the 5G infrastructure. The proposed DT enables near real-time anomaly detection capability pertaining to user connectivity. It empowers the 5G system to proactively execute decisions for resource control and connection restoration.

Toward Multi-Service Edge-Intelligence Paradigm: Temporal-Adaptive Prediction for Time-Critical Control over Wireless

Dec 12, 2022

Abstract:Time-critical control applications typically pose stringent connectivity requirements for communication networks. The imperfections associated with the wireless medium such as packet losses, synchronization errors, and varying delays have a detrimental effect on performance of real-time control, often with safety implications. This paper introduces multi-service edge-intelligence as a new paradigm for realizing time-critical control over wireless. It presents the concept of multi-service edge-intelligence which revolves around tight integration of wireless access, edge-computing and machine learning techniques, in order to provide stability guarantees under wireless imperfections. The paper articulates some of the key system design aspects of multi-service edge-intelligence. It also presents a temporal-adaptive prediction technique to cope with dynamically changing wireless environments. It provides performance results in a robotic teleoperation scenario. Finally, it discusses some open research and design challenges for multi-service edge-intelligence.

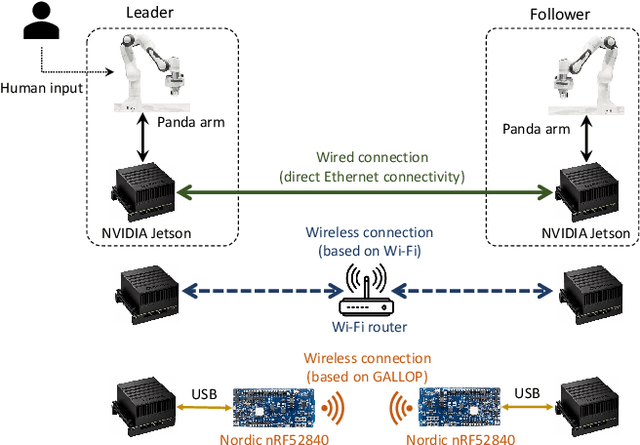

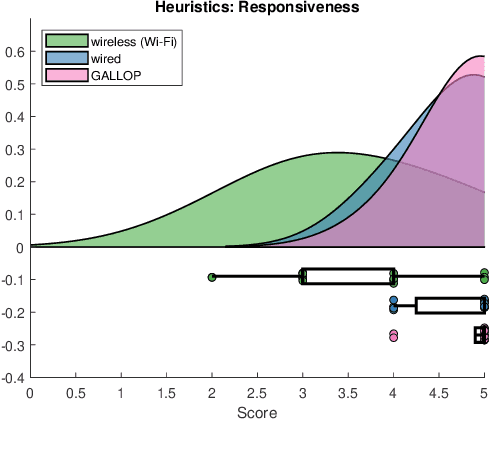

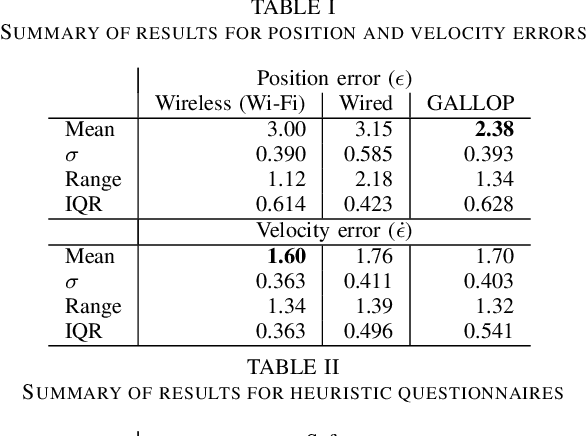

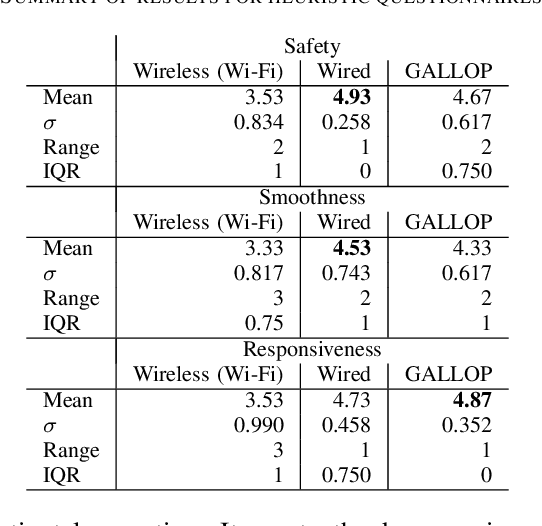

Demo: Untethered Haptic Teleoperation for Nuclear Decommissioning using a Low-Power Wireless Control Technology

Jun 27, 2022

Abstract:Haptic teleoperation is typically realized through wired networking technologies (e.g., Ethernet) which guarantee performance of control loops closed over the communication medium, particularly in terms of latency, jitter, and reliability. This demonstration shows the capability of conducting haptic teleoperation over a novel low-power wireless control technology, called GALLOP, in a nuclear decommissioning use-case. It shows the viability of GALLOP for meeting latency, timeliness, and safety requirements of haptic teleoperation. Evaluation conducted as part of the demonstration reveals that GALLOP, which has been implemented over an off-the-shelf Bluetooth 5.0 chipset, can replace a conventional wired TCP/IP connection and outperforms a WiFi-based wireless solution in the same use-case.
