Abdul Rahman

Towards Responsible and Explainable AI Agents with Consensus-Driven Reasoning

Dec 25, 2025

Abstract:Agentic AI represents a major shift in how autonomous systems reason, plan, and execute multi-step tasks through the coordination of Large Language Models (LLMs), Vision Language Models (VLMs), tools, and external services. While these systems enable powerful new capabilities, increasing autonomy introduces critical challenges related to explainability, accountability, robustness, and governance, especially when agent outputs influence downstream actions or decisions. Existing agentic AI implementations often emphasize functionality and scalability, yet provide limited mechanisms for understanding decision rationale or enforcing responsibility across agent interactions. This paper presents a Responsible (RAI) and Explainable (XAI) AI Agent Architecture for production-grade agentic workflows based on multi-model consensus and reasoning-layer governance. In the proposed design, a consortium of heterogeneous LLM and VLM agents independently generates candidate outputs from a shared input context, explicitly exposing uncertainty, disagreement, and alternative interpretations. A dedicated reasoning agent then performs structured consolidation across these outputs, enforcing safety and policy constraints, mitigating hallucinations and bias, and producing auditable, evidence-backed decisions. Explainability is achieved through explicit cross-model comparison and preserved intermediate outputs, while responsibility is enforced through centralized reasoning-layer control and agent-level constraints. We evaluate the architecture across multiple real-world agentic AI workflows, demonstrating that consensus-driven reasoning improves robustness, transparency, and operational trust across diverse application domains. This work provides practical guidance for designing agentic AI systems that are autonomous and scalable, yet responsible and explainable by construction.
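
The consolidation step described in this abstract can be illustrated with a minimal sketch. It assumes a simple majority vote over normalized text outputs. The abstract does not state how the reasoning agent actually consolidates, so the `consolidate` function, the `quorum` parameter, and the `ConsensusResult` type are hypothetical names used only for illustration.

```python
from __future__ import annotations

from collections import Counter
from dataclasses import dataclass


@dataclass
class ConsensusResult:
    decision: str | None        # None when no answer exceeds the quorum
    agreement: float            # share of agents backing the top answer
    candidates: dict[str, str]  # per-agent outputs, preserved for auditing


def consolidate(candidates: dict[str, str], quorum: float = 0.5) -> ConsensusResult:
    """Majority-vote consolidation (an assumed rule, not the paper's method)."""
    if not candidates:
        raise ValueError("at least one candidate output is required")
    # Normalize answers so trivial case/whitespace differences do not split votes.
    votes = Counter(answer.strip().lower() for answer in candidates.values())
    top_answer, top_count = votes.most_common(1)[0]
    agreement = top_count / len(candidates)
    # Withhold a decision when disagreement is too high, exposing the conflict.
    decision = top_answer if agreement > quorum else None
    return ConsensusResult(decision, agreement, dict(candidates))


result = consolidate({"model_a": "Approve", "model_b": "approve", "model_c": "reject"})
print(result.decision, round(result.agreement, 2))
```

Keeping every candidate output in the result, rather than only the winner, is what makes the cross-model disagreement inspectable afterwards.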

A Practical Guide for Designing, Developing, and Deploying Production-Grade Agentic AI Workflows

Dec 09, 2025

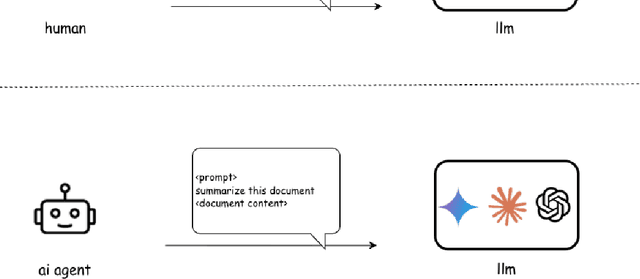

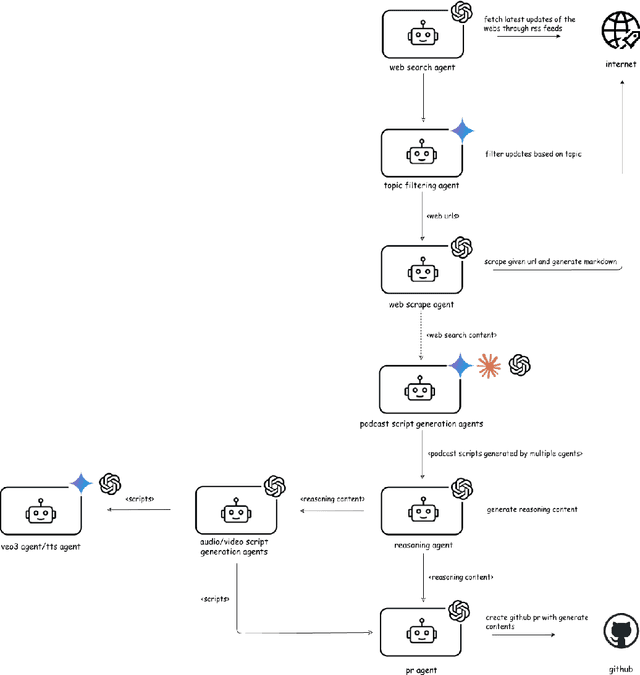

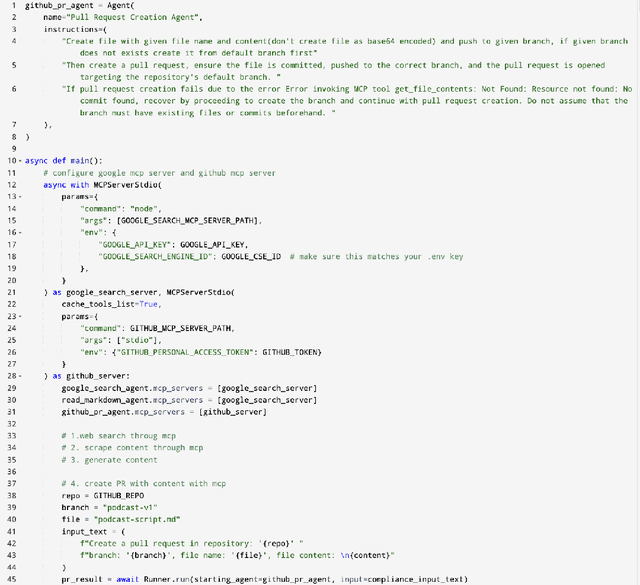

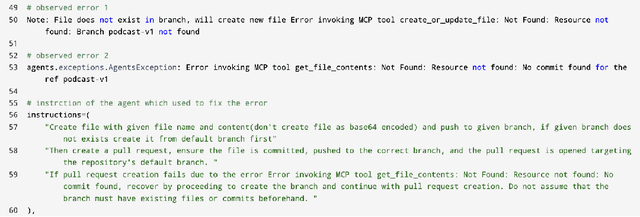

Abstract:Agentic AI marks a major shift in how autonomous systems reason, plan, and execute multi-step tasks. Unlike traditional single-model prompting, agentic workflows integrate multiple specialized agents with different Large Language Models (LLMs), tool-augmented capabilities, orchestration logic, and external system interactions to form dynamic pipelines capable of autonomous decision-making and action. As adoption accelerates across industry and research, organizations face a central challenge: how to design, engineer, and operate production-grade agentic AI workflows that are reliable, observable, maintainable, and aligned with safety and governance requirements. This paper provides a practical, end-to-end guide for designing, developing, and deploying production-quality agentic AI systems. We introduce a structured engineering lifecycle encompassing workflow decomposition, multi-agent design patterns, Model Context Protocol (MCP) and tool integration, deterministic orchestration, Responsible-AI considerations, and environment-aware deployment strategies. We then present nine core best practices for engineering production-grade agentic AI workflows, including tool-first design over MCP, pure-function invocation, single-tool and single-responsibility agents, externalized prompt management, Responsible-AI-aligned model-consortium design, clean separation between workflow logic and MCP servers, containerized deployment for scalable operations, and adherence to the Keep it Simple, Stupid (KISS) principle to maintain simplicity and robustness. To demonstrate these principles in practice, we present a comprehensive case study: a multimodal news-analysis and media-generation workflow. By combining architectural guidance, operational patterns, and practical implementation insights, this paper offers a foundational reference for building robust, extensible, and production-ready agentic AI workflows.

Evaluating Query Efficiency and Accuracy of Transfer Learning-based Model Extraction Attack in Federated Learning

May 25, 2025

Abstract:Federated Learning (FL) is a collaborative learning framework designed to protect client data, yet it remains highly vulnerable to Intellectual Property (IP) threats. Model extraction (ME) attacks pose a significant risk to Machine Learning as a Service (MLaaS) platforms, enabling attackers to replicate confidential models by querying black-box (without internal insight) APIs. Despite FL's privacy-preserving goals, its distributed nature makes it particularly susceptible to such attacks. This paper examines the vulnerability of FL-based victim models to two types of model extraction attacks. For various federated clients built under the NVFlare platform, we implemented ME attacks across two deep learning architectures and three image datasets. We evaluate the proposed ME attack performance using various metrics, including accuracy, fidelity, and KL divergence. The experiments show that for different FL clients, the accuracy and fidelity of the extracted model are closely related to the size of the attack query set. Additionally, we explore a transfer-learning-based approach in which pretrained models serve as the starting point for the extraction process. The results indicate that the accuracy and fidelity of the fine-tuned pretrained extraction models are notably higher, particularly with smaller query sets, highlighting potential advantages for attackers.
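
The three metrics named in this abstract can be computed as in the sketch below, which uses their common definitions: accuracy against ground-truth labels, fidelity as label agreement between the extracted and victim models, and KL divergence between their output distributions. The abstract does not give the paper's exact formulas, so these definitions, the function names, and the toy data are assumptions.

```python
import math


def accuracy(predictions, labels):
    """Fraction of predictions that match the ground-truth labels."""
    return sum(p == y for p, y in zip(predictions, labels)) / len(labels)


def fidelity(extracted_preds, victim_preds):
    """Fraction of queries on which the extracted model agrees with the victim."""
    return sum(e == v for e, v in zip(extracted_preds, victim_preds)) / len(victim_preds)


def mean_kl_divergence(victim_probs, extracted_probs, eps=1e-12):
    """Mean KL(victim || extracted) over queries; eps guards against log(0)."""
    total = 0.0
    for p_row, q_row in zip(victim_probs, extracted_probs):
        total += sum(p * math.log((p + eps) / (q + eps)) for p, q in zip(p_row, q_row))
    return total / len(victim_probs)


# Toy data (hypothetical): four queries, two classes.
labels = [0, 1, 1, 0]
victim_preds = [0, 1, 0, 0]
extracted_preds = [0, 1, 0, 1]
victim_probs = [[0.9, 0.1], [0.2, 0.8]]
extracted_probs = [[0.8, 0.2], [0.3, 0.7]]

print(accuracy(extracted_preds, labels))
print(fidelity(extracted_preds, victim_preds))
print(mean_kl_divergence(victim_probs, extracted_probs))
```

Fidelity can exceed accuracy, as it does on this toy data, because the extracted model also copies the victim's mistakes.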

Retrieval Augmented Anomaly Detection (RAAD): Nimble Model Adjustment Without Retraining

Feb 26, 2025

Abstract:We propose a novel mechanism for real-time (human-in-the-loop) feedback focused on false positive reduction to enhance anomaly detection models. It was designed for the lightweight deployment of a behavioral network anomaly detection model. The methodology integrates easily into similar domains that place a premium on throughput while maintaining high precision. In this paper, we introduce Retrieval Augmented Anomaly Detection, a novel method that takes inspiration from Retrieval Augmented Generation. Human-annotated examples are sent to a vector store, which can modify model outputs on the very next batch processed for model inference. To demonstrate the generalization of this technique, we benchmarked several different model architectures and multiple data modalities, including images, text, and graph-based data.
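
The feedback loop described in this abstract can be sketched roughly as follows. The abstract does not specify the retrieval or override rule, so this example assumes one plausible reading: an anomaly flag is suppressed when the sample's embedding is close (by cosine similarity) to a human-annotated false positive. The `FeedbackStore` class, its methods, and the `threshold` value are hypothetical, and a plain Python list stands in for a real vector store.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity of two equal-length vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class FeedbackStore:
    """In-memory stand-in for a vector store of annotated false positives."""

    def __init__(self, threshold=0.95):
        self.threshold = threshold  # assumed similarity cutoff for an override
        self.false_positives = []

    def add_false_positive(self, embedding):
        # Called when an analyst marks a flagged sample as benign.
        self.false_positives.append(list(embedding))

    def adjust(self, embedding, is_anomaly):
        """Return the final flag, overriding near-duplicates of known false positives."""
        if not is_anomaly:
            return False
        for stored in self.false_positives:
            if cosine_similarity(embedding, stored) >= self.threshold:
                return False
        return True


store = FeedbackStore()
store.add_false_positive([1.0, 0.0])
print(store.adjust([0.99, 0.05], True))  # close to the annotated example
print(store.adjust([0.0, 1.0], True))    # unrelated sample stays flagged
```

Because the annotation only touches the store, the underlying detector is never retrained, and the next batch scored after `add_false_positive` already sees the adjusted behavior.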

Privacy Drift: Evolving Privacy Concerns in Incremental Learning

Dec 06, 2024

Abstract:In the evolving landscape of machine learning (ML), Federated Learning (FL) presents a paradigm shift towards decentralized model training while preserving user data privacy. This paper introduces the concept of "privacy drift", an innovative framework that parallels the well-known phenomenon of concept drift. While concept drift addresses the variability in model accuracy over time due to changes in the data, privacy drift encapsulates the variation in the leakage of private information as models undergo incremental training. By defining and examining privacy drift, this study aims to unveil the nuanced relationship between the evolution of model performance and the integrity of data privacy. Through rigorous experimentation, we investigate the dynamics of privacy drift in FL systems, focusing on how model updates and data distribution shifts influence the susceptibility of models to privacy attacks, such as membership inference attacks (MIA). Our results highlight a complex interplay between model accuracy and privacy safeguards, revealing that enhancements in model performance can lead to increased privacy risks. We provide empirical evidence from experiments on customized datasets derived from CIFAR-100 (Canadian Institute for Advanced Research, 100 classes), showcasing the impact of data and concept drift on privacy. This work lays the groundwork for future research on privacy-aware machine learning, aiming to achieve a delicate balance between model accuracy and data privacy in decentralized environments.

Accuracy-Privacy Trade-off in the Mitigation of Membership Inference Attack in Federated Learning

Jul 26, 2024

Abstract:Over the last few years, federated learning (FL) has emerged as a prominent method in machine learning, emphasizing privacy preservation by allowing multiple clients to collaboratively build a model while keeping their training data private. Despite this focus on privacy, FL models are susceptible to various attacks, including membership inference attacks (MIAs), posing a serious threat to data confidentiality. In a recent study, Rezaei et al. revealed the existence of an accuracy-privacy trade-off in deep ensembles and proposed a few fusion strategies to overcome it. In this paper, we aim to explore the relationship between deep ensembles and FL. Specifically, we investigate whether confidence-based metrics derived from deep ensembles apply to FL and whether there is a trade-off between accuracy and privacy in FL with respect to MIA. Empirical investigations illustrate a lack of a non-monotonic correlation between the number of clients and the accuracy-privacy trade-off. By experimenting with different numbers of federated clients, datasets, and confidence-metric-based fusion strategies, we identify and analytically justify the clear existence of the accuracy-privacy trade-off.

Leveraging Reinforcement Learning in Red Teaming for Advanced Ransomware Attack Simulations

Jun 25, 2024

Abstract:Ransomware presents a significant and increasing threat to individuals and organizations by encrypting their systems and not releasing them until a large fee has been extracted. To bolster preparedness against potential attacks, organizations commonly conduct red teaming exercises, which involve simulated attacks to assess existing security measures. This paper proposes a novel approach utilizing reinforcement learning (RL) to simulate ransomware attacks. By training an RL agent in a simulated environment mirroring real-world networks, effective attack strategies can be learned quickly, significantly streamlining traditional, manual penetration testing processes. The attack pathways revealed by the RL agent can provide valuable insights to the defense team, helping them identify network weak points and develop more resilient defensive measures. Experimental results on a 152-host example network confirm the effectiveness of the proposed approach, demonstrating the RL agent's capability to discover and orchestrate attacks on high-value targets while evading honeyfiles (decoy files strategically placed to detect unauthorized access).

A Review of Cybersecurity Incidents in the Food and Agriculture Sector

Mar 12, 2024

Abstract:The increasing utilization of emerging technologies in the Food & Agriculture (FA) sector has heightened the need for security to minimize cyber risks. With this in mind, this manuscript reviews disclosed and documented cybersecurity incidents in the FA sector. For this purpose, thirty cybersecurity incidents that took place between July 2011 and April 2023 were identified. The details of these incidents are drawn from multiple sources, such as the private industry and flash notifications generated by the Federal Bureau of Investigation (FBI), internal reports from the affected organizations, and available media sources. Based on the available information, a brief description of the security threat, ransom amount, and impact on the organization is given for each incident. This review reports an increased frequency of cybersecurity threats to the FA sector. To minimize these cyber risks, popular cybersecurity frameworks and recent agriculture-specific cybersecurity solutions are also discussed. Further, the need for AI assurance in the FA sector is explained, and the Farmer-Centered AI (FCAI) framework is proposed. The main aim of the FCAI framework is to support farmers in decision-making for agricultural production by incorporating AI assurance. Lastly, the effects of the reported cyber incidents on other critical infrastructures, food security, and the economy are noted, along with open issues for future development.

Discovering Command and Control (C2) Channels on Tor and Public Networks Using Reinforcement Learning

Feb 14, 2024

Abstract:Command and control (C2) channels are an essential component of many types of cyber attacks, as they enable attackers to remotely control their malware-infected machines and execute harmful actions, such as propagating malicious code across networks, exfiltrating confidential data, or initiating distributed denial of service (DDoS) attacks. Identifying these C2 channels is therefore crucial in helping to mitigate and prevent cyber attacks. However, identifying C2 channels typically involves a manual process, requiring deep knowledge and expertise in cyber operations. In this paper, we propose a reinforcement learning (RL) based approach to automatically emulate C2 attack campaigns using both the normal (public) and the Tor networks. In addition, payload size and network firewalls are configured to simulate real-world attack scenarios. Results on a typical network configuration show that the RL agent can automatically discover resilient C2 attack paths utilizing both Tor-based and conventional communication channels, while also bypassing network firewalls.

Discovering Command and Control Channels Using Reinforcement Learning

Jan 13, 2024

Abstract:Command and control (C2) paths for issuing commands to malware are sometimes the only indicators of its existence within networks. Identifying potential C2 channels is often a manually driven process that involves a deep understanding of cyber tradecraft. A reinforcement learning (RL) based approach that learns to automatically carry out C2 attack campaigns on large networks, where multiple defense layers are in place, can improve the discovery of these channels and drive efficiency for network operators. In this paper, we model C2 traffic flow as a three-stage process and formulate it as a Markov decision process (MDP) with the objective of maximizing the number of valuable hosts whose data is exfiltrated. The approach also explicitly models payload and defense mechanisms such as firewalls, which is a novel contribution. The attack paths learned by the RL agent can in turn help the blue team identify high-priority vulnerabilities and develop improved defense strategies. The method is evaluated on a large network with more than a thousand hosts, and the results demonstrate that the agent can effectively learn attack paths while avoiding firewalls.
