Sports Ball Detection And Tracking

Papers and Code

BST: Badminton Stroke-type Transformer for Skeleton-based Action Recognition in Racket Sports

Feb 28, 2025

Badminton, known for having the fastest ball speeds among all sports, presents significant challenges to the field of computer vision, including player identification, court line detection, shuttlecock trajectory tracking, and player stroke-type classification. In this paper, we introduce a novel video segmentation strategy to extract frames of each player's racket swing in a badminton broadcast match. These segmented frames are then processed by two existing models: one for Human Pose Estimation to obtain player skeletal joints, and the other for shuttlecock trajectory detection to extract shuttlecock trajectories. Leveraging these joints, trajectories, and player positions as inputs, we propose Badminton Stroke-type Transformer (BST) to classify player stroke-types in singles. To the best of our knowledge, experimental results demonstrate that our method outperforms the previous state-of-the-art on the largest publicly available badminton video dataset, ShuttleSet, which shows that effectively leveraging ball trajectory is likely to be a trend for racket sports action recognition.

TrackNetV4: Enhancing Fast Sports Object Tracking with Motion Attention Maps

Sep 22, 2024

Accurately detecting and tracking high-speed, small objects, such as balls in sports videos, is challenging due to factors like motion blur and occlusion. Although recent deep learning frameworks like TrackNetV1, V2, and V3 have advanced tennis ball and shuttlecock tracking, they often struggle in scenarios with partial occlusion or low visibility. This is primarily because these models rely heavily on visual features without explicitly incorporating motion information, which is crucial for precise tracking and trajectory prediction. In this paper, we introduce an enhancement to the TrackNet family by fusing high-level visual features with learnable motion attention maps through a motion-aware fusion mechanism, effectively emphasizing the moving ball's location and improving tracking performance. Our approach leverages frame differencing maps, modulated by a motion prompt layer, to highlight key motion regions over time. Experimental results on the tennis ball and shuttlecock datasets show that our method enhances the tracking performance of both TrackNetV2 and V3. We refer to our lightweight, plug-and-play solution, built on top of the existing TrackNet, as TrackNetV4.
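The idea of turning frame differences into attention maps and fusing them with visual features can be illustrated with a minimal NumPy sketch. This is not the TrackNetV4 implementation: the sigmoid modulation, the `slope` and `shift` parameters (which stand in for values a motion prompt layer would learn), and the element-wise `fuse` function are all assumptions chosen for illustration.

```python
import numpy as np

def motion_attention(frames, slope=10.0, shift=0.1):
    """Turn consecutive-frame differences into soft attention maps.

    frames: array of shape (T, H, W), grayscale values in [0, 1].
    slope, shift: hypothetical stand-ins for learnable parameters.
    Returns an array of shape (T - 1, H, W) with values in (0, 1).
    """
    frames = np.asarray(frames, dtype=np.float64)
    # Absolute difference between each frame and the previous one.
    diffs = np.abs(frames[1:] - frames[:-1])
    # Sigmoid modulation: large differences map close to 1, static pixels stay low.
    return 1.0 / (1.0 + np.exp(-slope * (diffs - shift)))

def fuse(features, attention):
    """Assumed fusion: element-wise weighting of visual features by attention."""
    return features * attention

# Toy clip: a one-pixel "ball" moving one column to the right per frame.
frames = np.zeros((3, 4, 4))
frames[0, 1, 1] = 1.0
frames[1, 1, 2] = 1.0
frames[2, 1, 3] = 1.0

attention = motion_attention(frames)
features = np.ones_like(attention)  # placeholder visual features
fused = fuse(features, attention)
```

In this toy clip the attention is high where the ball left or arrived and low on the static background, which is the effect the abstract describes as highlighting key motion regions.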

Pose2Trajectory: Using Transformers on Body Pose to Predict Tennis Player's Trajectory

Nov 07, 2024

Tracking the trajectory of tennis players can help camera operators in production. Predicting future movement enables cameras to automatically track and predict a player's future trajectory without human intervention. Predicting future human movement in the context of complex physical tasks is also intellectually satisfying. Swift advancements in sports analytics and the wide availability of videos for tennis have inspired us to propose a novel method called Pose2Trajectory, which predicts a tennis player's future trajectory as a sequence derived from their body joints' data and ball position. Demonstrating impressive accuracy, our approach capitalizes on body joint information to provide a comprehensive understanding of the human body's geometry and motion, thereby enhancing the prediction of the player's trajectory. We use an encoder-decoder Transformer architecture trained on the joints and trajectory information of the players with ball positions. The predicted sequence can provide information to help close-up cameras keep tracking the tennis player, following centroid coordinates. We generate a high-quality dataset from multiple videos to assist tennis player movement prediction using object detection and human pose estimation methods. It contains bounding boxes and joint information for tennis players and ball positions in singles tennis games. Our method shows promising results in predicting the tennis player's movement trajectory with different sequence prediction lengths using the joints and trajectory information with the ball position.

Deep Understanding of Soccer Match Videos

Jul 11, 2024

Soccer is one of the most popular sports worldwide, with live broadcasts frequently available for major matches. However, extracting detailed, frame-by-frame information on player actions from these videos remains a challenge. Utilizing state-of-the-art computer vision technologies, our system can detect key objects such as soccer balls, players and referees. It also tracks the movements of players and the ball, recognizes player numbers, classifies scenes, and identifies highlights such as goal kicks. By analyzing live TV streams of soccer matches, our system can generate highlight GIFs, tactical illustrations, and diverse summary graphs of ongoing games. Through these visual recognition techniques, we deliver a comprehensive understanding of soccer game videos, enriching the viewer's experience with detailed and insightful analysis.

Widely Applicable Strong Baseline for Sports Ball Detection and Tracking

Nov 16, 2023

In this work, we present a novel Sports Ball Detection and Tracking (SBDT) method that can be applied to various sports categories. Our approach is composed of (1) high-resolution feature extraction, (2) position-aware model training, and (3) inference considering temporal consistency, all of which are put together as a new SBDT baseline. Besides, to validate the wide-applicability of our approach, we compare our baseline with 6 state-of-the-art SBDT methods on 5 datasets from different sports categories. We achieve this by newly introducing two SBDT datasets, providing new ball annotations for two datasets, and re-implementing all the methods to ease extensive comparison. Experimental results demonstrate that our approach is substantially superior to existing methods on all the sports categories covered by the datasets. We believe our proposed method can play as a Widely Applicable Strong Baseline (WASB) of SBDT, and our datasets and codebase will promote future SBDT research. Datasets and codes are available at https://github.com/nttcom/WASB-SBDT .

Improving Object Detection Quality in Football Through Super-Resolution Techniques

Jan 31, 2024

This study explores the potential of super-resolution techniques in enhancing object detection accuracy in football. Given the sport's fast-paced nature and the critical importance of precise object (e.g. ball, player) tracking for both analysis and broadcasting, super-resolution could offer significant improvements. We investigate how advanced image processing through super-resolution impacts the accuracy and reliability of object detection algorithms in processing football match footage. Our methodology involved applying state-of-the-art super-resolution techniques to a diverse set of football match videos from SoccerNet, followed by object detection using Faster R-CNN. The performance of these algorithms, both with and without super-resolution enhancement, was rigorously evaluated in terms of detection accuracy. The results indicate a marked improvement in object detection accuracy when super-resolution preprocessing is applied. The improvement of object detection through the integration of super-resolution techniques yields significant benefits, especially for low-resolution scenarios, with a notable 12% increase in mean Average Precision (mAP) at an IoU (Intersection over Union) range of 0.50:0.95 for 320x240 size images when increasing the resolution fourfold using RLFN. As the dimensions increase, the magnitude of improvement becomes more subdued; however, a discernible improvement in the quality of detection is consistently evident. Additionally, we discuss the implications of these findings for real-time sports analytics, player tracking, and the overall viewing experience. The study contributes to the growing field of sports technology by demonstrating the practical benefits and limitations of integrating super-resolution techniques in football analytics and broadcasting.
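For readers unfamiliar with the "IoU range of 0.50:0.95" notation, the sketch below computes IoU for two axis-aligned boxes and checks it against a sweep of thresholds. It assumes the COCO convention of a 0.05 step between thresholds, which the abstract does not state, and it is not a full mAP computation. The box format `(x1, y1, x2, y2)` and the helper `matched_fraction` are illustrative choices.

```python
def iou(box_a, box_b):
    """Intersection over Union for boxes given as (x1, y1, x2, y2)."""
    inter_w = max(0.0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    inter_h = max(0.0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    inter = inter_w * inter_h
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

# Assumed COCO-style sweep: 0.50, 0.55, ..., 0.95 (ten thresholds).
thresholds = [0.50 + 0.05 * i for i in range(10)]

def matched_fraction(pred, truth):
    """Fraction of thresholds at which the prediction counts as a match."""
    value = iou(pred, truth)
    return sum(value >= t for t in thresholds) / len(thresholds)

pred = (0.0, 0.0, 10.0, 10.0)
truth = (2.0, 0.0, 12.0, 10.0)
overlap = iou(pred, truth)               # 80 / 120, about 0.667
fraction = matched_fraction(pred, truth)  # matches at 0.50, 0.55, 0.60, 0.65
```

A detection that is offset by two pixels on a ten-pixel box already fails the stricter thresholds, which illustrates why small, low-resolution objects such as balls are penalized heavily under this metric.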

A Badminton Recognition and Tracking System Based on Context Multi-feature Fusion

Jun 26, 2023

Ball recognition and tracking have traditionally been the main focus of computer vision researchers as a crucial component of sports video analysis. Difficulties such as the small ball size, blurry appearance, and quick movements prevent many classic methods from performing well on ball detection and tracking. In this paper, we present a method for detecting and tracking badminton balls. According to the characteristics of different ball speeds, two trajectory clip trackers are designed based on different rules to capture the correct trajectory of the ball. Meanwhile, combining contextual information, two rounds of detection from coarse-grained to fine-grained are used to solve the challenges encountered in badminton detection. The experimental results show that the precision, recall, and F1-measure of our method reach 100%, 72.6%, and 84.1%, respectively, on data without occlusion.
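The reported figures are mutually consistent under the standard definition of the F1-measure as the harmonic mean of precision and recall. A short check, assuming that definition is the one used:

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (standard F1 definition)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)

# Precision and recall as reported in the abstract.
f1 = f1_score(1.0, 0.726)
print(f"{f1:.1%}")  # prints 84.1%
```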

Event Detection in Football using Graph Convolutional Networks

Jan 24, 2023

The massive growth of data collection in sports has opened numerous avenues for professional teams and media houses to gain insights from this data. The data collected includes per frame player and ball trajectories, and event annotations such as passes, fouls, cards, goals, etc. Graph Convolutional Networks (GCNs) have recently been employed to process this highly unstructured tracking data which can be otherwise difficult to model because of lack of clarity on how to order players in a sequence and how to handle missing objects of interest. In this thesis, we focus on the goal of automatic event detection from football videos. We show how to model the players and the ball in each frame of the video sequence as a graph, and present the results for graph convolutional layers and pooling methods that can be used to model the temporal context present around each action.
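The sketch below shows one way a single frame of tracking data could be modelled as a graph and passed through a graph convolutional layer. The thesis's actual edge construction, layers, and pooling are not given in the abstract, so the details here are assumptions: the radius-based edges, the symmetric degree normalization, and the mean pooling are all illustrative choices.

```python
import numpy as np

def build_frame_graph(positions, radius):
    """Connect every pair of objects closer than `radius` (assumed edge rule).

    positions: (N, 2) pitch coordinates of players and ball.
    Returns an (N, N) adjacency matrix; the diagonal is 1 (self-loops)
    because each node is at distance 0 from itself.
    """
    positions = np.asarray(positions, dtype=np.float64)
    deltas = positions[:, None, :] - positions[None, :, :]
    distances = np.linalg.norm(deltas, axis=-1)
    return (distances <= radius).astype(np.float64)

def gcn_layer(adjacency, features, weights):
    """One graph convolution: ReLU(D^-1/2 A D^-1/2 X W)."""
    degree = adjacency.sum(axis=1)  # at least 1 thanks to self-loops
    d_inv_sqrt = 1.0 / np.sqrt(degree)
    norm_adj = adjacency * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]
    return np.maximum(norm_adj @ features @ weights, 0.0)

def graph_embedding(node_features):
    """Mean pooling over nodes; the result ignores node order."""
    return node_features.mean(axis=0)

rng = np.random.default_rng(0)
positions = rng.uniform(0.0, 100.0, size=(5, 2))  # toy frame: 5 objects
weights = rng.normal(size=(2, 4))                 # untrained placeholder weights

adjacency = build_frame_graph(positions, radius=40.0)
embedding = graph_embedding(gcn_layer(adjacency, positions, weights))
```

Because the pooled embedding does not change when the rows of `positions` are shuffled, a graph formulation of this kind avoids having to decide how to order players in a sequence, and a missing object simply yields a graph with fewer nodes.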

Table Tennis Stroke Detection and Recognition Using Ball Trajectory Data

Feb 19, 2023

In this work, the novel task of detecting and classifying table tennis strokes solely using the ball trajectory has been explored. A single camera setup positioned in the umpire's view has been employed to procure a dataset consisting of six stroke classes executed by four professional table tennis players. Ball tracking using YOLOv4, a traditional object detection model, and TrackNetv2, a temporal heatmap based model, have been implemented on our dataset and their performances have been benchmarked. A mathematical approach developed to extract temporal boundaries of strokes using the ball trajectory data yielded a total of 2023 valid strokes in our dataset, while also detecting services and missed strokes successfully. The temporal convolutional network developed performed stroke recognition on completely unseen data with an accuracy of 87.155%. Several machine learning and deep learning based model architectures have been trained for stroke recognition using ball trajectory input and benchmarked based on their performances. While stroke recognition in the field of table tennis has been extensively explored based on human action recognition using video data focused on the player's actions, the use of ball trajectory data for the same is an unexplored characteristic of the sport. Hence, the motivation behind the work is to demonstrate that meaningful inferences such as stroke detection and recognition can be drawn using minimal input information.

A Comprehensive Review of Computer Vision in Sports: Open Issues, Future Trends and Research Directions

Mar 23, 2022

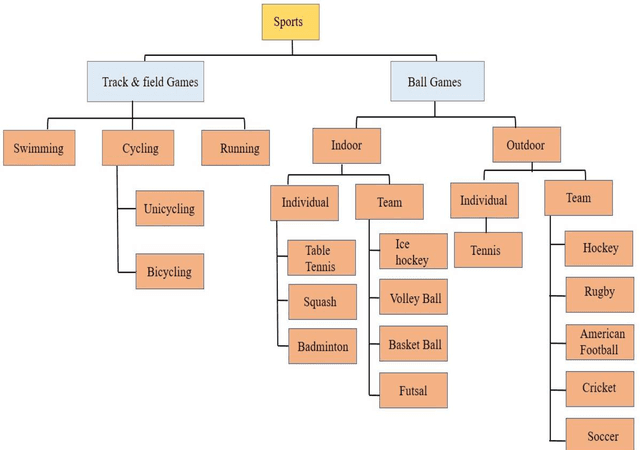

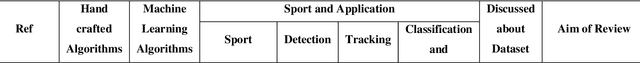

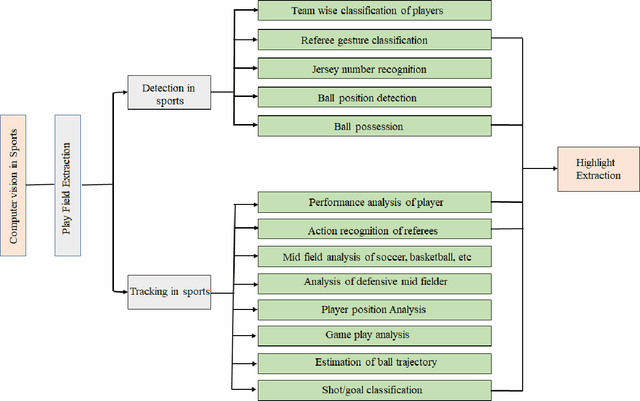

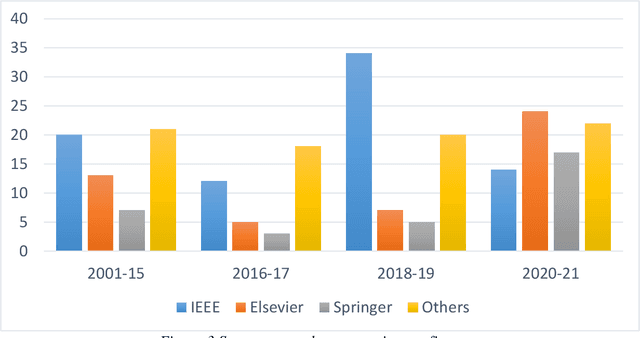

Recent developments in video analysis of sports and computer vision techniques have achieved significant improvements to enable a variety of critical operations. To provide enhanced information, such as detailed complex analysis in sports like soccer, basketball, cricket, badminton, etc., studies have focused mainly on computer vision techniques employed to carry out different tasks. This paper presents a comprehensive review of sports video analysis for various high-level applications, such as detecting and classifying players, tracking the player or ball, predicting the trajectories of the player or ball, recognizing teams' strategies, and classifying various events in sports. The paper further discusses published works in a variety of application-specific tasks related to sports and the present researchers' views regarding them. Since there is a wide research scope for deploying computer vision techniques in various sports, some of the publicly available datasets related to particular sports have been provided. This work also presents a detailed discussion of some artificial intelligence (AI) applications in sports vision, GPU-based workstations, and embedded platforms. Finally, this review identifies the research directions, probable challenges, and future trends in the area of visual recognition in sports.
