SketchGraphs

Papers and Code

DAVINCI: A Single-Stage Architecture for Constrained CAD Sketch Inference

Oct 30, 2024

This work presents DAVINCI, a unified architecture for single-stage Computer-Aided Design (CAD) sketch parameterization and constraint inference directly from raster sketch images. By jointly learning both outputs, DAVINCI minimizes error accumulation and enhances the performance of constrained CAD sketch inference. Notably, DAVINCI achieves state-of-the-art results on the large-scale SketchGraphs dataset, demonstrating effectiveness on both precise and hand-drawn raster CAD sketches. To reduce DAVINCI's reliance on large-scale annotated datasets, we explore the efficacy of CAD sketch augmentations. We introduce Constraint-Preserving Transformations (CPTs), i.e. random permutations of the parametric primitives of a CAD sketch that preserve its constraints. This data augmentation strategy allows DAVINCI to achieve reasonable performance when trained with only 0.1% of the SketchGraphs dataset. Furthermore, this work contributes a new version of SketchGraphs, augmented with CPTs. The newly introduced CPTSketchGraphs dataset includes 80 million CPT-augmented sketches, thus providing a rich resource for future research in the CAD sketch domain.
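The abstract describes CPTs only briefly, so the following is a minimal sketch of one literal reading: shuffle the order of the primitives and remap the indices stored in each constraint, so that every constraint still relates the same primitives. The function name `permute_primitives` and the tuple-based representation are assumptions made for illustration; the transformations actually used by DAVINCI may differ.

```python
import random


def permute_primitives(primitives, constraints, rng):
    """Shuffle primitive order and remap constraint references accordingly.

    primitives:  list of arbitrary primitive descriptions
    constraints: list of (kind, (index, ...)) tuples referring to primitives
    rng:         a random.Random instance, for reproducibility
    (Hypothetical representation, not taken from the paper.)
    """
    order = list(range(len(primitives)))
    rng.shuffle(order)  # order[new_position] = old_position
    old_to_new = {old: new for new, old in enumerate(order)}

    new_primitives = [primitives[old] for old in order]
    new_constraints = [
        (kind, tuple(old_to_new[i] for i in refs)) for kind, refs in constraints
    ]
    return new_primitives, new_constraints


primitives = ["line_a", "line_b", "circle_c"]
constraints = [("perpendicular", (0, 1)), ("tangent", (1, 2))]

aug_primitives, aug_constraints = permute_primitives(
    primitives, constraints, random.Random(0)
)
```

Under this reading, each augmented sketch is a different ordering of the same constrained geometry, which is what lets a single annotated sketch yield several training examples.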

PICASSO: A Feed-Forward Framework for Parametric Inference of CAD Sketches via Rendering Self-Supervision

Jul 18, 2024

We propose PICASSO, a novel framework for CAD sketch parameterization from hand-drawn or precise sketch images via rendering self-supervision. Given a drawing of a CAD sketch, the proposed framework turns it into parametric primitives that can be imported into CAD software. Compared to existing methods, PICASSO enables the learning of parametric CAD sketches from either precise or hand-drawn sketch images, even in cases where annotations at the parameter level are scarce or unavailable. This is achieved by leveraging the geometric characteristics of sketches as a learning cue to pre-train a CAD parameterization network. Specifically, PICASSO comprises two primary components: (1) a Sketch Parameterization Network (SPN) that predicts a series of parametric primitives from CAD sketch images, and (2) a Sketch Rendering Network (SRN) that renders parametric CAD sketches in a differentiable manner. SRN facilitates the computation of an image-to-image loss, which can be utilized to pre-train SPN, thereby enabling zero- and few-shot learning scenarios for the parameterization of hand-drawn sketches. Extensive evaluation on the widely used SketchGraphs dataset validates the effectiveness of the proposed framework.

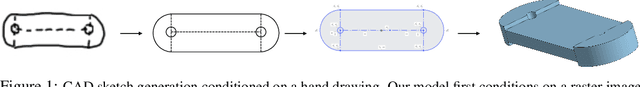

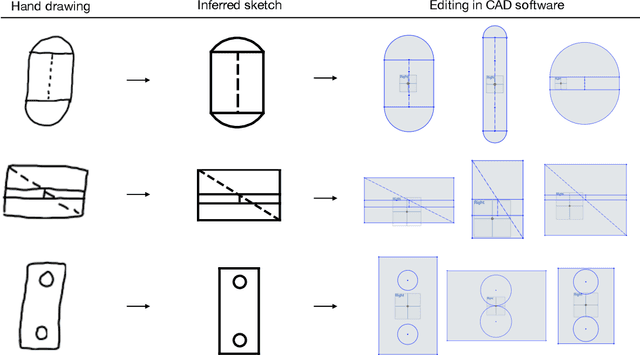

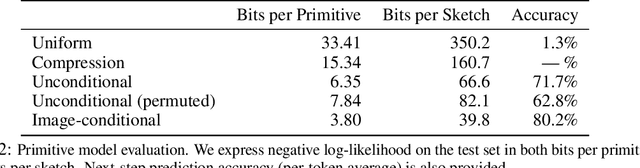

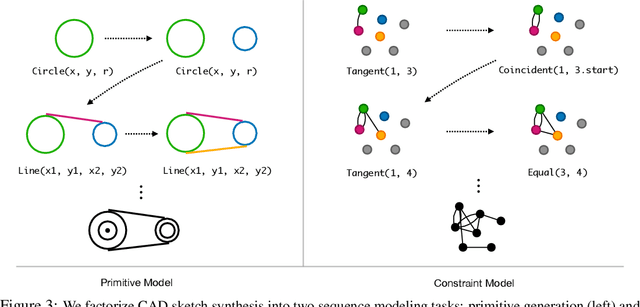

Vitruvion: A Generative Model of Parametric CAD Sketches

Sep 29, 2021

Parametric computer-aided design (CAD) tools are the predominant way that engineers specify physical structures, from bicycle pedals to airplanes to printed circuit boards. The key characteristic of parametric CAD is that design intent is encoded not only via geometric primitives, but also by parameterized constraints between the elements. This relational specification can be viewed as the construction of a constraint program, allowing edits to coherently propagate to other parts of the design. Machine learning offers the intriguing possibility of accelerating the design process via generative modeling of these structures, enabling new tools such as autocompletion, constraint inference, and conditional synthesis. In this work, we present such an approach to generative modeling of parametric CAD sketches, which constitute the basic computational building blocks of modern mechanical design. Our model, trained on real-world designs from the SketchGraphs dataset, autoregressively synthesizes sketches as sequences of primitives, with initial coordinates, and constraints that reference back to the sampled primitives. As samples from the model match the constraint graph representation used in standard CAD software, they may be directly imported, solved, and edited according to downstream design tasks. In addition, we condition the model on various contexts, including partial sketches (primers) and images of hand-drawn sketches. Evaluation of the proposed approach demonstrates its ability to synthesize realistic CAD sketches and its potential to aid the mechanical design workflow.
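To make the phrase "constraints that reference back to the sampled primitives" concrete, here is a small sketch of a sequence in which every constraint may only point at primitives that appear earlier. The token layout and the helper `check_back_references` are hypothetical and are not the Vitruvion tokenization.

```python
def check_back_references(sequence):
    """Return True if every constraint refers only to earlier primitives.

    Each token is (token_type, kind, payload). For a "primitive" the payload
    holds coordinates; for a "constraint" it holds primitive indices.
    (Hypothetical token format, for illustration only.)
    """
    seen = 0  # number of primitives emitted so far
    for token_type, kind, payload in sequence:
        if token_type == "primitive":
            seen += 1
        elif token_type == "constraint":
            if any(not 0 <= ref < seen for ref in payload):
                return False
        else:
            raise ValueError(f"unknown token type: {token_type!r}")
    return True


valid = [
    ("primitive", "line", (0.0, 0.0, 1.0, 0.0)),
    ("primitive", "line", (1.0, 0.0, 1.0, 1.0)),
    ("constraint", "perpendicular", (0, 1)),
]
invalid = [
    ("primitive", "line", (0.0, 0.0, 1.0, 0.0)),
    ("constraint", "coincident", (0, 1)),  # index 1 has not been emitted yet
]
```

A sequence that passes this check can be turned into a constraint graph without dangling references, which is the property that lets samples be handed to a constraint solver.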

SketchGraphs: A Large-Scale Dataset for Modeling Relational Geometry in Computer-Aided Design

Jul 16, 2020

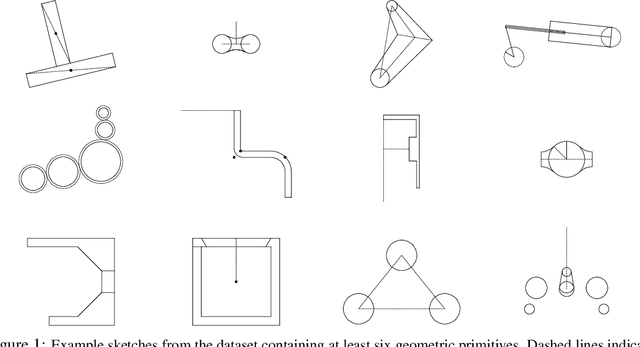

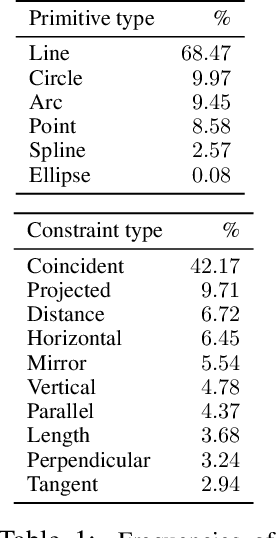

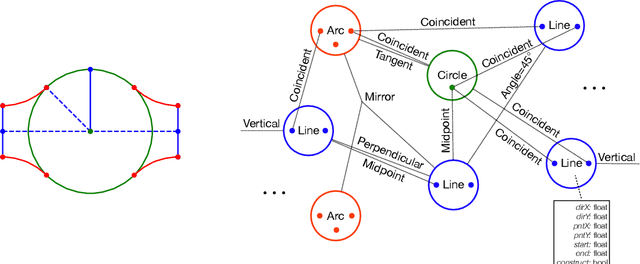

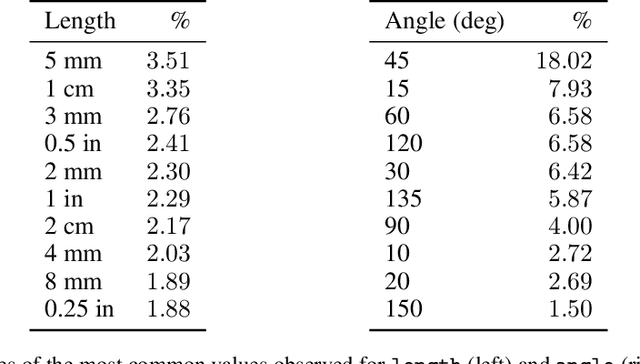

Parametric computer-aided design (CAD) is the dominant paradigm in mechanical engineering for physical design. Distinguished by relational geometry, parametric CAD models begin as two-dimensional sketches consisting of geometric primitives (e.g., line segments, arcs) and explicit constraints between them (e.g., coincidence, perpendicularity) that form the basis for three-dimensional construction operations. Training machine learning models to reason about and synthesize parametric CAD designs has the potential to reduce design time and enable new design workflows. Additionally, parametric CAD designs can be viewed as instances of constraint programming and they offer a well-scoped test bed for exploring ideas in program synthesis and induction. To facilitate this research, we introduce SketchGraphs, a collection of 15 million sketches extracted from real-world CAD models coupled with an open-source data processing pipeline. Each sketch is represented as a geometric constraint graph where edges denote designer-imposed geometric relationships between primitives, the nodes of the graph. We demonstrate and establish benchmarks for two use cases of the dataset: generative modeling of sketches and conditional generation of likely constraints given unconstrained geometry.
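The graph view described above, with primitives as nodes and designer-imposed constraints as edges, can be sketched with a few plain Python classes. The names `Primitive`, `Constraint`, and `SketchGraph` are hypothetical and do not correspond to the API of the SketchGraphs data processing pipeline.

```python
from dataclasses import dataclass, field


@dataclass
class Primitive:
    kind: str     # e.g. "line" or "arc"
    params: dict  # numeric parameters of the primitive


@dataclass
class Constraint:
    kind: str       # e.g. "coincident" or "perpendicular"
    members: tuple  # indices of the primitives it relates


@dataclass
class SketchGraph:
    nodes: list = field(default_factory=list)  # primitives
    edges: list = field(default_factory=list)  # constraints

    def add_primitive(self, kind, **params):
        self.nodes.append(Primitive(kind, params))
        return len(self.nodes) - 1  # index used by constraints

    def add_constraint(self, kind, *members):
        for m in members:
            if not 0 <= m < len(self.nodes):
                raise IndexError(f"no primitive with index {m}")
        self.edges.append(Constraint(kind, tuple(members)))

    def neighbors(self, index):
        """Indices of primitives sharing at least one constraint with `index`."""
        found = set()
        for c in self.edges:
            if index in c.members:
                found.update(m for m in c.members if m != index)
        return sorted(found)


sketch = SketchGraph()
a = sketch.add_primitive("line", start=(0.0, 0.0), end=(1.0, 0.0))
b = sketch.add_primitive("line", start=(1.0, 0.0), end=(1.0, 1.0))
c = sketch.add_primitive("arc", center=(0.5, 0.5), radius=0.25)
sketch.add_constraint("coincident", a, b)
sketch.add_constraint("perpendicular", a, b)
```

Storing constraint members as a tuple rather than a pair leaves room for constraints that involve one primitive or more than two, which a strict pairwise edge could not express.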
