Djamila Aouada

Annotation Free Spacecraft Detection and Segmentation using Vision Language Models

Feb 04, 2026

Abstract:Vision Language Models (VLMs) have demonstrated remarkable performance in open-world zero-shot visual recognition. However, their potential in space-related applications remains largely unexplored. In the space domain, accurate manual annotation is particularly challenging due to factors such as low visibility, illumination variations, and object blending with planetary backgrounds. Developing methods that can detect and segment spacecraft and orbital targets without requiring extensive manual labeling is therefore of critical importance. In this work, we propose an annotation-free detection and segmentation pipeline for space targets using VLMs. Our approach begins by automatically generating pseudo-labels for a small subset of unlabeled real data with a pre-trained VLM. These pseudo-labels are then leveraged in a teacher-student label distillation framework to train lightweight models. Despite the inherent noise in the pseudo-labels, the distillation process leads to substantial performance gains over direct zero-shot VLM inference. Experimental evaluations on the SPARK-2024, SPEED+, and TANGO datasets on segmentation tasks demonstrate consistent improvements in average precision (AP) by up to 10 points. Code and models are available at https://github.com/giddyyupp/annotation-free-spacecraft-segmentation.

Training Free Zero-Shot Visual Anomaly Localization via Diffusion Inversion

Jan 12, 2026

Abstract:Zero-Shot image Anomaly Detection (ZSAD) aims to detect and localise anomalies without access to any normal training samples of the target data. While recent ZSAD approaches leverage additional modalities such as language to generate fine-grained prompts for localisation, vision-only methods remain limited to image-level classification, lacking spatial precision. In this work, we introduce a simple yet effective training-free vision-only ZSAD framework that circumvents the need for fine-grained prompts by leveraging the inversion of a pretrained Denoising Diffusion Implicit Model (DDIM). Specifically, given an input image and a generic text description (e.g., "an image of an [object class]"), we invert the image to obtain latent representations and initiate the denoising process from a fixed intermediate timestep to reconstruct the image. Since the underlying diffusion model is trained solely on normal data, this process yields a normal-looking reconstruction. The discrepancy between the input image and the reconstructed one highlights potential anomalies. Our method achieves state-of-the-art performance on the VISA dataset, demonstrating strong localisation capabilities without auxiliary modalities and facilitating a shift away from prompt dependence for zero-shot anomaly detection research. Code is available at https://github.com/giddyyupp/DIVAD.
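The abstract's last step, turning the discrepancy between the input and its reconstruction into an anomaly map, can be illustrated with a minimal sketch. The abstract does not specify how the discrepancy is measured, so the per-pixel mean absolute difference used here is an assumption, as are the function names `anomaly_map` and `image_score` and the nested-list image layout. The DDIM inversion and reconstruction themselves are not shown.

```python
def anomaly_map(image, reconstruction):
    """Return a per-pixel anomaly score map.

    Both inputs are H x W x C nested lists of floats (a hypothetical layout).
    The score is the mean absolute difference over channels, which is an
    assumed discrepancy measure, not necessarily the one used in the paper.
    """
    if len(image) != len(reconstruction):
        raise ValueError("image and reconstruction must have the same height")
    scores = []
    for row_in, row_rec in zip(image, reconstruction):
        if len(row_in) != len(row_rec):
            raise ValueError("image and reconstruction must have the same width")
        scores.append([
            sum(abs(a - b) for a, b in zip(px_in, px_rec)) / len(px_in)
            for px_in, px_rec in zip(row_in, row_rec)
        ])
    return scores


def image_score(score_map):
    """Image-level score taken as the maximum pixel score (one common choice)."""
    return max(max(row) for row in score_map)


# A 1 x 2 RGB image whose second pixel differs from its reconstruction.
image = [[[0.0, 0.0, 0.0], [1.0, 1.0, 1.0]]]
reconstruction = [[[0.0, 0.0, 0.0], [0.5, 0.5, 0.5]]]
score_map = anomaly_map(image, reconstruction)
```

Pixels that the reconstruction reproduces faithfully score near zero, while pixels that the normal-only model "repairs" receive high scores.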

VLMDiff: Leveraging Vision-Language Models for Multi-Class Anomaly Detection with Diffusion

Nov 11, 2025

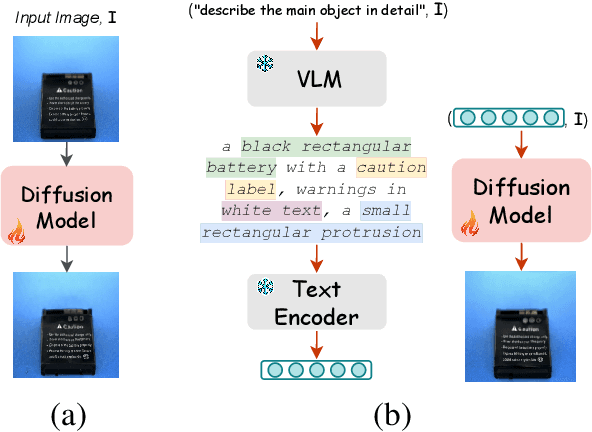

Abstract:Detecting visual anomalies in diverse, multi-class real-world images is a significant challenge. We introduce VLMDiff, a novel unsupervised multi-class visual anomaly detection framework. It integrates a Latent Diffusion Model (LDM) with a Vision-Language Model (VLM) for enhanced anomaly localization and detection. Specifically, a pre-trained VLM with a simple prompt extracts detailed image descriptions, serving as additional conditioning for LDM training. Current diffusion-based methods rely on synthetic noise generation, limiting their generalization and requiring per-class model training, which hinders scalability. VLMDiff, however, leverages VLMs to obtain normal captions without manual annotations or additional training. These descriptions condition the diffusion model, learning a robust normal image feature representation for multi-class anomaly detection. Our method achieves competitive performance, improving the pixel-level Per-Region-Overlap (PRO) metric by up to 25 points on the Real-IAD dataset and 8 points on the COCO-AD dataset, outperforming state-of-the-art diffusion-based approaches. Code is available at https://github.com/giddyyupp/VLMDiff.

Zero-Shot Anomaly Detection in Battery Thermal Images Using Visual Question Answering with Prior Knowledge

May 22, 2025

Abstract:Batteries are essential for various applications, including electric vehicles and renewable energy storage, making safety and efficiency critical concerns. Anomaly detection in battery thermal images helps identify failures early, but traditional deep learning methods require extensive labeled data, which is difficult to obtain, especially for anomalies due to safety risks and high data collection costs. To overcome this, we explore zero-shot anomaly detection using Visual Question Answering (VQA) models, which leverage pretrained knowledge and text-based prompts to generalize across vision tasks. By incorporating prior knowledge of normal battery thermal behavior, we design prompts to detect anomalies without battery-specific training data. We evaluate three VQA models (ChatGPT-4o, LLaVa-13b, and BLIP-2), analyzing their robustness to prompt variations, repeated trials, and qualitative outputs. Despite the lack of fine-tuning on battery data, our approach demonstrates competitive performance compared to state-of-the-art models that are trained on battery data. Our findings highlight the potential of VQA-based zero-shot learning for battery anomaly detection and suggest future directions for improving its effectiveness.

Domain Adaptation for Multi-label Image Classification: a Discriminator-free Approach

May 20, 2025

Abstract:This paper introduces a discriminator-free adversarial-based approach termed DDA-MLIC for Unsupervised Domain Adaptation (UDA) in the context of Multi-Label Image Classification (MLIC). While recent efforts have explored adversarial-based UDA methods for MLIC, they typically include an additional discriminator subnet. Nevertheless, decoupling the classification and the discrimination tasks may harm their task-specific discriminative power. Herein, we address this challenge by presenting a novel adversarial critic directly derived from the task-specific classifier. Specifically, we employ a two-component Gaussian Mixture Model (GMM) to model both source and target predictions, distinguishing between two distinct clusters. Instead of using the traditional Expectation Maximization (EM) algorithm, our approach utilizes a Deep Neural Network (DNN) to estimate the parameters of each GMM component. Subsequently, the source and target GMM parameters are leveraged to formulate an adversarial loss using the Fréchet distance. The proposed framework is therefore not only fully differentiable but is also cost-effective as it avoids the expensive iterative process usually induced by the standard EM method. The proposed method is evaluated on several multi-label image datasets covering three different types of domain shift. The obtained results demonstrate that DDA-MLIC outperforms existing state-of-the-art methods in terms of precision while requiring a lower number of parameters. The code is made publicly available at github.com/cvi2snt/DDA-MLIC.
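For readers unfamiliar with the Fréchet distance mentioned above, the sketch below computes its closed form for two univariate Gaussians, where the squared distance is the sum of the squared mean difference and the squared standard-deviation difference. The abstract does not say how the two GMM components of source and target are combined into the loss, so the component-wise sum in `gmm_discrepancy` is purely an assumption for illustration, and both function names are hypothetical.

```python
def frechet_distance_sq(mu1, sigma1, mu2, sigma2):
    """Squared Fréchet distance between N(mu1, sigma1^2) and N(mu2, sigma2^2).

    For univariate Gaussians this has the closed form
    (mu1 - mu2)^2 + (sigma1 - sigma2)^2.
    """
    return (mu1 - mu2) ** 2 + (sigma1 - sigma2) ** 2


def gmm_discrepancy(source, target):
    """Sum the squared Fréchet distance over matched GMM components.

    `source` and `target` are lists of (mean, std) pairs in the same
    component order. Matching and summing components this way is an
    assumption, not the formulation confirmed by the abstract.
    """
    if len(source) != len(target):
        raise ValueError("source and target must have the same number of components")
    return sum(
        frechet_distance_sq(ms, ss, mt, st)
        for (ms, ss), (mt, st) in zip(source, target)
    )


# Two-component models of prediction scores (values are made up).
source_gmm = [(0.1, 0.05), (0.9, 0.05)]
target_gmm = [(0.2, 0.05), (0.7, 0.15)]
discrepancy = gmm_discrepancy(source_gmm, target_gmm)
```

Because the expression involves only means and standard deviations, it stays differentiable with respect to parameters predicted by a network, which is consistent with the abstract's point about avoiding iterative EM.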

MultiMAE Meets Earth Observation: Pre-training Multi-modal Multi-task Masked Autoencoders for Earth Observation Tasks

May 20, 2025

Abstract:Multi-modal data in Earth Observation (EO) presents a huge opportunity for improving transfer learning capabilities when pre-training deep learning models. Unlike prior work that often overlooks multi-modal EO data, recent methods have started to include it, resulting in more effective pre-training strategies. However, existing approaches commonly face challenges in effectively transferring learning to downstream tasks where the structure of available data differs from that used during pre-training. This paper addresses this limitation by exploring a more flexible multi-modal, multi-task pre-training strategy for EO data. Specifically, we adopt a Multi-modal Multi-task Masked Autoencoder (MultiMAE) that we pre-train by reconstructing diverse input modalities, including spectral, elevation, and segmentation data. The pre-trained model demonstrates robust transfer learning capabilities, outperforming state-of-the-art methods on various EO datasets for classification and segmentation tasks. Our approach exhibits significant flexibility, handling diverse input configurations without requiring modality-specific pre-trained models. Code will be available at: https://github.com/josesosajs/multimae-meets-eo.

Uncertainty-Aware Knowledge Distillation for Compact and Efficient 6DoF Pose Estimation

Mar 17, 2025

Abstract:Compact and efficient 6DoF object pose estimation is crucial in applications such as robotics, augmented reality, and space autonomous navigation systems, where lightweight models are critical for real-time accurate performance. This paper introduces a novel uncertainty-aware end-to-end Knowledge Distillation (KD) framework focused on keypoint-based 6DoF pose estimation. Keypoints predicted by a large teacher model exhibit varying levels of uncertainty that can be exploited within the distillation process to enhance the accuracy of the student model while ensuring its compactness. To this end, we propose a distillation strategy that aligns the student and teacher predictions by adjusting the knowledge transfer based on the uncertainty associated with each teacher keypoint prediction. Additionally, the proposed KD leverages this uncertainty-aware alignment of keypoints to transfer the knowledge at key locations of their respective feature maps. Experiments on the widely-used LINEMOD benchmark demonstrate the effectiveness of our method, achieving superior 6DoF object pose estimation with lightweight models compared to state-of-the-art approaches. Further validation on the SPEED+ dataset for spacecraft pose estimation highlights the robustness of our approach under diverse 6DoF pose estimation scenarios.
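The idea of adjusting knowledge transfer by teacher uncertainty can be pictured with a small sketch. The abstract gives no formula, so everything below is an assumption: the weighting `1 / (1 + u)`, the normalised weighted squared-error loss, and the name `uncertainty_weighted_kd_loss` are illustrative choices only, meant to show how confident teacher keypoints could count more than uncertain ones.

```python
def uncertainty_weighted_kd_loss(student_kpts, teacher_kpts, teacher_uncertainty):
    """Weighted mean squared distance between student and teacher keypoints.

    student_kpts, teacher_kpts: lists of (x, y) keypoints in the same order.
    teacher_uncertainty: one non-negative value per teacher keypoint.
    Each keypoint is weighted by 1 / (1 + u), an assumed weighting that
    down-weights uncertain teacher predictions.
    """
    if not (len(student_kpts) == len(teacher_kpts) == len(teacher_uncertainty)):
        raise ValueError("all inputs must have one entry per keypoint")
    weights = [1.0 / (1.0 + u) for u in teacher_uncertainty]
    sq_errors = [
        (sx - tx) ** 2 + (sy - ty) ** 2
        for (sx, sy), (tx, ty) in zip(student_kpts, teacher_kpts)
    ]
    return sum(w * e for w, e in zip(weights, sq_errors)) / sum(weights)


student = [(0.0, 0.0), (1.0, 1.0)]
teacher = [(0.0, 0.0), (3.0, 1.0)]

# Equal uncertainties reduce to the plain mean squared distance.
uniform_loss = uncertainty_weighted_kd_loss(student, teacher, [0.0, 0.0])
# A high uncertainty on the second teacher keypoint shrinks its contribution.
weighted_loss = uncertainty_weighted_kd_loss(student, teacher, [0.0, 1.0])
```

In this toy case the mismatched keypoint is also the uncertain one, so the weighted loss is smaller than the uniform one; the student is penalised less for disagreeing with a teacher prediction that is itself unreliable.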

Removing Geometric Bias in One-Class Anomaly Detection with Adaptive Feature Perturbation

Mar 07, 2025

Abstract:One-class anomaly detection aims to detect objects that do not belong to a predefined normal class. In practice, training data lack those anomalous samples; hence, state-of-the-art methods are trained to discriminate between normal and synthetically-generated pseudo-anomalous data. Most methods use data augmentation techniques on normal images to simulate anomalies. However, the best-performing ones implicitly leverage a geometric bias present in the benchmarking datasets. This limits their usability in more general conditions. Others rely on basic noising schemes that may be suboptimal in capturing the underlying structure of normal data. In addition, most still favour the image domain to generate pseudo-anomalies, training models end-to-end from only the normal class and overlooking richer representations of the information. To overcome these limitations, we consider frozen yet rich feature spaces given by pretrained models and create pseudo-anomalous features with a novel adaptive linear feature perturbation technique. It adapts the noise distribution to each sample, applies decaying linear perturbations to feature vectors, and further guides the classification process using a contrastive learning objective. Experimental evaluation conducted on both standard and geometric bias-free datasets demonstrates the superiority of our approach with respect to comparable baselines. The codebase is accessible via our public repository.

When Unsupervised Domain Adaptation meets One-class Anomaly Detection: Addressing the Two-fold Unsupervised Curse by Leveraging Anomaly Scarcity

Feb 28, 2025

Abstract:This paper introduces the first fully unsupervised domain adaptation (UDA) framework for unsupervised anomaly detection (UAD). The performance of UAD techniques degrades significantly in the presence of a domain shift, which is difficult to avoid in a real-world setting. While UDA has contributed to solving this issue in binary and multi-class classification, such a strategy is ill-posed in UAD. This might be explained by the unsupervised nature of the two tasks, namely, domain adaptation and anomaly detection. Herein, we first formulate this problem that we call the two-fold unsupervised curse. Then, we propose a pioneering solution to this curse, considered intractable so far, by assuming that anomalies are rare. Specifically, we leverage clustering techniques to identify a dominant cluster in the target feature space. Posed as the normal cluster, the latter is aligned with the source normal features. Concretely, given a one-class source set and an unlabeled target set composed mostly of normal data and some anomalies, we fit the source features within a hypersphere while jointly aligning them with the features of the dominant cluster from the target set. The paper provides extensive experiments and analysis on common adaptation benchmarks for anomaly detection, demonstrating the relevance of both the newly introduced paradigm and the proposed approach. The code will be made publicly available.

Audio-visual Deepfake Detection With Local Temporal Inconsistencies

Jan 14, 2025

Abstract:This paper proposes an audio-visual deepfake detection approach that aims to capture fine-grained temporal inconsistencies between audio and visual modalities. To achieve this, both architectural and data synthesis strategies are introduced. From an architectural perspective, a temporal distance map, coupled with an attention mechanism, is designed to capture these inconsistencies while minimizing the impact of irrelevant temporal subsequences. Moreover, we explore novel pseudo-fake generation techniques to synthesize local inconsistencies. Our approach is evaluated against state-of-the-art methods using the DFDC and FakeAVCeleb datasets, demonstrating its effectiveness in detecting audio-visual deepfakes.
