SeaDronesSee

Papers and Code

SVGS-DSGAT: An IoT-Enabled Innovation in Underwater Robotic Object Detection Technology

Jan 21, 2025

With the advancement of Internet of Things (IoT) technology, underwater target detection and tracking have become increasingly important for ocean monitoring and resource management. Existing methods often fall short in handling high-noise and low-contrast images in complex underwater environments, lacking precision and robustness. This paper introduces a novel SVGS-DSGAT model that combines GraphSage, SVAM, and DSGAT modules, enhancing feature extraction and target detection capabilities through graph neural networks and attention mechanisms. The model integrates IoT technology to facilitate real-time data collection and processing, optimizing resource allocation and model responsiveness. Experimental results demonstrate that the SVGS-DSGAT model achieves an mAP of 40.8% on the URPC 2020 dataset and 41.5% on the SeaDronesSee dataset, significantly outperforming existing mainstream models. This IoT-enhanced approach not only excels in high-noise and complex backgrounds but also improves the overall efficiency and scalability of the system. This research provides an effective IoT solution for underwater target detection technology, offering significant practical application value and broad development prospects.

* 17 pages, 8 figures

ReIDTracker Sea: the technical report of BoaTrack and SeaDronesSee-MOT challenge at MaCVi of WACV24

Nov 12, 2023

Multi-Object Tracking is one of the most important technologies in maritime computer vision. Our solution explores Multi-Object Tracking in maritime Unmanned Aerial Vehicle (UAV) and Unmanned Surface Vehicle (USV) usage scenarios. Most current Multi-Object Tracking algorithms require complex association strategies and association information (2D location and motion, 3D motion, 3D depth, 2D appearance) to achieve better performance, which makes the entire tracking system extremely complex and heavy. At the same time, most current Multi-Object Tracking algorithms still require video annotation data for training, which is costly to obtain. Our solution explores Multi-Object Tracking in a completely unsupervised way. The scheme accomplishes instance representation learning by using self-supervision on ImageNet. Then, in cooperation with high-quality detectors, the multi-target tracking task can be completed simply and efficiently. The scheme achieved top-3 performance on both the UAV-based Multi-Object Tracking with Reidentification and the USV-based Multi-Object Tracking benchmarks, and the solution has won multiple Multi-Object Tracking competitions, such as BDD100K MOT, MOTS, and Waymo 2D MOT.
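The appearance-only association described above can be illustrated with a minimal sketch. It assumes every detection already carries an embedding vector from some self-supervised encoder (not shown here), and it uses greedy cosine-similarity matching for brevity; the report does not state that this exact procedure is used. The names `cosine`, `associate`, and the `min_sim` threshold are hypothetical.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)

def associate(tracks, detections, min_sim=0.5):
    """Greedily match detections to tracks by appearance similarity only.

    tracks: dict mapping track_id -> embedding (hypothetical format)
    detections: list of embeddings for the current frame
    Returns (matches, unmatched) where matches maps detection index -> track_id
    and unmatched lists detection indices that would start new tracks.
    """
    pairs = sorted(
        ((cosine(emb, det), tid, j)
         for tid, emb in tracks.items()
         for j, det in enumerate(detections)),
        key=lambda t: t[0],
        reverse=True,
    )
    matches, used_tracks = {}, set()
    for sim, tid, j in pairs:
        if sim < min_sim:
            break  # remaining pairs are even less similar
        if tid in used_tracks or j in matches:
            continue  # each track and each detection is used at most once
        matches[j] = tid
        used_tracks.add(tid)
    unmatched = [j for j in range(len(detections)) if j not in matches]
    return matches, unmatched

# Toy example: two existing tracks, three detections in the new frame.
tracks = {1: [1.0, 0.0], 2: [0.0, 1.0]}
detections = [[0.1, 0.9], [0.9, 0.1], [-1.0, -1.0]]
matches, unmatched = associate(tracks, detections)
print(matches, unmatched)
```

In this toy case the first two detections are assigned to the track with the closest embedding, and the third, which resembles neither track, is left unmatched. A production tracker would typically replace the greedy loop with an optimal assignment and handle track birth and death.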

Stable Yaw Estimation of Boats from the Viewpoint of UAVs and USVs

Jun 24, 2023

Yaw estimation of boats from the viewpoint of unmanned aerial vehicles (UAVs) and unmanned surface vehicles (USVs) or boats is a crucial task in various applications such as 3D scene rendering, trajectory prediction, and navigation. However, the lack of literature on yaw estimation of objects from the viewpoint of UAVs has motivated us to address this domain. In this paper, we propose a method based on HyperPosePDF for predicting the orientation of boats in the 6D space. For that, we use existing datasets, such as PASCAL3D+ and our own datasets, SeaDronesSee-3D and BOArienT, which we annotated manually. We extend HyperPosePDF to work in video-based scenarios, such that it yields robust orientation predictions across time. Naively applying HyperPosePDF on video data yields single-point predictions, resulting in far-off predictions and often incorrect symmetric orientations due to unseen or visually different data. To alleviate this issue, we propose aggregating the probability distributions of pose predictions, resulting in significantly improved performance, as shown in our experimental evaluation. Our proposed method could significantly benefit downstream tasks in marine robotics.
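The benefit of aggregating distributions rather than single-point predictions can be shown with a small sketch. It assumes each frame yields a discrete probability distribution over yaw bins, which is a simplification of what HyperPosePDF produces, and it fuses frames by summing log-probabilities per bin. That fusion rule is one simple choice made for illustration, not necessarily the authors' aggregation scheme. The function names, the bin count, and the `eps` constant are hypothetical.

```python
import math

def aggregate_yaw(frame_dists, eps=1e-9):
    """Fuse per-frame yaw distributions by summing log-probabilities per bin."""
    n_bins = len(frame_dists[0])
    log_scores = [0.0] * n_bins
    for dist in frame_dists:
        if len(dist) != n_bins:
            raise ValueError("all frames must use the same number of yaw bins")
        for i, p in enumerate(dist):
            log_scores[i] += math.log(p + eps)  # eps guards against log(0)
    # Convert summed log-scores back to a normalized distribution.
    top = max(log_scores)
    weights = [math.exp(s - top) for s in log_scores]
    total = sum(weights)
    return [w / total for w in weights]

def yaw_estimate_deg(dist):
    """Return the center (in degrees) of the most probable yaw bin."""
    n_bins = len(dist)
    best = max(range(n_bins), key=lambda i: dist[i])
    return (best + 0.5) * 360.0 / n_bins

# Toy example with four 90-degree bins; the second frame favors the
# 180-degree-flipped (symmetric) orientation.
frames = [
    [0.5, 0.1, 0.3, 0.1],
    [0.3, 0.1, 0.5, 0.1],
    [0.6, 0.1, 0.2, 0.1],
]
fused = aggregate_yaw(frames)
print([yaw_estimate_deg(f) for f in frames], yaw_estimate_deg(fused))
```

Taken alone, the second frame selects the flipped bin, while the fused distribution keeps the orientation supported by the other frames. This is the failure mode of per-frame point estimates that the abstract describes.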

1st Workshop on Maritime Computer Vision 2023: Challenge Results

Nov 28, 2022

The 1st Workshop on Maritime Computer Vision (MaCVi) 2023 focused on maritime computer vision for Unmanned Aerial Vehicles (UAVs) and Unmanned Surface Vehicles (USVs), and organized several subchallenges in this domain: (i) UAV-based Maritime Object Detection, (ii) UAV-based Maritime Object Tracking, (iii) USV-based Maritime Obstacle Segmentation and (iv) USV-based Maritime Obstacle Detection. The subchallenges were based on the SeaDronesSee and MODS benchmarks. This report summarizes the main findings of the individual subchallenges and introduces a new benchmark, called SeaDronesSee Object Detection v2, which extends the previous benchmark by including more classes and footage. We provide statistical and qualitative analyses, and assess trends in the best-performing methodologies of over 130 submissions. The methods are summarized in the appendix. The datasets, evaluation code and the leaderboard are publicly available at https://seadronessee.cs.uni-tuebingen.de/macvi.

SeaDronesSee: A Maritime Benchmark for Detecting Humans in Open Water

May 05, 2021

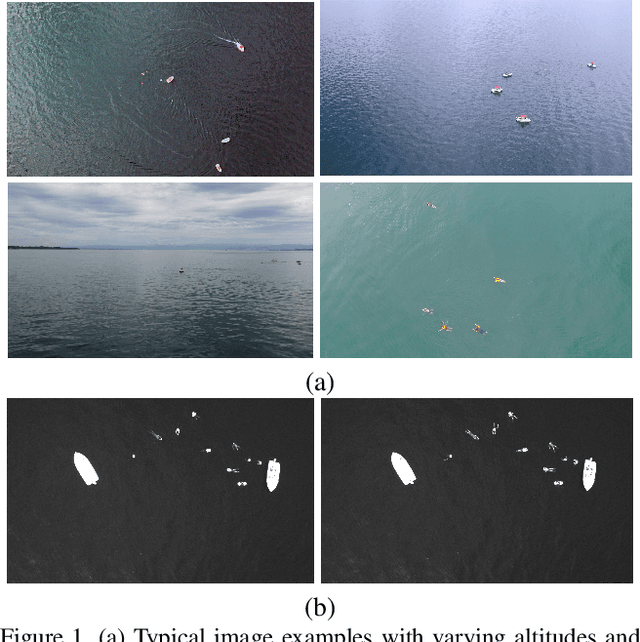

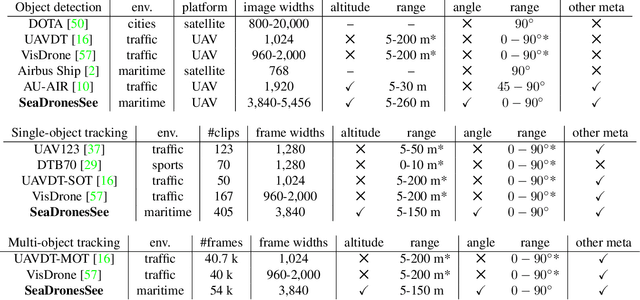

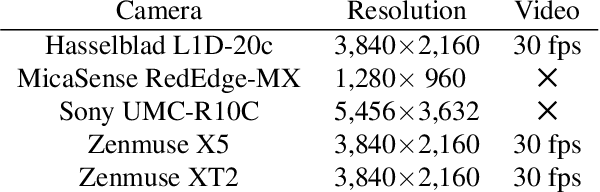

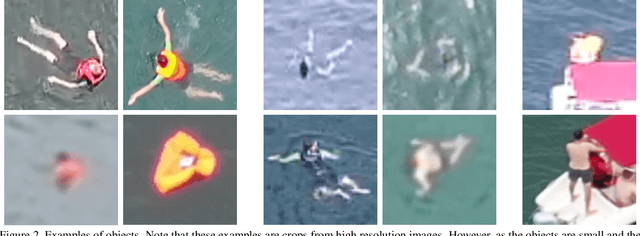

Unmanned Aerial Vehicles (UAVs) are of crucial importance in search and rescue missions in maritime environments due to their flexible and fast operation capabilities. Modern computer vision algorithms are of great interest in aiding such missions. However, they are dependent on large amounts of real-case training data from UAVs, which is only available for traffic scenarios on land. Moreover, current object detection and tracking data sets only provide limited environmental information or none at all, neglecting a valuable source of information. Therefore, this paper introduces a large-scale visual object detection and tracking benchmark (SeaDronesSee) aiming to bridge the gap from land-based vision systems to sea-based ones. We collect and annotate over 54,000 frames with 400,000 instances captured from various altitudes and viewing angles, ranging from 5 to 260 meters and 0 to 90 degrees, while providing the respective metadata for altitude, viewing angle and other attributes. We evaluate multiple state-of-the-art computer vision algorithms on this newly established benchmark to serve as baselines. We provide an evaluation server where researchers can upload their predictions and compare their results on a central leaderboard.
