Cyber Attack Detection

Cyber attack detection is the process of identifying and preventing cyber attacks on computer systems and networks.

Papers and Code

Tri-LLM Cooperative Federated Zero-Shot Intrusion Detection with Semantic Disagreement and Trust-Aware Aggregation

Jan 30, 2026

Federated learning (FL) has become an effective paradigm for privacy-preserving, distributed Intrusion Detection Systems (IDS) in cyber-physical and Internet of Things (IoT) networks, where centralized data aggregation is often infeasible due to privacy and bandwidth constraints. Despite its advantages, most existing FL-based IDS assume closed-set learning and lack mechanisms such as uncertainty estimation, semantic generalization, and explicit modeling of epistemic ambiguity in zero-day attack scenarios. Additionally, robustness to heterogeneous and unreliable clients remains a challenge in practical applications. This paper introduces a semantics-driven federated IDS framework that incorporates language-derived semantic supervision into federated optimization, enabling open-set and zero-shot intrusion detection for previously unseen attack behaviors. The approach constructs semantic attack prototypes using a Tri-LLM ensemble of GPT-4o, DeepSeek-V3, and LLaMA-3-8B, aligning distributed telemetry features with high-level attack concepts. Inter-LLM semantic disagreement is modeled as epistemic uncertainty for zero-day risk estimation, while a trust-aware aggregation mechanism dynamically weights client updates based on reliability. Experimental results show stable semantic alignment across heterogeneous clients and consistent convergence. The framework achieves over 80% zero-shot detection accuracy on unseen attack patterns, improving zero-day discrimination by more than 10% compared to similarity-based baselines, while maintaining low aggregation instability in the presence of unreliable or compromised clients.
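The two mechanisms named in this abstract can be illustrated with a minimal sketch. It is not the paper's implementation: it assumes disagreement is measured as mean pairwise cosine distance between the three LLM-derived prototype vectors, and that trust scores are given as non-negative numbers. The function names `semantic_disagreement` and `trust_weighted_average` are hypothetical.

```python
from math import sqrt


def cosine(a, b):
    """Cosine similarity between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = sqrt(sum(x * x for x in a))
    norm_b = sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def semantic_disagreement(prototypes):
    """Mean pairwise cosine distance between prototype vectors.

    Assumption: one prototype per LLM for the same attack concept.
    0.0 means all models agree; larger values mean more disagreement.
    """
    pairs = [
        (i, j)
        for i in range(len(prototypes))
        for j in range(i + 1, len(prototypes))
    ]
    return sum(1.0 - cosine(prototypes[i], prototypes[j]) for i, j in pairs) / len(pairs)


def trust_weighted_average(updates, trust):
    """Aggregate client update vectors, weighting each by its trust score."""
    total = sum(trust)
    dim = len(updates[0])
    return [sum(t * u[k] for t, u in zip(trust, updates)) / total for k in range(dim)]


if __name__ == "__main__":
    # Three hypothetical prototypes for one attack concept.
    protos = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
    print(semantic_disagreement(protos))

    # A low-trust client contributes less to the aggregate.
    print(trust_weighted_average([[1.0, 1.0], [3.0, 3.0]], trust=[3.0, 1.0]))
```

With equal trust scores the aggregate reduces to a plain average of the client updates, so the trust vector is the only thing that distinguishes this sketch from unweighted federated averaging.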

Securing Time in Energy IoT: A Clock-Dynamics-Aware Spatio-Temporal Graph Attention Network for Clock Drift Attacks and Y2K38 Failures

Jan 30, 2026

The integrity of time in distributed Internet of Things (IoT) devices is crucial for reliable operation in energy cyber-physical systems, such as smart grids and microgrids. However, IoT systems are vulnerable to clock drift, time-synchronization manipulation, and timestamp discontinuities, such as the Year 2038 (Y2K38) Unix overflow, all of which disrupt temporal ordering. Conventional anomaly-detection models, which assume reliable timestamps, fail to capture temporal inconsistencies. This paper introduces STGAT (Spatio-Temporal Graph Attention Network), a framework that models both temporal distortion and inter-device consistency in energy IoT systems. STGAT combines drift-aware temporal embeddings and temporal self-attention to capture corrupted time evolution at individual devices, and uses graph attention to model spatial propagation of timing errors. A curvature-regularized latent representation geometrically separates normal clock evolution from anomalies caused by drift, synchronization offsets, and overflow events. Experimental results on energy IoT telemetry with controlled timing perturbations show that STGAT achieves 95.7% accuracy, outperforming recurrent, transformer, and graph-based baselines with significant improvements (d > 1.8, p < 0.001). Additionally, STGAT reduces detection delay by 26%, achieving a 2.3-time-step delay while maintaining stable performance under overflow, drift, and physical inconsistencies.
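The Y2K38 discontinuity mentioned above is easy to reproduce. The sketch below is independent of STGAT: it only shows how a signed 32-bit Unix timestamp wraps around and how that breaks temporal ordering. The helper names `wrap_int32`, `to_utc`, and `find_backward_jumps` are illustrative.

```python
from datetime import datetime, timedelta, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)
INT32_MAX = 2**31 - 1  # largest value a signed 32-bit time_t can hold


def wrap_int32(value):
    """Interpret an integer as a two's-complement signed 32-bit value."""
    return (value + 2**31) % 2**32 - 2**31


def to_utc(seconds):
    """Convert seconds since the Unix epoch to an aware UTC datetime."""
    return EPOCH + timedelta(seconds=seconds)


def find_backward_jumps(timestamps):
    """Return the indices at which a timestamp is earlier than its predecessor."""
    return [i for i in range(1, len(timestamps)) if timestamps[i] < timestamps[i - 1]]


last_valid = to_utc(INT32_MAX)
after_overflow = to_utc(wrap_int32(INT32_MAX + 1))

if __name__ == "__main__":
    print(last_valid.isoformat())      # 2038-01-19T03:14:07+00:00
    print(after_overflow.isoformat())  # 1901-12-13T20:45:52+00:00

    # One second later, the device clock appears to jump back by about 136 years.
    readings = [INT32_MAX - 1, INT32_MAX, wrap_int32(INT32_MAX + 1)]
    print(find_backward_jumps(readings))  # [2]
```

A model that assumes monotonically increasing timestamps would see the third reading as the oldest sample in the series, which is the kind of ordering failure the abstract refers to.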

An Optimized Decision Tree-Based Framework for Explainable IoT Anomaly Detection

Jan 18, 2026

The growing number of Internet of Things (IoT) devices has greatly expanded the attack surface for cyber threats, making a strong intrusion detection system (IDS) that clearly explains its decisions essential in resource-constrained environments. However, current IoT IDS typically trade off detection quality, model explainability, and computational efficiency against one another, which hinders deployment on IoT devices. This paper addresses these difficulties by proposing an explainable AI (XAI) framework based on an optimized Decision Tree classifier combined with both local and global importance methods: SHAP values, which estimate feature attribution through local explanations, and Morris sensitivity analysis, which identifies feature importance from a global view. The proposed system attains state-of-the-art test performance, with 99.91% accuracy, an F1-score of 99.51%, and a Cohen's Kappa of 0.9960; a cross-validation mean accuracy of 98.93% confirms high stability. Computational efficiency is also improved, giving faster inference than ensemble models. Both the SHAP and Morris analyses identify SrcMac as the most significant predictor. Compared with previous work, the solution overcomes a major drawback by being deployable on edge devices, which enables real-time processing, adherence to new AI transparency regulations, and high detection rates across different attack classes. This combination of high accuracy, explainability, and low computational cost makes the framework a practical and reliable option for resource-constrained IoT security in real environments.
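Cohen's Kappa, reported alongside accuracy above, corrects observed agreement for the agreement expected by chance: kappa = (p_o - p_e) / (1 - p_e). The sketch below computes it from scratch on toy labels (not the paper's data) to show why it is more informative than accuracy on imbalanced intrusion data. The function name `cohen_kappa` is illustrative.

```python
from collections import Counter


def cohen_kappa(y_true, y_pred):
    """Cohen's Kappa for two equal-length label sequences.

    p_o is the observed agreement (plain accuracy); p_e is the agreement
    expected if predictions were drawn independently with the same
    per-class frequencies. Undefined when p_e == 1.
    """
    n = len(y_true)
    p_o = sum(t == p for t, p in zip(y_true, y_pred)) / n
    true_counts = Counter(y_true)
    pred_counts = Counter(y_pred)
    p_e = sum(true_counts[c] * pred_counts[c] for c in true_counts) / (n * n)
    return (p_o - p_e) / (1 - p_e)


if __name__ == "__main__":
    # Toy data: 9 benign samples (0) and 1 attack (1).
    y_true = [0] * 9 + [1]
    always_benign = [0] * 10

    # Predicting "benign" for everything is 90% accurate, yet Kappa
    # shows no agreement beyond chance.
    print(cohen_kappa(y_true, always_benign))
    print(cohen_kappa(y_true, y_true))
```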

Hybrid IDS Using Signature-Based and Anomaly-Based Detection

Jan 17, 2026

Intrusion detection systems (IDS) are essential for protecting computer systems and networks against a wide range of cyber threats that continue to evolve over time. IDS are commonly categorized into two main types, each with its own strengths and limitations, such as difficulty in detecting previously unseen attacks and the tendency to generate high false positive rates. This paper presents a comprehensive survey and a conceptual overview of Hybrid IDS, which integrate signature-based and anomaly-based detection techniques to enhance attack detection capabilities. The survey examines recent research on Hybrid IDS, classifies existing models into functional categories, and discusses their advantages, limitations, and application domains, including financial systems, air traffic control, and social networks. In addition, recent trends in Hybrid IDS research, such as machine learning-based approaches and cloud-based deployments, are reviewed. Finally, this work outlines potential future research directions aimed at developing more cost-effective Hybrid IDS solutions with improved ability to detect emerging and sophisticated cyberattacks.
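The hybrid idea described above can be sketched in a few lines: check traffic against known signatures first, then fall back to an anomaly score for anything the signatures miss. This is a toy illustration, not a model from the survey. The signature strings, the request-rate feature, and the z-score threshold of 3.0 are all assumptions chosen for the example.

```python
from statistics import mean, pstdev

# Hypothetical signature list; a real IDS would load a maintained rule set.
SIGNATURES = ("' OR 1=1", "../../etc/passwd")


def signature_match(payload):
    """Signature-based stage: exact substring match against known patterns."""
    return any(sig in payload for sig in SIGNATURES)


def anomaly_score(value, baseline):
    """Anomaly-based stage: absolute z-score of a value against a benign baseline."""
    mu = mean(baseline)
    sigma = pstdev(baseline)
    return abs(value - mu) / sigma if sigma > 0 else 0.0


def classify(payload, request_rate, baseline, threshold=3.0):
    """Combine both stages: known attacks first, then statistical outliers."""
    if signature_match(payload):
        return "known-attack"
    if anomaly_score(request_rate, baseline) > threshold:
        return "anomalous"
    return "benign"


if __name__ == "__main__":
    baseline_rates = [10, 12, 11, 9, 10, 8]  # requests per second under normal load
    print(classify("GET /index.html", 11, baseline_rates))
    print(classify("GET /?id=' OR 1=1", 11, baseline_rates))
    print(classify("GET /index.html", 50, baseline_rates))
```

The ordering reflects the trade-off the abstract names: the signature stage is precise but blind to unseen attacks, while the anomaly stage can flag unseen behavior at the cost of false positives when the threshold is too low.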

QUPID: A Partitioned Quantum Neural Network for Anomaly Detection in Smart Grid

Jan 16, 2026

Smart grid infrastructures have revolutionized energy distribution, but their day-to-day operations require robust anomaly detection methods to counter risks associated with cyber-physical threats and system faults potentially caused by natural disasters, equipment malfunctions, and cyber attacks. Conventional machine learning (ML) models are effective in several domains, yet they struggle to represent the complexities observed in smart grid systems. Furthermore, traditional ML models are highly susceptible to adversarial manipulations, making them increasingly unreliable for real-world deployment. Quantum ML (QML) provides a unique advantage, utilizing quantum-enhanced feature representations to model the intricacies of the high-dimensional nature of smart grid systems while demonstrating greater resilience to adversarial manipulation. In this work, we propose QUPID, a partitioned quantum neural network (PQNN) that outperforms traditional state-of-the-art ML models in anomaly detection. We extend our model to R-QUPID, which maintains its performance even when differential privacy (DP) is included for enhanced robustness. Moreover, our partitioning framework addresses a significant scalability problem in QML by efficiently distributing computational workloads, making quantum-enhanced anomaly detection practical in large-scale smart grid environments. Our experimental results across various scenarios demonstrate that QUPID and R-QUPID significantly improve anomaly detection capabilities and robustness compared to traditional ML approaches.

Cyberattack Detection in Virtualized Microgrids Using LightGBM and Knowledge-Distilled Classifiers

Jan 07, 2026

Modern microgrids depend on distributed sensing and communication interfaces, making them increasingly vulnerable to cyber-physical disturbances that threaten operational continuity and equipment safety. In this work, a complete virtual microgrid was designed and implemented in MATLAB/Simulink, integrating heterogeneous renewable sources and secondary controller layers. A structured cyberattack framework was developed using MGLib to inject adversarial signals directly into the secondary control pathways. Multiple attack classes were emulated, including ramp, sinusoidal, additive, coordinated stealth, and denial-of-service behaviors. The virtual environment was used to generate labeled datasets under both normal and attack conditions. The datasets were used to train Light Gradient Boosting Machine (LightGBM) models to perform two functions: detecting the presence of an intrusion (binary) and distinguishing among attack types (multiclass). The multiclass model attained 99.72% accuracy and a 99.62% F1 score, while the binary model attained 94.8% accuracy and a 94.3% F1 score. A knowledge-distillation step reduced the size of the multiclass model, allowing faster predictions with only a small drop in performance. Real-time tests showed a processing delay of about 54 to 67 ms per 1000 samples, demonstrating suitability for CPU-based edge deployment in microgrid controllers. The results confirm that lightweight machine-learning-based intrusion detection methods can provide fast, accurate, and efficient cyberattack detection without relying on complex deep learning models. Key contributions include: (1) development of a complete MATLAB-based virtual microgrid, (2) structured attack injection at the control layer, (3) creation of multiclass labeled datasets, and (4) design of low-cost AI models suitable for practical microgrid cybersecurity.
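Three of the attack classes listed above (additive, ramp, and sinusoidal) have simple closed forms, which makes labeled data easy to generate. The sketch below is written in Python for illustration and is not the MATLAB/Simulink or MGLib workflow the authors used. The function `inject`, its parameters, and the flat 50.0 test signal are hypothetical.

```python
import math

ATTACKS = ("additive", "ramp", "sinusoidal")


def inject(signal, attack, start, magnitude=1.0, period=20):
    """Return (corrupted_signal, labels) with an attack active from index `start`.

    additive:   constant bias of size `magnitude`
    ramp:       bias growing by `magnitude` per step
    sinusoidal: oscillating bias with amplitude `magnitude` and the given period
    Labels are 0 before the attack starts and 1 afterwards.
    """
    if attack not in ATTACKS:
        raise ValueError(f"unknown attack type: {attack}")
    corrupted = list(signal)
    labels = [0] * len(corrupted)
    for t in range(start, len(corrupted)):
        k = t - start  # steps elapsed since the attack began
        if attack == "additive":
            offset = magnitude
        elif attack == "ramp":
            offset = magnitude * k
        else:
            offset = magnitude * math.sin(2 * math.pi * k / period)
        corrupted[t] += offset
        labels[t] = 1
    return corrupted, labels


if __name__ == "__main__":
    clean = [50.0] * 8  # hypothetical flat control signal
    for name in ATTACKS:
        corrupted, labels = inject(clean, name, start=4, magnitude=0.5)
        print(name, corrupted, labels)
```

Pairing each corrupted sample with a label is what turns the simulation output into a supervised dataset for the binary and multiclass detectors described in the abstract.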

Engineering Attack Vectors and Detecting Anomalies in Additive Manufacturing

Jan 01, 2026

Additive manufacturing (AM) is rapidly integrating into critical sectors such as aerospace, automotive, and healthcare. However, this cyber-physical convergence introduces new attack surfaces, especially at the interface between computer-aided design (CAD) and machine execution layers. In this work, we investigate targeted cyberattacks on two widely used fused deposition modeling (FDM) systems, Creality's flagship model K1 Max, and Ender 3. Our threat model is a multi-layered Man-in-the-Middle (MitM) intrusion, where the adversary intercepts and manipulates G-code files during upload from the user interface to the printer firmware. The MitM intrusion chain enables several stealthy sabotage scenarios. These attacks remain undetectable by conventional slicer software or runtime interfaces, resulting in structurally defective yet externally plausible printed parts. To counter these stealthy threats, we propose an unsupervised Intrusion Detection System (IDS) that analyzes structured machine logs generated during live printing. Our defense mechanism uses a frozen Transformer-based encoder (a BERT variant) to extract semantic representations of system behavior, followed by a contrastively trained projection head that learns anomaly-sensitive embeddings. Later, a clustering-based approach and a self-attention autoencoder are used for classification. Experimental results demonstrate that our approach effectively distinguishes between benign and compromised executions.

Hybrid Ensemble Method for Detecting Cyber-Attacks in Water Distribution Systems Using the BATADAL Dataset

Dec 16, 2025

The cybersecurity of Industrial Control Systems that manage critical infrastructure such as Water Distribution Systems (WDS) has become increasingly important as digital connectivity expands. The BATADAL benchmark dataset is a good source for testing intrusion detection techniques, but it presents several important problems, such as class imbalance, multivariate time dependence, and stealthy attacks. We consider a hybrid ensemble learning model that enhances the detection of cyber-attacks in WDS by using the complementary capabilities of machine learning and deep learning models. Three base learners, namely Random Forest, eXtreme Gradient Boosting, and a Long Short-Term Memory network, were rigorously compared, along with seven ensemble types using simple averaging and stacked learning with a logistic regression meta-learner. Top predictors identified by Random Forest analysis were turned into temporal and statistical features, and the Synthetic Minority Oversampling Technique (SMOTE) was used to overcome the class imbalance issue. The analysis indicates that the single Long Short-Term Memory network model performs poorly (F1 = 0.000, AUC = 0.4460), but tree-based models, especially eXtreme Gradient Boosting, perform well (F1 = 0.7470, AUC = 0.9684). The hybrid stacked ensemble of Random Forest, eXtreme Gradient Boosting, and Long Short-Term Memory network scored the highest, with an attack-class F1-score of 0.7205 and an AUC of 0.9826, indicating that heterogeneous stacking can balance model precision and generalization. The proposed framework establishes a robust and scalable solution for cyber-attack detection in time-dependent industrial systems, integrating temporal learning and ensemble diversity to support the secure operation of critical infrastructure.
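The core step of SMOTE, which the abstract uses against class imbalance, is linear interpolation between a minority-class sample and one of its nearest minority-class neighbours. The sketch below shows only that step on toy 2-D points. It is a simplified illustration under assumed parameters (`k`, `seed`), not the authors' pipeline or a library implementation.

```python
import random


def squared_distance(a, b):
    """Squared Euclidean distance between two equal-length points."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def smote(minority, n_new, k=2, seed=0):
    """Generate `n_new` synthetic minority samples by neighbour interpolation.

    For each new sample: pick a minority point x, pick one of its k nearest
    minority neighbours nb, and return x + u * (nb - x) with u drawn
    uniformly from [0, 1). Requires at least two minority points.
    """
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        x = rng.choice(minority)
        others = [p for p in minority if p is not x]
        neighbours = sorted(others, key=lambda p: squared_distance(x, p))[:k]
        nb = rng.choice(neighbours)
        u = rng.random()
        synthetic.append([xi + u * (ni - xi) for xi, ni in zip(x, nb)])
    return synthetic


if __name__ == "__main__":
    # Toy attack-class samples; real inputs would be engineered sensor features.
    attacks = [[0.0, 0.0], [1.0, 1.0], [1.0, 0.0]]
    for point in smote(attacks, n_new=4):
        print(point)
```

Every synthetic point lies on a line segment between two real minority samples, so oversampling adds density to the attack class without inventing values outside its observed range.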

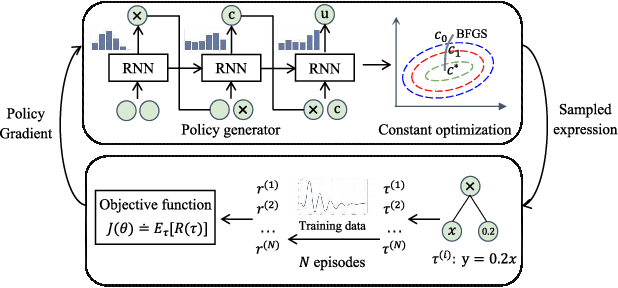

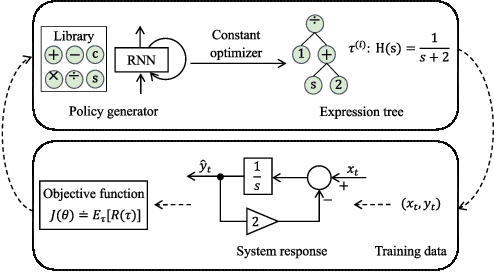

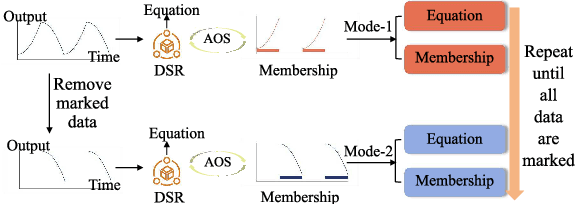

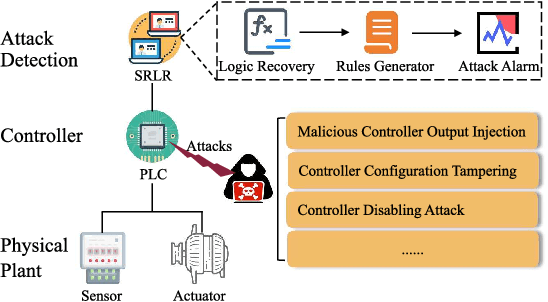

SRLR: Symbolic Regression based Logic Recovery to Counter Programmable Logic Controller Attacks

Dec 12, 2025

Programmable Logic Controllers (PLCs) are critical components in Industrial Control Systems (ICSs). Their potential exposure to the external world makes them susceptible to cyber-attacks. Existing detection methods against controller logic attacks use either specification-based or learnt models. However, specification-based models require experts' manual efforts or access to the PLC's source code, while machine learning-based models often fall short of providing explanations for their decisions. We design SRLR, a Symbolic Regression based Logic Recovery solution, to identify the logic of a PLC based only on its inputs and outputs. The recovered logic is used to generate explainable rules for detecting controller logic attacks. SRLR enhances the latest deep symbolic regression methods using the following ICS-specific properties: (1) some important ICS control logic is best represented in the frequency domain rather than the time domain; (2) an ICS controller can operate in multiple modes, each using different logic, where mode switches usually do not happen frequently; (3) a robust controller usually filters out outlier inputs as ICS sensor data can be noisy; and (4) with the above factors captured, the degree of complexity of the formulas is reduced, making effective search possible. Thanks to these enhancements, SRLR consistently outperforms all existing methods in a variety of ICS settings that we evaluate. In terms of recovery accuracy, SRLR's gain can be as high as 39% in some challenging environments. We also evaluate SRLR on a distribution grid containing hundreds of voltage regulators, demonstrating its stability in handling large-scale, complex systems with varied configurations.

* 27 pages, 20 figures. This article was accepted by IEEE Transactions on Information Forensics and Security. DOI: 10.1109/TIFS.2025.3634027

Intrusion Detection in Internet of Vehicles Using Machine Learning

Dec 16, 2025

The Internet of Vehicles (IoV) has transformed modern transportation through enhanced connectivity and intelligent systems. However, this increased connectivity introduces critical vulnerabilities, making vehicles susceptible to cyber-attacks such as Denial-of-Service (DoS) and message spoofing. This project aims to develop a machine learning-based intrusion detection system to classify malicious Controller Area Network (CAN) bus traffic using the CiCIoV2024 benchmark dataset. We analyzed various attack patterns, including DoS and spoofing attacks targeting critical vehicle parameters: Spoofing-GAS (gas pedal position), Spoofing-RPM, Spoofing-Speed, and Spoofing-Steering\_Wheel. Our initial findings confirm a multi-class classification problem with a clear structural difference between attack types and benign data, providing a strong foundation for machine learning models.
