Zhuoyun Li

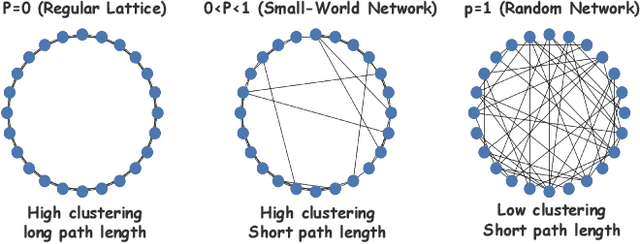

Rethinking Multi-Agent Intelligence Through the Lens of Small-World Networks

Dec 19, 2025

Abstract: Large language models (LLMs) have enabled multi-agent systems (MAS) in which multiple agents argue, critique, and coordinate to solve complex tasks, making communication topology a first-class design choice. Yet most existing LLM-based MAS adopt fully connected graphs, simple sparse rings, or ad-hoc dynamic selection, with little structural guidance. In this work, we revisit classic theory on small-world (SW) networks and ask: what changes if we treat SW connectivity as a design prior for MAS? We first bridge insights from neuroscience and complex networks to MAS, highlighting how SW structures balance local clustering and long-range integration. Using multi-agent debate (MAD) as a controlled testbed, experimental results show that SW connectivity yields nearly the same accuracy and token cost, while substantially stabilizing consensus trajectories. Building on this, we introduce an uncertainty-guided rewiring scheme for scaling MAS, where long-range shortcuts are added between epistemically divergent agents using LLM-oriented uncertainty signals (e.g., semantic entropy). This yields controllable SW structures that adapt to task difficulty and agent heterogeneity. Finally, we discuss broader implications of SW priors for MAS design, framing them as stabilizers of reasoning, enhancers of robustness, scalable coordinators, and inductive biases for emergent cognitive roles.
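The uncertainty-guided rewiring idea in this abstract can be illustrated with a minimal sketch. This is not the paper's implementation: the function names, the use of a ring lattice as the starting topology, the scalar per-agent uncertainty scores, and the absolute-difference divergence measure are all assumptions made for illustration only.

```python
from itertools import combinations


def ring_lattice(n, k):
    """Link each of n agents to its k nearest neighbors on each side."""
    edges = set()
    for i in range(n):
        for d in range(1, k + 1):
            j = (i + d) % n
            edges.add((min(i, j), max(i, j)))
    return edges


def add_divergent_shortcuts(edges, uncertainty, budget):
    """Add up to `budget` shortcuts between the most divergent agents.

    `uncertainty` holds one hypothetical score per agent (for example a
    semantic-entropy estimate). Divergence is assumed here to be the
    absolute difference of two scores.
    """
    n = len(uncertainty)
    candidates = [
        (abs(uncertainty[i] - uncertainty[j]), i, j)
        for i, j in combinations(range(n), 2)
        if (i, j) not in edges  # only pairs that are not already linked
    ]
    # Largest divergence first; ties broken by agent index for determinism.
    candidates.sort(key=lambda t: (-t[0], t[1], t[2]))
    new_edges = set(edges)
    for _, i, j in candidates[:budget]:
        new_edges.add((i, j))
    return new_edges


base = ring_lattice(8, 1)
scores = [0.1, 0.2, 0.9, 0.3, 0.2, 0.1, 0.4, 0.2]
rewired = add_divergent_shortcuts(base, scores, budget=2)
```

In this sketch, the `budget` parameter plays the role of the controllable knob: a larger budget adds more long-range links and moves the graph from a sparse ring toward a denser topology.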

Chasing Consistency: Quantifying and Optimizing Human-Model Alignment in Chain-of-Thought Reasoning

Nov 09, 2025

Abstract: This paper presents a framework for evaluating and optimizing reasoning consistency in Large Language Models (LLMs) via a new metric, the Alignment Score, which quantifies the semantic alignment between model-generated reasoning chains and human-written reference chains in Chain-of-Thought (CoT) reasoning. Empirically, we find that 2-hop reasoning chains achieve the highest Alignment Score. To explain this phenomenon, we define four key error types (logical disconnection, thematic shift, redundant reasoning, and causal reversal) and show how each contributes to the degradation of the Alignment Score. Building on this analysis, we further propose Semantic Consistency Optimization Sampling (SCOS), a method that samples and favors chains with minimal alignment errors, significantly improving Alignment Scores by an average of 29.84% on longer reasoning chains, such as those in 3-hop tasks.

Position: Towards a Responsible LLM-empowered Multi-Agent Systems

Feb 03, 2025

Abstract: The rise of Agent AI and Large Language Model-powered Multi-Agent Systems (LLM-MAS) has underscored the need for responsible and dependable system operation. Tools like LangChain and Retrieval-Augmented Generation have expanded LLM capabilities, enabling deeper integration into MAS through enhanced knowledge retrieval and reasoning. However, these advancements introduce critical challenges: LLM agents exhibit inherent unpredictability, and uncertainties in their outputs can compound across interactions, threatening system stability. To address these risks, a human-centered design approach with active dynamic moderation is essential. Such an approach enhances traditional passive oversight by facilitating coherent inter-agent communication and effective system governance, allowing MAS to achieve desired outcomes more efficiently.

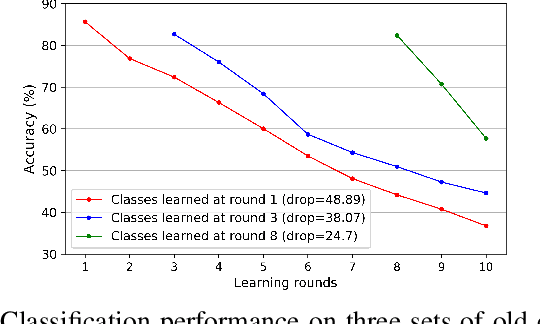

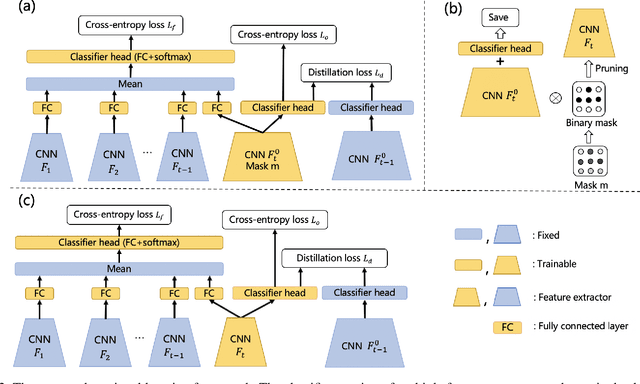

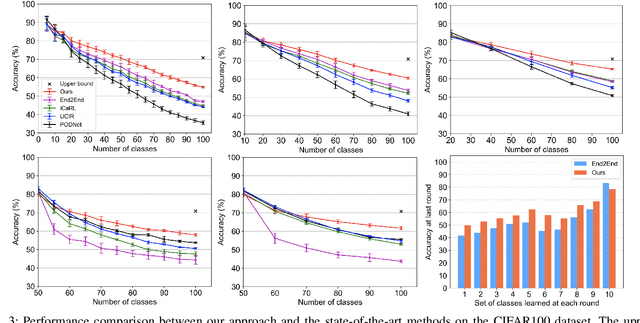

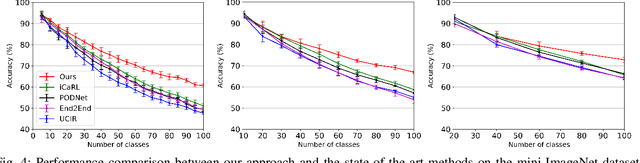

Preserving Earlier Knowledge in Continual Learning with the Help of All Previous Feature Extractors

Apr 28, 2021

Abstract: Continual learning of new knowledge over time is a desirable capability for intelligent systems that must recognize more and more classes of objects. With no old data stored, or only a very limited amount, an intelligent system often catastrophically forgets previously learned knowledge when learning new knowledge. Recently, various approaches have been proposed to alleviate the catastrophic forgetting issue. However, knowledge learned earlier is commonly less well preserved than knowledge learned more recently. To reduce the forgetting of earlier learned knowledge in particular and improve overall continual learning performance, we propose a simple yet effective fusion mechanism that includes all previously learned feature extractors in the model. In addition, a new feature extractor is added to the model each time a new set of classes is learned, and feature extractor pruning is applied to prevent the overall model size from growing rapidly. Experiments on multiple classification tasks show that the proposed approach can effectively reduce the forgetting of old knowledge, achieving state-of-the-art continual learning performance.
