Zhunga Liu

Collaborative Learning of Scattering and Deep Features for SAR Target Recognition with Noisy Labels

Aug 11, 2025

Abstract: Acquiring high-quality labeled synthetic aperture radar (SAR) data is challenging because labeling demands expert knowledge. Consequently, unreliable noisy labels are unavoidable, and they degrade the performance of SAR automatic target recognition (ATR). Existing research on learning with noisy labels mainly focuses on image data. However, the non-intuitive visual characteristics of SAR data are insufficient to achieve noise-robust learning. To address this problem, we propose collaborative learning of scattering and deep features (CLSDF) for SAR ATR with noisy labels. Specifically, a multi-model feature fusion framework is designed to integrate scattering and deep features. The attributed scattering centers (ASCs) are treated as dynamic graph structure data, and the extracted physical characteristics effectively enrich the representation of deep image features. Then, the samples with clean and noisy labels are divided by modeling the loss distribution with multiple class-wise Gaussian Mixture Models (GMMs). Afterward, the semi-supervised learning of two divergent branches is conducted, with each branch trained on the data divided by the other. Moreover, a joint distribution alignment strategy is introduced to enhance the reliability of co-guessed labels. Extensive experiments on the Moving and Stationary Target Acquisition and Recognition (MSTAR) dataset show that the proposed method achieves state-of-the-art performance under different operating conditions and various types of label noise.
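
The clean/noisy division step described in the abstract can be illustrated with a minimal sketch. It assumes that per-sample training losses are already available, fits one two-component 1-D Gaussian mixture per class with plain EM, and treats the lower-mean component as "clean". The function names, the 0.5 threshold, and the initialisation are illustrative assumptions, not details taken from the paper.

```python
import math


def normal_pdf(x, mean, var):
    """Density of a 1-D Gaussian with the given mean and variance."""
    return math.exp(-((x - mean) ** 2) / (2.0 * var)) / math.sqrt(2.0 * math.pi * var)


def fit_two_component_gmm(losses, iters=50):
    """Fit a two-component 1-D GMM to the losses with EM (assumed setup)."""
    lo, hi = min(losses), max(losses)
    means = [lo, hi]  # initialise the components at the two extremes
    var0 = max((hi - lo) ** 2 / 4.0, 1e-6)
    variances = [var0, var0]
    weights = [0.5, 0.5]
    for _ in range(iters):
        # E-step: responsibility of each component for each loss value
        resp = []
        for x in losses:
            p = [weights[k] * normal_pdf(x, means[k], variances[k]) for k in range(2)]
            total = sum(p) or 1e-300
            resp.append([pk / total for pk in p])
        # M-step: re-estimate mean, variance, and mixing weight
        for k in range(2):
            nk = sum(r[k] for r in resp) + 1e-12
            means[k] = sum(r[k] * x for r, x in zip(resp, losses)) / nk
            spread = sum(r[k] * (x - means[k]) ** 2 for r, x in zip(resp, losses)) / nk
            variances[k] = max(spread, 1e-6)  # floor avoids a collapsed component
            weights[k] = nk / len(losses)
    return means, variances, weights


def clean_probabilities(losses, iters=50):
    """Posterior probability that each loss belongs to the low-loss component."""
    means, variances, weights = fit_two_component_gmm(losses, iters)
    clean = 0 if means[0] <= means[1] else 1
    probs = []
    for x in losses:
        p = [weights[k] * normal_pdf(x, means[k], variances[k]) for k in range(2)]
        total = sum(p) or 1e-300
        probs.append(p[clean] / total)
    return probs


def split_clean_noisy(losses, labels, threshold=0.5):
    """Class-wise split: one GMM per label; True marks a sample kept as clean."""
    clean_mask = [False] * len(losses)
    for c in set(labels):
        idx = [i for i, y in enumerate(labels) if y == c]
        probs = clean_probabilities([losses[i] for i in idx])
        for i, p in zip(idx, probs):
            clean_mask[i] = p > threshold
    return clean_mask
```

Fitting a separate mixture per class, rather than one global mixture, keeps a class with systematically higher loss from being flagged as noisy wholesale.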

Monocular Obstacle Avoidance Based on Inverse PPO for Fixed-wing UAVs

Nov 27, 2024

Abstract: Fixed-wing Unmanned Aerial Vehicles (UAVs) are one of the most commonly used platforms for the burgeoning Low-altitude Economy (LAE) and Urban Air Mobility (UAM), due to their long endurance and high-speed capabilities. Classical obstacle avoidance systems, which rely on prior maps or sophisticated sensors, face limitations in unknown low-altitude environments and on small UAV platforms. In response, this paper proposes a lightweight deep reinforcement learning (DRL) based UAV collision avoidance system that enables a fixed-wing UAV to avoid unknown obstacles at cruise speeds over 30 m/s, using only onboard visual sensors. The proposed system employs a single-frame image depth inference module with a streamlined network architecture, optimized for edge computing devices, to ensure real-time obstacle detection. Next, a reinforcement learning controller with a novel reward function is designed to balance target approach against flight trajectory smoothness, satisfying the specific dynamic constraints and stability requirements of a fixed-wing UAV platform. An adaptive entropy adjustment mechanism is introduced to mitigate the exploration-exploitation trade-off inherent in DRL, improving training convergence and obstacle avoidance success rates. Extensive software-in-the-loop and hardware-in-the-loop experiments demonstrate that the proposed framework outperforms other methods in obstacle avoidance efficiency and flight trajectory smoothness, and confirm the feasibility of implementing the algorithm on edge devices. The source code is publicly available at https://github.com/ch9397/FixedWing-MonoPPO.

DOEPatch: Dynamically Optimized Ensemble Model for Adversarial Patches Generation

Dec 28, 2023

Abstract: Object detection is a fundamental task in applications ranging from autonomous driving to intelligent security systems. However, recognition of a person can be hindered when their clothing is decorated with carefully designed graffiti patterns, causing object detection to fail. To achieve greater attack potential against unknown black-box models, adversarial patches capable of affecting the outputs of multiple object detection models are required. While ensemble models have proven effective, current research in object detection typically focuses on simple fusion of the outputs of all models, with limited attention given to developing general adversarial patches that function effectively in the physical world. In this paper, we introduce the concept of energy and treat adversarial patch generation as an optimization of the patches to minimize the total energy of the "person" category. Additionally, by adopting adversarial training, we construct a dynamically optimized ensemble model. During training, the weight parameters of the attacked target models are adjusted to find the balance point at which the generated adversarial patches can effectively attack all target models. We carried out six sets of comparative experiments and tested our algorithm on five mainstream object detection models. The adversarial patches generated by our algorithm can reduce the recognition accuracy of YOLOv2 and YOLOv3 to 13.19% and 29.20%, respectively. In addition, we tested T-shirts covered with our adversarial patches in the physical world and found that the people wearing them were not recognized by the object detection model. Finally, leveraging the Grad-CAM tool, we explored the attack mechanism of adversarial patches from an energetic perspective.
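
The abstract does not give the weight-adjustment rule, so the following is only a hedged sketch of one way a dynamically weighted ensemble could be balanced. It assumes each target detector reports a scalar "person" energy for the current patch and uses a standard multiplicative-weights update to shift weight toward detectors the patch does not yet fool. The function names, the `step` parameter, and the update rule itself are assumptions for illustration, not the authors' method.

```python
import math


def ensemble_energy(weights, energies):
    """Weighted total 'person' energy that the patch optimizer would minimize."""
    return sum(w * e for w, e in zip(weights, energies))


def update_ensemble_weights(weights, energies, step=1.0):
    """Multiplicative-weights step (assumed rule): detectors whose energy is
    still high gain weight, so the next patch update focuses on them."""
    scaled = [w * math.exp(step * e) for w, e in zip(weights, energies)]
    total = sum(scaled)
    return [s / total for s in scaled]


# Hypothetical energies reported by two detectors for the current patch.
weights = [0.5, 0.5]
energies = [0.2, 0.8]
new_weights = update_ensemble_weights(weights, energies)
```

Alternating this weight step with a patch update that lowers `ensemble_energy` gives a simple min-max loop: the patch minimizes the weighted energy while the weights move toward the hardest remaining detector.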

Multi-sensor Suboptimal Fusion Student's $t$ Filter

Apr 23, 2022

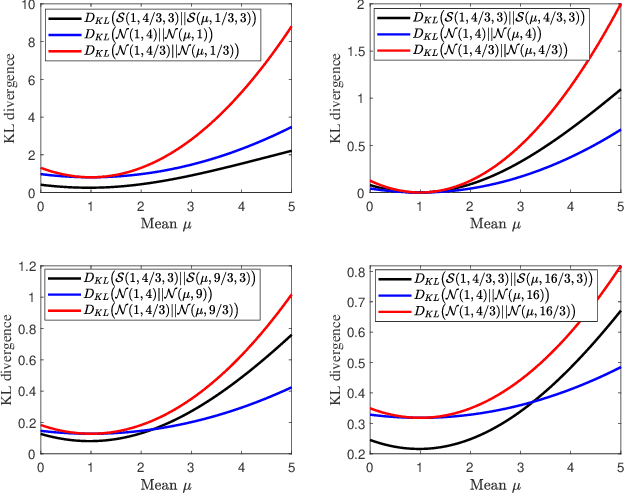

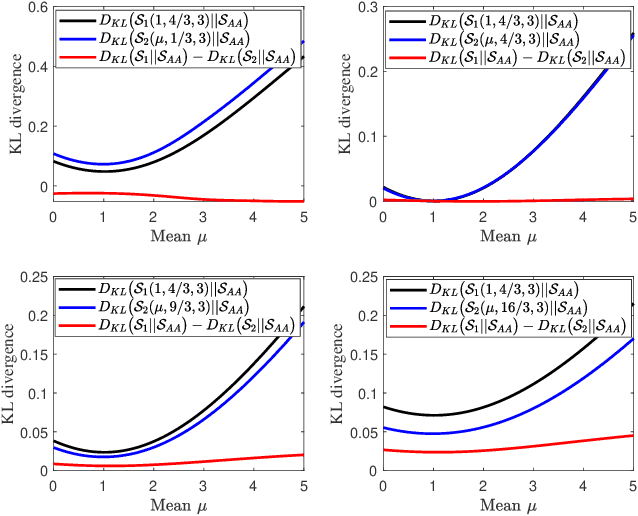

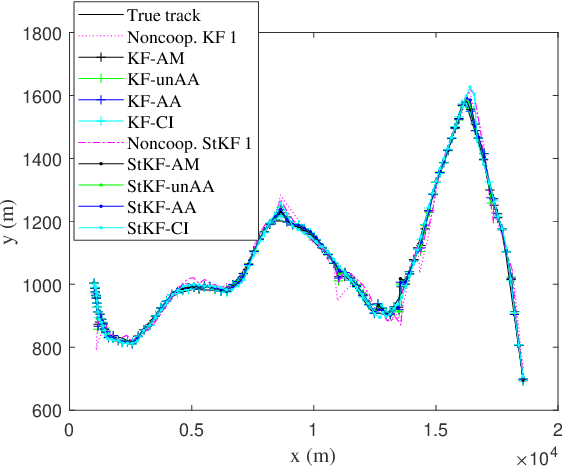

Abstract: A multi-sensor fusion Student's $t$ filter is proposed for time-series recursive estimation in the presence of heavy-tailed process and measurement noises. Derived from an information-theoretic optimization, the approach extends the single-sensor Student's $t$ Kalman filter using the suboptimal arithmetic average (AA) fusion approach. To ensure a computationally efficient, closed-form $t$ density recursion, reasonable approximations are used in both local-sensor filtering and inter-sensor fusion. The overall framework accommodates any Gaussian-oriented fusion approach, such as covariance intersection (CI). Simulations demonstrate the effectiveness of the proposed multi-sensor AA fusion-based $t$ filter in dealing with outliers compared with the classic Gaussian estimator, and the advantage of AA fusion over both the CI approach and augmented measurement fusion.
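
To make the contrast between the two fusion rules concrete, here is a minimal scalar sketch. It works on Gaussian moments only (a mean and a variance per sensor), not on Student's $t$ densities, and it collapses the AA mixture to a single Gaussian by moment matching; both simplifications are assumptions for illustration and are not the approximations used in the paper.

```python
def aa_fusion(means, variances, weights):
    """Arithmetic-average fusion: the fused density is the weighted mixture of
    the local densities; here it is moment-matched to one Gaussian."""
    m = sum(w * mu for w, mu in zip(weights, means))
    # Mixture variance = weighted local variances + spread of the local means
    p = sum(w * (v + (mu - m) ** 2) for w, mu, v in zip(weights, means, variances))
    return m, p


def ci_fusion(means, variances, weights):
    """Covariance intersection: weighted average in information (inverse
    variance) form, valid without knowing cross-correlations."""
    info = sum(w / v for w, v in zip(weights, variances))
    p = 1.0 / info
    m = p * sum(w * mu / v for w, mu, v in zip(weights, means, variances))
    return m, p


# Two sensors that disagree: estimates 0 and 2, each with unit variance.
aa_mean, aa_var = aa_fusion([0.0, 2.0], [1.0, 1.0], [0.5, 0.5])
ci_mean, ci_var = ci_fusion([0.0, 2.0], [1.0, 1.0], [0.5, 0.5])
```

In this example both rules return the same fused mean, but the AA variance grows with the disagreement between the sensors, whereas the CI variance depends only on the local variances.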

Pose Discrepancy Spatial Transformer Based Feature Disentangling for Partial Aspect Angles SAR Target Recognition

Mar 07, 2021

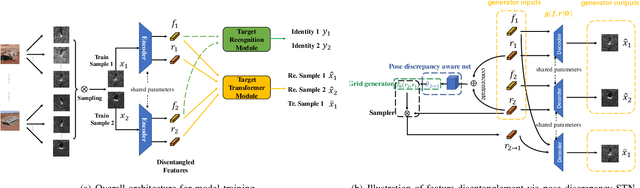

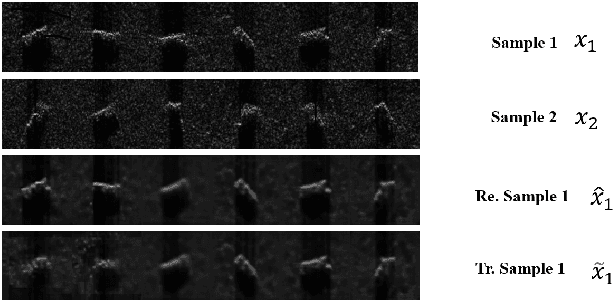

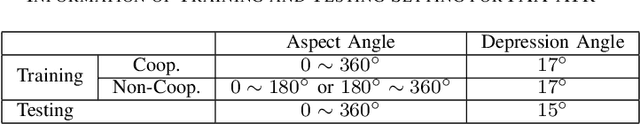

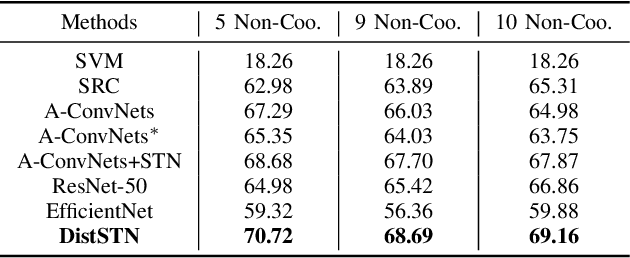

Abstract: This letter presents a novel framework termed DistSTN for synthetic aperture radar (SAR) automatic target recognition (ATR). In contrast to conventional SAR ATR algorithms, DistSTN considers a more challenging practical scenario for non-cooperative targets, in which the aspect angles available for training are incomplete and limited to a partial range while those of the testing samples are unrestricted. To address this issue, instead of learning pose-invariant features, DistSTN introduces an elaborate feature disentangling model that separates the learned pose factors of a SAR target from the identity factors so that they can independently control the representation process of the target image. To disentangle the explainable pose factors, we develop a pose discrepancy spatial transformer module in DistSTN to characterize the intrinsic transformation between the factors of two different targets with an explicit geometric model. Furthermore, DistSTN develops an amortized inference scheme that enables efficient feature extraction and recognition using an encoder-decoder mechanism. Experimental results on the moving and stationary target acquisition and recognition (MSTAR) benchmark demonstrate the effectiveness of the proposed approach. Compared with other ATR algorithms, DistSTN achieves higher recognition accuracy.
