A Theory of Human-Like Few-Shot Learning

Jan 03, 2023Zhiying Jiang, Rui Wang, Dongbo Bu, Ming Li

We aim to bridge the gap between our common-sense few-sample human learning and large-data machine learning. We derive a theory of human-like few-shot learning from von-Neuman-Landauer's principle. modelling human learning is difficult as how people learn varies from one to another. Under commonly accepted definitions, we prove that all human or animal few-shot learning, and major models including Free Energy Principle and Bayesian Program Learning that model such learning, approximate our theory, under Church-Turing thesis. We find that deep generative model like variational autoencoder (VAE) can be used to approximate our theory and perform significantly better than baseline models including deep neural networks, for image recognition, low resource language processing, and character recognition.

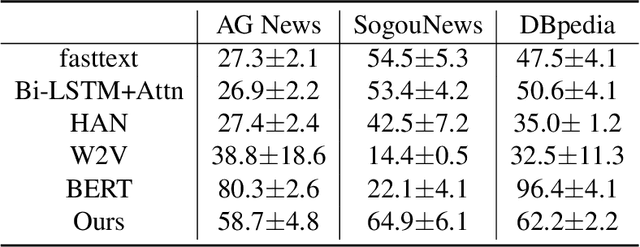

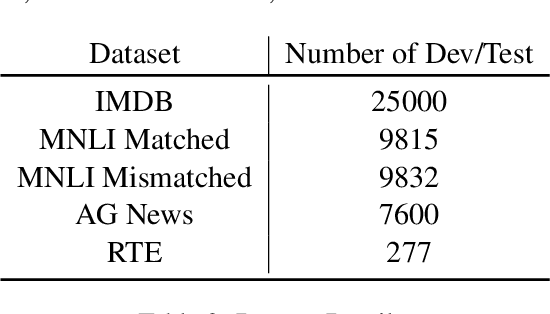

Less is More: Parameter-Free Text Classification with Gzip

Dec 19, 2022Zhiying Jiang, Matthew Y. R. Yang, Mikhail Tsirlin, Raphael Tang, Jimmy Lin

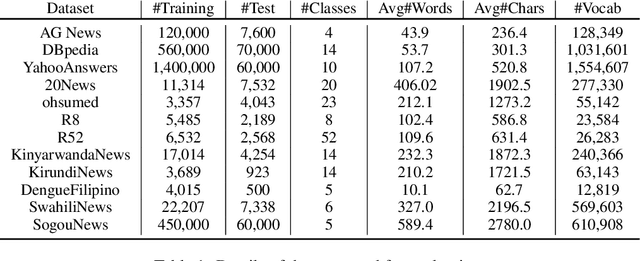

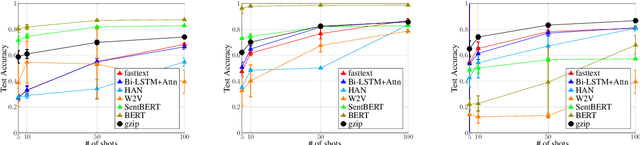

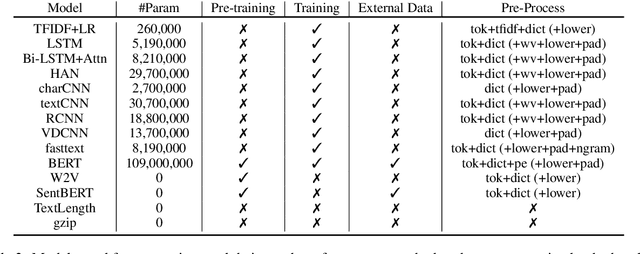

Deep neural networks (DNNs) are often used for text classification tasks as they usually achieve high levels of accuracy. However, DNNs can be computationally intensive with billions of parameters and large amounts of labeled data, which can make them expensive to use, to optimize and to transfer to out-of-distribution (OOD) cases in practice. In this paper, we propose a non-parametric alternative to DNNs that's easy, light-weight and universal in text classification: a combination of a simple compressor like gzip with a $k$-nearest-neighbor classifier. Without any training, pre-training or fine-tuning, our method achieves results that are competitive with non-pretrained deep learning methods on six in-distributed datasets. It even outperforms BERT on all five OOD datasets, including four low-resource languages. Our method also performs particularly well in few-shot settings where labeled data are too scarce for DNNs to achieve a satisfying accuracy.

What the DAAM: Interpreting Stable Diffusion Using Cross Attention

Oct 11, 2022Raphael Tang, Akshat Pandey, Zhiying Jiang, Gefei Yang, Karun Kumar, Jimmy Lin, Ferhan Ture

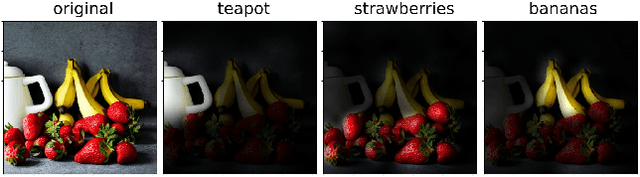

Large-scale diffusion neural networks represent a substantial milestone in text-to-image generation, with some performing similar to real photographs in human evaluation. However, they remain poorly understood, lacking explainability and interpretability analyses, largely due to their proprietary, closed-source nature. In this paper, to shine some much-needed light on text-to-image diffusion models, we perform a text-image attribution analysis on Stable Diffusion, a recently open-sourced large diffusion model. To produce pixel-level attribution maps, we propose DAAM, a novel method based on upscaling and aggregating cross-attention activations in the latent denoising subnetwork. We support its correctness by evaluating its unsupervised semantic segmentation quality on its own generated imagery, compared to supervised segmentation models. We show that DAAM performs strongly on COCO caption-generated images, achieving an mIoU of 61.0, and it outperforms supervised models on open-vocabulary segmentation, for an mIoU of 51.5. We further find that certain parts of speech, like punctuation and conjunctions, influence the generated imagery most, which agrees with the prior literature, while determiners and numerals the least, suggesting poor numeracy. To our knowledge, we are the first to propose and study word-pixel attribution for large-scale text-to-image diffusion models. Our code and data are at https://github.com/castorini/daam.

Building an Efficiency Pipeline: Commutativity and Cumulativeness of Efficiency Operators for Transformers

Jul 31, 2022Ji Xin, Raphael Tang, Zhiying Jiang, Yaoliang Yu, Jimmy Lin

There exists a wide variety of efficiency methods for natural language processing (NLP) tasks, such as pruning, distillation, dynamic inference, quantization, etc. We can consider an efficiency method as an operator applied on a model. Naturally, we may construct a pipeline of multiple efficiency methods, i.e., to apply multiple operators on the model sequentially. In this paper, we study the plausibility of this idea, and more importantly, the commutativity and cumulativeness of efficiency operators. We make two interesting observations: (1) Efficiency operators are commutative -- the order of efficiency methods within the pipeline has little impact on the final results; (2) Efficiency operators are also cumulative -- the final results of combining several efficiency methods can be estimated by combining the results of individual methods. These observations deepen our understanding of efficiency operators and provide useful guidelines for their real-world applications.

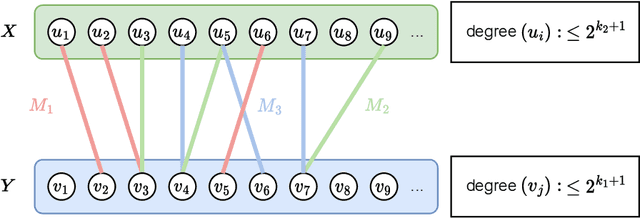

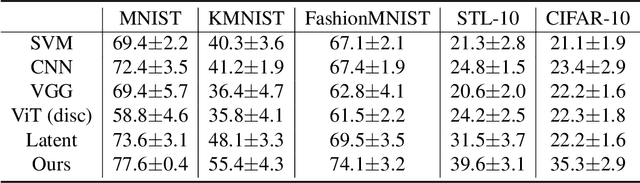

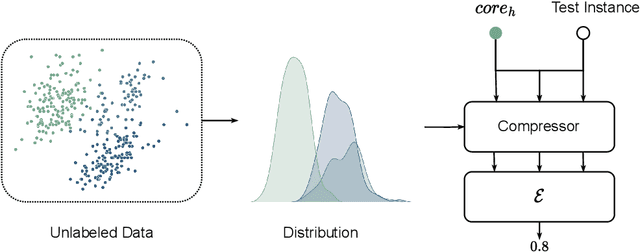

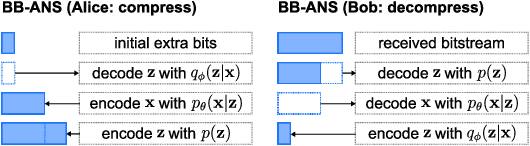

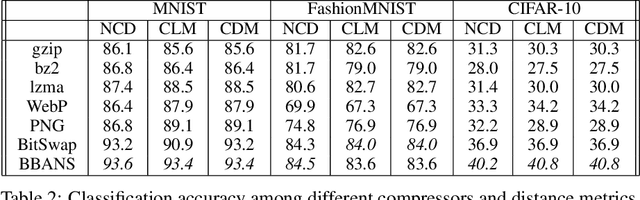

Few-Shot Non-Parametric Learning with Deep Latent Variable Model

Jun 23, 2022Zhiying Jiang, Yiqin Dai, Ji Xin, Ming Li, Jimmy Lin

Most real-world problems that machine learning algorithms are expected to solve face the situation with 1) unknown data distribution; 2) little domain-specific knowledge; and 3) datasets with limited annotation. We propose Non-Parametric learning by Compression with Latent Variables (NPC-LV), a learning framework for any dataset with abundant unlabeled data but very few labeled ones. By only training a generative model in an unsupervised way, the framework utilizes the data distribution to build a compressor. Using a compressor-based distance metric derived from Kolmogorov complexity, together with few labeled data, NPC-LV classifies without further training. We show that NPC-LV outperforms supervised methods on all three datasets on image classification in low data regime and even outperform semi-supervised learning methods on CIFAR-10. We demonstrate how and when negative evidence lowerbound (nELBO) can be used as an approximate compressed length for classification. By revealing the correlation between compression rate and classification accuracy, we illustrate that under NPC-LV, the improvement of generative models can enhance downstream classification accuracy.

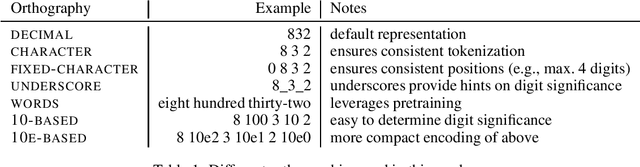

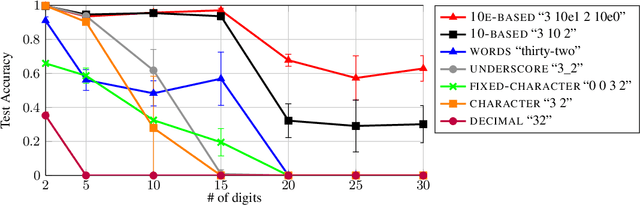

Investigating the Limitations of Transformers with Simple Arithmetic Tasks

Mar 02, 2021Rodrigo Nogueira, Zhiying Jiang, Jimmy Lin

The ability to perform arithmetic tasks is a remarkable trait of human intelligence and might form a critical component of more complex reasoning tasks. In this work, we investigate if the surface form of a number has any influence on how sequence-to-sequence language models learn simple arithmetic tasks such as addition and subtraction across a wide range of values. We find that how a number is represented in its surface form has a strong influence on the model's accuracy. In particular, the model fails to learn addition of five-digit numbers when using subwords (e.g., "32"), and it struggles to learn with character-level representations (e.g., "3 2"). By introducing position tokens (e.g., "3 10e1 2"), the model learns to accurately add and subtract numbers up to 60 digits. We conclude that modern pretrained language models can easily learn arithmetic from very few examples, as long as we use the proper surface representation. This result bolsters evidence that subword tokenizers and positional encodings are components in current transformer designs that might need improvement. Moreover, we show that regardless of the number of parameters and training examples, models cannot learn addition rules that are independent of the length of the numbers seen during training. Code to reproduce our experiments is available at https://github.com/castorini/transformers-arithmetic

Investigating the Limitations of the Transformers with Simple Arithmetic Tasks

Feb 25, 2021Rodrigo Nogueira, Zhiying Jiang, Jimmy Lin

The ability to perform arithmetic tasks is a remarkable trait of human intelligence and might form a critical component of more complex reasoning tasks. In this work, we investigate if the surface form of a number has any influence on how sequence-to-sequence language models learn simple arithmetic tasks such as addition and subtraction across a wide range of values. We find that how a number is represented in its surface form has a strong influence on the model's accuracy. In particular, the model fails to learn addition of five-digit numbers when using subwords (e.g., "32"), and it struggles to learn with character-level representations (e.g., "3 2"). By introducing position tokens (e.g., "3 10e1 2"), the model learns to accurately add and subtract numbers up to 60 digits. We conclude that modern pretrained language models can easily learn arithmetic from very few examples, as long as we use the proper surface representation. This result bolsters evidence that subword tokenizers and positional encodings are components in current transformer designs that might need improvement. Moreover, we show that regardless of the number of parameters and training examples, models cannot learn addition rules that are independent of the length of the numbers seen during training. Code to reproduce our experiments is available at https://github.com/castorini/transformers-arithmetic

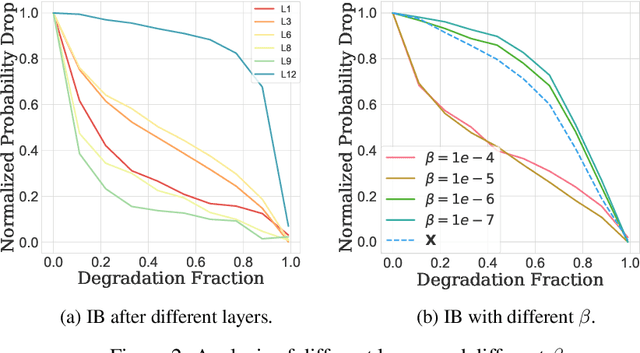

Inserting Information Bottlenecks for Attribution in Transformers

Dec 27, 2020Zhiying Jiang, Raphael Tang, Ji Xin, Jimmy Lin

Pretrained transformers achieve the state of the art across tasks in natural language processing, motivating researchers to investigate their inner mechanisms. One common direction is to understand what features are important for prediction. In this paper, we apply information bottlenecks to analyze the attribution of each feature for prediction on a black-box model. We use BERT as the example and evaluate our approach both quantitatively and qualitatively. We show the effectiveness of our method in terms of attribution and the ability to provide insight into how information flows through layers. We demonstrate that our technique outperforms two competitive methods in degradation tests on four datasets. Code is available at https://github.com/bazingagin/IBA.

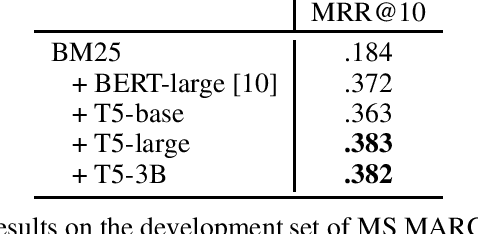

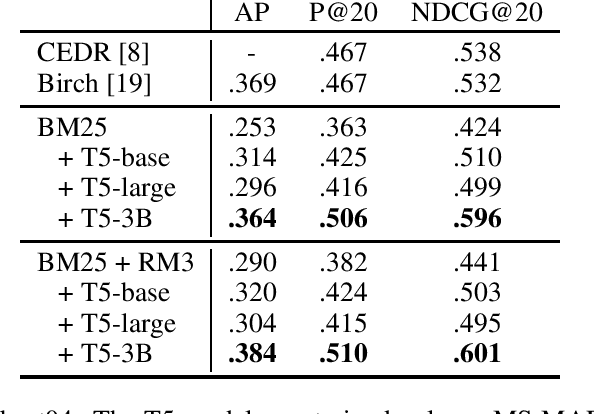

Document Ranking with a Pretrained Sequence-to-Sequence Model

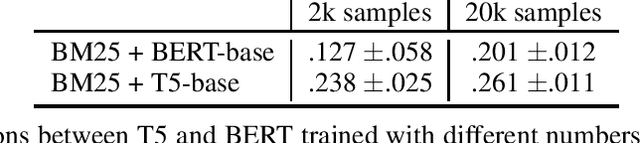

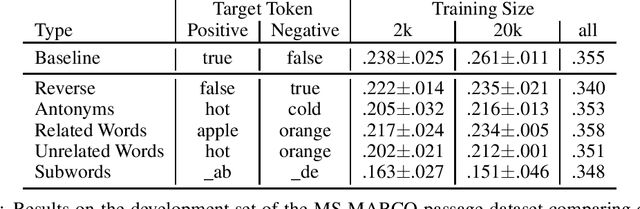

Mar 14, 2020Rodrigo Nogueira, Zhiying Jiang, Jimmy Lin

This work proposes a novel adaptation of a pretrained sequence-to-sequence model to the task of document ranking. Our approach is fundamentally different from a commonly-adopted classification-based formulation of ranking, based on encoder-only pretrained transformer architectures such as BERT. We show how a sequence-to-sequence model can be trained to generate relevance labels as "target words", and how the underlying logits of these target words can be interpreted as relevance probabilities for ranking. On the popular MS MARCO passage ranking task, experimental results show that our approach is at least on par with previous classification-based models and can surpass them with larger, more-recent models. On the test collection from the TREC 2004 Robust Track, we demonstrate a zero-shot transfer-based approach that outperforms previous state-of-the-art models requiring in-dataset cross-validation. Furthermore, we find that our approach significantly outperforms an encoder-only model in a data-poor regime (i.e., with few training examples). We investigate this observation further by varying target words to probe the model's use of latent knowledge.

PaperRobot: Incremental Draft Generation of Scientific Ideas

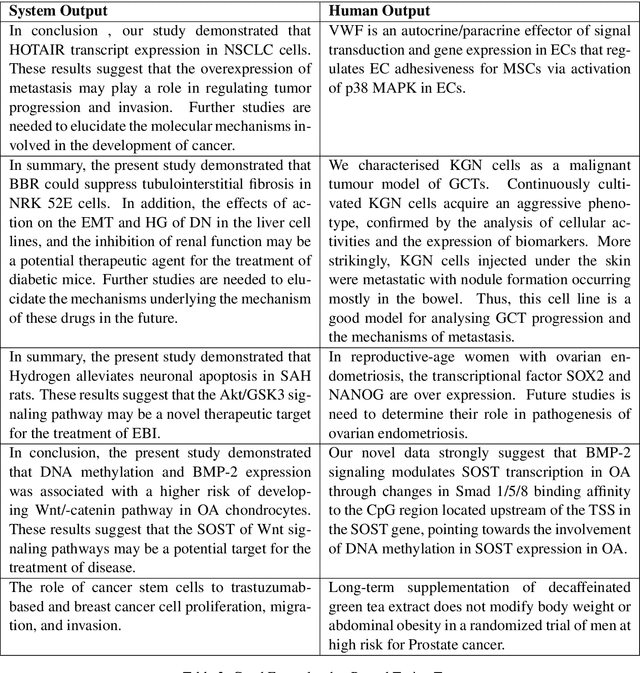

May 31, 2019Qingyun Wang, Lifu Huang, Zhiying Jiang, Kevin Knight, Heng Ji, Mohit Bansal, Yi Luan

We present a PaperRobot who performs as an automatic research assistant by (1) conducting deep understanding of a large collection of human-written papers in a target domain and constructing comprehensive background knowledge graphs (KGs); (2) creating new ideas by predicting links from the background KGs, by combining graph attention and contextual text attention; (3) incrementally writing some key elements of a new paper based on memory-attention networks: from the input title along with predicted related entities to generate a paper abstract, from the abstract to generate conclusion and future work, and finally from future work to generate a title for a follow-on paper. Turing Tests, where a biomedical domain expert is asked to compare a system output and a human-authored string, show PaperRobot generated abstracts, conclusion and future work sections, and new titles are chosen over human-written ones up to 30%, 24% and 12% of the time, respectively.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge