Zhimin Lin

LongCat-Flash-Thinking-2601 Technical Report

Jan 23, 2026

Abstract: We introduce LongCat-Flash-Thinking-2601, a 560-billion-parameter open-source Mixture-of-Experts (MoE) reasoning model with superior agentic reasoning capability. LongCat-Flash-Thinking-2601 achieves state-of-the-art performance among open-source models on a wide range of agentic benchmarks, including agentic search, agentic tool use, and tool-integrated reasoning. Beyond benchmark performance, the model generalizes well to complex tool interactions and behaves robustly in noisy real-world environments. These capabilities stem from a unified training framework that combines domain-parallel expert training with subsequent fusion, together with an end-to-end co-design of data construction, environments, algorithms, and infrastructure spanning pre-training to post-training. In particular, the model's strong generalization in complex tool use is driven by our in-depth exploration of environment scaling and principled task construction. To optimize long-tailed, skewed generation and multi-turn agentic interactions, and to enable stable training across more than 10,000 environments spanning more than 20 domains, we systematically extend our asynchronous reinforcement learning framework, DORA, for stable and efficient large-scale multi-environment training. Furthermore, because real-world tasks are inherently noisy, we systematically analyze and decompose real-world noise patterns and design targeted training procedures that explicitly incorporate such imperfections into training, improving robustness in real-world applications. To further enhance performance on complex reasoning tasks, we introduce a Heavy Thinking mode that enables effective test-time scaling by jointly expanding reasoning depth and width through intensive parallel thinking.

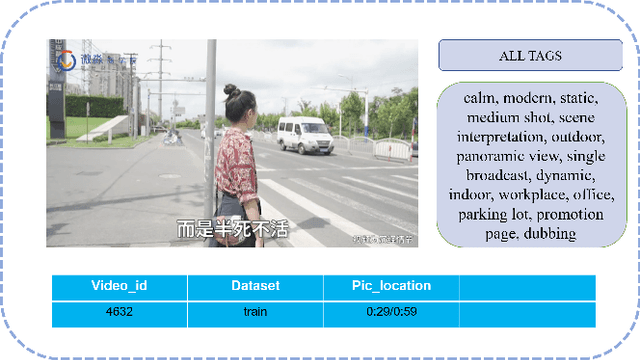

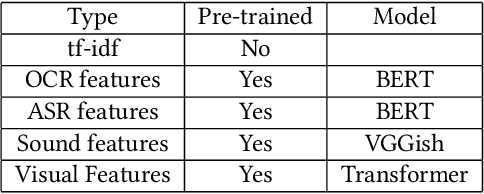

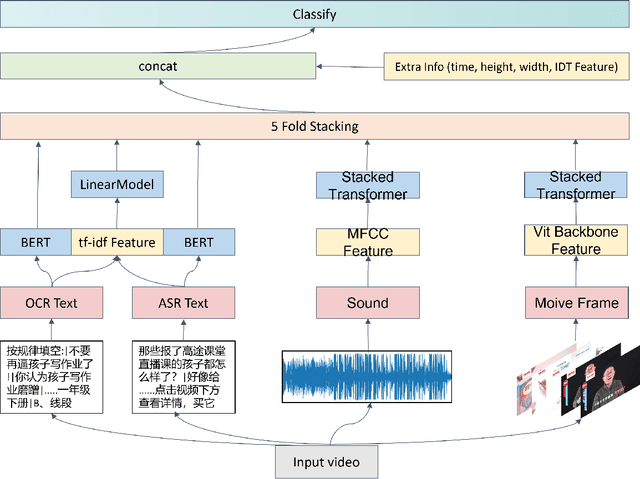

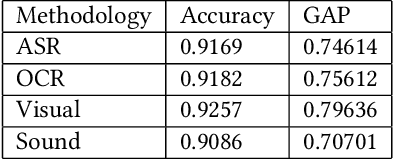

Multimodal Feature Fusion for Video Advertisements Tagging Via Stacking Ensemble

Aug 02, 2021

Abstract: Automated tagging of video advertisements is a critical yet challenging problem, and it has drawn increasing interest in recent years because of its evident applications in many fields. Despite sustained efforts, the tagging task still faces several challenges; for example, efficient feature fusion approaches are desirable but under-explored in previous studies. In this paper, we present our approach for Multimodal Video Ads Tagging in the 2021 Tencent Advertising Algorithm Competition. Specifically, we propose a novel multimodal feature fusion framework that aims to combine complementary information from multiple modalities. The framework introduces a stacking-based ensembling approach to reduce the influence of varying levels of noise and of conflicts between modalities, thereby improving tagging performance compared with previous methods. To empirically investigate the effectiveness and robustness of the proposed framework, we conduct extensive experiments on the challenge datasets. The results suggest that our framework significantly outperforms related approaches, and our method ranked 1st on the final leaderboard with a Global Average Precision (GAP) of 82.63%. To better promote research in this field, we will release our code in the final version.
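The abstract does not specify which base models or meta-learner the framework stacks. The following is therefore only a minimal sketch of generic stacking-based late fusion, built under several assumptions:

- Each modality gets its own base classifier. Here that is a hypothetical pure-Python logistic regression, `LogReg`.
- The base models' out-of-fold probabilities become the input features of a second-level meta-learner, which is also a logistic regression.
- A single binary tag stands in for the competition's multi-label tag set.

The names `LogReg`, `out_of_fold_features`, `fit_stacking`, and `predict_stacking` are illustrative and are not taken from the authors' code.

```python
import math
import random
from typing import Dict, List, Sequence, Tuple

# Hypothetical sample layout: modality name -> feature vector for that modality.
Sample = Dict[str, List[float]]


def sigmoid(z: float) -> float:
    # Numerically safe logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


class LogReg:
    """Plain logistic regression fitted by full-batch gradient descent (illustrative)."""

    def __init__(self, lr: float = 0.5, epochs: int = 300):
        self.lr, self.epochs = lr, epochs
        self.w: List[float] = []
        self.b = 0.0

    def fit(self, X: Sequence[Sequence[float]], y: Sequence[int]) -> "LogReg":
        d, n = len(X[0]), len(X)
        self.w, self.b = [0.0] * d, 0.0
        for _ in range(self.epochs):
            gw, gb = [0.0] * d, 0.0
            for xi, yi in zip(X, y):
                err = self.predict_proba(xi) - yi  # gradient of the log-loss w.r.t. the logit
                for j in range(d):
                    gw[j] += err * xi[j]
                gb += err
            self.w = [w - self.lr * g / n for w, g in zip(self.w, gw)]
            self.b -= self.lr * gb / n
        return self

    def predict_proba(self, x: Sequence[float]) -> float:
        return sigmoid(sum(w * v for w, v in zip(self.w, x)) + self.b)


def out_of_fold_features(samples: List[Sample], y: List[int],
                         modalities: List[str], k: int = 4, seed: int = 0) -> List[List[float]]:
    """Level-1 features: each modality's base model scores only samples it was not trained on."""
    idx = list(range(len(samples)))
    random.Random(seed).shuffle(idx)
    folds = [idx[i::k] for i in range(k)]
    meta = [[0.0] * len(modalities) for _ in samples]
    for held in folds:
        held_set = set(held)
        train = [i for i in idx if i not in held_set]
        for pos, m in enumerate(modalities):
            base = LogReg().fit([samples[i][m] for i in train], [y[i] for i in train])
            for i in held:
                meta[i][pos] = base.predict_proba(samples[i][m])
    return meta


def fit_stacking(samples: List[Sample], y: List[int],
                 modalities: List[str], k: int = 4) -> Tuple[Dict[str, LogReg], LogReg]:
    # The meta-learner is trained on out-of-fold base predictions ...
    meta_model = LogReg().fit(out_of_fold_features(samples, y, modalities, k), y)
    # ... while the base models used at inference time are refit on all the data.
    bases = {m: LogReg().fit([s[m] for s in samples], y) for m in modalities}
    return bases, meta_model


def predict_stacking(bases: Dict[str, LogReg], meta_model: LogReg,
                     modalities: List[str], sample: Sample) -> float:
    # Fuse modalities by feeding per-modality probabilities to the meta-learner.
    level1 = [bases[m].predict_proba(sample[m]) for m in modalities]
    return meta_model.predict_proba(level1)
```

The sketch uses out-of-fold predictions so that the meta-learner sees each base model's behavior on data that model was not fitted on. If the meta-learner were trained on in-sample base predictions, it would tend to over-trust whichever modality overfits most. Training it on out-of-fold predictions instead lets it down-weight a noisy modality, which is one plausible reading of how stacking can "reduce the influence of varying levels of noise". For a multi-label tag set, the sketch could be repeated per tag, or multi-output base models could be swapped in.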
