Yujie Zhao

TwinAligner: Visual-Dynamic Alignment Empowers Physics-aware Real2Sim2Real for Robotic Manipulation

Dec 22, 2025

Abstract:The robotics field is evolving towards data-driven, end-to-end learning, inspired by multimodal large models. However, reliance on expensive real-world data limits progress. Simulators offer cost-effective alternatives, but the gap between simulation and reality challenges effective policy transfer. This paper introduces TwinAligner, a novel Real2Sim2Real system that addresses both visual and dynamic gaps. The visual alignment module achieves pixel-level alignment through SDF reconstruction and editable 3DGS rendering, while the dynamic alignment module ensures dynamic consistency by identifying rigid physics from robot-object interaction. TwinAligner improves robot learning by providing scalable data collection and establishing a trustworthy iterative cycle, accelerating algorithm development. Quantitative evaluations highlight TwinAligner's strong capabilities in visual and dynamic real-to-sim alignment. This system enables policies trained in simulation to achieve strong zero-shot generalization to the real world. The high consistency between real-world and simulated policy performance underscores TwinAligner's potential to advance scalable robot learning. Code and data will be released on https://twin-aligner.github.io

Real2Edit2Real: Generating Robotic Demonstrations via a 3D Control Interface

Dec 22, 2025

Abstract:Recent progress in robot learning has been driven by large-scale datasets and powerful visuomotor policy architectures, yet policy robustness remains limited by the substantial cost of collecting diverse demonstrations, particularly for spatial generalization in manipulation tasks. To reduce repetitive data collection, we present Real2Edit2Real, a framework that generates new demonstrations by bridging 3D editability with 2D visual data through a 3D control interface. Our approach first reconstructs scene geometry from multi-view RGB observations with a metric-scale 3D reconstruction model. Based on the reconstructed geometry, we perform depth-reliable 3D editing on point clouds to generate new manipulation trajectories while geometrically correcting the robot poses to recover physically consistent depth, which serves as a reliable condition for synthesizing new demonstrations. Finally, we propose a multi-conditional video generation model guided by depth as the primary control signal, together with action, edge, and ray maps, to synthesize spatially augmented multi-view manipulation videos. Experiments on four real-world manipulation tasks demonstrate that policies trained on data generated from only 1-5 source demonstrations can match or outperform those trained on 50 real-world demonstrations, improving data efficiency by up to 10-50x. Moreover, experimental results on height and texture editing demonstrate the framework's flexibility and extensibility, indicating its potential to serve as a unified data generation framework.

Pose-Robust Calibration Strategy for Point-of-Gaze Estimation on Mobile Phones

Aug 14, 2025

Abstract:Although appearance-based point-of-gaze (PoG) estimation has improved, the estimators still struggle to generalize across individuals due to personal differences. Therefore, person-specific calibration is required for accurate PoG estimation. However, calibrated PoG estimators are often sensitive to head pose variations. To address this, we investigate the key factors influencing calibrated estimators and explore pose-robust calibration strategies. Specifically, we first construct a benchmark, MobilePoG, which includes facial images from 32 individuals focusing on designated points under either fixed or continuously changing head poses. Using this benchmark, we systematically analyze how the diversity of calibration points and head poses influences estimation accuracy. Our experiments show that introducing a wider range of head poses during calibration improves the estimator's ability to handle pose variation. Building on this insight, we propose a dynamic calibration strategy in which users fixate on calibration points while moving their phones. This strategy naturally introduces head pose variation during a user-friendly and efficient calibration process, ultimately producing a better calibrated PoG estimator that is less sensitive to head pose variations than those using conventional calibration strategies. Code and datasets are available at our project page.

PRO-V: An Efficient Program Generation Multi-Agent System for Automatic RTL Verification

Jun 13, 2025

Abstract:LLM-assisted hardware verification is gaining substantial attention due to its potential to significantly reduce the cost and effort of crafting effective testbenches. It also serves as a critical enabler for LLM-aided end-to-end hardware language design. However, current LLMs often struggle with Register Transfer Level (RTL) code generation, resulting in testbenches that exhibit functional errors in Hardware Description Language (HDL) logic. Motivated by the strong performance of LLMs in Python code generation under inference-time sampling strategies, and their promising capabilities as judge agents, we propose PRO-V, a fully program-generation-based multi-agent system for robust RTL verification. PRO-V incorporates an efficient best-of-n iterative sampling strategy to enhance the correctness of generated testbenches. Moreover, it introduces an LLM-as-a-judge-aided validation framework featuring an automated prompt generation pipeline. By converting rule-based static analysis from the compiler into natural language through in-context learning, this pipeline enables LLMs to assist the compiler in determining whether verification failures stem from errors in the RTL design or the testbench. PRO-V attains a verification accuracy of 87.17% on golden RTL implementations and 76.28% on RTL mutants. Our code is open-sourced at https://github.com/stable-lab/Pro-V.
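As a rough illustration of the best-of-n idea mentioned in the abstract, the sketch below draws n candidates and keeps the highest-scoring one. It is plain best-of-n, not PRO-V's iterative variant, whose details the abstract does not give. The names `best_of_n`, `generate`, and `score` are hypothetical placeholders: in a real pipeline `generate` would call an LLM to produce a testbench and `score` would run some validation step.

```python
import random


def best_of_n(generate, score, n, seed=0):
    """Draw n candidates and return the one with the highest score.

    `generate(rng)` and `score(candidate)` are hypothetical callables
    supplied by the caller; they are not part of any PRO-V API.
    """
    rng = random.Random(seed)
    best, best_score = None, float("-inf")
    for _ in range(n):
        candidate = generate(rng)
        s = score(candidate)
        if s > best_score:
            best, best_score = candidate, s
    return best, best_score


if __name__ == "__main__":
    # Toy stand-ins: candidates are integers, and the "judge" prefers 42.
    pick, value = best_of_n(
        generate=lambda rng: rng.randint(0, 100),
        score=lambda c: -abs(c - 42),
        n=8,
    )
    print(pick, value)
```

Larger n raises the chance that at least one candidate passes validation, at the cost of n generation calls per query.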

MAGE: A Multi-Agent Engine for Automated RTL Code Generation

Dec 10, 2024

Abstract:The automatic generation of RTL code (e.g., Verilog) through natural language instructions has emerged as a promising direction with the advancement of large language models (LLMs). However, producing RTL code that is both syntactically and functionally correct remains a significant challenge. Existing single-LLM-agent approaches face substantial limitations because they must navigate between various programming languages and handle intricate generation, verification, and modification tasks. To address these challenges, this paper introduces MAGE, the first open-source multi-agent AI system designed for robust and accurate Verilog RTL code generation. We propose a novel high-temperature RTL candidate sampling and debugging system that effectively explores the space of code candidates and significantly improves the quality of the candidates. Furthermore, we design a novel Verilog-state checkpoint checking mechanism that enables early detection of functional errors and delivers precise feedback for targeted fixes, significantly enhancing the functional correctness of the generated RTL code. MAGE achieves a 95.7% rate of syntactically and functionally correct code generation on the VerilogEval-Human 2 benchmark, surpassing the state-of-the-art Claude-3.5-sonnet by 23.3%, demonstrating a robust and reliable approach for AI-driven RTL design workflows.

RA-PbRL: Provably Efficient Risk-Aware Preference-Based Reinforcement Learning

Oct 31, 2024

Abstract:Preference-based Reinforcement Learning (PbRL) studies the problem where agents receive only preferences over pairs of trajectories in each episode. Traditional approaches in this field have predominantly focused on the mean reward or utility criterion. However, in PbRL scenarios demanding heightened risk awareness, such as in AI systems, healthcare, and agriculture, risk-aware measures are requisite. Traditional risk-aware objectives and algorithms are not applicable in such one-episode-reward settings. To address this, we explore and prove the applicability of two risk-aware objectives to PbRL: nested and static quantile risk objectives. We also introduce Risk-Aware-PbRL (RA-PbRL), an algorithm designed to optimize both nested and static objectives. Additionally, we provide a theoretical analysis of the regret upper bounds, demonstrating that they are sublinear with respect to the number of episodes, and present empirical results to support our findings. Our code is available at https://github.com/aguilarjose11/PbRLNeurips.

A Survey of Numerical Algorithms that can Solve the Lasso Problems

Mar 07, 2023

Abstract:In statistics, the least absolute shrinkage and selection operator (Lasso) is a regression method that performs both variable selection and regularization. There is a large body of literature discussing the statistical properties of the regression coefficients estimated by the Lasso method. However, a comprehensive review of the algorithms that solve the Lasso optimization problem is lacking. In this review, we summarize five representative algorithms for optimizing the Lasso objective function: the iterative shrinkage-thresholding algorithm (ISTA), the fast iterative shrinkage-thresholding algorithm (FISTA), the coordinate gradient descent algorithm (CGDA), the smooth L1 algorithm (SLA), and the path following algorithm (PFA). We also compare their convergence rates, as well as their potential strengths and weaknesses.
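To make the first of these algorithms concrete, here is a minimal pure-Python sketch of textbook ISTA for the objective 0.5 * ||Ax - b||^2 + lam * ||x||_1. It is not taken from the survey. The function names `soft_threshold` and `ista` are illustrative, and the step size uses the squared Frobenius norm of A as a safe (assumed, conservative) upper bound on the Lipschitz constant of the gradient.

```python
def soft_threshold(v, t):
    """Proximal operator of t * |.|: shrink v toward zero by t."""
    if v > t:
        return v - t
    if v < -t:
        return v + t
    return 0.0


def ista(A, b, lam, n_iter=500):
    """Minimize 0.5 * ||A x - b||^2 + lam * ||x||_1 with ISTA.

    A is a list of rows, b a list of floats, lam the l1 weight.
    """
    m, n = len(A), len(A[0])
    # The squared Frobenius norm upper-bounds the largest eigenvalue of A^T A,
    # so 1 / L is a valid (if conservative) step size.
    L = sum(a * a for row in A for a in row)
    x = [0.0] * n
    for _ in range(n_iter):
        # Residual r = A x - b.
        r = [sum(A[i][j] * x[j] for j in range(n)) - b[i] for i in range(m)]
        # Gradient of the smooth part: g = A^T r.
        g = [sum(A[i][j] * r[i] for i in range(m)) for j in range(n)]
        # Gradient step followed by soft-thresholding.
        x = [soft_threshold(x[j] - g[j] / L, lam / L) for j in range(n)]
    return x


if __name__ == "__main__":
    # With A = I the Lasso solution is soft_threshold(b, lam) coordinate-wise.
    print(ista([[1.0, 0.0], [0.0, 1.0]], [3.0, 0.5], lam=1.0))
```

The second coordinate is driven exactly to zero, which is the variable-selection behavior the abstract refers to.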

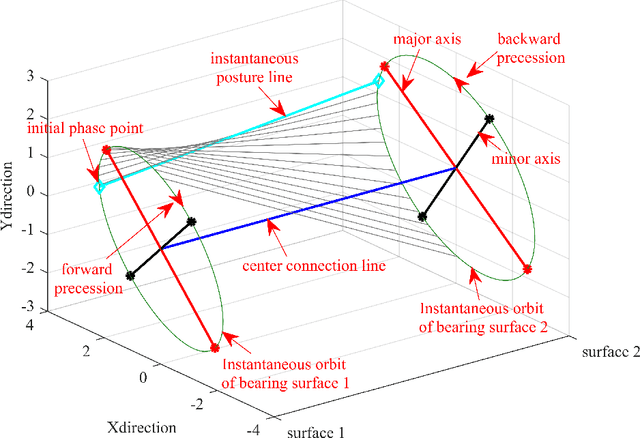

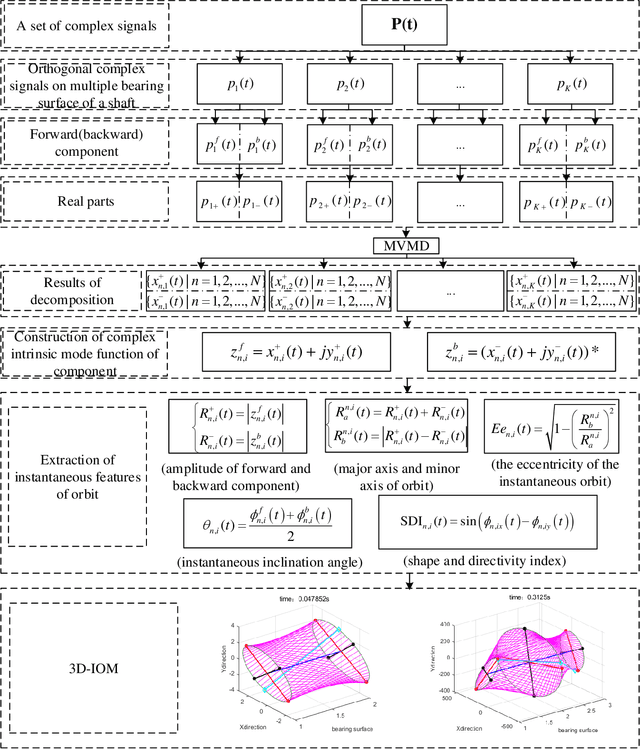

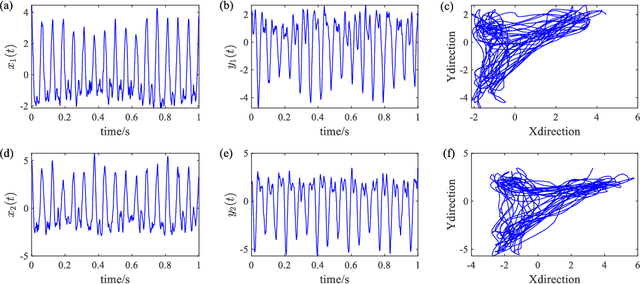

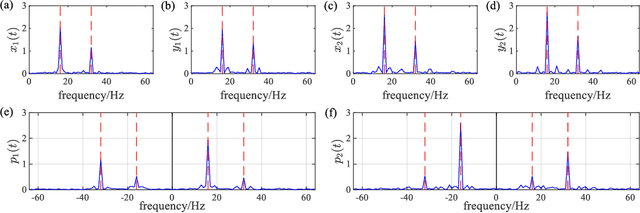

Three-dimensional instantaneous orbit map for rotor-bearing system based on a novel multivariable complex variational mode decomposition algorithm

Jul 29, 2021

Abstract:Full spectrum and holospectrum are homogenous information fusion technologies developed for the fault diagnosis of rotating machinery and are often used in the analysis of the orbit of rotating machinery. However, both techniques are based on the Fourier transform, so they can only handle stationary signals, which limits their development. Drawing inspiration from multivariate variational mode decomposition (MVMD) and complex-valued signal decomposition, we propose a method called multivariate complex variational mode decomposition (MCVMD) for processing non-stationary complex-valued signals of multi-dimensional bearing surfaces. In particular, the proposed method takes advantage of the joint information between the complex-valued signals of multi-dimensional bearing surfaces. Owing to this property, we provide its three-dimensional instantaneous orbit map (3D-IOM) to present an overall perspective of the rotor-bearing system, and also offer a high-resolution time-full spectrum (Time-FS) to display the forward and backward frequency components of all the bearing surfaces within a time-frequency plane. The effectiveness of the proposed method is demonstrated on both simulated experiments and real-life complex-valued signals.

A Homotopic Method to Solve the Lasso Problems with an Improved Upper Bound of Convergence Rate

Oct 26, 2020

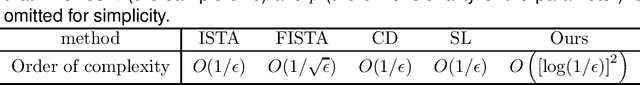

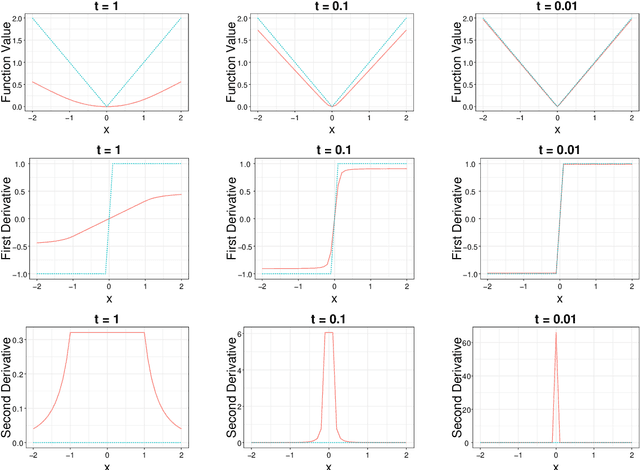

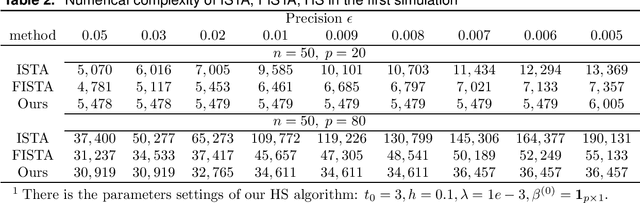

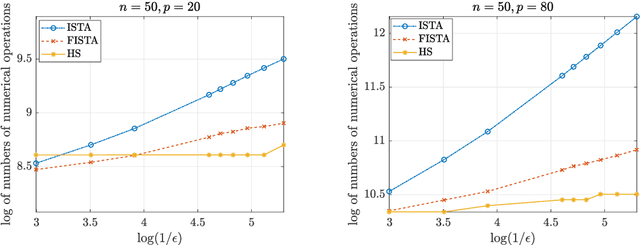

Abstract:In optimization, it is known that when the objective functions are strictly convex and well-conditioned, gradient-based approaches can be extremely effective, e.g., achieving an exponential rate of convergence. On the other hand, existing Lasso-type estimators in general cannot achieve the optimal rate due to the undesirable behavior of the absolute value function at the origin. A homotopic method uses a sequence of surrogate functions to approximate the $\ell_1$ penalty that is used in Lasso-type estimators. The surrogate functions converge to the $\ell_1$ penalty in the Lasso estimator. At the same time, each surrogate function is strictly convex, which enables a provably faster numerical rate of convergence. In this paper, we demonstrate that by meticulously defining the surrogate functions, one can prove a faster numerical convergence rate than any existing method for computing Lasso-type estimators. Namely, the state-of-the-art algorithms can only guarantee $O(1/\epsilon)$ or $O(1/\sqrt{\epsilon})$ convergence rates, while we can prove an $O([\log(1/\epsilon)]^2)$ rate for the newly proposed algorithm. Our numerical simulations show that the new algorithm also performs better empirically.
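To give a feel for the gap between these three rates, the short sketch below evaluates each expression at a given accuracy $\epsilon$. It assumes the rates are read as the order of the number of iterations needed to reach accuracy $\epsilon$, and it ignores the hidden constants, so the numbers indicate orders of magnitude only. The function name `iteration_orders` is illustrative.

```python
import math


def iteration_orders(eps):
    """Evaluate the three rate expressions at accuracy eps (constants ignored)."""
    return {
        "O(1/eps)": 1.0 / eps,
        "O(1/sqrt(eps))": 1.0 / math.sqrt(eps),
        "O(log(1/eps)^2)": math.log(1.0 / eps) ** 2,
    }


if __name__ == "__main__":
    for name, value in iteration_orders(1e-6).items():
        print(f"{name:>16}: {value:,.1f}")
```

At $\epsilon = 10^{-6}$ the three expressions are about one million, one thousand, and under two hundred respectively, which is why a polylogarithmic rate matters at high accuracy.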
