Yalou Huang

Hierarchical Recurrent Attention Network for Response Generation

Jan 25, 2017

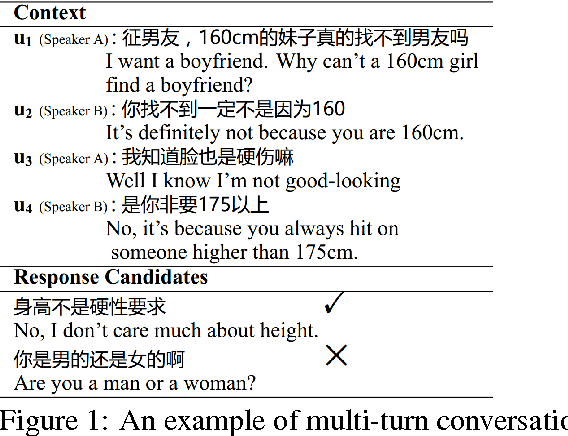

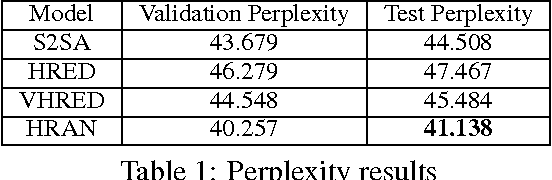

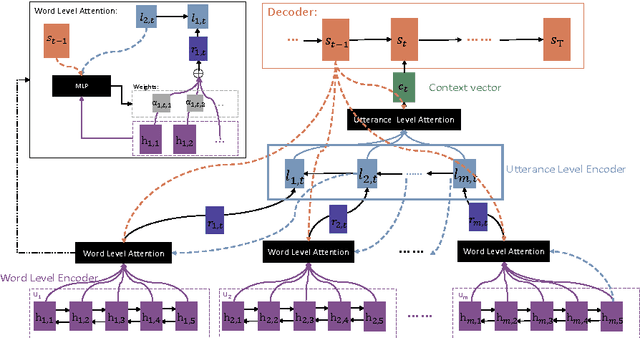

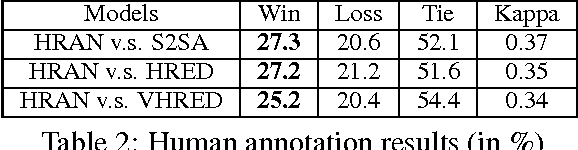

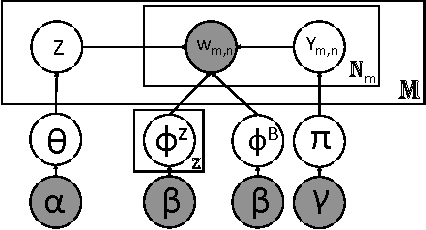

Abstract: We study multi-turn response generation in chatbots, where a response is generated according to a conversation context. Existing work has modeled the hierarchy of the context, but does not pay enough attention to the fact that words and utterances in the context are differentially important. As a result, existing models may lose important information in the context and generate irrelevant responses. We propose a hierarchical recurrent attention network (HRAN) to model both aspects in a unified framework. In HRAN, a hierarchical attention mechanism attends to important parts within and among utterances with word-level attention and utterance-level attention, respectively. With the word-level attention, hidden vectors of a word-level encoder are synthesized as utterance vectors and fed to an utterance-level encoder to construct hidden representations of the context. The hidden vectors of the context are then processed by the utterance-level attention and formed into context vectors for decoding the response. Empirical studies on both automatic evaluation and human judgment show that HRAN can significantly outperform state-of-the-art models for multi-turn response generation.
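The two attention levels described in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation: it assumes dot-product scoring against a single query vector (standing in for the decoder state), treats the given vectors as already-encoded hidden states, and omits both recurrent encoders. The names `attend`, `hierarchical_attention`, and `query` are hypothetical.

```python
import math

def softmax(scores):
    # Numerically stable softmax over a list of floats.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(vectors, query):
    # Assumption: dot-product scoring; the abstract does not specify the scoring function.
    scores = [sum(v_i * q_i for v_i, q_i in zip(v, query)) for v in vectors]
    weights = softmax(scores)
    dim = len(vectors[0])
    pooled = [sum(w * v[d] for w, v in zip(weights, vectors)) for d in range(dim)]
    return pooled, weights

def hierarchical_attention(context, query):
    # context: list of utterances, each a list of word-level hidden vectors.
    # Word-level attention pools each utterance into one utterance vector.
    utterance_vectors = [attend(words, query)[0] for words in context]
    # Simplification: the utterance-level recurrent encoder is skipped here.
    # Utterance-level attention pools utterance vectors into a context vector.
    return attend(utterance_vectors, query)

# Toy context with two utterances of 2-dimensional word vectors.
context = [
    [[1.0, 0.0], [0.0, 1.0]],
    [[2.0, 0.0], [1.0, 1.0], [0.0, 0.5]],
]
query = [1.0, 0.0]
context_vector, utterance_weights = hierarchical_attention(context, query)
```

In this toy input the second utterance aligns more strongly with the query, so it receives the larger utterance-level weight.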

Topic Aware Neural Response Generation

Sep 19, 2016

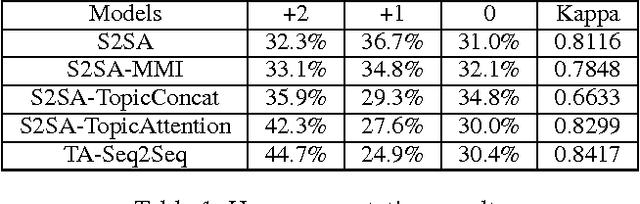

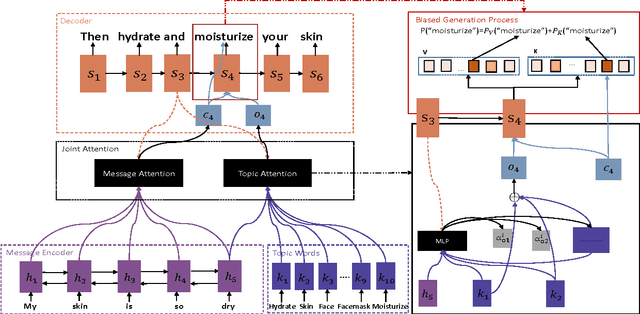

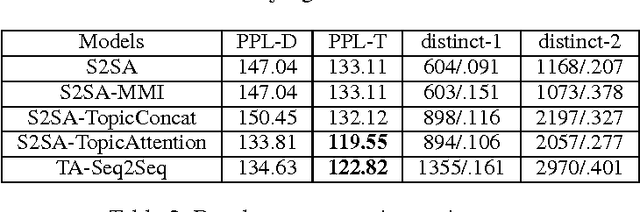

Abstract: We consider incorporating topic information into the sequence-to-sequence framework to generate informative and interesting responses for chatbots. To this end, we propose a topic aware sequence-to-sequence (TA-Seq2Seq) model. The model uses topics to simulate the prior knowledge that guides humans to form informative and interesting responses in conversation, and leverages the topic information in generation through a joint attention mechanism and a biased generation probability. The joint attention mechanism summarizes the hidden vectors of an input message as context vectors by message attention, synthesizes topic vectors by topic attention from the topic words of the message obtained from a pre-trained LDA model, and lets these vectors jointly affect the generation of words in decoding. To increase the probability of topic words appearing in responses, the model modifies the generation probability of topic words by adding an extra probability term that biases the overall distribution. Empirical studies on both automatic evaluation metrics and human annotations show that TA-Seq2Seq can generate more informative and interesting responses, and significantly outperforms state-of-the-art response generation models.
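The biased generation probability can be sketched as follows. This is one plausible reading of the abstract, not the authors' code: it assumes each word has a base score, each topic word has an additional score, and both contributions share a single normalizer. The function name `biased_distribution` and the toy scores are hypothetical.

```python
import math

def biased_distribution(base_scores, topic_scores):
    # base_scores: word -> score for every vocabulary word.
    # topic_scores: word -> extra score, defined only for topic words.
    unnormalized = {}
    for word, score in base_scores.items():
        mass = math.exp(score)
        if word in topic_scores:
            # Assumption: the extra probability term is added before normalizing.
            mass += math.exp(topic_scores[word])
        unnormalized[word] = mass
    z = sum(unnormalized.values())
    return {word: mass / z for word, mass in unnormalized.items()}

# Toy vocabulary in which "skin" is the only topic word.
base_scores = {"the": 1.0, "skin": 0.5, "ok": 1.0}
topic_scores = {"skin": 1.0}
plain = biased_distribution(base_scores, {})
biased = biased_distribution(base_scores, topic_scores)
```

Because the normalizer is shared, raising the mass of a topic word lowers the probability of every non-topic word, which is the intended bias toward topic words.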
