Xuyun Wen

Improving Brain-to-Image Reconstruction via Fine-Grained Text Bridging

May 29, 2025

Abstract: Brain-to-Image reconstruction aims to recover visual stimuli perceived by humans from brain activity. However, the reconstructed visual stimuli often lack detail and show semantic inconsistencies, which may be attributed to insufficient semantic information. To address this issue, we propose an approach named Fine-grained Brain-to-Image reconstruction (FgB2I), which employs fine-grained text as a bridge to improve image reconstruction. FgB2I comprises three key stages: detail enhancement, decoding fine-grained text descriptions, and text-bridged brain-to-image reconstruction. In the detail-enhancement stage, we leverage large vision-language models to generate fine-grained captions for visual stimuli and experimentally validate their importance. In the decoding stage, we propose three reward metrics (object accuracy, text-image semantic similarity, and image-image semantic similarity) to guide the language model in decoding fine-grained text descriptions from fMRI signals. In the final stage, the fine-grained text descriptions can be integrated into existing reconstruction methods to achieve fine-grained Brain-to-Image reconstruction.
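The three reward metrics named in the abstract could be combined into one scalar signal roughly as sketched below. This is only an illustration: the abstract does not say how the rewards are computed or weighted, so the helper names (`object_accuracy`, `cosine`, `combined_reward`), the word-matching rule, the use of cosine similarity over precomputed embeddings, and the equal default weights are all assumptions.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length embedding vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def object_accuracy(caption, objects):
    """Fraction of ground-truth object names that appear as words in the caption.

    Assumption: simple lowercase word matching stands in for whatever
    object-matching rule the paper actually uses.
    """
    if not objects:
        return 0.0
    words = set(caption.lower().split())
    return sum(1 for obj in objects if obj.lower() in words) / len(objects)


def combined_reward(caption, objects, text_emb, image_emb, recon_emb,
                    weights=(1.0, 1.0, 1.0)):
    """Weighted mean of the three rewards (weights are hypothetical).

    text_emb  : embedding of the decoded caption
    image_emb : embedding of the original visual stimulus
    recon_emb : embedding of an image generated from the decoded caption
    """
    r_obj = object_accuracy(caption, objects)
    r_text_image = cosine(text_emb, image_emb)
    r_image_image = cosine(recon_emb, image_emb)
    w_obj, w_ti, w_ii = weights
    total = w_obj * r_obj + w_ti * r_text_image + w_ii * r_image_image
    return total / (w_obj + w_ti + w_ii)


reward = combined_reward(
    "a dog chasing a red ball", ["dog", "ball", "cat"],
    text_emb=[1.0, 0.0], image_emb=[1.0, 0.0], recon_emb=[1.0, 0.0],
)
```

In a reinforcement-style fine-tuning loop, a scalar like `reward` would score each caption decoded from fMRI; the embeddings would come from a vision-language encoder, which is left out here.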

Improve Language Model and Brain Alignment via Associative Memory

May 20, 2025

Abstract: Associative memory integrates relevant information for comprehension in the human cognitive system. In this work, we seek to improve the alignment between language models and the human brain during speech processing by integrating associative memory. After verifying the alignment between language model and brain by mapping language model activations to brain activity, we feed the original text stimuli, expanded with simulated associative memory, into computational language models. We find that the alignment between language model and brain improves in brain regions closely related to associative memory processing. We also demonstrate that large language models align better with brain responses after specific supervised fine-tuning. For this purpose we build the Association dataset, which contains 1000 samples of stories, with instructions encouraging associative memory as input and associated content as output.
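Mapping language model activations to brain activity is commonly done with a linear encoding model scored by per-voxel correlation on held-out data. The sketch below shows that general pattern on synthetic data. It is not the paper's pipeline: closed-form ridge regression, the regularization strength `alpha`, the array shapes, and the function names are all assumptions for illustration.

```python
import numpy as np


def fit_ridge(X, Y, alpha=1.0):
    """Closed-form ridge regression: W = (X^T X + alpha I)^-1 X^T Y."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + alpha * np.eye(n_features), X.T @ Y)


def voxelwise_pearson(Y_true, Y_pred):
    """Pearson correlation between measured and predicted responses, per voxel."""
    yt = Y_true - Y_true.mean(axis=0)
    yp = Y_pred - Y_pred.mean(axis=0)
    denom = np.linalg.norm(yt, axis=0) * np.linalg.norm(yp, axis=0)
    return (yt * yp).sum(axis=0) / denom


# Synthetic stand-ins: 200 time points, 16 activation features, 5 voxels.
rng = np.random.default_rng(0)
X = rng.standard_normal((200, 16))            # language model activations
W_true = rng.standard_normal((16, 5))         # hidden linear mapping
Y = X @ W_true + 0.1 * rng.standard_normal((200, 5))  # simulated brain activity

# Fit on the first 150 samples, score alignment on the remaining 50.
W = fit_ridge(X[:150], Y[:150], alpha=1.0)
scores = voxelwise_pearson(Y[150:], X[150:] @ W)
```

Under this setup, comparing `scores` for activations computed from the original text with those computed from the associatively expanded text would give one way to quantify a change in alignment per voxel or region.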

Co-evolution of Functional Brain Network at Multiple Scales during Early Infancy

Sep 15, 2020

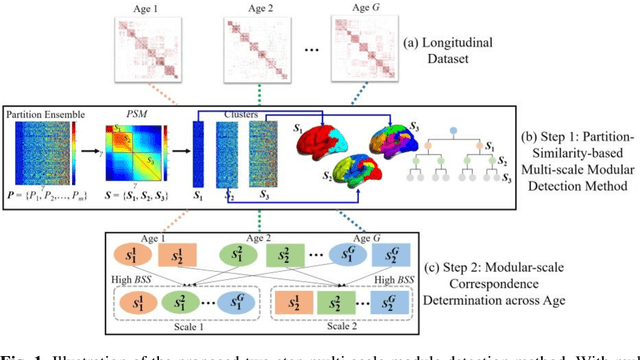

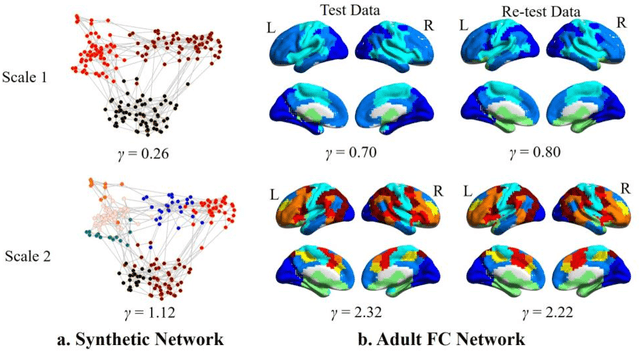

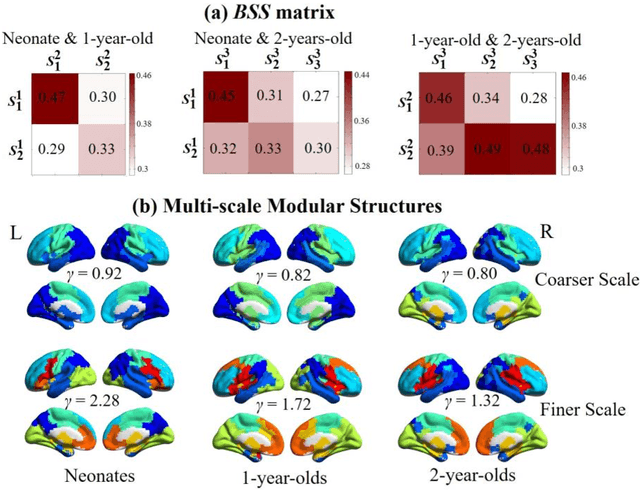

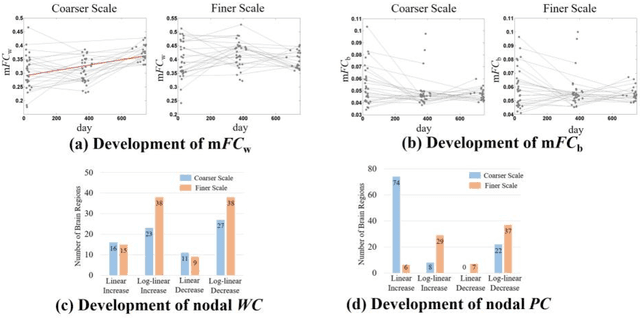

Abstract: The human brain is organized into hierarchically modular networks that facilitate efficient and stable information processing and support diverse cognitive processes during the course of development. While the remarkable reconfiguration of the functional brain network in early life has been firmly established, existing studies have investigated network development from a "single-scale" perspective, which ignores the richness engendered by its hierarchical nature. To fill this gap, this paper leverages a longitudinal infant resting-state functional magnetic resonance imaging dataset spanning birth to 2 years of age, and proposes a methodological framework to delineate the multi-scale reconfiguration of the functional brain network during early development. The framework consists of two parts. The first part is a novel two-step multi-scale module detection method that uncovers efficient and consistent modular structure in longitudinal data at multiple scales in a completely data-driven manner. The second part is a systematic approach that applies a linear mixed-effect model to four global and nodal module-related metrics to delineate scale-specific age-related changes in network organization. By applying this framework to the collected longitudinal infant dataset, we provide the first evidence that, in the first 2 years of life, the functional brain network co-evolves at different scales, with each scale displaying a unique reconfiguration pattern in terms of modular organization.
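One common way to examine modular structure at several scales is to score a partition with Newman modularity under a resolution parameter, where larger values favor smaller modules. The sketch below illustrates that generic idea only. The abstract does not specify the two-step detection method, so the resolution parameter `gamma`, the toy graph, and the function name `modularity` are assumptions, not the paper's procedure.

```python
def modularity(adj, labels, gamma=1.0):
    """Newman modularity of a partition of an undirected graph.

    Q = (1 / 2m) * sum_ij [A_ij - gamma * k_i * k_j / (2m)] * delta(c_i, c_j)

    adj    : symmetric adjacency matrix as a list of lists
    labels : module label for each node
    gamma  : resolution parameter (hypothetical scale knob)
    """
    n = len(adj)
    degrees = [sum(row) for row in adj]
    two_m = sum(degrees)  # twice the total edge weight
    q = 0.0
    for i in range(n):
        for j in range(n):
            if labels[i] == labels[j]:
                q += adj[i][j] - gamma * degrees[i] * degrees[j] / two_m
    return q / two_m


# Toy network: two triangles (nodes 0-2 and 3-5) joined by the edge 2-3.
edges = [(0, 1), (1, 2), (0, 2), (3, 4), (4, 5), (3, 5), (2, 3)]
adj = [[0] * 6 for _ in range(6)]
for a, b in edges:
    adj[a][b] = adj[b][a] = 1

two_modules = [0, 0, 0, 1, 1, 1]
one_module = [0, 0, 0, 0, 0, 0]

q_two = modularity(adj, two_modules)
q_one = modularity(adj, one_module)
```

Sweeping `gamma` and re-optimizing the partition at each value is one standard route to a multi-scale description. Metrics derived from such partitions could then be entered into a mixed-effect model with age as a fixed effect.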
