Xingang Yan

Learning Like Humans: Advancing LLM Reasoning Capabilities via Adaptive Difficulty Curriculum Learning and Expert-Guided Self-Reformulation

May 13, 2025

Abstract: Despite impressive progress in areas like mathematical reasoning, large language models still struggle to solve complex problems consistently. Drawing inspiration from key human learning strategies, we propose two strategies to enhance the capability of large language models to solve these problems. First, Adaptive Difficulty Curriculum Learning (ADCL) is a curriculum learning strategy that tackles the Difficulty Shift phenomenon (i.e., a model's perception of problem difficulty changes dynamically during training) by periodically re-estimating difficulty within upcoming data batches to maintain alignment with the model's evolving capabilities. Second, Expert-Guided Self-Reformulation (EGSR) is a reinforcement learning strategy that bridges the gap between imitation learning and pure exploration by guiding models to reformulate expert solutions within their own conceptual framework, rather than relying on direct imitation, fostering deeper understanding and knowledge assimilation. Extensive experiments on challenging mathematical reasoning benchmarks, using Qwen2.5-7B as the base model, demonstrate that these human-inspired strategies synergistically and significantly enhance performance. Notably, their combined application improves performance over the standard Zero-RL baseline by 10% on the AIME24 benchmark and 16.6% on AIME25.
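The ADCL idea described in the abstract (re-estimating difficulty for each upcoming batch as the model changes) can be illustrated with a minimal Python sketch. This is not the authors' implementation: the function name `adcl_schedule` and the callables `estimate_difficulty` and `train_on` are hypothetical placeholders, and the abstract does not specify how difficulty is measured or how batches are formed.

```python
from typing import Callable, List, Sequence, TypeVar

T = TypeVar("T")


def adcl_schedule(
    problems: Sequence[T],
    estimate_difficulty: Callable[[T], float],  # hypothetical: scores a problem with the CURRENT model
    train_on: Callable[[List[T]], None],        # hypothetical: one training phase on a batch
    num_batches: int,
) -> List[T]:
    """Train easy-to-hard, re-sorting each upcoming batch just before it is used."""
    # Initial curriculum: order all problems by difficulty under the starting model.
    ordered = sorted(problems, key=estimate_difficulty)

    # Split the ordered problems into contiguous batches (ceiling division).
    batch_size = max(1, -(-len(ordered) // num_batches))
    batches = [ordered[i:i + batch_size] for i in range(0, len(ordered), batch_size)]

    training_order: List[T] = []
    for batch in batches:
        # Difficulty Shift: the model has changed since the initial estimate,
        # so re-estimate difficulty within the upcoming batch and re-sort it.
        batch = sorted(batch, key=estimate_difficulty)
        train_on(batch)
        training_order.extend(batch)
    return training_order
```

In a real setup, `estimate_difficulty` might be something like one minus the current policy's pass rate on a problem, but that choice is an assumption here rather than something stated in the abstract.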

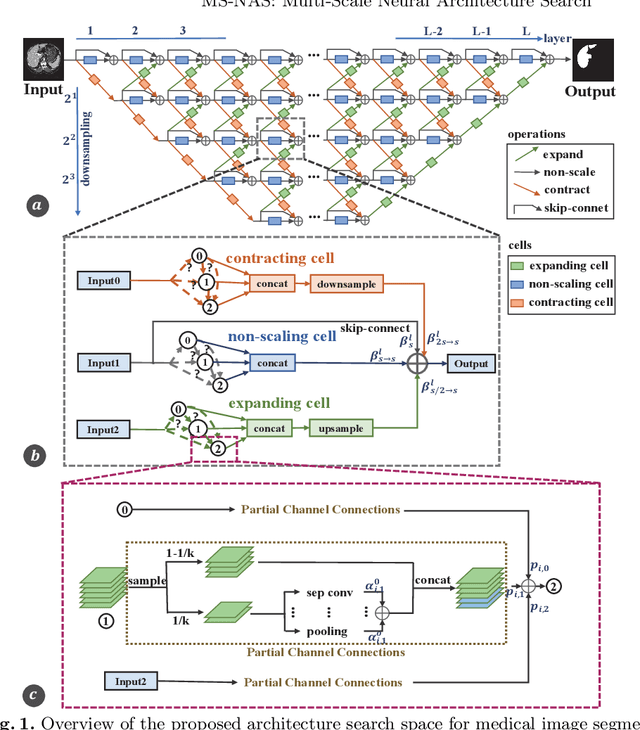

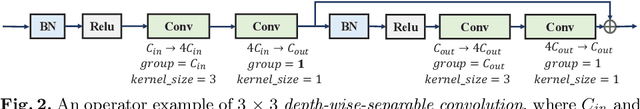

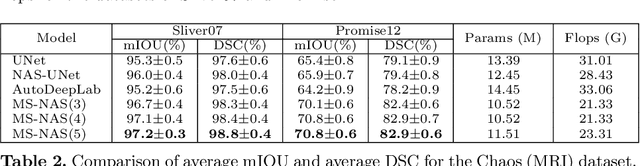

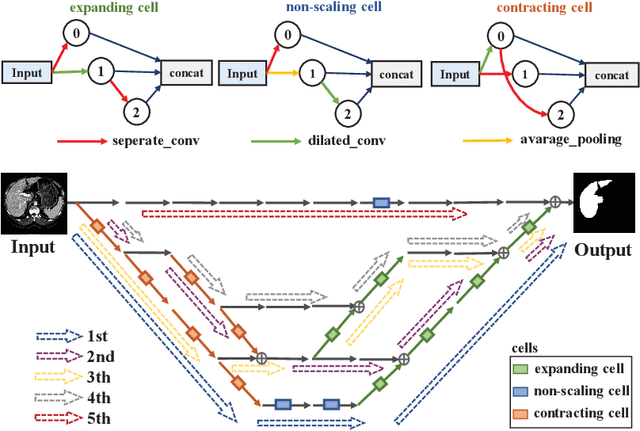

MS-NAS: Multi-Scale Neural Architecture Search for Medical Image Segmentation

Jul 13, 2020

Abstract: The recent breakthroughs of Neural Architecture Search (NAS) have motivated various applications in medical image segmentation. However, most existing works either simply rely on hyper-parameter tuning or stick to a fixed network backbone, thereby limiting the search space in which more efficient architectures could be identified. This paper presents a Multi-Scale NAS (MS-NAS) framework that features a multi-scale search space, from network backbone to cell operation, and a multi-scale fusion capability to fuse features of different sizes. To mitigate the computational overhead of the larger search space, a partial channel connection scheme and a two-step decoding method are used while maintaining optimization quality. Experimental results show that on various datasets for segmentation, MS-NAS outperforms the state-of-the-art methods and achieves 0.6-5.4% mIOU and 0.4-3.5% DSC improvements, while the computational resource consumption is reduced by 18.0-24.9%.
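For readers unfamiliar with the two reported metrics, the sketch below computes the Dice similarity coefficient (DSC) and intersection-over-union (IoU) for a single binary mask using their standard definitions. The function name `dice_and_iou` and the flat-list mask format are illustrative assumptions; the abstract does not describe the paper's evaluation code, and mIOU would additionally average IoU over classes.

```python
from typing import Sequence, Tuple


def dice_and_iou(pred: Sequence[int], target: Sequence[int]) -> Tuple[float, float]:
    """Return (DSC, IoU) for two flattened binary masks of equal length."""
    if len(pred) != len(target):
        raise ValueError("masks must have the same length")

    # Indices of foreground pixels in each mask.
    pred_fg = {i for i, v in enumerate(pred) if v}
    target_fg = {i for i, v in enumerate(target) if v}

    intersection = len(pred_fg & target_fg)
    union = len(pred_fg | target_fg)
    total = len(pred_fg) + len(target_fg)

    # Convention assumed here: two empty masks count as a perfect match.
    dice = 2 * intersection / total if total else 1.0
    iou = intersection / union if union else 1.0
    return dice, iou
```

For the same pair of masks DSC is never lower than IoU, which is one reason improvements in the two metrics are reported as separate ranges.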
