Xiaofei Mao

DriveAgent-R1: Advancing VLM-based Autonomous Driving with Hybrid Thinking and Active Perception

Jul 28, 2025

Abstract: Vision-Language Models (VLMs) are advancing autonomous driving, yet their potential is constrained by myopic decision-making and passive perception, limiting reliability in complex environments. We introduce DriveAgent-R1 to tackle these challenges in long-horizon, high-level behavioral decision-making. DriveAgent-R1 features two core innovations: a Hybrid-Thinking framework that adaptively switches between efficient text-based and in-depth tool-based reasoning, and an Active Perception mechanism with a vision toolkit to proactively resolve uncertainties, thereby balancing decision-making efficiency and reliability. The agent is trained using a novel, three-stage progressive reinforcement learning strategy designed to master these hybrid capabilities. Extensive experiments demonstrate that DriveAgent-R1 achieves state-of-the-art performance, outperforming even leading proprietary large multimodal models, such as Claude Sonnet 4. Ablation studies validate our approach and confirm that the agent's decisions are robustly grounded in actively perceived visual evidence, paving a path toward safer and more intelligent autonomous systems.

Integrating Coarse Granularity Part-level Features with Supervised Global-level Features for Person Re-identification

Oct 15, 2020

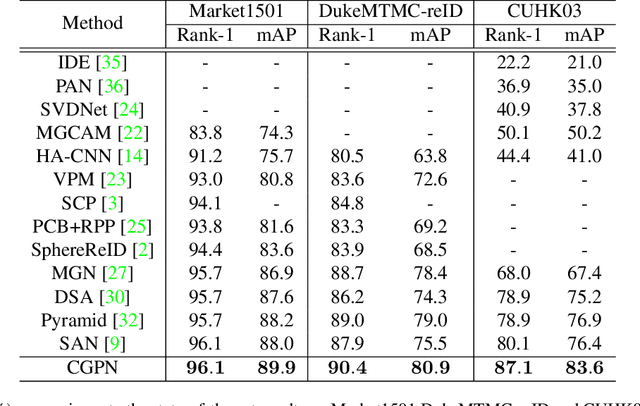

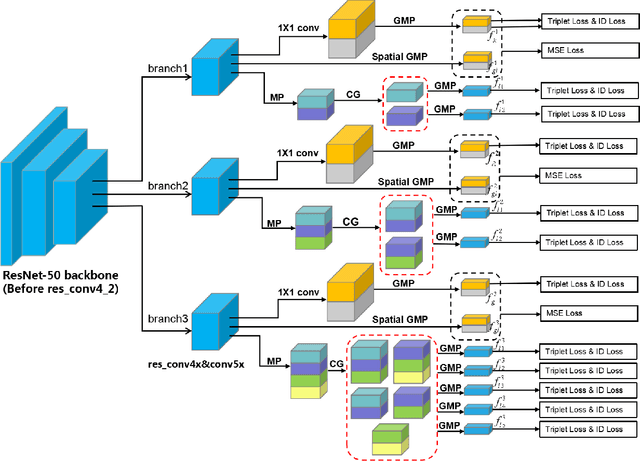

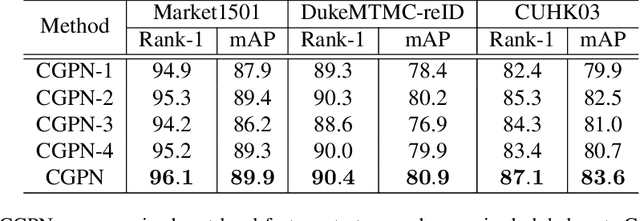

Abstract: Holistic person re-identification (Re-ID) and partial person Re-ID have each made great progress in recent years. However, real-world scenarios often include both holistic and partial pedestrian images, which makes methods designed for only holistic or only partial Re-ID hard to apply. In this paper, we propose a robust coarse granularity part-level person Re-ID network (CGPN), which not only extracts robust regional-level body features but also integrates supervised global features for both holistic and partial person images. CGPN offers a two-fold benefit toward higher Re-ID accuracy. On one hand, CGPN learns to extract effective body part features for both holistic and partial person images. On the other hand, compared with extracting global features directly from the backbone network, CGPN learns to extract more accurate global features with a supervision strategy. The single model, trained on three Re-ID datasets (Market-1501, DukeMTMC-reID and CUHK03), achieves state-of-the-art performance and outperforms existing approaches. In particular, on CUHK03, which is the most challenging dataset for person Re-ID, in single-query mode we obtain a top result of Rank-1/mAP = 87.1%/83.6% without re-ranking, outperforming the current best method by +7.0%/+6.7%.
