Wesley P. Chan

University of British Columbia

Demonstrating Cloth Folding to Robots: Design and Evaluation of a 2D and a 3D User Interface

Apr 07, 2021

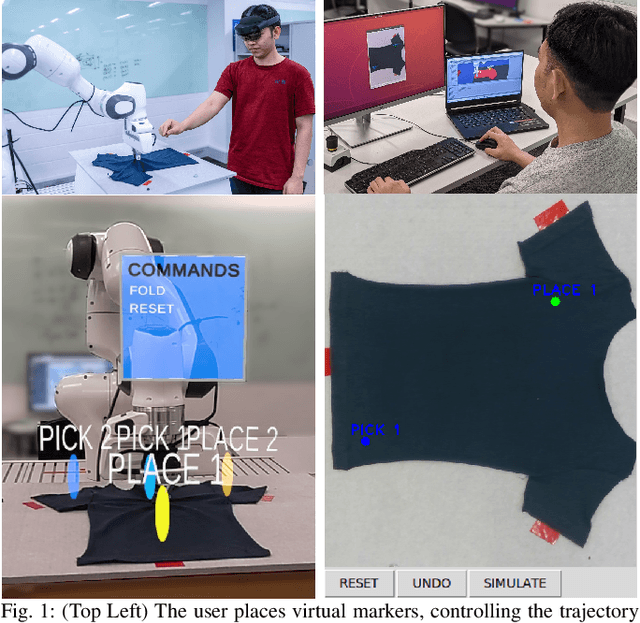

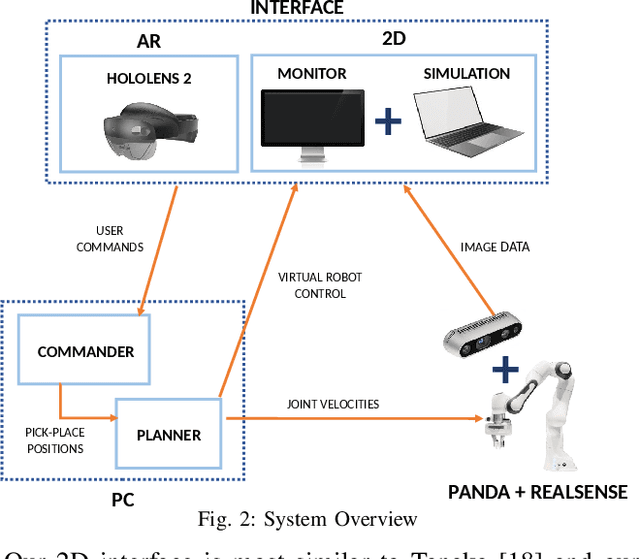

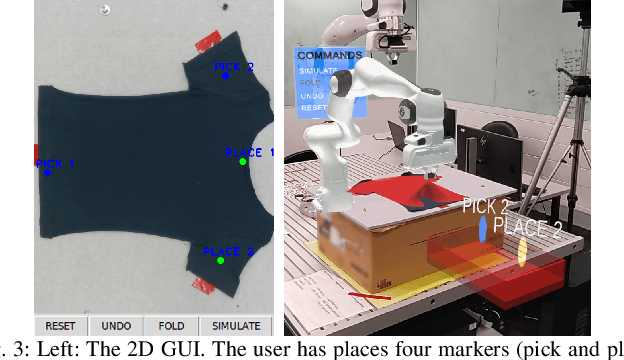

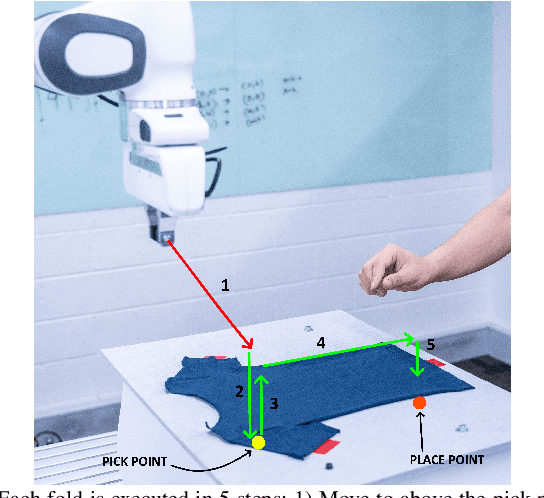

Abstract: An appropriate user interface for collecting human demonstration data for deformable object manipulation has been mostly overlooked in the literature. We present an interaction design for demonstrating cloth folding to robots. Users choose pick and place points on the cloth and can preview a visualization of a simulated cloth before real-robot execution. Two interfaces are proposed: a 2D display-and-mouse interface where points are placed by clicking on an image of the cloth, and a 3D Augmented Reality interface where the chosen points are placed by hand gestures. We conduct a user study with 18 participants, in which each user completed two sequential folds to achieve a cloth goal shape. Results show that while both interfaces were acceptable, the 3D interface was found to be more suitable for understanding the task, and the 2D interface suitable for repetition. Results also show that fold previews improve three key metrics: task efficiency, the ability to predict the final shape of the cloth, and overall user satisfaction.

Visualizing Robot Intent for Object Handovers with Augmented Reality

Mar 06, 2021

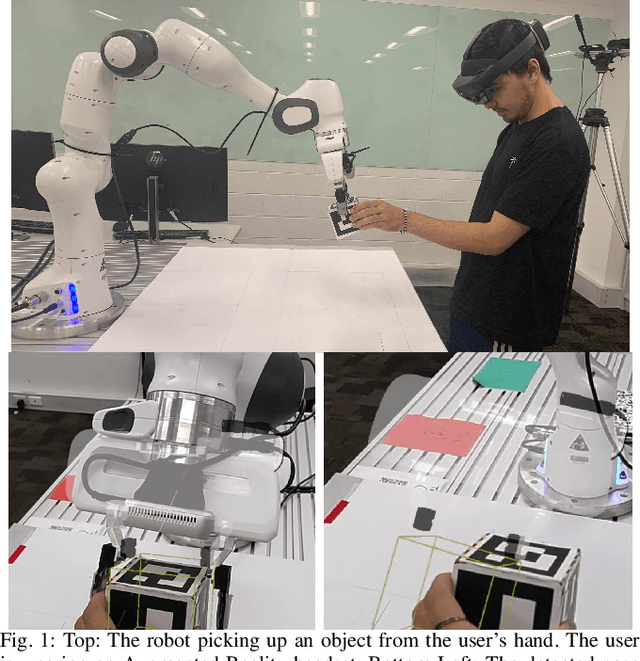

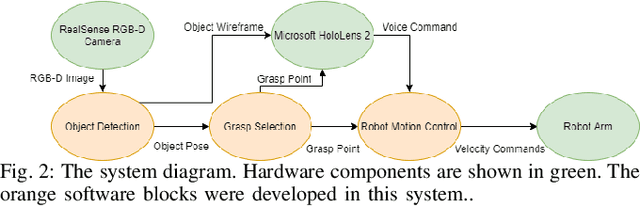

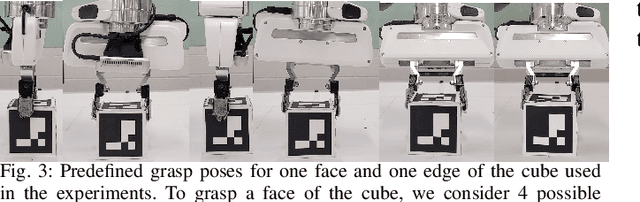

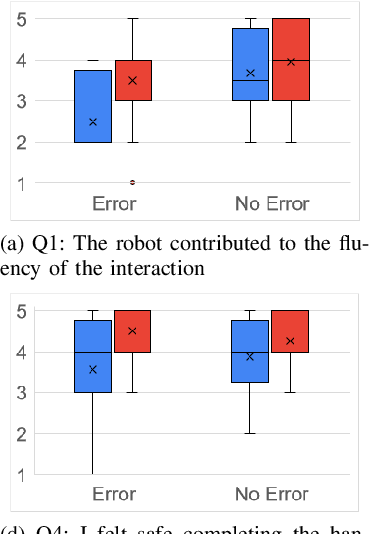

Abstract: Humans are very skillful at communicating their intent for when and where a handover will occur. In contrast, even state-of-the-art robotic implementations of handovers display a general lack of communication skills. We propose visualizing the internal state and intent of robots for Human-to-Robot Handovers using Augmented Reality. Specifically, we visualize 3D models of the object and the robotic gripper to communicate the robot's estimate of where the object is and the pose in which the robot intends to grasp the object. We conduct a user study with 16 participants, in which each participant handed over a cube-shaped object to the robot 12 times. Results show that visualizing robot intent using augmented reality substantially improves the subjective experience of the users for handovers and decreases the time to transfer the object. Results also indicate that the benefits of augmented reality are still present even when the robot makes errors in localizing the object.

Autonomous Person-Specific Following Robot

Nov 11, 2020

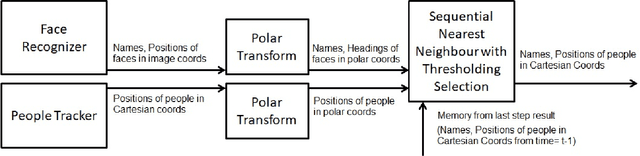

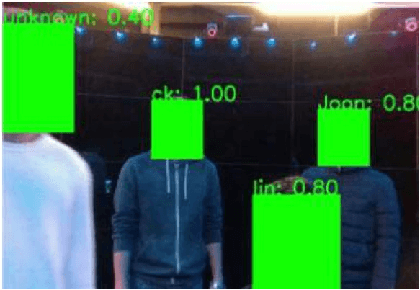

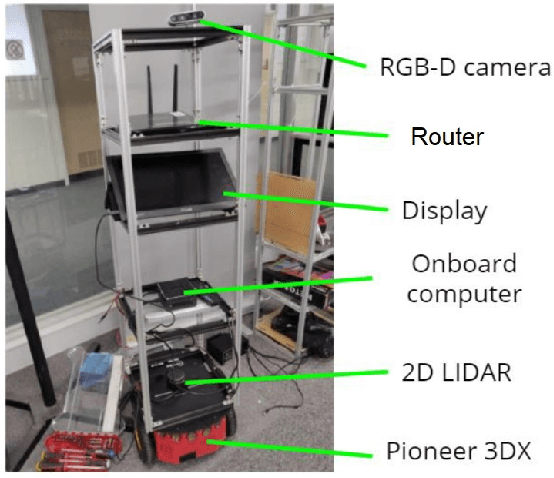

Abstract: Following a specific user is a desired or even required capability for service robots in many human-robot collaborative applications. However, most existing person-following robots follow people without knowledge of who they are following. In this paper, we propose an identity-specific person tracker, capable of tracking and identifying nearby people, to enable person-specific following. Our proposed method uses a Sequential Nearest Neighbour with Thresholding Selection algorithm that we devised to fuse an anonymous person tracker with a face recogniser. Experimental results comparing our proposed method with alternative approaches show that our method achieves better performance in tracking and identifying people, as well as improved robot performance in following a target individual.
