Wenqi Wei

Rethinking Learning Rate Tuning in the Era of Large Language Models

Sep 16, 2023

Abstract: Large Language Models (LLMs) represent the recent success of deep learning in achieving remarkable human-like predictive performance. It has become a mainstream strategy to leverage fine-tuning to adapt LLMs for various real-world applications due to the prohibitive expenses associated with LLM training. The learning rate is one of the most important hyperparameters in LLM fine-tuning with direct impacts on both fine-tuning efficiency and fine-tuned LLM quality. Existing learning rate policies are primarily designed for training traditional deep neural networks (DNNs), which may not work well for LLM fine-tuning. We reassess the research challenges and opportunities of learning rate tuning in the coming era of Large Language Models. This paper makes three original contributions. First, we revisit existing learning rate policies to analyze the critical challenges of learning rate tuning in the era of LLMs. Second, we present LRBench++ to benchmark learning rate policies and facilitate learning rate tuning for both traditional DNNs and LLMs. Third, our experimental analysis with LRBench++ demonstrates the key differences between LLM fine-tuning and traditional DNN training and validates our analysis.

Explicit Time Embedding Based Cascade Attention Network for Information Popularity Prediction

Aug 19, 2023

Abstract: Predicting information cascade popularity is a fundamental problem in social networks. Capturing temporal attributes and cascade role information (e.g., cascade graphs and cascade sequences) is necessary for understanding the information cascade. Current methods rarely focus on unifying this information for popularity prediction, which prevents them from modeling the full properties of cascades and achieving satisfactory prediction performance. In this paper, we propose an explicit Time embedding based Cascade Attention Network (TCAN) as a novel popularity prediction architecture for large-scale information networks. TCAN integrates temporal attributes (i.e., periodicity, linearity, and non-linear scaling) into node features via a general time embedding approach (TE), and then employs a cascade graph attention encoder (CGAT) and a cascade sequence attention encoder (CSAT) to fully learn the representation of cascade graphs and cascade sequences. We use two real-world datasets (i.e., Weibo and APS) with tens of thousands of cascade samples to validate our methods. Experimental results show that TCAN obtains mean squared logarithmic errors (MSLE) of 2.007 and 1.201 and running times of 1.76 hours and 0.15 hours on the two datasets, respectively. Furthermore, TCAN outperforms other representative baselines by 10.4%, 3.8%, and 10.4% in terms of MSLE, MAE, and R-squared on average while maintaining good interpretability.

Few-shot Multi-domain Knowledge Rearming for Context-aware Defence against Advanced Persistent Threats

Jun 14, 2023

Abstract: Advanced persistent threats (APTs) have novel features such as multi-stage penetration, highly-tailored intention, and evasive tactics. APT defense requires fusing multi-dimensional cyber threat intelligence data to identify attack intentions and conducting efficient knowledge discovery with data-driven machine learning to recognize entity relationships. However, data-driven machine learning lacks generalization ability on fresh or unknown samples, reducing the accuracy and practicality of the defense model. Besides, the private deployment of these APT defense models in heterogeneous environments and on various network devices requires significant investment in context awareness (such as known attack entities, continuous network states, and current security strategies). In this paper, we propose a few-shot multi-domain knowledge rearming (FMKR) scheme for context-aware defense against APTs. First, by completing multiple small tasks that are generated from different network domains with meta-learning, FMKR trains a model with good discrimination and generalization ability for fresh and unknown APT attacks. In each FMKR task, both threat intelligence and local entities are fused into the support/query sets in meta-learning to identify possible attack stages. Second, to rearm current security strategies, a fine-tuning-based deployment mechanism is proposed to transfer learned knowledge into the student model while minimizing the defense cost. Compared to multiple model replacement strategies, FMKR provides a faster response to attack behaviors while consuming less scheduling cost. Based on the feedback from multiple real users of the Industrial Internet of Things (IIoT) over 2 months, we demonstrate that the proposed scheme can improve the defense satisfaction rate.

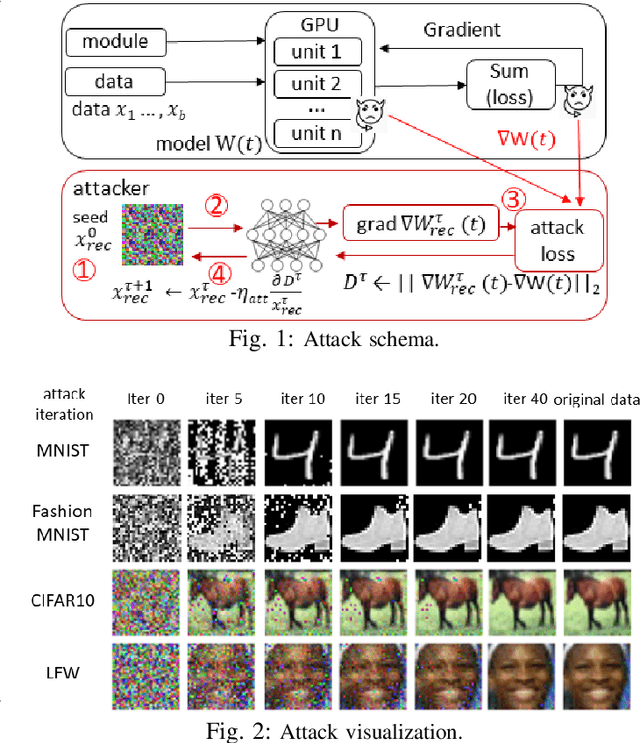

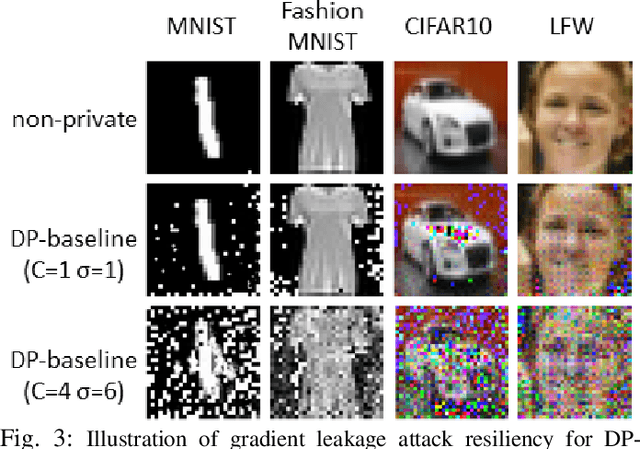

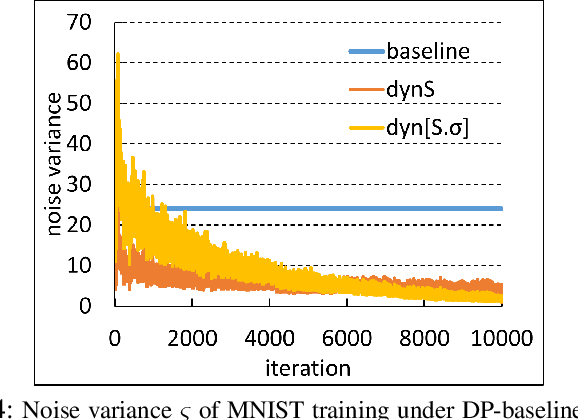

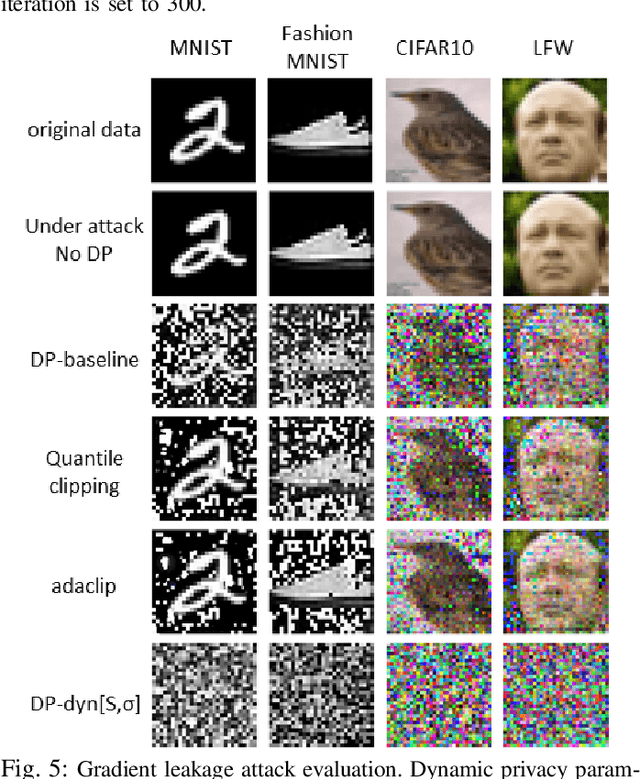

Securing Distributed SGD against Gradient Leakage Threats

May 10, 2023

Abstract: This paper presents a holistic approach to gradient leakage resilient distributed Stochastic Gradient Descent (SGD). First, we analyze two types of strategies for privacy-enhanced federated learning: (i) gradient pruning with random selection or low-rank filtering and (ii) gradient perturbation with additive random noise or differential privacy noise. We analyze the inherent limitations of these approaches and their underlying impact on privacy guarantee, model accuracy, and attack resilience. Next, we present a gradient leakage resilient approach to securing distributed SGD in federated learning, with differential privacy controlled noise as the tool. Unlike conventional methods that use per-client federated noise injection and a fixed noise parameter strategy, our approach keeps track of the trend of per-example gradient updates, so that adaptive noise injection stays closely aligned with that trend throughout federated model training. Finally, we provide an empirical privacy analysis on the privacy guarantee, model utility, and attack resilience of the proposed approach. Extensive evaluation using five benchmark datasets demonstrates that our gradient leakage resilient approach can outperform the state-of-the-art methods with competitive accuracy performance, strong differential privacy guarantee, and high resilience against gradient leakage attacks. The code associated with this paper is available at https://github.com/git-disl/Fed-alphaCDP.

STDLens: Model Hijacking-Resilient Federated Learning for Object Detection

Mar 25, 2023

Abstract: Federated Learning (FL) has been gaining popularity as a collaborative learning framework to train deep learning-based object detection models over a distributed population of clients. Despite its advantages, FL is vulnerable to model hijacking. The attacker can control how the object detection system should misbehave by implanting Trojaned gradients using only a small number of compromised clients in the collaborative learning process. This paper introduces STDLens, a principled approach to safeguarding FL against such attacks. We first investigate existing mitigation mechanisms and analyze their failures caused by the inherent errors in spatial clustering analysis on gradients. Based on the insights, we introduce a three-tier forensic framework to identify and expel Trojaned gradients and reclaim the performance over the course of FL. We consider three types of adaptive attacks and demonstrate the robustness of STDLens against advanced adversaries. Extensive experiments show that STDLens can protect FL against different model hijacking attacks and outperform existing methods in identifying and removing Trojaned gradients with significantly higher precision and much lower false-positive rates.

GNN-Ensemble: Towards Random Decision Graph Neural Networks

Mar 20, 2023

Abstract: Graph Neural Networks (GNNs) have enjoyed widespread application to graph-structured data. However, existing graph-based applications commonly lack annotated data. GNNs are required to learn latent patterns from a limited amount of training data to perform inferences on a vast amount of test data. The increased complexity of GNNs, as well as a single point of model parameter initialization, usually leads to overfitting and sub-optimal performance. In addition, it is known that GNNs are vulnerable to adversarial attacks. In this paper, we push one step forward on the ensemble learning of GNNs with improved accuracy, generalization, and adversarial robustness. Following the principles of stochastic modeling, we propose a new method called GNN-Ensemble to construct an ensemble of random decision graph neural networks whose capacity can be arbitrarily expanded for improvement in performance. The essence of the method is to build multiple GNNs in randomly selected substructures in the topological space and subfeatures in the feature space, and then combine them for final decision making. These GNNs in different substructure and subfeature spaces generalize their classification in complementary ways. Consequently, their combined classification performance can be improved and overfitting on the training data can be effectively reduced. In the meantime, we show that GNN-Ensemble can significantly improve the adversarial robustness against attacks on GNNs.
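As a rough illustration of the random-subspace idea described in the abstract above, the following sketch draws a random node subset and a random feature subset for each ensemble member and combines member predictions by plurality vote. Everything here is an assumption made for illustration: the function names, the use of node subsets as a stand-in for "substructures", the sampling fractions, and the voting rule are not taken from the paper, and no GNN is actually trained.

```python
import random
from collections import Counter


def sample_subspaces(num_nodes, num_features, num_members,
                     node_frac=0.5, feat_frac=0.5, seed=0):
    """Draw one random node subset and one random feature subset per member.

    Hypothetical helper: node subsets stand in for the "substructures"
    mentioned in the abstract; the fractions are arbitrary defaults.
    """
    rng = random.Random(seed)
    n_nodes = max(1, int(num_nodes * node_frac))
    n_feats = max(1, int(num_features * feat_frac))
    return [
        (sorted(rng.sample(range(num_nodes), n_nodes)),
         sorted(rng.sample(range(num_features), n_feats)))
        for _ in range(num_members)
    ]


def majority_vote(member_predictions):
    """Combine per-member label lists (one label per test node) by plurality."""
    combined = []
    for labels in zip(*member_predictions):
        combined.append(Counter(labels).most_common(1)[0][0])
    return combined


# Each member would be trained on its own (node subset, feature subset) pair;
# here we only show the sampling and the final combination step.
subspaces = sample_subspaces(num_nodes=10, num_features=6, num_members=3)
votes = majority_vote([[0, 1, 1], [0, 1, 0], [1, 1, 0]])
```

In this sketch the diversity among members comes only from the sampled subsets; how the actual method selects substructures and combines decisions should be checked against the paper.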

Machine Learning for Synthetic Data Generation: a Review

Feb 08, 2023

Abstract: Data plays a crucial role in machine learning. However, in real-world applications, there are several problems with data, e.g., data are of low quality; a limited number of data points leads to under-fitting of the machine learning model; it is hard to access the data due to privacy, safety and regulatory concerns. Synthetic data generation offers a promising new avenue, as it can be shared and used in ways that real-world data cannot. This paper systematically reviews the existing works that leverage machine learning models for synthetic data generation. Specifically, we discuss the synthetic data generation works from several perspectives: (i) applications, including computer vision, speech, natural language, healthcare, and business; (ii) machine learning methods, particularly neural network architectures and deep generative models; (iii) privacy and fairness issues. In addition, we identify the challenges and opportunities in this emerging field and suggest future research directions.

EENet: Learning to Early Exit for Adaptive Inference

Jan 15, 2023

Abstract: Budgeted adaptive inference with early exits is an emerging technique to improve the computational efficiency of deep neural networks (DNNs) for edge AI applications with limited resources at test time. This method leverages the fact that different test data samples may not require the same amount of computation for a correct prediction. By allowing some test examples to exit early instead of passing through all layers of the DNN, we can reduce latency and improve throughput of edge inference while preserving performance. Although there have been numerous studies on designing specialized DNN architectures for training early-exit enabled DNN models, most existing work employs hand-tuned or manual rule-based early exit policies. In this study, we introduce a novel multi-exit DNN inference framework, called EENet, which leverages multi-objective learning to optimize the early exit policy for a trained multi-exit DNN under a given inference budget. This paper makes two novel contributions. First, we introduce the concept of early exit utility scores by combining diverse confidence measures with class-wise prediction scores to better estimate the correctness of test-time predictions at a given exit. Second, we train a lightweight, budget-driven, multi-objective neural network over validation predictions to learn the exit assignment scheduling for query examples at test time. The EENet early exit scheduler optimizes both the distribution of test samples to different exits and the selection of the exit utility thresholds such that the given inference budget is satisfied while the performance metric is maximized. Extensive experiments are conducted on five benchmarks, including three image datasets (CIFAR-10, CIFAR-100, ImageNet) and two NLP datasets (SST-2, AgNews). The results demonstrate the performance improvements of EENet compared to existing representative early exit techniques.
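To make the notion of a threshold-based early exit policy concrete, here is a minimal sketch of the kind of hand-tuned rule the abstract contrasts EENet with. It assumes the maximum softmax probability as the confidence measure and fixed per-exit thresholds; the function names and the example numbers are hypothetical, and the learned utility scores and scheduler of EENet are not reproduced.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def early_exit_predict(exit_logits, thresholds):
    """Return (exit_index, predicted_class) for a single sample.

    exit_logits: one list of logits per exit, ordered shallow to deep.
    thresholds:  one confidence threshold per non-final exit (assumed
                 hand-set here; EENet learns its thresholds instead).
    """
    for k, logits in enumerate(exit_logits[:-1]):
        probs = softmax(logits)
        confidence = max(probs)
        if confidence >= thresholds[k]:
            # Confident enough: stop here and skip the deeper layers.
            return k, probs.index(confidence)
    # No early exit fired, so fall through to the final classifier.
    final = softmax(exit_logits[-1])
    return len(exit_logits) - 1, final.index(max(final))


sample = [[0.1, 0.2], [3.0, 0.0], [0.0, 5.0]]
result_loose = early_exit_predict(sample, thresholds=[0.9, 0.9])
result_strict = early_exit_predict(sample, thresholds=[0.99, 0.99])
```

Raising the thresholds pushes more samples to deeper exits, which costs more computation; that trade-off against a fixed budget is what a learned scheduler would tune.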

Gradient Leakage Attack Resilient Deep Learning

Dec 25, 2021

Abstract: Gradient leakage attacks are considered one of the wickedest privacy threats in deep learning: attackers covertly spy on gradient updates during iterative training without compromising model training quality, and yet secretly reconstruct sensitive training data from the leaked gradients with a high attack success rate. Although deep learning with differential privacy is a de facto standard for publishing deep learning models with a differential privacy guarantee, we show that differentially private algorithms with fixed privacy parameters are vulnerable to gradient leakage attacks. This paper investigates alternative approaches to gradient leakage resilient deep learning with differential privacy (DP). First, we analyze existing implementations of deep learning with differential privacy, which use fixed privacy parameters and therefore inject noise of constant variance into the gradients in all layers. Despite the DP guarantee provided, the method suffers from low accuracy and is vulnerable to gradient leakage attacks. Second, we present a gradient leakage resilient deep learning approach with a differential privacy guarantee by using dynamic privacy parameters. Unlike fixed-parameter strategies that result in constant noise variance, different dynamic parameter strategies present alternative techniques to introduce adaptive noise variance and adaptive noise injection which are closely aligned to the trend of gradient updates during differentially private model training. Finally, we describe four complementary metrics to evaluate and compare alternative approaches.
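The contrast between fixed and dynamic privacy parameters can be sketched as follows. This is a minimal illustration under stated assumptions, not the paper's algorithm: it uses the common recipe of clipping each per-example gradient to an L2 bound and adding Gaussian noise with standard deviation equal to a noise multiplier times that bound, and it assumes an exponentially decaying clipping bound as one possible dynamic strategy. All function names and constants are hypothetical, and no privacy accounting is performed.

```python
import math
import random


def clip_by_l2_norm(grad, bound):
    """Scale a gradient vector so that its L2 norm is at most `bound`."""
    norm = math.sqrt(sum(g * g for g in grad))
    scale = min(1.0, bound / norm) if norm > 0 else 1.0
    return [g * scale for g in grad]


def decayed_bound(initial_bound, decay_rate, step):
    """Assumed dynamic strategy: shrink the clipping bound as training proceeds."""
    return initial_bound * (decay_rate ** step)


def noisy_average(per_example_grads, bound, noise_multiplier, rng):
    """Clip each per-example gradient, sum, add Gaussian noise, then average."""
    clipped = [clip_by_l2_norm(g, bound) for g in per_example_grads]
    dim = len(clipped[0])
    std = noise_multiplier * bound  # noise scale follows the clipping bound
    summed = [sum(g[i] for g in clipped) + rng.gauss(0.0, std)
              for i in range(dim)]
    return [s / len(clipped) for s in summed]


rng = random.Random(0)
grads = [[3.0, 4.0], [0.0, 0.5]]
# Fixed parameters: the same bound, hence the same noise variance, every step.
fixed = noisy_average(grads, bound=1.0, noise_multiplier=1.1, rng=rng)
# Dynamic parameters: the bound (and the noise scale with it) decays over steps.
dynamic = noisy_average(grads, bound=decayed_bound(4.0, 0.5, step=2),
                        noise_multiplier=1.1, rng=rng)
```

Because the noise standard deviation is tied to the clipping bound, a bound that shrinks as gradient norms shrink yields noise that tracks the trend of gradient updates rather than staying constant.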

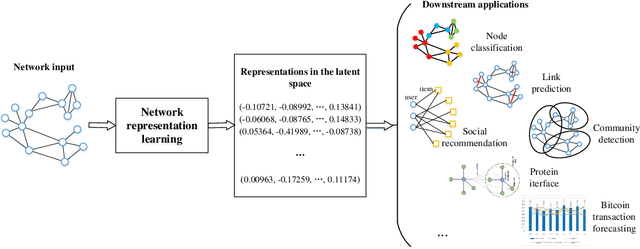

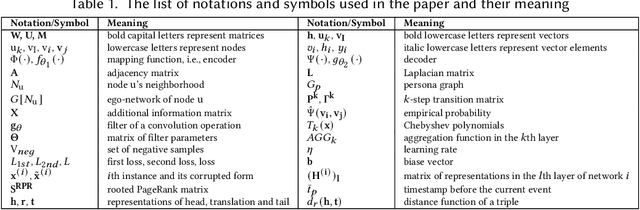

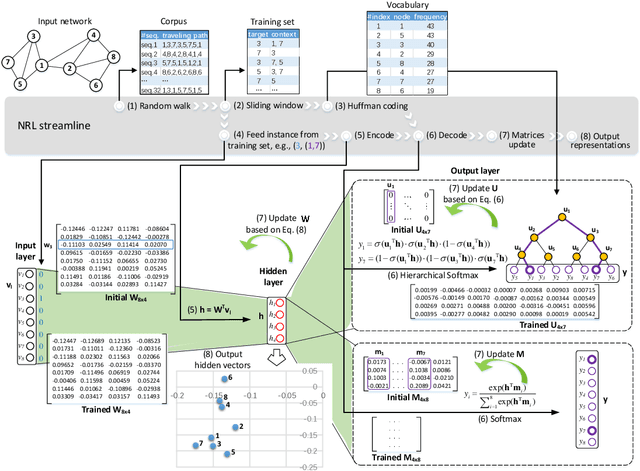

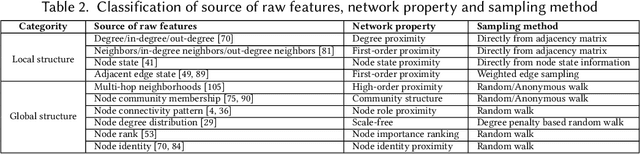

Network Representation Learning: From Preprocessing, Feature Extraction to Node Embedding

Oct 14, 2021

Abstract: Network representation learning (NRL) advances the conventional graph mining of social networks, knowledge graphs, and complex biomedical and physics information networks. Dozens of network representation learning algorithms have been reported in the literature. Most of them focus on learning node embeddings for homogeneous networks, but they differ in the specific encoding schemes and the specific types of node semantics captured and used for learning node embeddings. This survey paper reviews the design principles and the different node embedding techniques for network representation learning over homogeneous networks. To facilitate the comparison of different node embedding algorithms, we introduce a unified reference framework to divide and generalize the node embedding learning process on a given network into preprocessing steps, node feature extraction steps, and node embedding model training for an NRL task such as link prediction and node clustering. With this unifying reference framework, we highlight the representative methods, models, and techniques used at different stages of the node embedding model learning process. This survey not only helps researchers and practitioners to gain an in-depth understanding of different network representation learning techniques but also provides practical guidelines for designing and developing the next generation of network representation learning algorithms and systems.
