Wenjing Hong

Evolutionary Reinforcement Learning based AI tutor for Socratic Interdisciplinary Instruction

Dec 12, 2025

Abstract: Cultivating higher-order cognitive abilities -- such as knowledge integration, critical thinking, and creativity -- in modern STEM education necessitates a pedagogical shift from passive knowledge transmission to active Socratic construction. Although Large Language Models (LLMs) hold promise for interdisciplinary STEM education, current methodologies employing Prompt Engineering (PE), Supervised Fine-tuning (SFT), or standard Reinforcement Learning (RL) often fall short of supporting this paradigm. Existing methods are hindered by three fundamental challenges: the inability to dynamically model latent student cognitive states; the severe reward sparsity and delay inherent in long-term educational goals; and a tendency toward policy collapse, with a loss of strategic diversity, due to reliance on behavioral cloning. Recognizing the unobservability and dynamic complexity of these interactions, we formalize the Socratic Interdisciplinary Instructional Problem (SIIP) as a structured Partially Observable Markov Decision Process (POMDP), which demands simultaneous global exploration and fine-grained policy refinement. To this end, we propose ERL4SIIP, a novel Evolutionary Reinforcement Learning (ERL) framework specifically tailored for this domain. ERL4SIIP integrates: (1) a dynamic student simulator grounded in a STEM knowledge graph for latent state modeling; (2) a Hierarchical Reward Mechanism that decomposes long-horizon goals into dense signals; and (3) a LoRA-Division-based optimization strategy that couples evolutionary algorithms for population-level global search with PPO for local gradient ascent.

Surrogate-Assisted Evolutionary Reinforcement Learning Based on Autoencoder and Hyperbolic Neural Network

May 26, 2025

Abstract: Evolutionary Reinforcement Learning (ERL), which trains Reinforcement Learning (RL) policies with Evolutionary Algorithms (EAs), has demonstrated stronger exploration capabilities and greater robustness than traditional policy-gradient methods. However, ERL suffers from high computational cost and low search efficiency, as EAs require evaluating numerous candidate policies with expensive simulations, many of which are ineffective and do not contribute meaningfully to training. One intuitive way to reduce the ineffective evaluations is to adopt surrogates. Unfortunately, existing ERL policies are often modeled as deep neural networks (DNNs) and thus naturally represented as high-dimensional vectors containing millions of weights, which makes building effective surrogates for ERL policies extremely challenging. This paper proposes a novel surrogate-assisted ERL that integrates Autoencoders (AE) and Hyperbolic Neural Networks (HNN). Specifically, the AE compresses high-dimensional policies into low-dimensional representations while extracting key features as the inputs for the surrogate. The HNN, functioning as a classification-based surrogate model, can learn complex nonlinear relationships from sampled data and enable more accurate pre-selection of the sampled policies without real evaluations. Experiments on 10 Atari and 4 MuJoCo games verify that the proposed method significantly outperforms previous approaches. The search trajectories guided by the AE and HNN are also shown visually to be more effective in terms of both exploration and convergence. This paper not only presents the first learnable policy embedding and surrogate-modeling modules for high-dimensional ERL policies, but also empirically reveals when and why they can be successful.
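The pre-selection idea in this abstract (embed each candidate policy, classify it with a surrogate, and spend real evaluations only on the promising ones) can be illustrated with a minimal sketch. Everything below is an assumption made for illustration: `embed` averages chunks of weights in place of a learned autoencoder, `CentroidSurrogate` is a Euclidean nearest-centroid classifier in place of a hyperbolic neural network, and the archive, labels, and candidates are invented toy data rather than anything from the paper.

```python
from typing import List, Sequence

Vector = List[float]


def embed(policy: Sequence[float], dim: int = 2) -> Vector:
    """Stand-in for an autoencoder's encoder: average equal-sized weight chunks."""
    chunk = len(policy) // dim
    return [sum(policy[i * chunk:(i + 1) * chunk]) / chunk for i in range(dim)]


def centroid(vectors: List[Vector]) -> Vector:
    """Component-wise mean of a non-empty list of vectors."""
    return [sum(col) / len(vectors) for col in zip(*vectors)]


def sq_dist(a: Vector, b: Vector) -> float:
    """Squared Euclidean distance between two vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


class CentroidSurrogate:
    """Stand-in classifier (not an HNN): 'promising' means nearer the good centroid."""

    def fit(self, embeddings: List[Vector], labels: List[bool]) -> None:
        self.good = centroid([z for z, ok in zip(embeddings, labels) if ok])
        self.bad = centroid([z for z, ok in zip(embeddings, labels) if not ok])

    def promising(self, z: Vector) -> bool:
        return sq_dist(z, self.good) < sq_dist(z, self.bad)


# Hypothetical archive of already-evaluated policies, labelled good or bad.
archive = [
    [1.0, 1.0, 1.0, 1.0],
    [0.8, 1.2, 1.0, 0.9],
    [-1.0, -1.0, -1.0, -1.0],
    [-0.5, -1.5, -1.0, -0.8],
]
labels = [True, True, False, False]

surrogate = CentroidSurrogate()
surrogate.fit([embed(p) for p in archive], labels)

# New candidates produced by the evolutionary operators (toy values).
candidates = [
    [0.9, 1.1, 1.0, 1.0],
    [-0.9, -1.1, -1.0, -1.0],
    [0.5, 0.7, 0.6, 0.8],
    [-0.2, -0.4, -0.3, -0.1],
]

# Only candidates the surrogate marks as promising go on to real evaluation.
selected = [c for c in candidates if surrogate.promising(embed(c))]
print(f"real evaluations needed: {len(selected)} of {len(candidates)}")
```

In this toy run the surrogate filters out half of the candidates before any expensive simulation is called; how much is saved in practice depends on the quality of the embedding and the classifier.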

Automatic Construction of Parallel Algorithm Portfolios for Multi-objective Optimization

Nov 17, 2022

Abstract: It has been widely observed that there exists no universal best Multi-objective Evolutionary Algorithm (MOEA) dominating all other MOEAs on all possible Multi-objective Optimization Problems (MOPs). In this work, we advocate using the Parallel Algorithm Portfolio (PAP), which runs multiple MOEAs independently in parallel and gets the best out of them, to combine the advantages of different MOEAs. Since the manual construction of PAPs is non-trivial and tedious, we propose to automatically construct high-performance PAPs for solving MOPs. Specifically, we first propose a variant of PAPs, namely MOEAs/PAP, which can better determine the output solution set for MOPs than conventional PAPs. Then, we present an automatic construction approach for MOEAs/PAP with a novel performance metric for evaluating the performance of MOEAs across multiple MOPs. Finally, we use the proposed approach to construct a MOEAs/PAP based on a training set of MOPs and an algorithm configuration space defined by several variants of NSGA-II. Experimental results show that the automatically constructed MOEAs/PAP can even rival the state-of-the-art multi-operator-based MOEAs designed by human experts, demonstrating the huge potential of automatic construction of PAPs in multi-objective optimization.
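As a rough illustration of what a parallel algorithm portfolio does, the sketch below runs several member algorithms independently and merges their outputs. It assumes two minimized objectives and merges by keeping the non-dominated points of the union; that merging rule is an assumption for illustration and may differ from how MOEAs/PAP determines its output set. The functions `member_a` and `member_b` are hypothetical stand-ins that return fixed solution sets instead of running a real MOEA.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, Tuple

Point = Tuple[float, float]  # objective vector; both objectives are minimized


def dominates(a: Point, b: Point) -> bool:
    """True if a is no worse than b everywhere and strictly better somewhere."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def non_dominated(points: List[Point]) -> List[Point]:
    """Keep the points that no other point dominates (duplicates removed, sorted)."""
    unique = sorted(set(points))
    return [p for p in unique if not any(dominates(q, p) for q in unique)]


def run_portfolio(members: List[Callable[[], List[Point]]]) -> List[Point]:
    """Run every member independently in parallel, then merge their outputs."""
    with ThreadPoolExecutor(max_workers=len(members)) as pool:
        results = list(pool.map(lambda member: member(), members))
    merged = [p for front in results for p in front]
    return non_dominated(merged)


# Hypothetical stand-ins for MOEA runs: each returns a fixed solution set.
def member_a() -> List[Point]:
    return [(1.0, 5.0), (3.0, 3.0), (4.0, 4.0)]


def member_b() -> List[Point]:
    return [(2.0, 2.0), (5.0, 1.0), (3.0, 3.0)]


front = run_portfolio([member_a, member_b])
print(front)  # [(1.0, 5.0), (2.0, 2.0), (5.0, 1.0)]
```

Neither member alone produces the final front here: `(1.0, 5.0)` comes from one member, while `(2.0, 2.0)` and `(5.0, 1.0)` come from the other, which is the complementarity a portfolio is meant to exploit.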

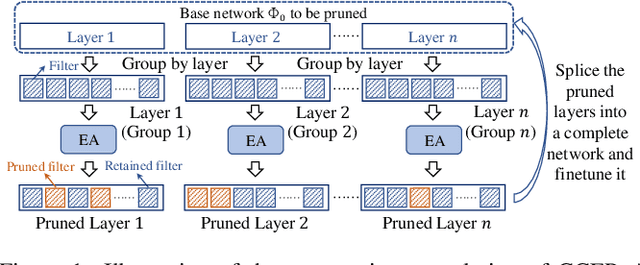

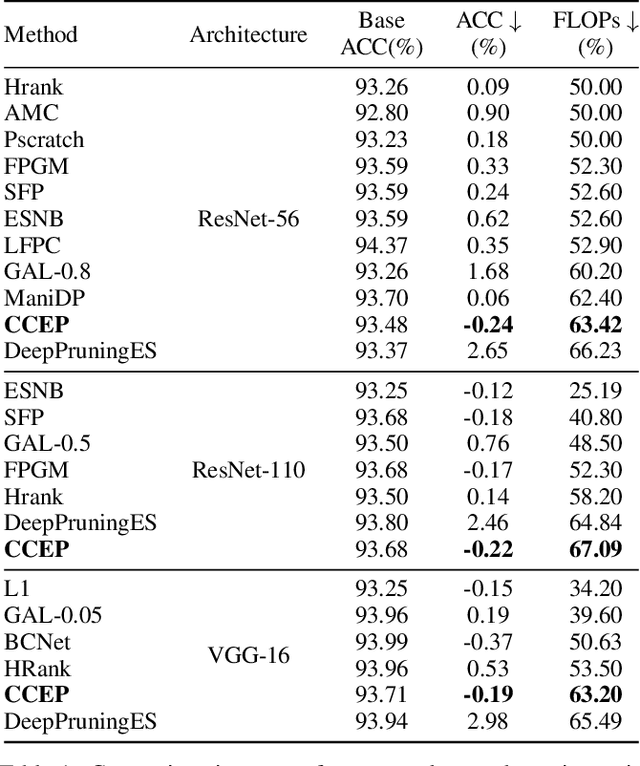

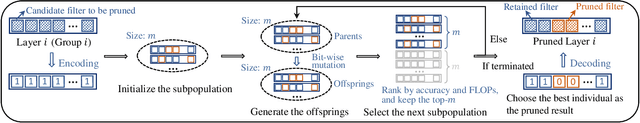

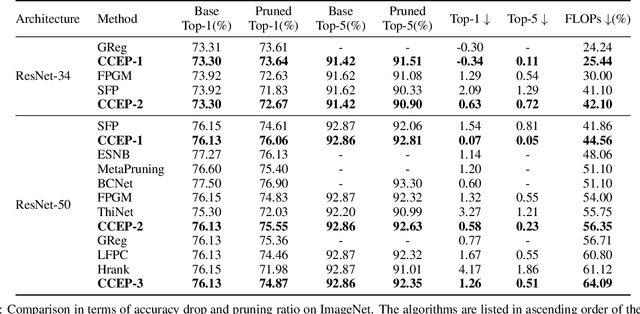

Neural Network Pruning by Cooperative Coevolution

Apr 12, 2022

Abstract: Neural network pruning is a popular model compression method which can significantly reduce the computing cost with negligible loss of accuracy. Recently, filters are often pruned directly by designing proper criteria or using auxiliary modules to measure their importance, which, however, requires expertise and trial-and-error. Due to the advantage of automation, pruning by evolutionary algorithms (EAs) has attracted much attention, but the performance is limited for deep neural networks as the search space can be quite large. In this paper, we propose a new filter pruning algorithm, CCEP, based on cooperative coevolution, which prunes the filters in each layer by EAs separately. That is, CCEP reduces the pruning space by a divide-and-conquer strategy. The experiments show that CCEP can achieve competitive performance with the state-of-the-art pruning methods, e.g., pruning $63.42\%$ of the FLOPs of ResNet56 on CIFAR10 with a $-0.24\%$ accuracy drop, and $44.56\%$ of the FLOPs of ResNet50 on ImageNet with a $0.07\%$ accuracy drop.
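The divide-and-conquer idea (evolve one layer's pruning mask at a time while the other layers stay fixed) can be sketched on a toy problem. This is not the CCEP implementation: the per-filter `IMPORTANCE` scores, the `COST_PER_FILTER` penalty, and the separable `fitness` function are hypothetical stand-ins for evaluating a pruned network's accuracy and FLOPs, and the per-layer search is a simple bit-flip hill climber.

```python
import random
from typing import List

Mask = List[int]  # 1 = keep the filter, 0 = prune it

# Hypothetical per-filter importance scores for a 3-layer toy "network".
IMPORTANCE = [
    [0.9, 0.1, 0.8, 0.05],
    [0.2, 0.7, 0.1],
    [0.6, 0.05, 0.05, 0.9, 0.1],
]
COST_PER_FILTER = 0.3  # stand-in for the compute cost of keeping one filter


def fitness(masks: List[Mask]) -> float:
    """Toy objective: kept importance minus compute cost, summed over layers."""
    total = 0.0
    for scores, mask in zip(IMPORTANCE, masks):
        total += sum((s - COST_PER_FILTER) * m for s, m in zip(scores, mask))
    return total


def evolve_layer(masks: List[Mask], layer: int, rng: random.Random,
                 generations: int = 30, offspring: int = 4) -> Mask:
    """Search one layer's mask by single bit flips; all other layers stay fixed."""
    best = masks[layer][:]
    best_fit = fitness(masks[:layer] + [best] + masks[layer + 1:])
    for _ in range(generations):
        for _ in range(offspring):
            child = best[:]
            i = rng.randrange(len(child))
            child[i] = 1 - child[i]  # mutate: flip keep/prune for one filter
            fit = fitness(masks[:layer] + [child] + masks[layer + 1:])
            if fit > best_fit:  # accept only strict improvements
                best, best_fit = child, fit
    return best


def cooperative_coevolution(rounds: int = 2, seed: int = 0) -> List[Mask]:
    """Cycle through the layers, optimizing each sub-problem in turn."""
    rng = random.Random(seed)
    masks = [[1] * len(scores) for scores in IMPORTANCE]  # start unpruned
    for _ in range(rounds):
        for layer in range(len(masks)):
            masks[layer] = evolve_layer(masks, layer, rng)
    return masks


masks = cooperative_coevolution()
print(masks, round(fitness(masks), 2))
```

Each sub-problem searches only over one layer's filters, so the per-layer space is far smaller than the joint space over all filters. In a real network the layers interact, so the objective is not separable as it is in this toy.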
