Wang Kang

ReasoningV: Efficient Verilog Code Generation with Adaptive Hybrid Reasoning Model

Apr 20, 2025

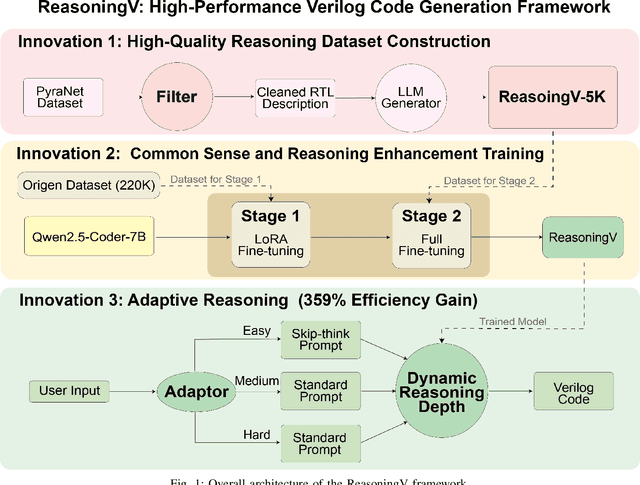

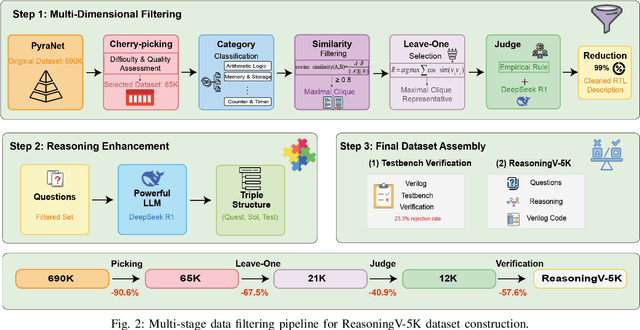

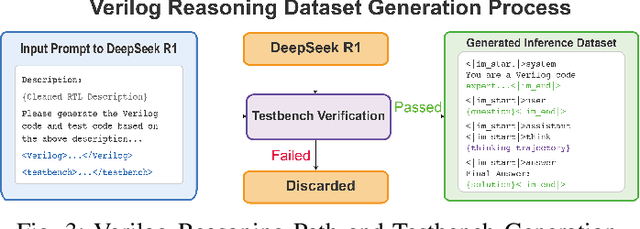

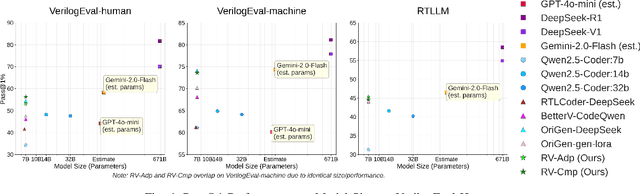

Abstract: Large Language Models (LLMs) have advanced Verilog code generation significantly, yet face challenges in data quality, reasoning capabilities, and computational efficiency. This paper presents ReasoningV, a novel model employing a hybrid reasoning strategy that integrates trained intrinsic capabilities with dynamic inference adaptation for Verilog code generation. Our framework introduces three complementary innovations: (1) ReasoningV-5K, a high-quality dataset of 5,000 functionally verified instances with reasoning paths created through multi-dimensional filtering of PyraNet samples; (2) a two-stage training approach combining parameter-efficient fine-tuning for foundational knowledge with full-parameter optimization for enhanced reasoning; and (3) an adaptive reasoning mechanism that dynamically adjusts reasoning depth based on problem complexity, reducing token consumption by up to 75% while preserving performance. Experimental results demonstrate ReasoningV's effectiveness with a pass@1 accuracy of 57.8% on VerilogEval-human, achieving performance competitive with leading commercial models like Gemini-2.0-flash (59.5%) and exceeding the previous best open-source model by 10.4 percentage points. ReasoningV offers a more reliable and accessible pathway for advancing AI-driven hardware design automation, with our model, data, and code available at https://github.com/BUAA-CLab/ReasoningV.
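The abstract reports pass@1 but does not say how it is computed. As a rough illustration only, the sketch below uses the widely used unbiased pass@k estimator, 1 - C(n-c, k) / C(n, k), for a problem with n sampled completions of which c pass the tests. The function names `pass_at_k` and `benchmark_pass_at_k` are hypothetical, and whether ReasoningV's evaluation follows exactly this procedure is an assumption.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate for one problem.

    n: number of sampled completions, c: number that pass, k: budget.
    """
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("require 0 <= c <= n and 1 <= k <= n")
    # If fewer than k samples fail, every size-k subset contains a pass.
    if n - c < k:
        return 1.0
    # Probability that a random size-k subset contains at least one pass.
    return 1.0 - comb(n - c, k) / comb(n, k)


def benchmark_pass_at_k(results, k):
    """Average pass@k over problems; results is a list of (n, c) pairs."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)
```

For k = 1 the estimator reduces to c / n per problem, so a benchmark-level pass@1 is the mean fraction of passing samples across problems.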

Towards Optimal Circuit Generation: Multi-Agent Collaboration Meets Collective Intelligence

Apr 20, 2025

Abstract: Large language models (LLMs) have transformed code generation, yet their application in hardware design produces gate counts 38%–1075% higher than human designs. We present CircuitMind, a multi-agent framework that achieves human-competitive efficiency through three key innovations: syntax locking (constraining generation to basic logic gates), retrieval-augmented generation (enabling knowledge-driven design), and dual-reward optimization (balancing correctness with efficiency). To evaluate our approach, we introduce TC-Bench, the first gate-level benchmark harnessing collective intelligence from the TuringComplete ecosystem, a competitive circuit design platform with hundreds of thousands of players. Experiments show CircuitMind enables 55.6% of model implementations to match or exceed top-tier human experts in composite efficiency metrics. Most remarkably, our framework elevates the 14B Phi-4 model to outperform both GPT-4o mini and Gemini 2.0 Flash, achieving efficiency comparable to the top 25% of human experts without requiring specialized training. These innovations establish a new paradigm for hardware optimization where collaborative AI systems leverage collective human expertise to achieve optimal circuit designs. Our model, data, and code are open-source at https://github.com/BUAA-CLab/CircuitMind.

Differentiable Multi-Fidelity Fusion: Efficient Learning of Physics Simulations with Neural Architecture Search and Transfer Learning

Jun 12, 2023

Abstract: With rapid progress in deep learning, neural networks have been widely used in scientific research and engineering applications as surrogate models. Despite the great success of neural networks in fitting complex systems, two major challenges remain: i) the lack of generalization across different problems/datasets, and ii) the demand for large amounts of simulation data that are computationally expensive to produce. To resolve these challenges, we propose the differentiable multi-fidelity (DMF) model, which leverages neural architecture search (NAS) to automatically search for a suitable model architecture for different problems, and transfer learning to transfer the learned knowledge from low-fidelity (fast but inaccurate) data to a high-fidelity (slow but accurate) model. Recent machine learning techniques such as hyperparameter search and alternate learning are used to improve the efficiency and robustness of DMF. As a result, DMF can efficiently learn physics simulations with only a few high-fidelity training samples, and outperform state-of-the-art methods by a significant margin (with up to 58% improvement in RMSE) on a variety of synthetic and practical benchmark problems.

Forecasting the outcome of spintronic experiments with Neural Ordinary Differential Equations

Jul 23, 2021

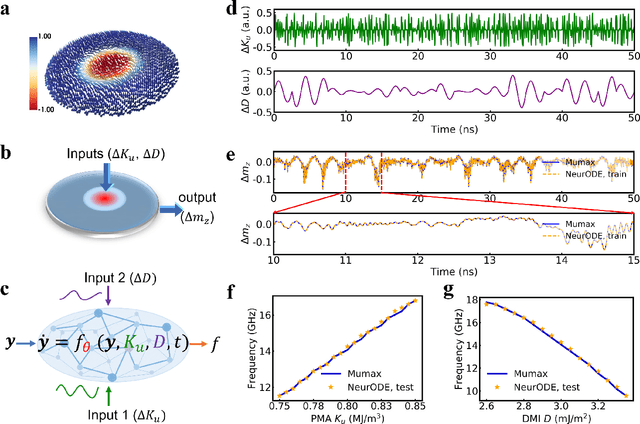

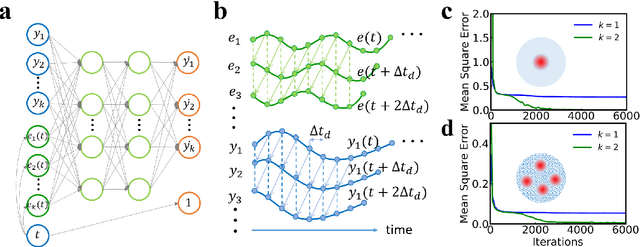

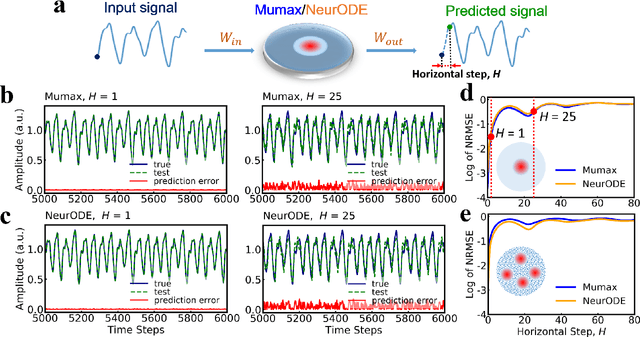

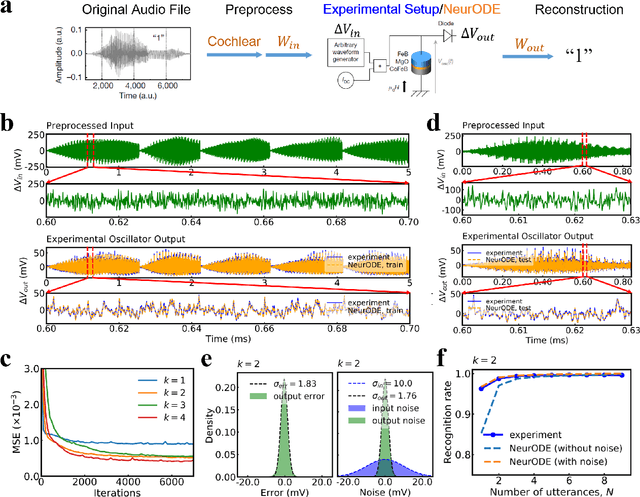

Abstract: Deep learning has an increasing impact in assisting research, allowing, for example, the discovery of novel materials. Until now, however, these artificial intelligence techniques have fallen short of discovering the full differential equation of an experimental physical system. Here we show that a dynamical neural network, trained on a minimal amount of data, can predict the behavior of spintronic devices with high accuracy and an extremely efficient simulation time, compared to the micromagnetic simulations that are usually employed to model them. For this purpose, we re-frame the formalism of Neural Ordinary Differential Equations (ODEs) to the constraints of spintronics: few measured outputs, multiple inputs and internal parameters. We demonstrate with Spin-Neural ODEs an acceleration factor over 200 compared to micromagnetic simulations for a complex problem: the simulation of a reservoir computer made of magnetic skyrmions (20 minutes compared to three days). In a second realization, we show that we can predict the noisy response of experimental spintronic nano-oscillators to varying inputs after training Spin-Neural ODEs on five milliseconds of their measured response to different excitations. Spin-Neural ODE is a disruptive tool for developing spintronic applications in complement to micromagnetic simulations, which are time-consuming and cannot fit experiments when noise or imperfections are present. Spin-Neural ODE can also be generalized to other electronic devices involving dynamics.
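To make the Neural ODE idea concrete, here is a minimal sketch of the forward pass only: a small network defines dx/dt = f(x, u), and the state is advanced through a sequence of inputs u. This is not the Spin-Neural ODE implementation. The forward Euler solver, the one-hidden-layer tanh network, and the names `euler_integrate` and `make_mlp_dynamics` are all assumptions chosen for brevity, and training the weights against measured data is omitted.

```python
import math


def euler_integrate(f, x0, inputs, dt):
    """Integrate dx/dt = f(x, u) with forward Euler, one step per input sample.

    Returns the list of states, starting with x0.
    """
    x = list(x0)
    trajectory = [list(x)]
    for u in inputs:
        dx = f(x, u)
        x = [xi + dt * dxi for xi, dxi in zip(x, dx)]
        trajectory.append(list(x))
    return trajectory


def make_mlp_dynamics(w1, b1, w2, b2):
    """Build f(x, u) from a one-hidden-layer tanh network (hypothetical form).

    w1 has one row per hidden unit over the concatenated vector [x..., u];
    w2 has one row per state component over the hidden units.
    """
    def f(x, u):
        z = list(x) + [u]
        h = [math.tanh(sum(w * zi for w, zi in zip(row, z)) + b)
             for row, b in zip(w1, b1)]
        return [sum(w * hi for w, hi in zip(row, h)) + b
                for row, b in zip(w2, b2)]
    return f
```

As a usage example, `euler_integrate(make_mlp_dynamics(w1, b1, w2, b2), [0.0], inputs, dt)` returns the predicted state at every time step for a one-dimensional state driven by the sequence `inputs`. In practice a higher-order or adaptive solver would normally replace the Euler step.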

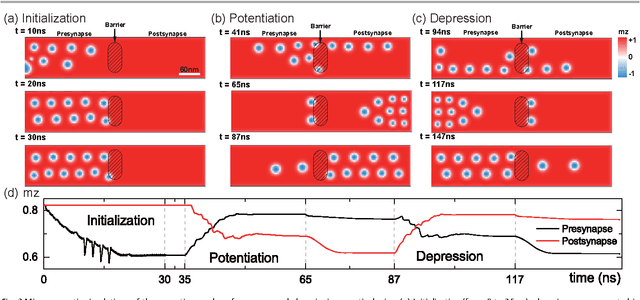

Magnetic skyrmion-based synaptic devices

Aug 29, 2016

Abstract: Magnetic skyrmions are promising candidates for next-generation information carriers, owing to their small size, topological stability, and ultralow depinning current density. A wide variety of skyrmionic device concepts and prototypes have been proposed, highlighting their potential applications. Here, we report on a bioinspired skyrmionic device with synaptic plasticity. The synaptic weight of the proposed device can be strengthened/weakened by positive/negative stimuli, mimicking the potentiation/depression process of a biological synapse. Both short-term plasticity (STP) and long-term potentiation (LTP) functionalities have been demonstrated for a spiking time-dependent plasticity (STDP) scheme. This proposal suggests new possibilities for synaptic devices for use in spiking neuromorphic computing applications.
