Toshihiko Yamasaki

Personalized Image Enhancement Featuring Masked Style Modeling

Jun 15, 2023

Abstract:We address personalized image enhancement in this study, where we enhance input images for each user based on the user's preferred images. Previous methods apply the same preferred style to all input images (i.e., only one style for each user); in contrast to these methods, we aim to achieve content-aware personalization by applying different styles to each image considering the contents. For content-aware personalization, we make two contributions. First, we propose a method named masked style modeling, which can predict a style for an input image considering the contents by using the framework of masked language modeling. Second, to allow this model to consider the contents of images, we propose a novel training scheme where we download images from Flickr and create pseudo input and retouched image pairs using a degrading model. We conduct quantitative evaluations and a user study, and our method trained using our training scheme successfully achieves content-aware personalization; moreover, our method outperforms other previous methods in this field. Our source code is available at https://github.com/satoshi-kosugi/masked-style-modeling.

Crowd-Powered Photo Enhancement Featuring an Active Learning Based Local Filter

Jun 15, 2023

Abstract:In this study, we address local photo enhancement to improve the aesthetic quality of an input image by applying different effects to different regions. Existing photo enhancement methods are either not content-aware or not local; therefore, we propose a crowd-powered local enhancement method for content-aware local enhancement, which is achieved by asking crowd workers to locally optimize parameters for image editing functions. To make it easier to locally optimize the parameters, we propose an active learning based local filter. The parameters need to be determined at only a few key pixels selected by an active learning method, and the parameters at the other pixels are automatically predicted using a regression model. The parameters at the selected key pixels are independently optimized, breaking down the optimization problem into a sequence of single-slider adjustments. Our experiments show that the proposed filter outperforms existing filters, and our enhanced results are more visually pleasing than the results by the existing enhancement methods. Our source code and results are available at https://github.com/satoshi-kosugi/crowd-powered.

Online Open-set Semi-supervised Object Detection via Semi-supervised Outlier Filtering

May 23, 2023

Abstract:Open-set semi-supervised object detection (OSSOD) methods aim to utilize practical unlabeled datasets with out-of-distribution (OOD) instances for object detection. The main challenge in OSSOD is distinguishing and filtering the OOD instances from the in-distribution (ID) instances during pseudo-labeling. The previous method uses an offline OOD detection network trained only with labeled data for solving this problem. However, the scarcity of available data limits the potential for improvement. Meanwhile, training separately leads to low efficiency. To alleviate the above issues, this paper proposes a novel end-to-end online framework that improves performance and efficiency by mining more valuable instances from unlabeled data. Specifically, we first propose a semi-supervised OOD detection strategy to mine valuable ID and OOD instances in unlabeled datasets for training. Then, we constitute an online end-to-end trainable OSSOD framework by integrating the OOD detection head into the object detector, making it jointly trainable with the original detection task. Our experimental results show that our method works well on several benchmarks, including the partially labeled COCO dataset with open-set classes and the fully labeled COCO dataset with the additional large-scale open-set unlabeled dataset, OpenImages. Compared with previous OSSOD methods, our approach achieves the best performance on COCO with OpenImages by +0.94 mAP, reaching 44.07 mAP.
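The filtering step during pseudo-labeling can be illustrated with a minimal sketch. This is not the authors' implementation: the `Detection` record, the `ood_score` field (assumed here to come from an OOD detection head), and both thresholds are hypothetical choices made for illustration.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Detection:
    box: Tuple[float, float, float, float]  # (x1, y1, x2, y2)
    label: int
    confidence: float  # detector class confidence in [0, 1]
    ood_score: float   # hypothetical OOD-head output in [0, 1]; higher means more likely OOD


def filter_pseudo_labels(
    detections: List[Detection],
    conf_thresh: float = 0.7,  # assumed value, not from the paper
    ood_thresh: float = 0.5,   # assumed value, not from the paper
) -> List[Detection]:
    """Keep confident detections that the OOD head considers in-distribution."""
    return [
        d for d in detections
        if d.confidence >= conf_thresh and d.ood_score < ood_thresh
    ]


detections = [
    Detection((0, 0, 10, 10), label=3, confidence=0.9, ood_score=0.1),  # kept
    Detection((5, 5, 20, 20), label=1, confidence=0.9, ood_score=0.8),  # dropped: likely OOD
    Detection((2, 2, 8, 8), label=2, confidence=0.4, ood_score=0.1),    # dropped: low confidence
]
kept = filter_pseudo_labels(detections)
```

Only the surviving detections would then serve as pseudo-labels for the unlabeled image.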

MetaMixer: A Regularization Strategy for Online Knowledge Distillation

Mar 14, 2023

Abstract:Online knowledge distillation (KD) has received increasing attention in recent years. However, while most existing online KD methods focus on developing complicated model structures and training strategies to improve the distillation of high-level knowledge like probability distribution, the effects of the multi-level knowledge in the online KD are greatly overlooked, especially the low-level knowledge. Thus, to provide a novel viewpoint to online KD, we propose MetaMixer, a regularization strategy that can strengthen the distillation by combining the low-level knowledge that impacts the localization capability of the networks, and high-level knowledge that focuses on the whole image. Experiments under different conditions show that MetaMixer can achieve significant performance gains over state-of-the-art methods.

Toward Extremely Lightweight Distracted Driver Recognition With Distillation-Based Neural Architecture Search and Knowledge Transfer

Feb 09, 2023

Abstract:The number of traffic accidents has been continuously increasing in recent years worldwide. Many accidents are caused by distracted drivers, who take their attention away from driving. Motivated by the success of Convolutional Neural Networks (CNNs) in computer vision, many researchers developed CNN-based algorithms to recognize distracted driving from a dashcam and warn the driver against unsafe behaviors. However, current models have too many parameters, which makes them infeasible for vehicle-mounted computing. This work proposes a novel knowledge-distillation-based framework to solve this problem. The proposed framework first constructs a high-performance teacher network by progressively strengthening the robustness to illumination changes from shallow to deep layers of a CNN. Then, the teacher network is used to guide the architecture searching process of a student network through knowledge distillation. After that, we use the teacher network again to transfer knowledge to the student network by knowledge distillation. Experimental results on the Statefarm Distracted Driver Detection Dataset and AUC Distracted Driver Dataset show that the proposed approach is highly effective for recognizing distracted driving behaviors from photos: (1) the teacher network's accuracy surpasses the previous best accuracy; (2) the student network achieves very high accuracy with only 0.42M parameters (around 55% of the previous most lightweight model). Furthermore, the student network architecture can be extended to a spatial-temporal 3D CNN for recognizing distracted driving from video clips. The 3D student network largely surpasses the previous best accuracy with only 2.03M parameters on the Drive&Act Dataset. The source code is available at https://github.com/Dichao-Liu/Lightweight_Distracted_Driver_Recognition_with_Distillation-Based_NAS_and_Knowledge_Transfer.
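The abstract does not state which distillation loss is used. As a rough illustration of teacher-to-student knowledge transfer in general, the sketch below computes the commonly used temperature-softened KL divergence between teacher and student predictions. The function names and the temperature value are assumptions for illustration, not details from the paper.

```python
import math
from typing import List


def softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    """Numerically stable softmax over temperature-scaled logits."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(
    student_logits: List[float],
    teacher_logits: List[float],
    temperature: float = 4.0,  # assumed value
) -> float:
    """KL(teacher || student) on softened distributions, scaled by T^2."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = sum(
        pt * (math.log(pt) - math.log(ps))
        for pt, ps in zip(p_teacher, p_student)
        if pt > 0.0
    )
    return kl * temperature ** 2


teacher = [2.0, 0.5, -1.0]
matched = distillation_loss(teacher, teacher)             # student agrees with teacher
mismatched = distillation_loss([-1.0, 0.5, 2.0], teacher)  # student disagrees
```

The loss is zero when the student reproduces the teacher's softened distribution and grows as the two diverge.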

Semi-supervised Fashion Compatibility Prediction by Color Distortion Prediction

Dec 27, 2022

Abstract:Supervised learning methods have been suffering from the fact that a large-scale labeled dataset is mandatory, which is difficult to obtain. This has been a more significant issue for fashion compatibility prediction because compatibility aims to capture people's perception of aesthetics, which are sparse and changing. Thus, the labeled dataset may become outdated quickly due to fast fashion. Moreover, labeling the dataset always needs some expert knowledge; at least the annotators should have a good sense of aesthetics. However, there are limited self/semi-supervised learning techniques in this field. In this paper, we propose a general color distortion prediction task forcing the baseline to recognize low-level image information to learn more discriminative representation for fashion compatibility prediction. Specifically, we first propose to distort the image by adjusting the image color balance, contrast, sharpness, and brightness. Then, we propose adding Gaussian noise to the distorted image before passing it to the convolutional neural network (CNN) backbone to learn a probability distribution over all possible distortions. The proposed pretext task is adopted in the state-of-the-art methods in fashion compatibility and shows its effectiveness in improving these methods' ability to extract better feature representations. Applying the proposed pretext task to the baseline can consistently outperform the original baseline.
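The data side of this pretext task can be sketched as follows. This is a hedged illustration under several assumptions not stated in the abstract: images are float arrays in [0, 1], each distortion blends the image with a degenerate version of itself, the label is the index of the applied distortion, and `factor` and `noise_std` are arbitrary values. All function names are hypothetical.

```python
import numpy as np

DISTORTIONS = ("color", "contrast", "sharpness", "brightness")


def box_blur(img: np.ndarray) -> np.ndarray:
    """3x3 mean blur with edge padding; img is an HxWx3 float array."""
    padded = np.pad(img, ((1, 1), (1, 1), (0, 0)), mode="edge")
    h, w = img.shape[:2]
    out = np.zeros_like(img)
    for dy in range(3):
        for dx in range(3):
            out += padded[dy:dy + h, dx:dx + w]
    return out / 9.0


def distort(img: np.ndarray, kind: str, factor: float) -> np.ndarray:
    """Blend img with a degenerate base image; factor 1.0 leaves img unchanged."""
    gray = img.mean(axis=2, keepdims=True)
    if kind == "color":
        base = np.broadcast_to(gray, img.shape)   # grayscale version
    elif kind == "contrast":
        base = np.full_like(img, gray.mean())     # uniform mean-gray image
    elif kind == "sharpness":
        base = box_blur(img)                      # blurred version
    elif kind == "brightness":
        base = np.zeros_like(img)                 # black image
    else:
        raise ValueError(f"unknown distortion: {kind}")
    return np.clip(base + factor * (img - base), 0.0, 1.0)


def make_pretext_sample(img, rng, factor=1.5, noise_std=0.05):
    """Apply one random distortion, add Gaussian noise, return (image, label)."""
    label = int(rng.integers(len(DISTORTIONS)))
    distorted = distort(img, DISTORTIONS[label], factor)
    noisy = distorted + rng.normal(0.0, noise_std, img.shape)
    return np.clip(noisy, 0.0, 1.0), label


rng = np.random.default_rng(0)
image = rng.random((8, 8, 3))
sample, label = make_pretext_sample(image, rng)
```

A CNN backbone would then be trained to predict `label` from `sample`, which requires it to attend to low-level color and texture statistics.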

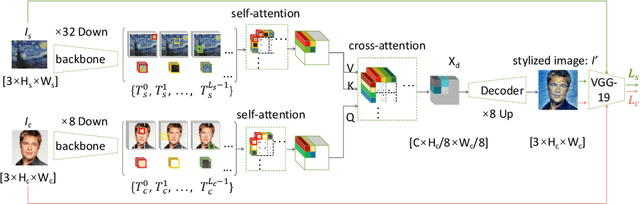

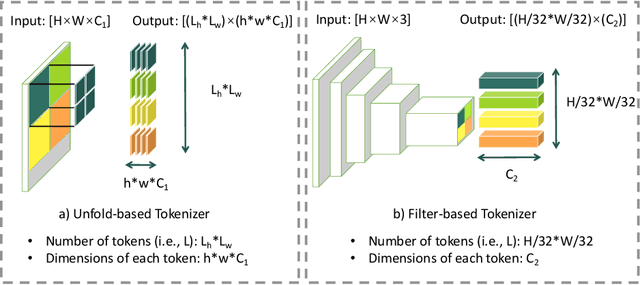

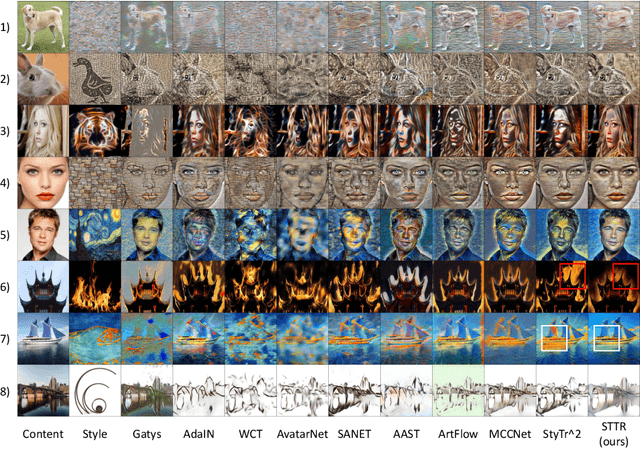

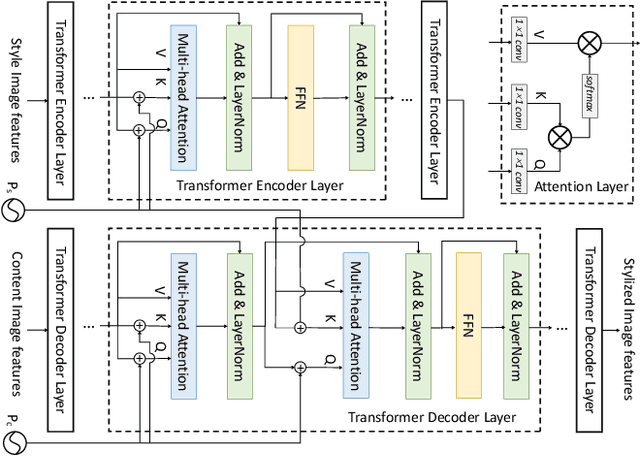

Fine-Grained Image Style Transfer with Visual Transformers

Oct 11, 2022

Abstract:With the development of the convolutional neural network, image style transfer has drawn increasing attention. However, most existing approaches adopt a global feature transformation to transfer style patterns into content images (e.g., AdaIN and WCT). Such a design usually destroys the spatial information of the input images and fails to transfer fine-grained style patterns into style transfer results. To solve this problem, we propose a novel STyle TRansformer (STTR) network which breaks both content and style images into visual tokens to achieve a fine-grained style transformation. Specifically, two attention mechanisms are adopted in our STTR. We first propose to use self-attention to encode content and style tokens such that similar tokens can be grouped and learned together. We then adopt cross-attention between content and style tokens that encourages fine-grained style transformations. To compare STTR with existing approaches, we conduct user studies on Amazon Mechanical Turk (AMT), which are carried out with 50 human subjects with 1,000 votes in total. Extensive evaluations demonstrate the effectiveness and efficiency of the proposed STTR in generating visually pleasing style transfer results.
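The cross-attention step between content and style tokens can be illustrated with a minimal NumPy sketch. It uses plain scaled dot-product attention with content tokens as queries and style tokens as keys and values. The learned projections, multi-head structure, and tokenizer of the actual STTR network are omitted, and the function names are assumptions for illustration.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    """Numerically stable softmax along the given axis."""
    shifted = x - x.max(axis=axis, keepdims=True)
    exps = np.exp(shifted)
    return exps / exps.sum(axis=axis, keepdims=True)


def cross_attention(content_tokens: np.ndarray, style_tokens: np.ndarray):
    """Each content token gathers a weighted mix of style tokens.

    content_tokens: (num_content, dim), used as queries.
    style_tokens:   (num_style, dim), used as keys and values.
    """
    dim = content_tokens.shape[-1]
    scores = content_tokens @ style_tokens.T / np.sqrt(dim)
    weights = softmax(scores, axis=-1)  # (num_content, num_style)
    return weights @ style_tokens, weights


rng = np.random.default_rng(0)
content = rng.standard_normal((6, 16))  # 6 content tokens
style = rng.standard_normal((4, 16))    # 4 style tokens
stylized, attn = cross_attention(content, style)
```

Because every content token receives its own attention weights, different image regions can draw on different style patterns, in contrast to a single global feature transformation.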

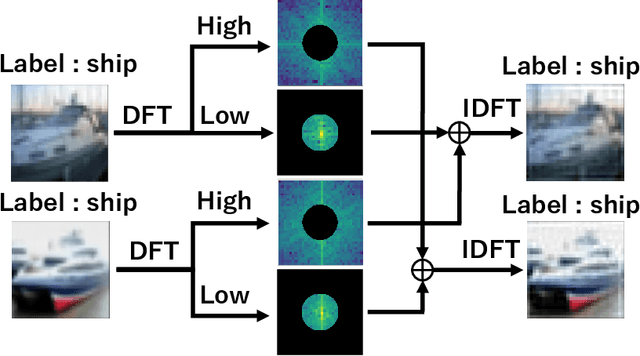

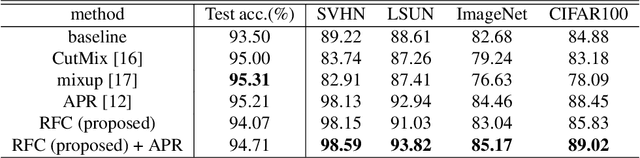

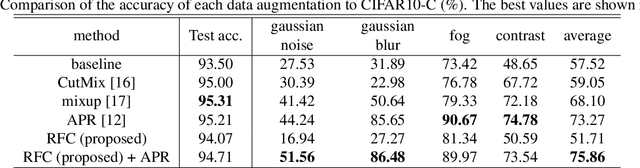

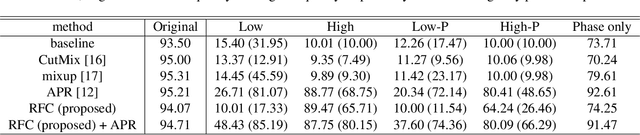

Improving Robustness to Out-of-Distribution Data by Frequency-based Augmentation

Sep 06, 2022

Abstract:Although Convolutional Neural Networks (CNNs) have high accuracy in image recognition, they are vulnerable to adversarial examples and out-of-distribution data, and the difference from human recognition has been pointed out. In order to improve the robustness against out-of-distribution data, we present a frequency-based data augmentation technique that replaces the frequency components with those of other images of the same class. When the training data are CIFAR10 and the out-of-distribution data are SVHN, the area under the receiver operating characteristic curve (AUROC) of the model trained with the proposed method increases from 89.22% to 98.15%, and further increases to 98.59% when combined with another data augmentation method. Furthermore, we experimentally demonstrate that the robust model for out-of-distribution data uses a lot of high-frequency components of the image.
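A frequency-component swap of this kind can be sketched with a 2D FFT. The abstract does not say which band is replaced or how the cutoff is chosen, so the `radius` cutoff and the `swap_high` switch below are assumptions, and `swap_frequency_band` is a hypothetical name. The caller is assumed to pass a donor image of the same class.

```python
import numpy as np


def swap_frequency_band(img, donor, radius, swap_high=True):
    """Replace one frequency band of img with the same band from donor.

    img, donor: 2D float arrays of identical shape (single channel).
    radius:     cutoff distance from the DC component in the shifted spectrum.
    swap_high:  if True, take frequencies beyond radius from donor;
                otherwise take frequencies within radius from donor.
    """
    f_img = np.fft.fftshift(np.fft.fft2(img))
    f_donor = np.fft.fftshift(np.fft.fft2(donor))

    h, w = img.shape
    yy, xx = np.ogrid[:h, :w]
    dist = np.sqrt((yy - h // 2) ** 2 + (xx - w // 2) ** 2)
    from_donor = dist > radius if swap_high else dist <= radius

    mixed = np.where(from_donor, f_donor, f_img)
    # The mask is symmetric about DC, so the result is real up to rounding.
    return np.real(np.fft.ifft2(np.fft.ifftshift(mixed)))


rng = np.random.default_rng(0)
image_a = rng.random((16, 16))
image_b = rng.random((16, 16))  # stands in for another image of the same class
augmented = swap_frequency_band(image_a, image_b, radius=4)
```

For color images the same function could be applied to each channel separately.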

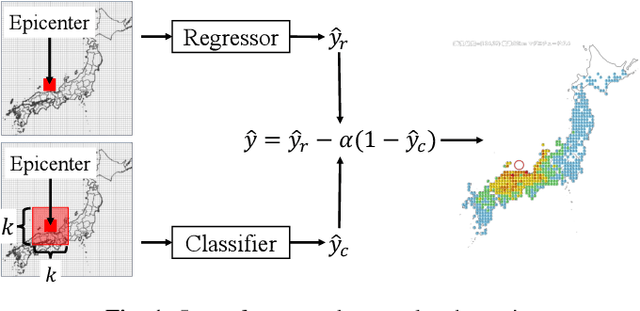

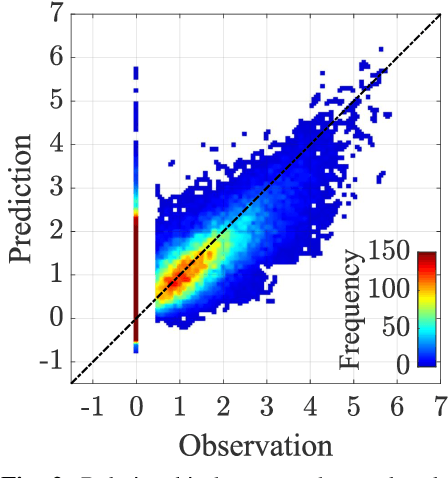

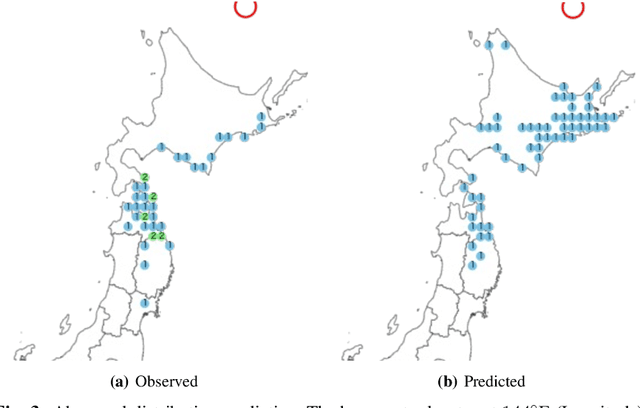

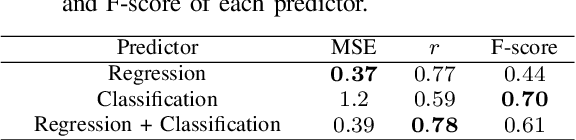

Prediction of Seismic Intensity Distributions Using Neural Networks

Aug 16, 2022

Abstract:The ground motion prediction equation is commonly used to predict the seismic intensity distribution. However, it is not easy to apply this method to seismic distributions affected by underground plate structures, which are commonly known as abnormal seismic distributions. This study proposes a hybrid of regression and classification approaches using neural networks. The proposed model treats the distributions as 2-dimensional data like an image. Our method can accurately predict seismic intensity distributions, even abnormal distributions.

SAT: Self-adaptive training for fashion compatibility prediction

Jun 25, 2022

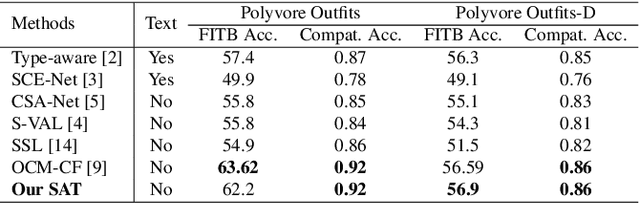

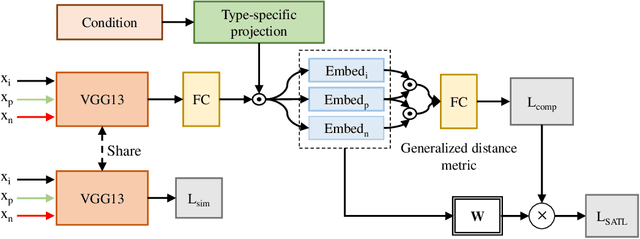

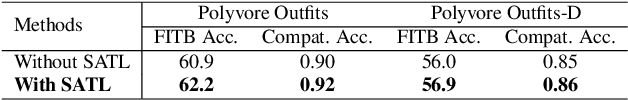

Abstract:This paper presents a self-adaptive training (SAT) model for fashion compatibility prediction. It focuses on the learning of some hard items, such as those that share similar color, texture, and pattern features but are considered incompatible due to aesthetics or temporal shifts. Specifically, we first design a method to define hard outfits, in which a difficulty score (DS) is assigned to each outfit based on the difficulty of recommending an item for it. Then, we propose a self-adaptive triplet loss (SATL), where the DS of the outfit is considered. Finally, we propose a very simple conditional similarity network combining the proposed SATL to achieve the learning of hard items in the fashion compatibility prediction. Experiments on the publicly available Polyvore Outfits and Polyvore Outfits-D datasets demonstrate our SAT's effectiveness in fashion compatibility prediction. Besides, our SATL can be easily extended to other conditional similarity networks to improve their performance.
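The abstract does not give the form of the SATL. One plausible way a difficulty score could enter a triplet loss is by enlarging the margin for harder outfits, as in the sketch below. This is an illustrative assumption, not the paper's formulation, and both function names and the margin value are hypothetical.

```python
def triplet_loss(d_pos: float, d_neg: float, margin: float = 0.2) -> float:
    """Standard hinge triplet loss on anchor-positive and anchor-negative distances."""
    return max(0.0, d_pos - d_neg + margin)


def difficulty_weighted_triplet_loss(
    d_pos: float,
    d_neg: float,
    difficulty: float,         # hypothetical difficulty score, assumed in [0, 1]
    base_margin: float = 0.2,  # assumed value
) -> float:
    """Triplet loss whose margin grows with the outfit's difficulty score."""
    return max(0.0, d_pos - d_neg + base_margin * (1.0 + difficulty))


easy = difficulty_weighted_triplet_loss(0.5, 0.6, difficulty=0.0)
hard = difficulty_weighted_triplet_loss(0.5, 0.6, difficulty=1.0)
```

With a difficulty of zero the loss reduces to the standard triplet loss, while harder outfits must separate compatible and incompatible items by a wider margin.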
