Tilo Burghardt

Dynamic Curriculum Learning for Great Ape Detection in the Wild

Apr 30, 2022

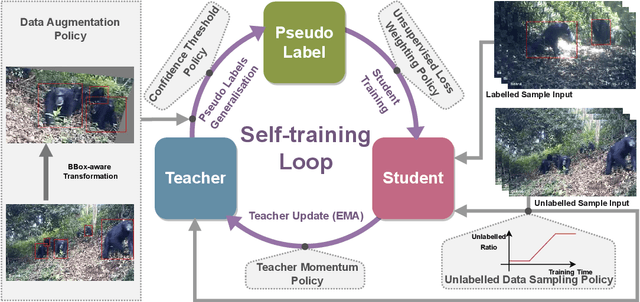

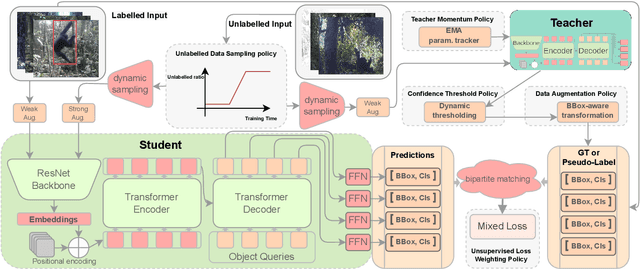

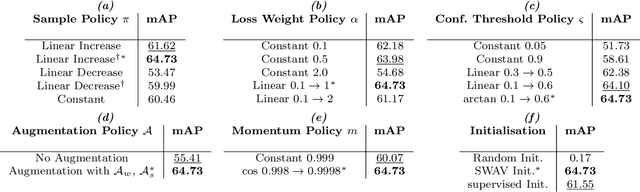

Abstract: We propose a novel end-to-end curriculum learning approach that leverages large volumes of unlabelled great ape camera trap footage to improve supervised species detector construction in challenging real-world jungle environments. In contrast to previous semi-supervised methods, our approach gradually improves detection quality by steering training towards virtuous self-reinforcement. To achieve this, we propose integrating pseudo-labelling with dynamic curriculum learning policies. We show that such dynamics and controls can avoid learning collapse and gradually tie detector adjustments to higher model quality. We provide theoretical arguments and ablations, and confirm significant performance improvements against various state-of-the-art systems when evaluating on the Extended PanAfrican Dataset holding several thousand camera trap videos of great apes. We note that system performance is strongest for smaller labelled ratios, which are common in ecological applications. Our approach, although designed with wildlife data in mind, also shows competitive benchmarks for generic object detection in the MS-COCO dataset, indicating wider applicability of introduced concepts. The code is available at https://github.com/youshyee/DCL-Detection.

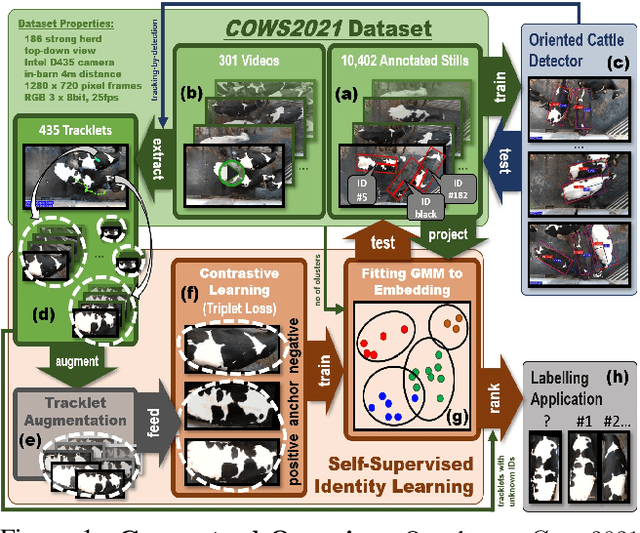

Label a Herd in Minutes: Individual Holstein-Friesian Cattle Identification

Apr 22, 2022

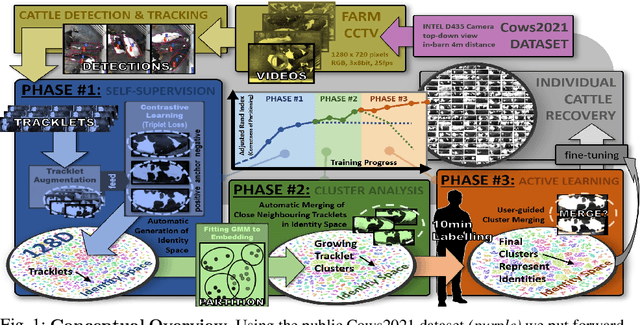

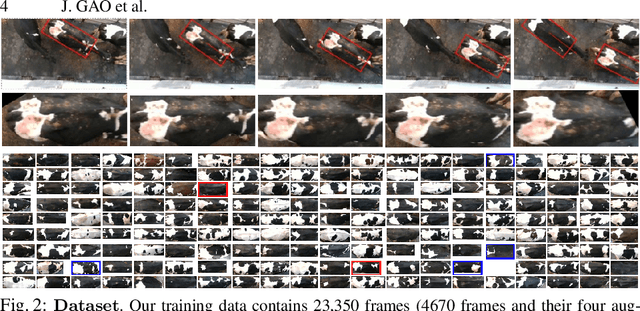

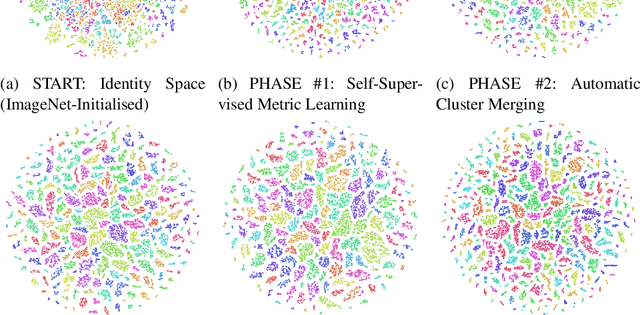

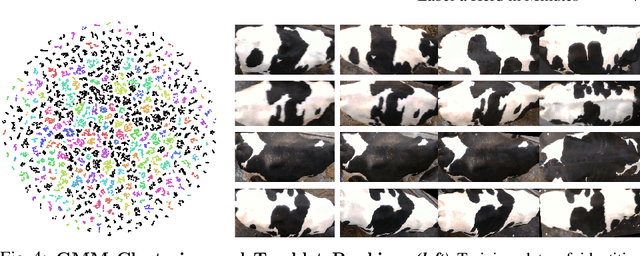

Abstract: We describe a practically evaluated approach for training visual cattle ID systems for a whole farm requiring only ten minutes of labelling effort. In particular, for the task of automatic identification of individual Holstein-Friesians in real-world farm CCTV, we show that self-supervision, metric learning, cluster analysis, and active learning can complement each other to significantly reduce the annotation requirements usually needed to train cattle identification frameworks. Evaluating the approach on the test portion of the publicly available Cows2021 dataset, for training we use 23,350 frames across 435 single individual tracklets generated by automated oriented cattle detection and tracking in operational farm footage. Self-supervised metric learning is first employed to initialise a candidate identity space where each tracklet is considered a distinct entity. Grouping entities into equivalence classes representing cattle identities is then performed by automated merging via cluster analysis and active learning. Critically, we identify the inflection point at which automated choices cannot replicate improvements based on human intervention to reduce annotation to a minimum. Experimental results show that cluster analysis and a few minutes of labelling after automated self-supervision can improve the test identification accuracy of 153 identities to 92.44% (ARI=0.93) from the 74.9% (ARI=0.754) obtained by self-supervision only. These promising results indicate that a tailored combination of human and machine reasoning in visual cattle ID pipelines can be highly effective whilst requiring only minimal labelling effort. We provide all key source code and network weights with this paper for easy result reproduction.
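The abstract above reports clustering quality as an Adjusted Rand Index (ARI). As a reminder of what that score measures, here is a minimal sketch of the standard ARI formula in plain Python. It is not taken from the paper's code, and the function name `adjusted_rand_index` is an illustrative choice.

```python
from collections import Counter
from math import comb


def adjusted_rand_index(labels_true, labels_pred):
    """Standard Adjusted Rand Index between two labelings of the same items."""
    if len(labels_true) != len(labels_pred):
        raise ValueError("labelings must have the same length")
    n = len(labels_true)

    # Contingency counts: items sharing each (true, predicted) label pair.
    pair_counts = Counter(zip(labels_true, labels_pred))
    true_counts = Counter(labels_true)
    pred_counts = Counter(labels_pred)

    # Number of item pairs that fall together in each cell, row and column.
    sum_cells = sum(comb(c, 2) for c in pair_counts.values())
    sum_true = sum(comb(c, 2) for c in true_counts.values())
    sum_pred = sum(comb(c, 2) for c in pred_counts.values())
    total_pairs = comb(n, 2)

    # Agreement expected by chance, and the maximum attainable agreement.
    expected = sum_true * sum_pred / total_pairs if total_pairs else 0.0
    max_index = (sum_true + sum_pred) / 2
    if max_index == expected:
        # Degenerate case, e.g. both labelings use a single cluster.
        return 1.0
    return (sum_cells - expected) / (max_index - expected)
```

The score is 1.0 when the two labelings agree up to a renaming of clusters, and falls to around 0 (or below) when agreement is no better than chance.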

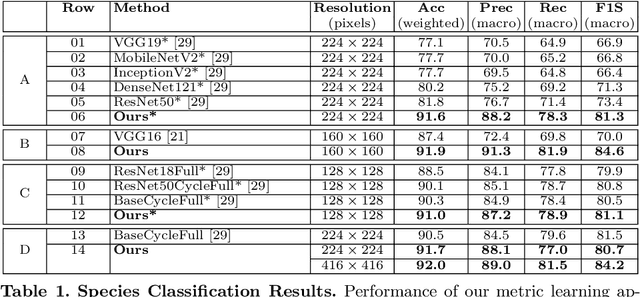

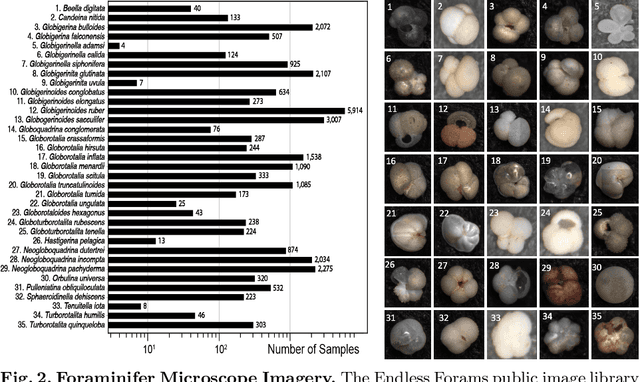

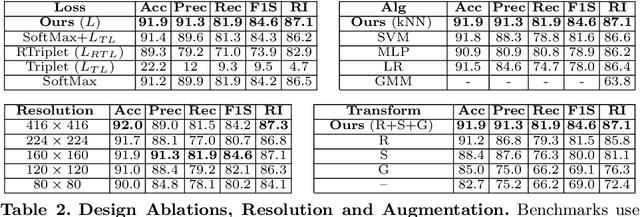

Visual Microfossil Identification via Deep Metric Learning

Jan 04, 2022

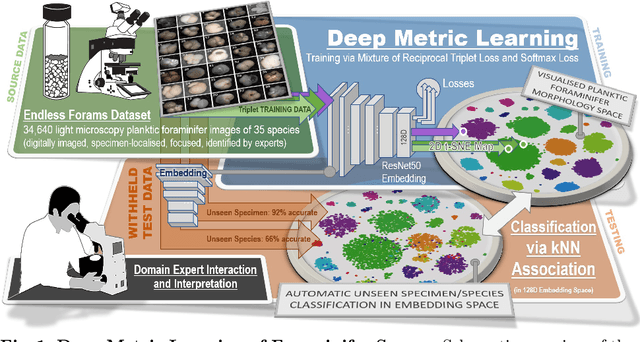

Abstract: We apply deep metric learning for the first time to the problem of classifying planktic foraminifer shells on microscopic images. This species recognition task is an important information source and scientific pillar for reconstructing past climates. All foraminifer CNN recognition pipelines in the literature produce black-box classifiers that lack visualisation options for human experts and cannot be applied to open set problems. Here, we benchmark metric learning against these pipelines, produce the first scientific visualisation of the phenotypic planktic foraminifer morphology space, and demonstrate that metric learning can be used to cluster species unseen during training. We show that metric learning outperforms all published CNN-based state-of-the-art benchmarks in this domain. We evaluate our approach on the 34,640 expert-annotated images of the Endless Forams public library of 35 modern planktic foraminifera species. Our results on this data show leading 92% accuracy (at 0.84 F1-score) in reproducing expert labels on withheld test data, and 66.5% accuracy (at 0.70 F1-score) when clustering species never encountered in training. We conclude that metric learning is highly effective for this domain and serves as an important tool towards expert-in-the-loop automation of microfossil identification. Key code, network weights, and data splits are published with this paper for full reproducibility.

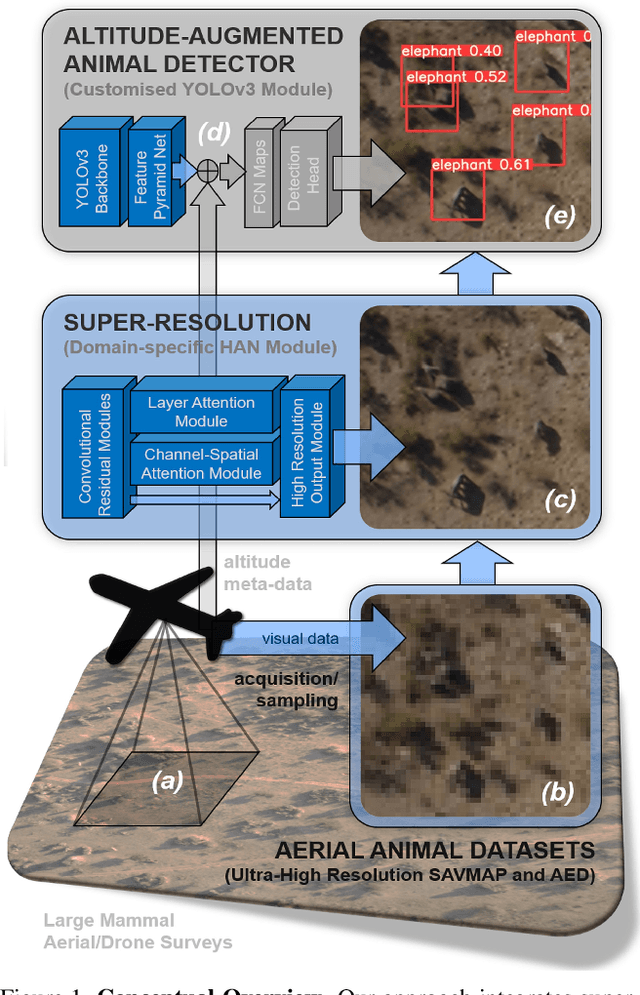

Small or Far Away? Exploiting Deep Super-Resolution and Altitude Data for Aerial Animal Surveillance

Nov 12, 2021

Abstract: Visuals captured by high-flying aerial drones are increasingly used to assess biodiversity and animal population dynamics around the globe. Yet, challenging acquisition scenarios and tiny animal depictions in airborne imagery, despite ultra-high resolution cameras, have so far been limiting factors for applying computer vision detectors successfully with high confidence. In this paper, we address the problem for the first time by combining deep object detectors with super-resolution techniques and altitude data. In particular, we show that the integration of a holistic attention network based super-resolution approach and a custom-built altitude data exploitation network into standard recognition pipelines can considerably increase the detection efficacy in real-world settings. We evaluate the system on two public, large aerial-capture animal datasets, SAVMAP and AED. We find that the proposed approach can consistently improve over ablated baselines and the state-of-the-art performance for both datasets. In addition, we provide a systematic analysis of the relationship between animal resolution and detection performance. We conclude that super-resolution and altitude knowledge exploitation techniques can significantly increase benchmarks across settings and, thus, should be used routinely when detecting minutely resolved animals in aerial imagery.

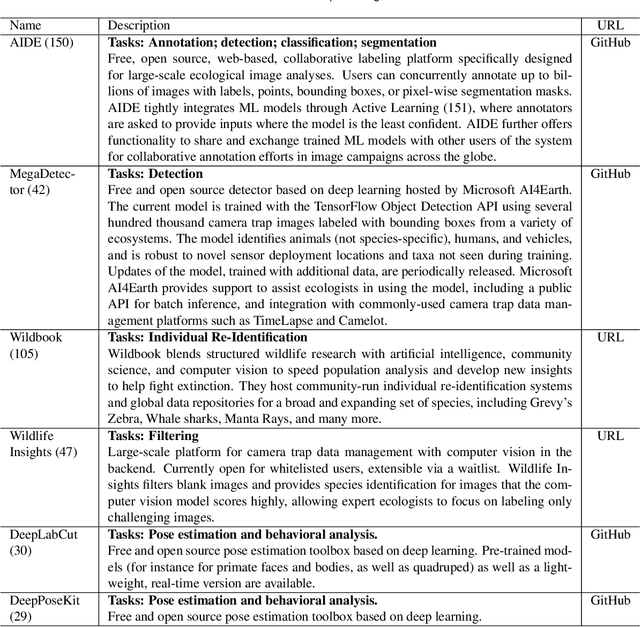

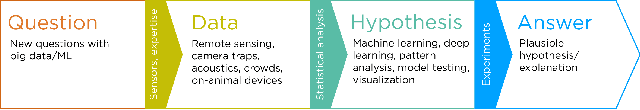

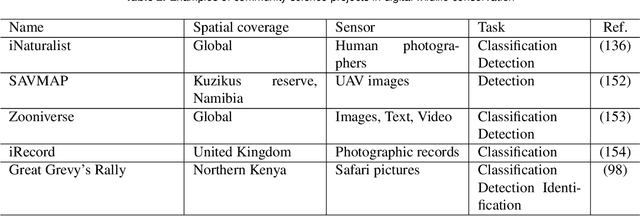

Seeing biodiversity: perspectives in machine learning for wildlife conservation

Oct 25, 2021

Abstract: Data acquisition in animal ecology is rapidly accelerating due to inexpensive and accessible sensors such as smartphones, drones, satellites, audio recorders and bio-logging devices. These new technologies and the data they generate hold great potential for large-scale environmental monitoring and understanding, but are limited by current data processing approaches which are inefficient in how they ingest, digest, and distill data into relevant information. We argue that machine learning, and especially deep learning approaches, can meet this analytic challenge to enhance our understanding, monitoring capacity, and conservation of wildlife species. Incorporating machine learning into ecological workflows could improve inputs for population and behavior models and eventually lead to integrated hybrid modeling tools, with ecological models acting as constraints for machine learning models and the latter providing data-supported insights. In essence, by combining new machine learning approaches with ecological domain knowledge, animal ecologists can capitalize on the abundance of data generated by modern sensor technologies in order to reliably estimate population abundances, study animal behavior and mitigate human/wildlife conflicts. To succeed, this approach will require close collaboration and cross-disciplinary education between the computer science and animal ecology communities in order to ensure the quality of machine learning approaches and train a new generation of data scientists in ecology and conservation.

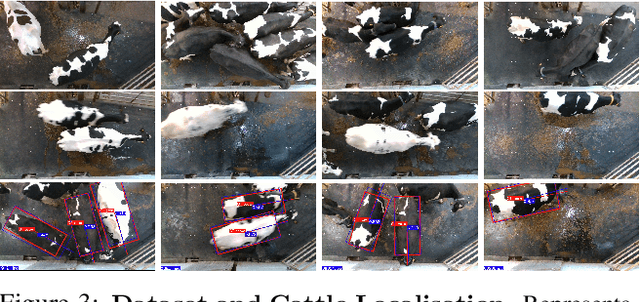

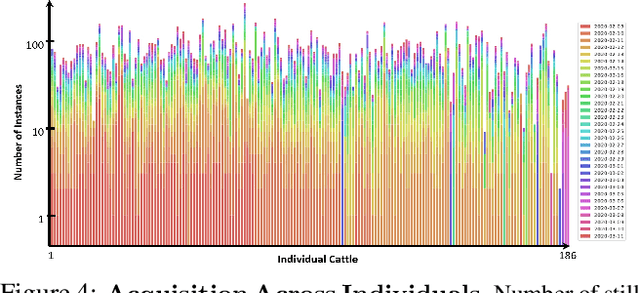

Towards Self-Supervision for Video Identification of Individual Holstein-Friesian Cattle: The Cows2021 Dataset

May 05, 2021

Abstract: In this paper we publish the largest identity-annotated Holstein-Friesian cattle dataset Cows2021 and a first self-supervision framework for video identification of individual animals. The dataset contains 10,402 RGB images with labels for localisation and identity as well as 301 videos from the same herd. The data shows top-down in-barn imagery, which captures the breed's individually distinctive black and white coat pattern. Motivated by the labelling burden involved in constructing visual cattle identification systems, we propose exploiting the temporal coat pattern appearance across videos as a self-supervision signal for animal identity learning. Using an individual-agnostic cattle detector that yields oriented bounding-boxes, rotation-normalised tracklets of individuals are formed via tracking-by-detection and enriched via augmentations. This produces a 'positive' sample set per tracklet, which is paired against a 'negative' set sampled from random cattle of other videos. Frame-triplet contrastive learning is then employed to construct a metric latent space. The fitting of a Gaussian Mixture Model to this space yields a cattle identity classifier. Results show a Top-1 accuracy of 57.0%, a Top-4 accuracy of 76.9%, and an Adjusted Rand Index of 0.53 compared to the ground truth. Whilst supervised training surpasses this benchmark by a large margin, we conclude that self-supervision can nevertheless play a highly effective role in speeding up labelling efforts when initially constructing supervision information. We provide all data and full source code alongside an analysis and evaluation of the system.
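To illustrate the frame-triplet idea described above, the following sketch computes a standard triplet margin loss on toy embedding vectors. The abstract does not state the exact loss used, so both the margin formulation and the names `euclidean` and `triplet_loss` are assumptions made for illustration only.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two equal-length embedding vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def triplet_loss(anchor, positive, negative, margin=1.0):
    """Standard triplet margin loss (assumed form, not taken from the paper).

    The loss is zero once the negative is at least `margin` further from
    the anchor than the positive is.
    """
    d_pos = euclidean(anchor, positive)  # anchor to same-tracklet frame
    d_neg = euclidean(anchor, negative)  # anchor to frame from another video
    return max(0.0, d_pos - d_neg + margin)
```

In this picture, the anchor and positive would be embeddings of two frames from the same tracklet, and the negative an embedding of a frame sampled from a different video.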

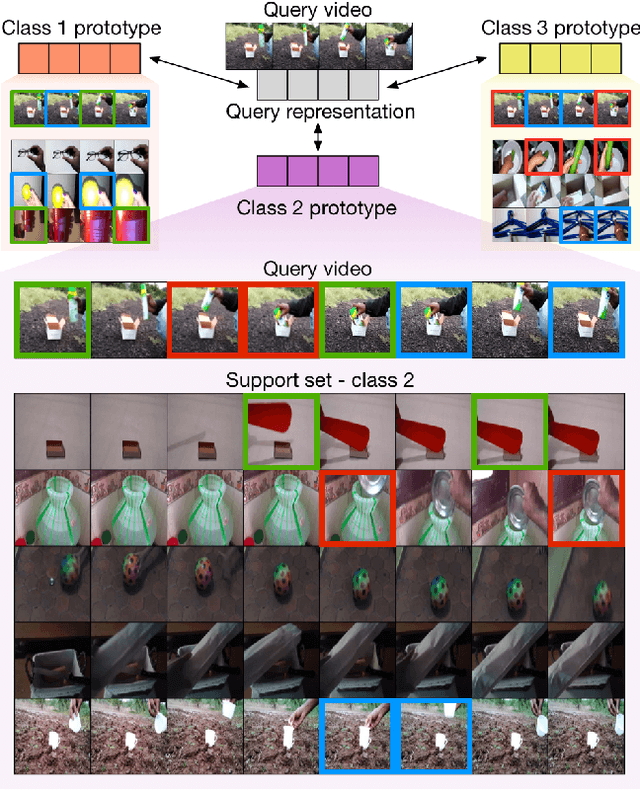

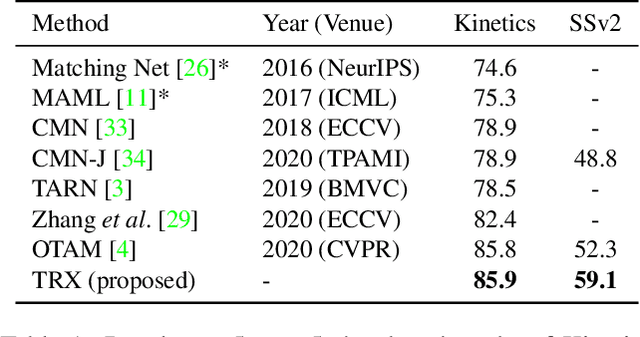

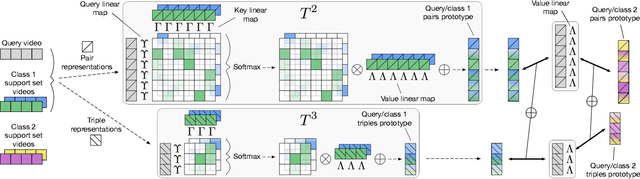

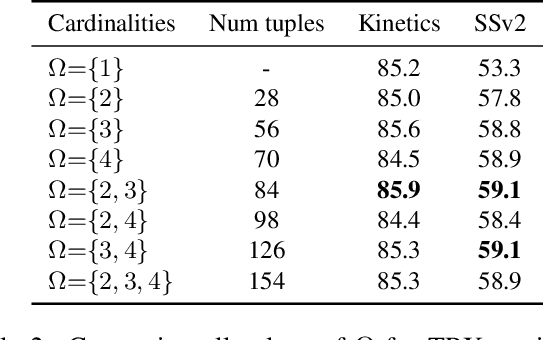

Temporal-Relational CrossTransformers for Few-Shot Action Recognition

Jan 15, 2021

Abstract: We propose a novel approach to few-shot action recognition, finding temporally-corresponding frame tuples between the query and videos in the support set. Distinct from previous few-shot action recognition works, we construct class prototypes using the CrossTransformer attention mechanism to observe relevant sub-sequences of all support videos, rather than using class averages or single best matches. Video representations are formed from ordered tuples of varying numbers of frames, which allows sub-sequences of actions at different speeds and temporal offsets to be compared. Our proposed Temporal-Relational CrossTransformers achieve state-of-the-art results on both Kinetics and Something-Something V2 (SSv2), outperforming prior work on SSv2 by a wide margin (6.8%) due to the method's ability to model temporal relations. A detailed ablation showcases the importance of matching to multiple support set videos and learning higher-order relational CrossTransformers. Code is available at https://github.com/tobyperrett/trx
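The ordered frame tuples mentioned above can be pictured with a short enumeration sketch. It only lists temporally ordered index tuples for a sampled clip; the function name `ordered_frame_tuples` and the default cardinalities of 2 and 3 are illustrative assumptions, not details taken from the abstract or the linked repository.

```python
from itertools import combinations


def ordered_frame_tuples(num_frames, cardinalities=(2, 3)):
    """Enumerate temporally ordered frame-index tuples for each cardinality.

    combinations() yields indices in increasing order, so every tuple
    respects the temporal order of the sampled frames.
    """
    return {k: list(combinations(range(num_frames), k)) for k in cardinalities}


tuples = ordered_frame_tuples(4)
print(tuples[2])  # all ordered pairs of frame indices
print(tuples[3])  # all ordered triples of frame indices
```

Because tuples may skip frames, pairs such as (0, 1) and (0, 3) cover the same kind of motion at different speeds, which is the property the abstract highlights.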

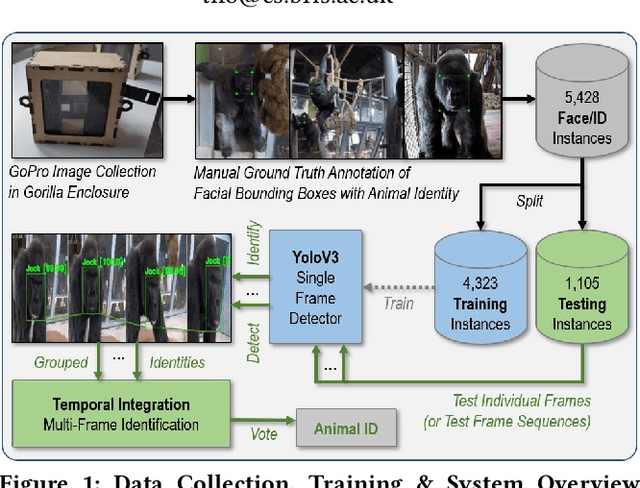

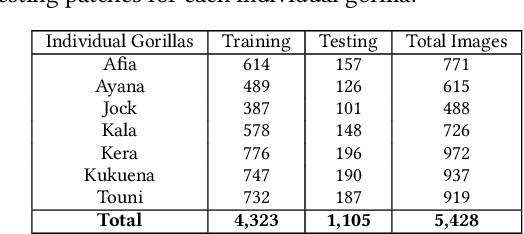

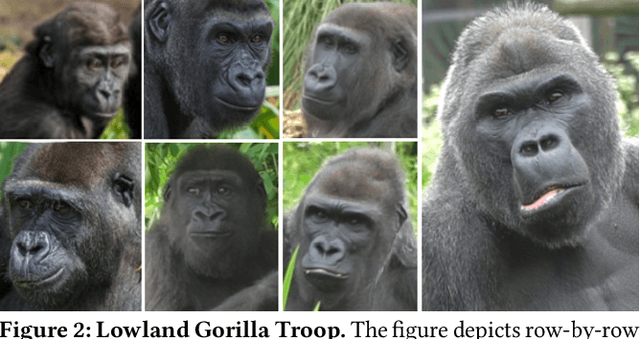

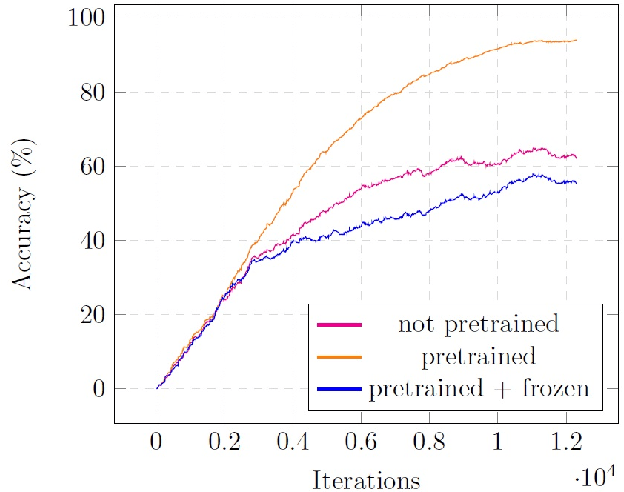

A Dataset and Application for Facial Recognition of Individual Gorillas in Zoo Environments

Dec 08, 2020

Abstract: We put forward a video dataset with 5k+ facial bounding box annotations across a troop of 7 western lowland gorillas at Bristol Zoo Gardens. Training on this dataset, we implement and evaluate a standard deep learning pipeline on the task of facially recognising individual gorillas in a zoo environment. We show that a basic YOLOv3-powered application is able to perform identifications at 92% mAP when utilising single frames only. Tracking-by-detection-association and identity voting across short tracklets yields an improved robust performance of 97% mAP. To facilitate easy utilisation for enriching the research capabilities of zoo environments, we publish the code, video dataset, weights, and ground-truth annotations at data.bris.ac.uk.
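Identity voting across a tracklet can be sketched as a simple majority vote over per-frame predictions. The abstract does not specify the voting scheme, so plain majority voting, the function name `vote_identity`, and the placeholder identity labels are all assumptions.

```python
from collections import Counter


def vote_identity(frame_predictions):
    """Return the most frequent per-frame identity within one tracklet.

    Ties go to the identity that appeared first in the tracklet, because
    Counter.most_common keeps insertion order for equal counts.
    """
    if not frame_predictions:
        raise ValueError("tracklet has no frame predictions")
    return Counter(frame_predictions).most_common(1)[0][0]


# Hypothetical per-frame predictions for one short tracklet.
print(vote_identity(["id_a", "id_b", "id_a", "id_a", "id_c"]))
```

A single misclassified frame is outvoted by its neighbours, which is one plausible reason tracklet-level results can exceed single-frame results.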

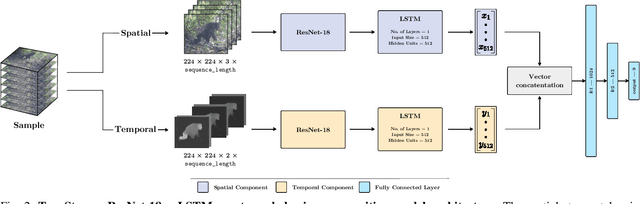

Visual Recognition of Great Ape Behaviours in the Wild

Nov 21, 2020

Abstract: We propose a first great ape-specific visual behaviour recognition system utilising deep learning that is capable of detecting nine core ape behaviours.

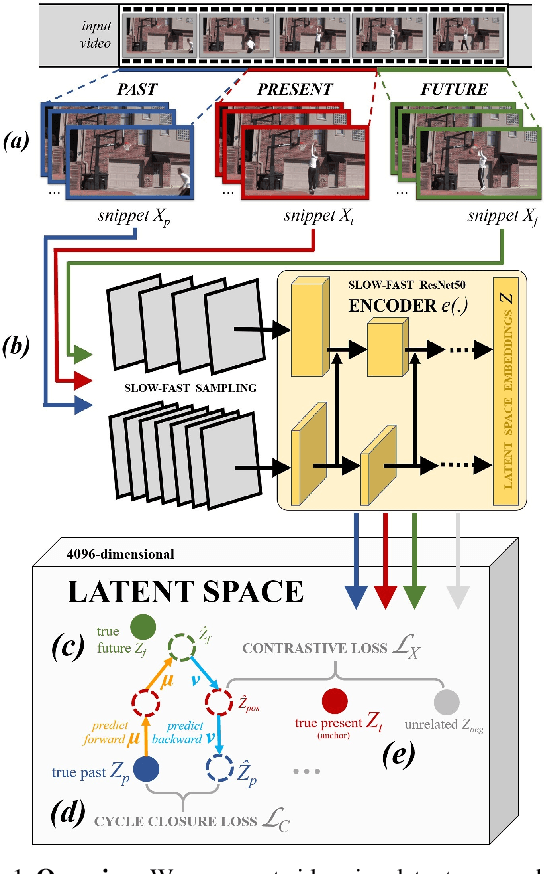

Back to the Future: Cycle Encoding Prediction for Self-supervised Contrastive Video Representation Learning

Oct 15, 2020

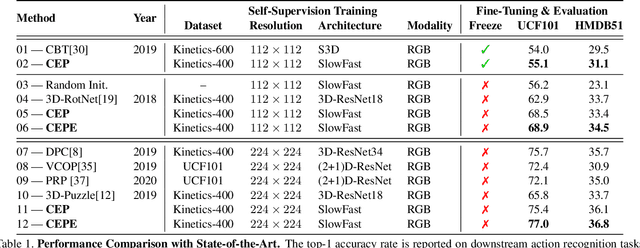

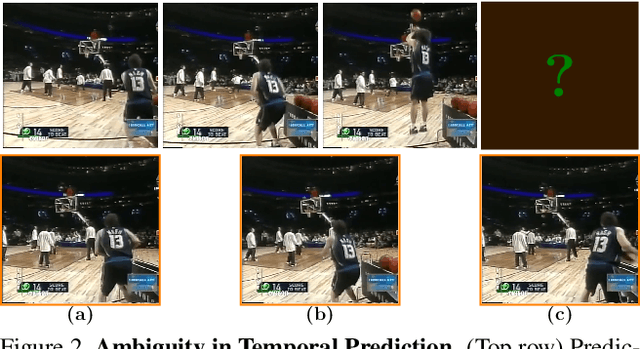

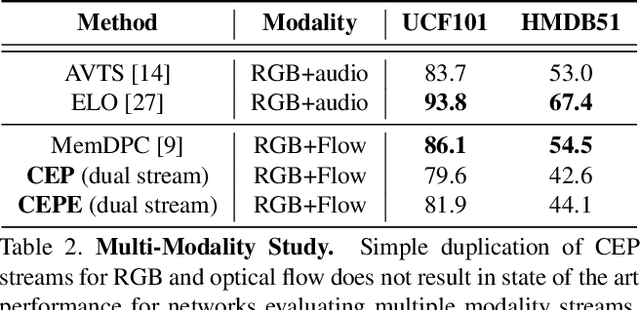

Abstract: In this paper we show that learning video feature spaces in which temporal cycles are maximally predictable benefits action classification. In particular, we propose a novel learning approach termed Cycle Encoding Prediction (CEP) that is able to effectively represent high-level spatio-temporal structure of unlabelled video content. CEP builds a latent space wherein the concept of closed forward-backward as well as backward-forward temporal loops is approximately preserved. As a self-supervision signal, CEP leverages the bi-directional temporal coherence of the video stream and applies loss functions that encourage both temporal cycle closure as well as contrastive feature separation. Architecturally, the underpinning network structure utilises a single feature encoder for all video snippets, adding two predictive modules that learn temporal forward and backward transitions. We apply our framework for pretext training of networks for action recognition tasks. We report significantly improved results for the standard datasets UCF101 and HMDB51. Detailed ablation studies support the effectiveness of the proposed components. We publish source code for the CEP components in full with this paper.
