Thomas Vandal

Spectral Synthesis for Satellite-to-Satellite Translation

Oct 12, 2020

Abstract:Earth observing satellites carrying multi-spectral sensors are widely used to monitor the physical and biological states of the atmosphere, land, and oceans. These satellites have different vantage points above the earth and different spectral imaging bands resulting in inconsistent imagery from one to another. This presents challenges in building downstream applications. What if we could generate synthetic bands for existing satellites from the union of all domains? We tackle the problem of generating synthetic spectral imagery for multispectral sensors as an unsupervised image-to-image translation problem with partial labels and introduce a novel shared spectral reconstruction loss. Simulated experiments performed by dropping one or more spectral bands show that cross-domain reconstruction outperforms measurements obtained from a second vantage point. On a downstream cloud detection task, we show that generating synthetic bands with our model improves segmentation performance beyond our baseline. Our proposed approach enables synchronization of multispectral data and provides a basis for more homogeneous remote sensing datasets.

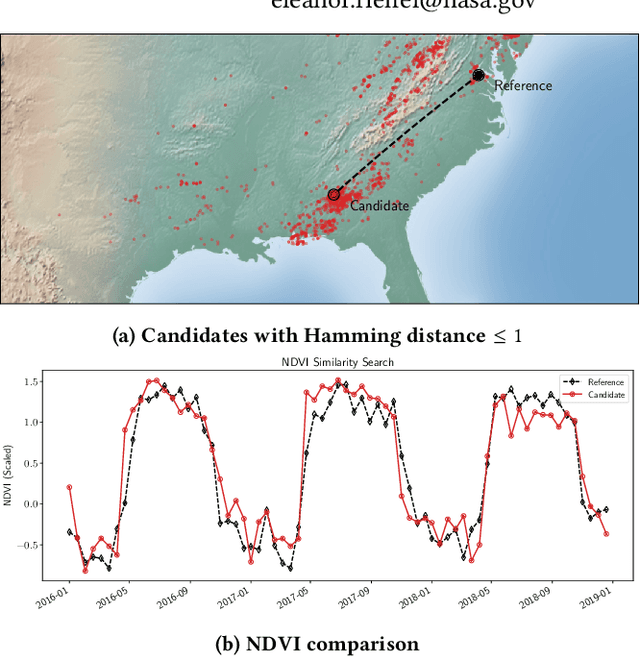

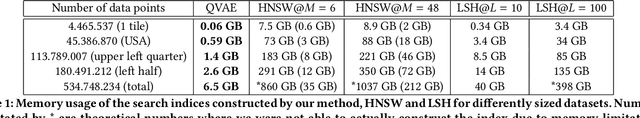

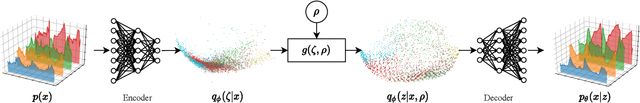

High-Dimensional Similarity Search with Quantum-Assisted Variational Autoencoder

Jun 13, 2020

Abstract:Recent progress in quantum algorithms and hardware indicates the potential importance of quantum computing in the near future. However, finding suitable application areas remains an active area of research. Quantum machine learning is touted as a potential approach to demonstrate quantum advantage within both the gate-model and the adiabatic schemes. For instance, the Quantum-assisted Variational Autoencoder (QVAE) has been proposed as a quantum enhancement to the discrete VAE. We extend previous work and study the real-world applicability of a QVAE by presenting a proof-of-concept for similarity search in large-scale high-dimensional datasets. While exact and fast similarity search algorithms are available for low-dimensional datasets, scaling to high-dimensional data is non-trivial. We show how to construct a space-efficient search index based on the latent space representation of a QVAE. Our experiments show a correlation between the Hamming distance in the embedded space and the Euclidean distance in the original space on the Moderate Resolution Imaging Spectroradiometer (MODIS) dataset. Further, we find real-world speedups compared to linear search and demonstrate memory-efficient scaling to half a billion data points.
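
To make the Hamming-distance comparison in the abstract above concrete, here is a minimal sketch of nearest-neighbour lookup over bit-packed binary codes. It assumes the latent codes are already available as 0/1 arrays; the function names `pack_codes` and `hamming_search` are hypothetical, and the brute-force scan shown here only illustrates the distance computation, not the search index the paper constructs.

```python
import numpy as np

def pack_codes(bits):
    """Pack an (n, d) array of 0/1 values into (n, ceil(d / 8)) uint8 rows."""
    return np.packbits(np.asarray(bits, dtype=np.uint8), axis=1)

# Lookup table: number of set bits for every possible byte value.
_POPCOUNT = np.array([bin(i).count("1") for i in range(256)], dtype=np.uint8)

def hamming_search(index, query, k=5):
    """Return indices and Hamming distances of the k codes closest to `query`.

    `index` is an (n, n_bytes) packed array and `query` a packed (n_bytes,) row.
    """
    xor = np.bitwise_xor(index, query)                 # differing bits, per byte
    dist = _POPCOUNT[xor].sum(axis=1, dtype=np.int64)  # popcount per stored code
    order = np.argsort(dist, kind="stable")[:k]
    return order, dist[order]
```

Packing eight bits per byte is what keeps such an index compact: a 64-bit code occupies 8 bytes per data point regardless of the dimensionality of the original data.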

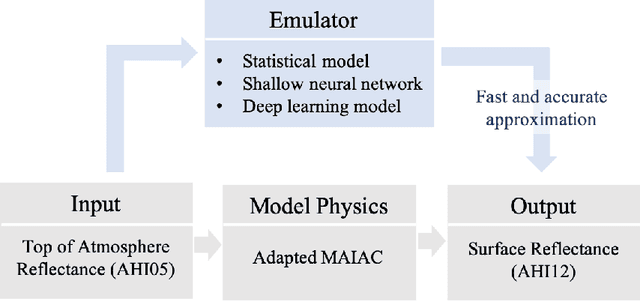

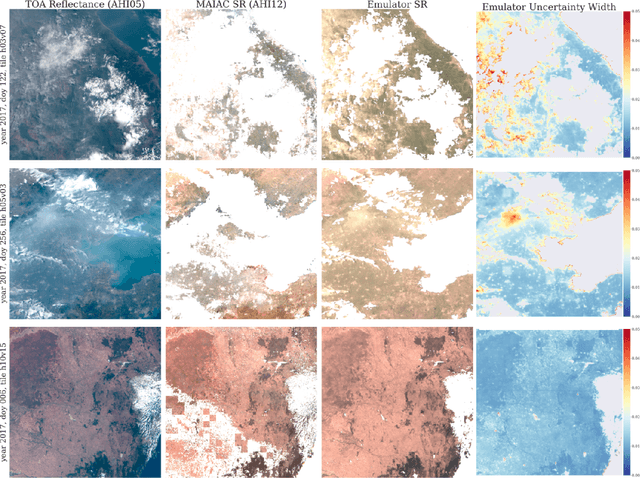

Deep Learning Emulation of Multi-Angle Implementation of Atmospheric Correction (MAIAC)

Oct 29, 2019

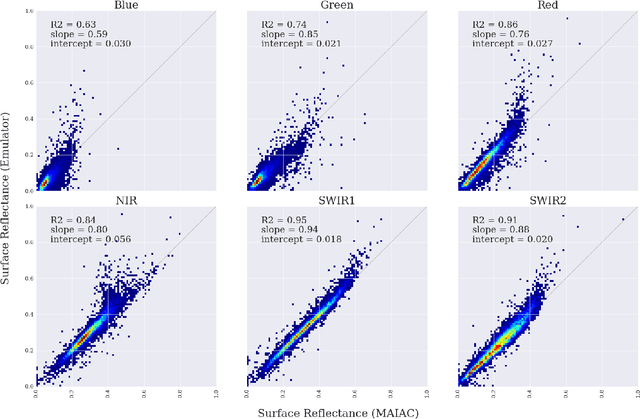

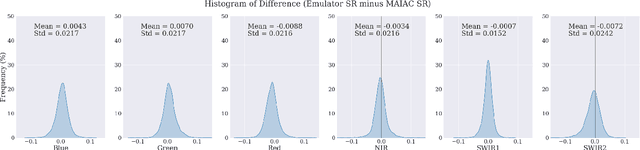

Abstract:New generation geostationary satellites make solar reflectance observations available at a continental scale with unprecedented spatiotemporal resolution and spectral range. Generating quality land monitoring products requires correction of the effects of atmospheric scattering and absorption, which vary in time and space according to geometry and atmospheric composition. Many atmospheric radiative transfer models, including that of Multi-Angle Implementation of Atmospheric Correction (MAIAC), are too computationally complex to be run in real time, and rely on precomputed look-up tables. Additionally, uncertainty in measurements and models for remote sensing receives insufficient attention, in part due to the difficulty of obtaining sufficient ground measurements. In this paper, we present an adaptation of Bayesian Deep Learning (BDL) to emulation of the MAIAC atmospheric correction algorithm. Emulation approaches learn a statistical model as an efficient approximation of a physical model, while machine learning methods have demonstrated performance in extracting spatial features and learning complex, nonlinear mappings. We demonstrate stable surface reflectance (SR) retrieval by emulation (R² values between MAIAC and emulator SR are 0.63, 0.75, 0.86, 0.84, 0.95, and 0.91 for the Blue, Green, Red, NIR, SWIR1, and SWIR2 bands, respectively), accurate cloud detection (86%), and well-calibrated, geolocated uncertainty estimates. Our results support BDL-based emulation as an accurate and efficient (up to 6x speedup) method for approximating atmospheric correction, where built-in uncertainty estimates stand to open new opportunities for model assessment and support informed use of SR-derived quantities in multiple domains.

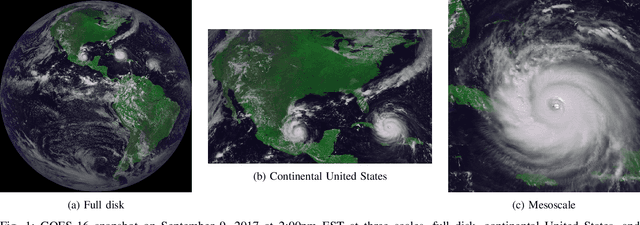

Optical Flow for Intermediate Frame Interpolation of Multispectral Geostationary Satellite Data

Jul 28, 2019

Abstract:Applications of satellite data in areas such as weather tracking and modeling, ecosystem monitoring, wildfire detection, and landcover change are heavily dependent on the trade-offs related to the spatial, spectral and temporal resolutions of the observations. For instance, geostationary weather tracking satellites are designed to take hemispherical snapshots many times throughout the day but sensor hardware limits data collection. In this work we tackle this limitation by developing a method for temporal upsampling of multi-spectral satellite imagery using optical flow video interpolation deep convolutional neural networks. The presented model extends Super SloMo (SSM) from single optical flow estimates to multichannel estimates, where flows are computed per wavelength band. We apply this technique on up to 8 multispectral bands of the GOES-R/Advanced Baseline Imager mesoscale dataset to temporally enhance full disk hemispheric snapshots from 15 minutes to 1 minute. Through extensive experimentation, we show that SSM greatly outperforms the linear interpolation baseline and that multichannel optical flows improve performance on GOES/ABI. Furthermore, we discuss challenges and open questions related to temporal interpolation of multispectral geostationary satellite imagery.
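
The linear interpolation baseline mentioned in the abstract above can be sketched as a per-pixel blend of two consecutive frames. This is a generic illustration, not the paper's evaluation code; the function name `linear_interpolate` and the (height, width, bands) array layout are assumptions.

```python
import numpy as np

def linear_interpolate(frame0, frame1, n_intermediate):
    """Blend two frames into `n_intermediate` evenly spaced in-between frames.

    Frames are arrays of identical shape, e.g. (height, width, bands).
    Returns an array of shape (n_intermediate, *frame0.shape).
    """
    f0 = np.asarray(frame0, dtype=np.float64)
    f1 = np.asarray(frame1, dtype=np.float64)
    # Time fractions strictly between the two observed frames.
    ts = np.arange(1, n_intermediate + 1) / (n_intermediate + 1)
    return np.stack([(1.0 - t) * f0 + t * f1 for t in ts])
```

Going from a 15-minute to a 1-minute cadence corresponds to `n_intermediate=14`. Because each pixel is blended in place, this baseline cannot represent motion between frames, which is the gap that optical flow methods aim to close.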

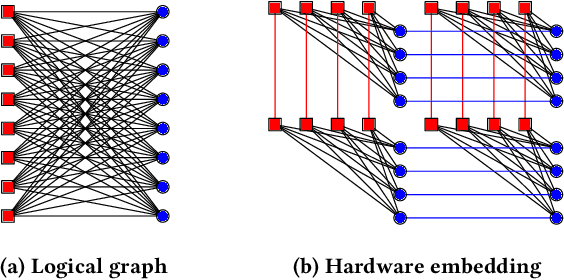

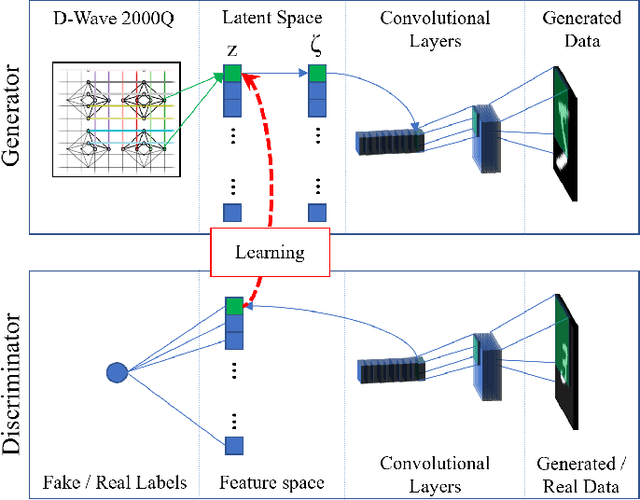

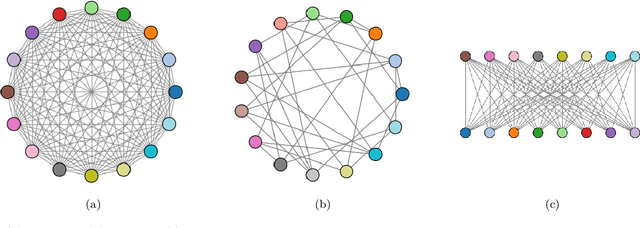

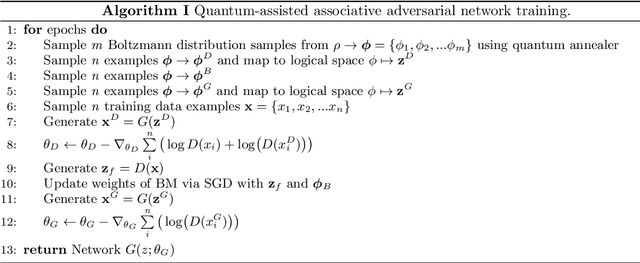

Quantum-assisted associative adversarial network: Applying quantum annealing in deep learning

Apr 23, 2019

Abstract:We present an algorithm for learning a latent variable generative model via generative adversarial learning where the canonical uniform noise input is replaced by samples from a graphical model. This graphical model is learned by a Boltzmann machine which learns a low-dimensional feature representation of data extracted by the discriminator. A quantum annealer, the D-Wave 2000Q, is used to sample from this model. This algorithm joins a growing family of algorithms that use a quantum annealing subroutine in deep learning, and provides a framework to test the advantages of quantum-assisted learning in GANs. Fully connected, symmetric bipartite and Chimera graph topologies are compared on a reduced stochastically binarized MNIST dataset, for both classical and quantum annealing sampling methods. The quantum-assisted associative adversarial network successfully learns a generative model of the MNIST dataset for all topologies, and is also applied to the LSUN dataset bedrooms class for the Chimera topology. Evaluated using the Fréchet inception distance and inception score, the quantum and classical versions of the algorithm are found to have equivalent performance for learning an implicit generative model of the MNIST dataset.

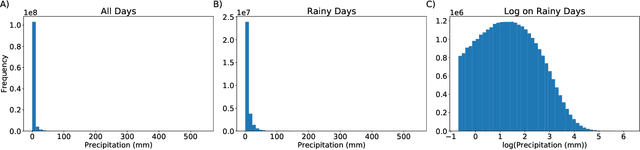

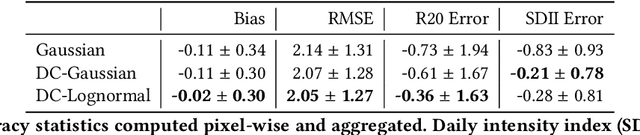

Quantifying Uncertainty in Discrete-Continuous and Skewed Data with Bayesian Deep Learning

May 24, 2018

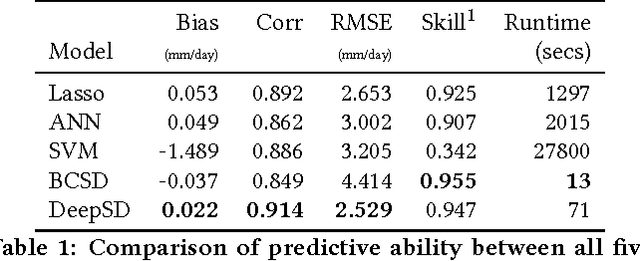

Abstract:Deep Learning (DL) methods have been transforming computer vision with innovative adaptations to other domains including climate change. For DL to pervade Science and Engineering (S&E) applications where risk management is a core component, well-characterized uncertainty estimates must accompany predictions. However, S&E observations and model-simulations often follow heavily skewed distributions and are not well modeled with DL approaches, since they usually optimize a Gaussian, or Euclidean, likelihood loss. Recent developments in Bayesian Deep Learning (BDL), which attempts to capture uncertainties from noisy observations, aleatoric, and from unknown model parameters, epistemic, provide us a foundation. Here we present a discrete-continuous BDL model with Gaussian and lognormal likelihoods for uncertainty quantification (UQ). We demonstrate the approach by developing UQ estimates on 'DeepSD', a super-resolution based DL model for Statistical Downscaling (SD) in climate applied to precipitation, which follows an extremely skewed distribution. We find that the discrete-continuous models outperform a basic Gaussian distribution in terms of predictive accuracy and uncertainty calibration. Furthermore, we find that the lognormal distribution, which can handle skewed distributions, produces quality uncertainty estimates at the extremes. Such results may be important across S&E, as well as other domains such as finance and economics, where extremes are often of significant interest. Furthermore, to our knowledge, this is the first UQ model in SD where both aleatoric and epistemic uncertainties are characterized.
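
As a rough illustration of what a discrete-continuous likelihood with a lognormal component can look like, the sketch below combines a Bernoulli term for whether a value is nonzero with a lognormal density for the positive values. This is a generic hurdle-style formulation written under assumptions; the function name `dc_lognormal_nll` and the parameterisation (`p`, `mu`, `sigma`) are hypothetical and may differ from the loss used in the paper.

```python
import numpy as np

def dc_lognormal_nll(y, p, mu, sigma):
    """Mean negative log-likelihood of a discrete-continuous lognormal model.

    y     : observations, zero or positive (e.g. daily precipitation)
    p     : predicted probability that y > 0
    mu    : predicted mean of log(y), given y > 0
    sigma : predicted standard deviation of log(y), given y > 0
    """
    y = np.asarray(y, dtype=np.float64)
    wet = y > 0
    # Placeholder value avoids log(0); the result is ignored where y == 0.
    log_y = np.log(np.where(wet, y, 1.0))
    log_pdf = (
        -log_y
        - np.log(sigma)
        - 0.5 * np.log(2.0 * np.pi)
        - (log_y - mu) ** 2 / (2.0 * sigma ** 2)
    )
    # Nonzero values: log p + lognormal log-density. Zero values: log(1 - p).
    log_lik = np.where(wet, np.log(p) + log_pdf, np.log1p(-p))
    return -log_lik.mean()
```

In a network, `p`, `mu`, and `sigma` would be predicted per pixel, so the predicted `sigma` describes observation noise (the aleatoric part); epistemic uncertainty would have to be estimated separately, for example by sampling model weights.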

* 10 Pages

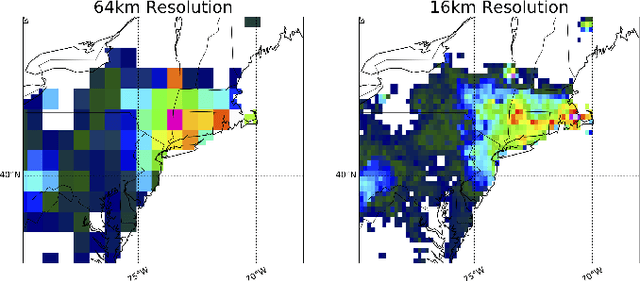

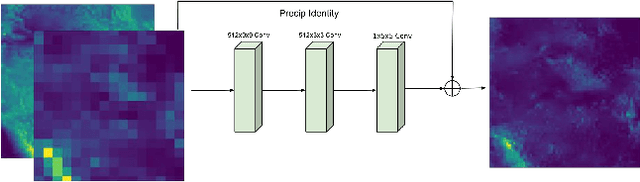

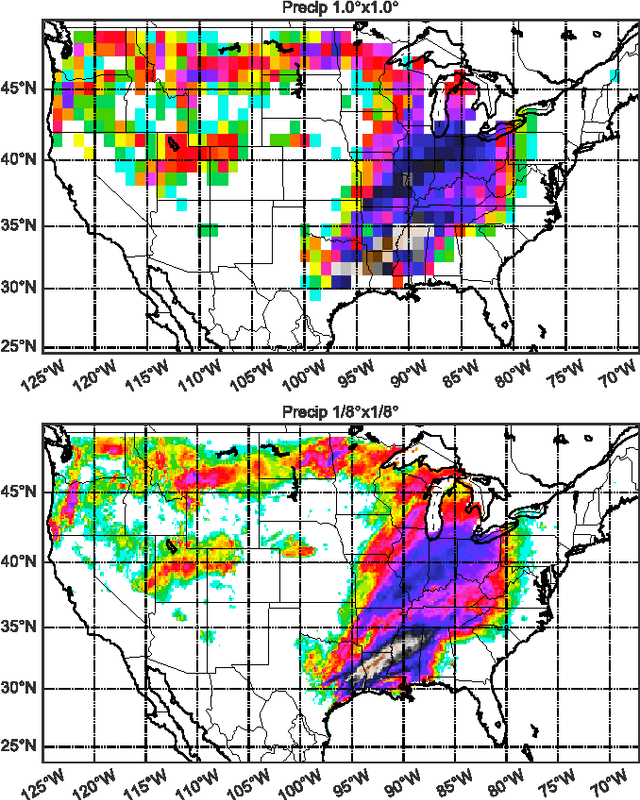

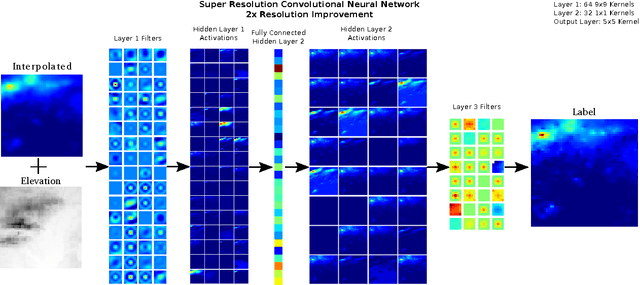

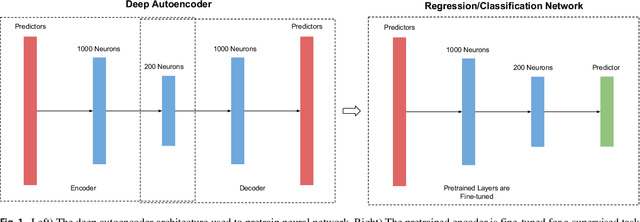

DeepSD: Generating High Resolution Climate Change Projections through Single Image Super-Resolution

Mar 09, 2017

Abstract:The impacts of climate change are felt by most critical systems, such as infrastructure, ecological systems, and power plants. However, contemporary Earth System Models (ESM) are run at spatial resolutions too coarse for assessing effects this localized. Local scale projections can be obtained using statistical downscaling, a technique which uses historical climate observations to learn a low-resolution to high-resolution mapping. Depending on statistical modeling choices, downscaled projections have been shown to vary significantly in terms of accuracy and reliability. The spatio-temporal nature of the climate system motivates the adaptation of super-resolution image processing techniques to statistical downscaling. In our work, we present DeepSD, a generalized stacked super resolution convolutional neural network (SRCNN) framework for statistical downscaling of climate variables. DeepSD augments SRCNN with multi-scale input channels to maximize predictability in statistical downscaling. We provide a comparison with Bias Correction Spatial Disaggregation as well as three Automated-Statistical Downscaling approaches in downscaling daily precipitation from 1 degree (~100km) to 1/8 degree (~12.5km) over the Continental United States. Furthermore, a framework using the NASA Earth Exchange (NEX) platform is discussed for downscaling more than 20 ESM models with multiple emission scenarios.

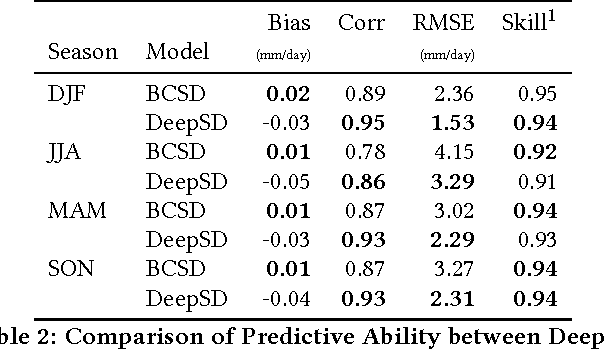

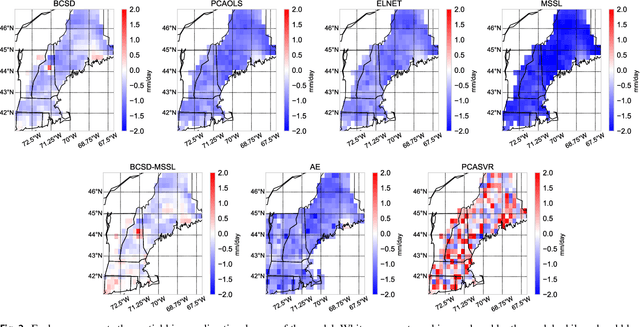

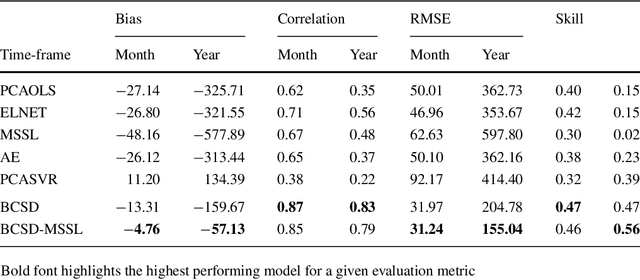

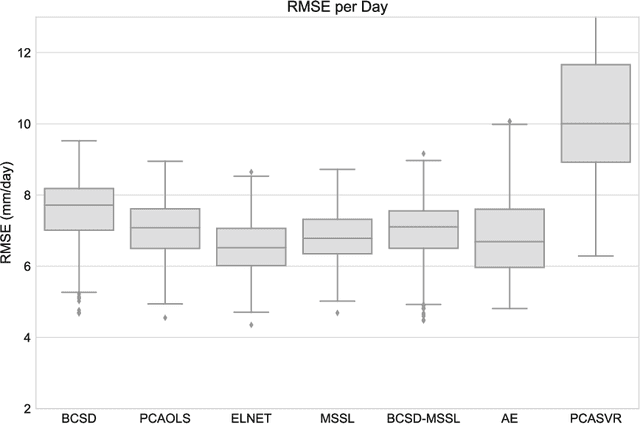

Intercomparison of Machine Learning Methods for Statistical Downscaling: The Case of Daily and Extreme Precipitation

Feb 13, 2017

Abstract:Statistical downscaling of global climate models (GCMs) allows researchers to study local climate change effects decades into the future. A wide range of statistical models have been applied to downscaling GCMs but recent advances in machine learning have not been explored. In this paper, we compare four fundamental statistical methods, Bias Correction Spatial Disaggregation (BCSD), Ordinary Least Squares, Elastic-Net, and Support Vector Machine, with three more advanced machine learning methods, Multi-task Sparse Structure Learning (MSSL), BCSD coupled with MSSL, and Convolutional Neural Networks, to downscale daily precipitation in the Northeast United States. Metrics evaluating each method's ability to capture daily anomalies, large scale climate shifts, and extremes are analyzed. We find that linear methods, led by BCSD, consistently outperform non-linear approaches. The direct application of state-of-the-art machine learning methods to statistical downscaling does not provide improvements over simpler, longstanding approaches.
