Suryakanth V Gangashetty

SPRING-INX: A Multilingual Indian Language Speech Corpus by SPRING Lab, IIT Madras

Oct 24, 2023

Abstract: India is home to a multitude of languages, of which 22 are recognised by the Indian Constitution as official. Building speech-based applications for the Indian population is a difficult problem owing to limited data and the number of languages and accents to accommodate. To encourage the language technology community to build speech-based applications in Indian languages, we are open sourcing SPRING-INX data, which has about 2000 hours of legally sourced and manually transcribed speech data for ASR system building in Assamese, Bengali, Gujarati, Hindi, Kannada, Malayalam, Marathi, Odia, Punjabi and Tamil. This endeavor is by SPRING Lab, Indian Institute of Technology Madras, and is a part of the National Language Translation Mission (NLTM), funded by the Indian Ministry of Electronics and Information Technology (MeitY), Government of India. We describe the data collection and data cleaning process along with the data statistics in this paper.

Bayesian multilingual topic model for zero-shot cross-lingual topic identification

Jul 02, 2020

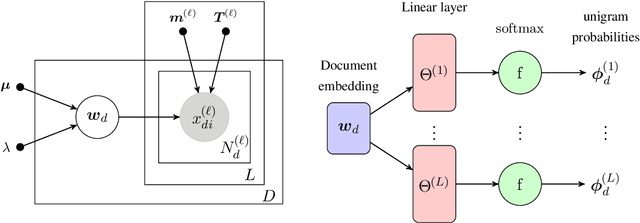

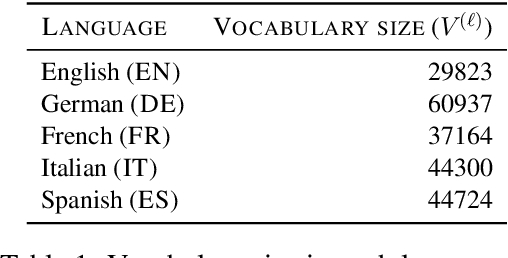

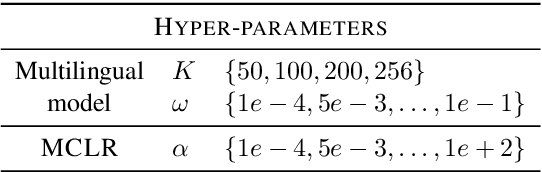

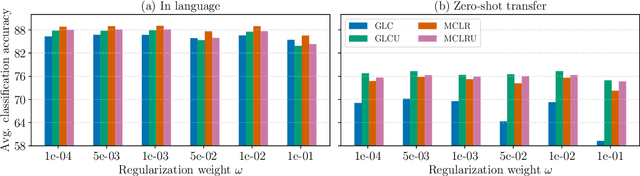

Abstract: This paper presents a Bayesian multilingual topic model for learning language-independent document embeddings. Our model learns to represent the documents in the form of Gaussian distributions, thereby encoding the uncertainty in its covariance. We propagate the learned uncertainties through linear classifiers for zero-shot cross-lingual topic identification. Our experiments on the 5-language Europarl and Reuters (MLDoc) corpora show that the proposed model outperforms multilingual word embedding and BiLSTM sentence encoder based systems by significant margins in the majority of the transfer directions. Moreover, our system, trained in under a single day on a single GPU with much lower amounts of data, performs competitively with the state-of-the-art universal BiLSTM sentence encoder trained on 93 languages. Our experimental analysis shows that a larger amount of parallel data improves the overall performance of the embeddings. Nonetheless, exploiting the uncertainties is always beneficial.
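The idea of propagating embedding uncertainty through a linear classifier can be illustrated with a minimal sketch. It is not the authors' implementation: it assumes a diagonal covariance, a softmax output, and Monte Carlo sampling to approximate the expected class posterior, and all function names are hypothetical. If a document embedding is x ~ N(mean, diag(var)), each logit w_k . x + b_k is itself Gaussian with mean w_k . mean + b_k and variance sum_j w_kj^2 var_j.

```python
import math
import random


def logit_moments(W, b, mean, var):
    """Mean and variance of each logit when x ~ N(mean, diag(var))."""
    mu = [sum(w * m for w, m in zip(row, mean)) + bk for row, bk in zip(W, b)]
    s2 = [sum(w * w * v for w, v in zip(row, var)) for row in W]
    return mu, s2


def softmax(z):
    """Numerically stable softmax over a list of logits."""
    top = max(z)
    e = [math.exp(v - top) for v in z]
    total = sum(e)
    return [v / total for v in e]


def expected_posterior(W, b, mean, var, n_samples=2000, seed=0):
    """Monte Carlo estimate of E[softmax(Wx + b)] under x ~ N(mean, diag(var)).

    Sampling is an assumption of this sketch, not the paper's method.
    """
    rng = random.Random(seed)
    acc = [0.0] * len(W)
    for _ in range(n_samples):
        # Draw one embedding sample from the document's Gaussian.
        x = [rng.gauss(m, math.sqrt(v)) for m, v in zip(mean, var)]
        logits = [sum(w * xi for w, xi in zip(row, x)) + bk
                  for row, bk in zip(W, b)]
        for k, p in enumerate(softmax(logits)):
            acc[k] += p
    return [a / n_samples for a in acc]
```

With zero variance the estimate reduces to the ordinary point-estimate softmax; with a large variance the predicted posterior becomes less confident, which is the behaviour an uncertainty-aware classifier is meant to capture.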

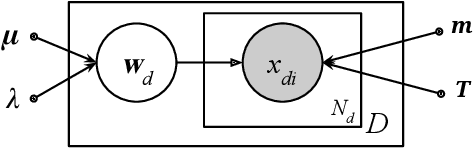

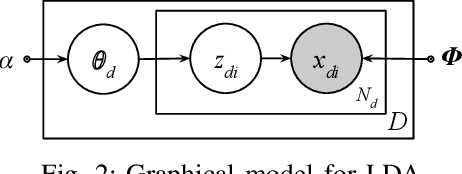

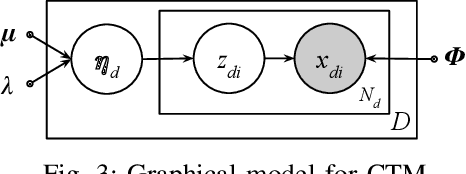

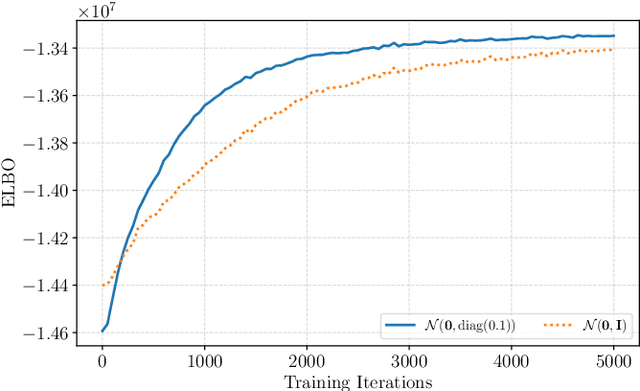

Learning document embeddings along with their uncertainties

Aug 29, 2019

Abstract: The majority of text modelling techniques yield only point estimates of document embeddings and fail to capture the uncertainty of the estimates. These uncertainties give a notion of how well the embeddings represent a document. We present the Bayesian subspace multinomial model (Bayesian SMM), a generative log-linear model that learns to represent documents in the form of Gaussian distributions, thereby encoding the uncertainty in its covariance. Additionally, in the proposed Bayesian SMM, we address a commonly encountered problem of intractability that appears during variational inference in mixed-logit models. We also present a generative Gaussian linear classifier for topic identification that exploits the uncertainty in document embeddings. Our intrinsic evaluation using the perplexity measure shows that the proposed Bayesian SMM fits the data better than a variational auto-encoder based document model. Our topic identification experiments on speech (Fisher) and text (20Newsgroups) corpora show that the proposed Bayesian SMM is robust to over-fitting on unseen test data. The topic ID results show that the proposed model is significantly better than variational auto-encoder based methods and achieves results similar to those of fully supervised discriminative models.
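For readers unfamiliar with the perplexity measure used in the intrinsic evaluation, the following is a minimal sketch of how it is commonly computed for a document model. It assumes the model supplies a word probability distribution for each document; the function name and the input layout (dictionaries of counts and probabilities) are hypothetical and not taken from the paper. Perplexity is the exponential of the negative average per-token log-likelihood, so lower values indicate a better fit.

```python
import math


def perplexity(docs, word_probs):
    """Corpus perplexity: exp(-total log-likelihood / total token count).

    docs:       list of {word: count} dictionaries, one per document.
    word_probs: list of {word: probability} dictionaries, one per document,
                as produced by some document model (assumed given here).
    """
    log_lik = 0.0
    n_tokens = 0
    for counts, probs in zip(docs, word_probs):
        for word, c in counts.items():
            log_lik += c * math.log(probs[word])
            n_tokens += c
    return math.exp(-log_lik / n_tokens)
```

As a sanity check, a model that assigns uniform probability over a vocabulary of V words has perplexity V on any document.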

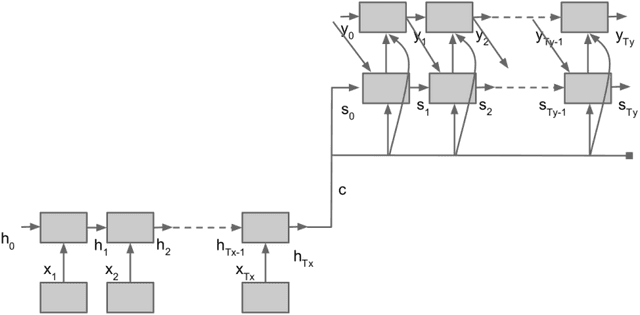

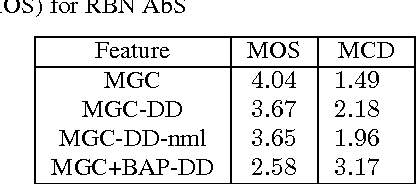

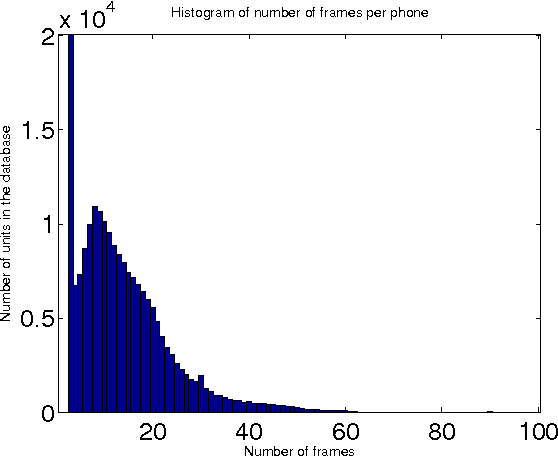

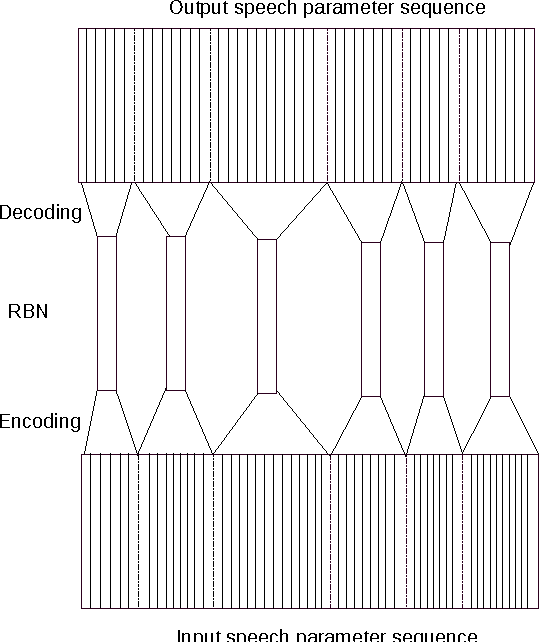

Statistical Parametric Speech Synthesis Using Bottleneck Representation From Sequence Auto-encoder

Jun 19, 2016

Abstract: In this paper, we describe a statistical parametric speech synthesis approach with unit-level acoustic representation. In conventional deep neural network based speech synthesis, the input text features are repeated for the entire duration of the phoneme for mapping text and speech parameters. This mapping is learnt at the frame level, which is the de facto acoustic representation. However, much of this computational requirement can be drastically reduced if every unit can be represented with a fixed-dimensional representation. Using a recurrent neural network based auto-encoder, we show that it is indeed possible to map units of varying duration to a single vector. We then use this acoustic representation at the unit level to synthesize speech using a deep neural network based statistical parametric speech synthesis technique. Results show that the proposed approach is able to synthesize at the same quality as the conventional frame-based approach at a highly reduced computational cost.
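The central claim, that a recurrent encoder can map units of varying duration to a single fixed-dimensional vector, can be illustrated with a small sketch. It is not the authors' architecture: it assumes a plain tanh recurrent cell with untrained random weights, omits the decoder half of the auto-encoder, and uses hypothetical function names. It only shows that the final hidden state has the same size regardless of how many frames the unit contains.

```python
import math
import random


def make_encoder(input_dim, hidden_dim, seed=0):
    """Random (untrained) weights for a simple tanh recurrent cell."""
    rng = random.Random(seed)
    W_in = [[rng.uniform(-0.1, 0.1) for _ in range(input_dim)]
            for _ in range(hidden_dim)]
    W_rec = [[rng.uniform(-0.1, 0.1) for _ in range(hidden_dim)]
             for _ in range(hidden_dim)]
    return W_in, W_rec


def encode_unit(frames, W_in, W_rec):
    """Run the recurrent cell over all frames; return the final hidden state.

    frames: list of acoustic feature vectors, one per frame of the unit.
    The returned vector always has len(W_in) entries.
    """
    h = [0.0] * len(W_in)
    for x in frames:
        h = [math.tanh(sum(wi * xi for wi, xi in zip(W_in[k], x))
                       + sum(wr * hj for wr, hj in zip(W_rec[k], h)))
             for k in range(len(W_in))]
    return h
```

A 3-frame unit and a 40-frame unit both yield a vector of `hidden_dim` values, so a downstream network needs one prediction per unit rather than one per frame.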
