Soochahn Lee

Contamination Detection for VLMs using Multi-Modal Semantic Perturbation

Nov 05, 2025

Abstract:Recent advances in Vision-Language Models (VLMs) have achieved state-of-the-art performance on numerous benchmark tasks. However, the use of internet-scale, often proprietary, pretraining corpora raises a critical concern for both practitioners and users: inflated performance due to test-set leakage. While prior works have proposed mitigation strategies such as decontamination of pretraining data and benchmark redesign for LLMs, the complementary direction of developing detection methods for contaminated VLMs remains underexplored. To address this gap, we deliberately contaminate open-source VLMs on popular benchmarks and show that existing detection approaches either fail outright or exhibit inconsistent behavior. We then propose a novel, simple yet effective detection method based on multi-modal semantic perturbation, demonstrating that contaminated models fail to generalize under controlled perturbations. Finally, we validate our approach across multiple realistic contamination strategies, confirming its robustness and effectiveness. The code and perturbed dataset will be released publicly.
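The idea that contaminated models "fail to generalize under controlled perturbations" can be illustrated with a minimal sketch. This is not the authors' released code. The function names, the exact-match scoring, and the 0.1 threshold are all assumptions made for illustration; the abstract does not state the decision rule.

```python
from typing import Sequence


def accuracy(predictions: Sequence[str], answers: Sequence[str]) -> float:
    """Fraction of predictions that exactly match the reference answers."""
    if len(predictions) != len(answers) or not answers:
        raise ValueError("predictions and answers must be non-empty and equal in length")
    return sum(p == a for p, a in zip(predictions, answers)) / len(answers)


def perturbation_gap(
    orig_preds: Sequence[str],
    orig_answers: Sequence[str],
    pert_preds: Sequence[str],
    pert_answers: Sequence[str],
) -> float:
    """Accuracy on the original benchmark minus accuracy on its perturbed version."""
    return accuracy(orig_preds, orig_answers) - accuracy(pert_preds, pert_answers)


def looks_contaminated(gap: float, threshold: float = 0.1) -> bool:
    """Hypothetical decision rule: flag a model whose accuracy drops by more than `threshold`."""
    return gap > threshold


# A model that memorized the original answers keeps giving them
# even after the perturbation has changed the correct answer.
orig_answers = ["A", "B", "C", "D"]
pert_answers = ["B", "B", "A", "D"]
memorized = ["A", "B", "C", "D"]

gap = perturbation_gap(memorized, orig_answers, memorized, pert_answers)
flagged = looks_contaminated(gap)
```

In this toy case the model answers every original question correctly but only half of the perturbed ones, so the gap is large and the model is flagged. A model that actually reasons over the perturbed input would be expected to show a much smaller gap.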

UniTalk: Towards Universal Active Speaker Detection in Real World Scenarios

May 28, 2025

Abstract:We present UniTalk, a novel dataset specifically designed for the task of active speaker detection, emphasizing challenging scenarios to enhance model generalization. Unlike previously established benchmarks such as AVA, which predominantly features old movies and thus exhibits significant domain gaps, UniTalk focuses explicitly on diverse and difficult real-world conditions. These include underrepresented languages, noisy backgrounds, and crowded scenes, such as multiple visible speakers speaking concurrently or in overlapping turns. It contains over 44.5 hours of video with frame-level active speaker annotations across 48,693 speaking identities, and spans a broad range of video types that reflect real-world conditions. Through rigorous evaluation, we show that state-of-the-art models, while achieving nearly perfect scores on AVA, fail to reach saturation on UniTalk, suggesting that the ASD task remains far from solved under realistic conditions. Nevertheless, models trained on UniTalk demonstrate stronger generalization to modern "in-the-wild" datasets like Talkies and ASW, as well as to AVA. UniTalk thus establishes a new benchmark for active speaker detection, providing researchers with a valuable resource for developing and evaluating versatile and resilient models. Dataset: https://huggingface.co/datasets/plnguyen2908/UniTalk-ASD Code: https://github.com/plnguyen2908/UniTalk-ASD-code

Auto-regressive transformation for image alignment

May 08, 2025

Abstract:Existing methods for image alignment struggle in cases involving feature-sparse regions, extreme scale and field-of-view differences, and large deformations, often resulting in suboptimal accuracy. Robustness to these challenges improves through iterative refinement of the transformation field while focusing on critical regions in multi-scale image representations. We thus propose Auto-Regressive Transformation (ART), a novel method that iteratively estimates the coarse-to-fine transformations within an auto-regressive framework. Leveraging hierarchical multi-scale features, our network refines the transformations using randomly sampled points at each scale. By incorporating guidance from the cross-attention layer, the model focuses on critical regions, ensuring accurate alignment even in challenging, feature-limited conditions. Extensive experiments across diverse datasets demonstrate that ART significantly outperforms state-of-the-art methods, establishing it as a powerful new method for precise image alignment with broad applicability.

CoAPT: Context Attribute words for Prompt Tuning

Jul 18, 2024

Abstract:We propose a novel prompt tuning method called CoAPT (Context Attribute words in Prompt Tuning) for few/zero-shot image classification. The core motivation is that attributes are descriptive words with rich information about a given concept. Thus, we aim to enrich text queries of existing prompt tuning methods, improving alignment between text and image embeddings in CLIP embedding space. To do so, CoAPT integrates attribute words as additional prompts within learnable prompt tuning and can be easily incorporated into various existing prompt tuning methods. To facilitate the incorporation of attributes into text embeddings and the alignment with image embeddings, soft prompts are trained together with an additional meta-network that generates input-image-wise feature biases from the concatenated feature encodings of the image-text combined queries. Our experiments demonstrate that CoAPT leads to considerable improvements for existing baseline methods on several few/zero-shot image classification tasks, including base-to-novel generalization, cross-dataset transfer, and domain generalization. Our findings highlight the importance of combining hard and soft prompts and pave the way for future research on the interplay between text and image latent spaces in pre-trained models.

Generation of Structurally Realistic Retinal Fundus Images with Diffusion Models

May 11, 2023

Abstract:We introduce a new technique for generating retinal fundus images that have anatomically accurate vascular structures, using diffusion models. We generate artery/vein masks to create the vascular structure, which we then use as a condition to produce retinal fundus images. The proposed method can generate high-quality images with more realistic vascular structures and can create a diverse range of images based on the strengths of the diffusion model. We present quantitative evaluations that demonstrate the performance improvement using our method for data augmentation on vessel segmentation and artery/vein classification. We also present Turing test results by clinical experts, showing that our generated images are difficult to distinguish from real images. We believe that our method can be applied to construct stand-alone datasets that are free of patient privacy concerns.

Extraction of Coronary Vessels in Fluoroscopic X-Ray Sequences Using Vessel Correspondence Optimization

Jul 28, 2022

Abstract:We present a method to extract coronary vessels from fluoroscopic x-ray sequences. Given the vessel structure for the source frame, vessel correspondence candidates in the subsequent frame are generated by a novel hierarchical search scheme to overcome the aperture problem. Optimal correspondences are determined within a Markov random field optimization framework. Post-processing is performed to extract vessel branches newly visible due to the inflow of contrast agent. Quantitative and qualitative evaluation conducted on a dataset of 18 sequences demonstrates the effectiveness of the proposed method.

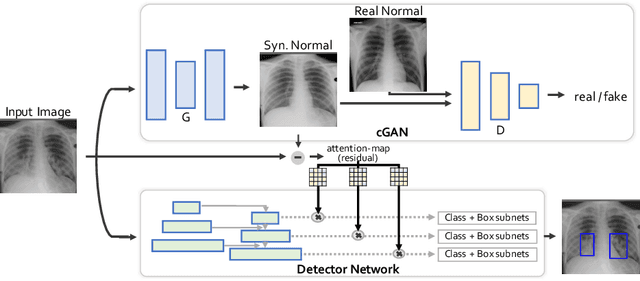

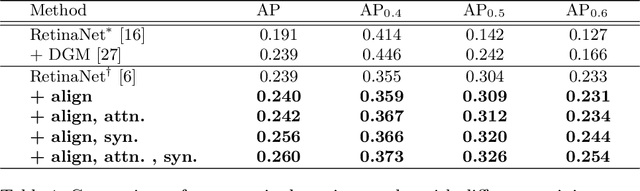

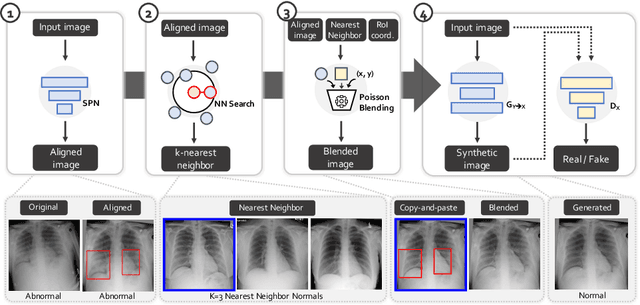

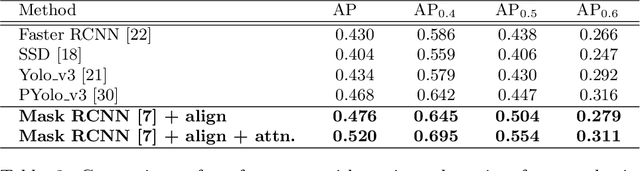

Generative Residual Attention Network for Disease Detection

Oct 25, 2021

Abstract:Accurate identification and localization of abnormalities from radiology images plays a critical role in computer-aided diagnosis (CAD) systems. Building a highly generalizable system usually requires a large amount of data with high-quality annotations, including disease-specific global and localization information. However, in medical images, only a limited number of high-quality images and annotations are available due to annotation expenses. In this paper, we explore this problem by presenting a novel approach for disease generation in X-rays using conditional generative adversarial learning. Specifically, given a chest X-ray image from a source domain, we generate a corresponding radiology image in a target domain while preserving the identity of the patient. We then use the generated X-ray image in the target domain to augment our training to improve the detection performance. We also present a unified framework that simultaneously performs disease generation and localization. We evaluate the proposed approach on the X-ray image dataset provided by the Radiological Society of North America (RSNA), surpassing the state-of-the-art baseline detection algorithms.

Scale Space Approximation in Convolutional Neural Networks for Retinal Vessel Segmentation

Oct 18, 2018

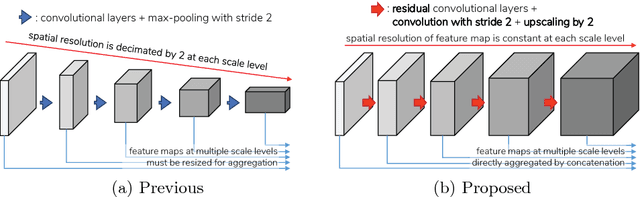

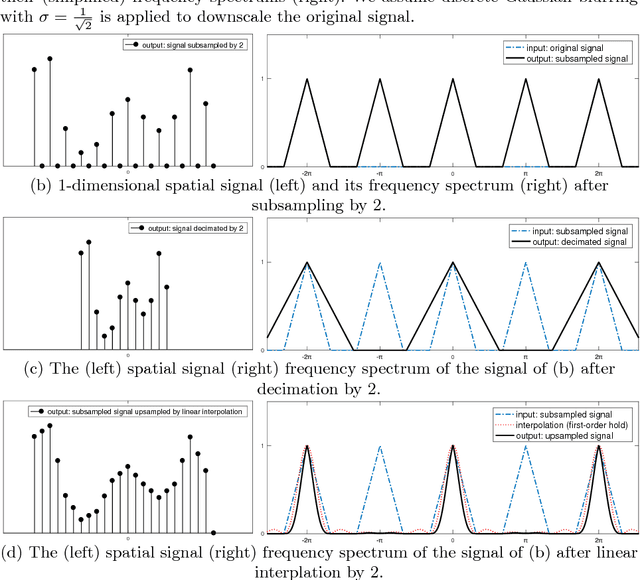

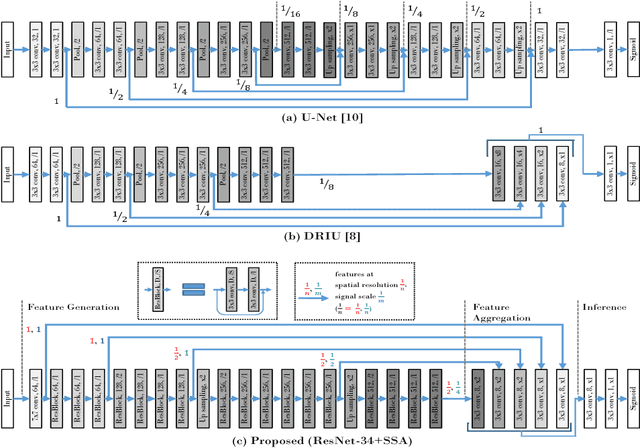

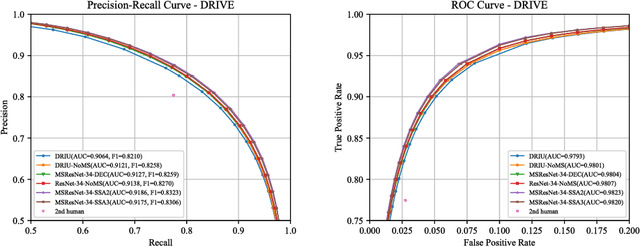

Abstract:Retinal images have the highest resolution and clarity among medical images. Thus, vessel analysis in retinal images may facilitate early diagnosis and treatment of many chronic diseases. In this paper, we propose a novel multi-scale residual convolutional neural network structure based on a scale-space approximation (SSA) block of layers, comprising subsampling and subsequent upsampling, for multi-scale representation. Through analysis in the frequency domain, we show that this block structure is a close approximation of Gaussian filtering, the operation to achieve scale variations in scale-space theory. Experimental evaluations demonstrate that the proposed network outperforms current state-of-the-art methods. Ablative analysis shows that the SSA is indeed an important factor in performance improvement.
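The claim that subsampling followed by upsampling behaves like a smoothing (low-pass) filter can be seen in a toy 1D sketch. This is not the paper's SSA block: the abstract does not say which layers are used, so the stride-2 average pooling and nearest-neighbour upsampling below are assumptions chosen for simplicity.

```python
from typing import List, Sequence


def subsample_then_upsample(signal: Sequence[float]) -> List[float]:
    """Average-pool with stride 2, then upsample by repeating each value twice.

    Assumed stand-in for a subsampling + upsampling block; the output has the
    same length as the input.
    """
    if len(signal) % 2 != 0:
        raise ValueError("signal length must be even")
    pooled = [(signal[i] + signal[i + 1]) / 2 for i in range(0, len(signal), 2)]
    return [value for value in pooled for _ in range(2)]


# The lowest frequency (a constant) passes through unchanged ...
constant = [3.0] * 8
smoothed_constant = subsample_then_upsample(constant)

# ... while the highest frequency (sign alternating every sample) is removed.
alternating = [1.0, -1.0] * 4
smoothed_alternating = subsample_then_upsample(alternating)
```

Keeping low frequencies and suppressing high ones is the qualitative behavior of Gaussian filtering. How closely the approximation holds for the actual network layers is what the paper's frequency-domain analysis addresses.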

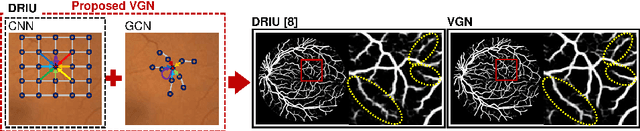

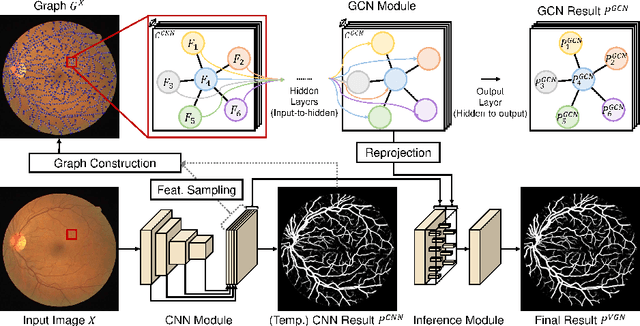

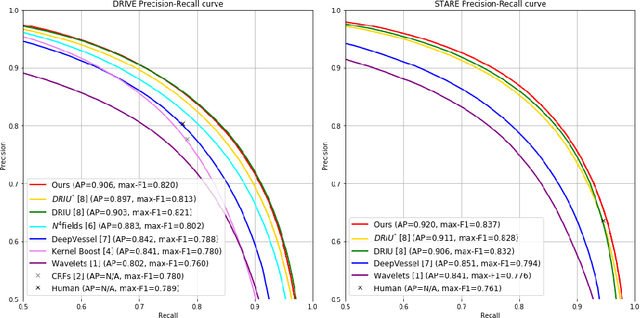

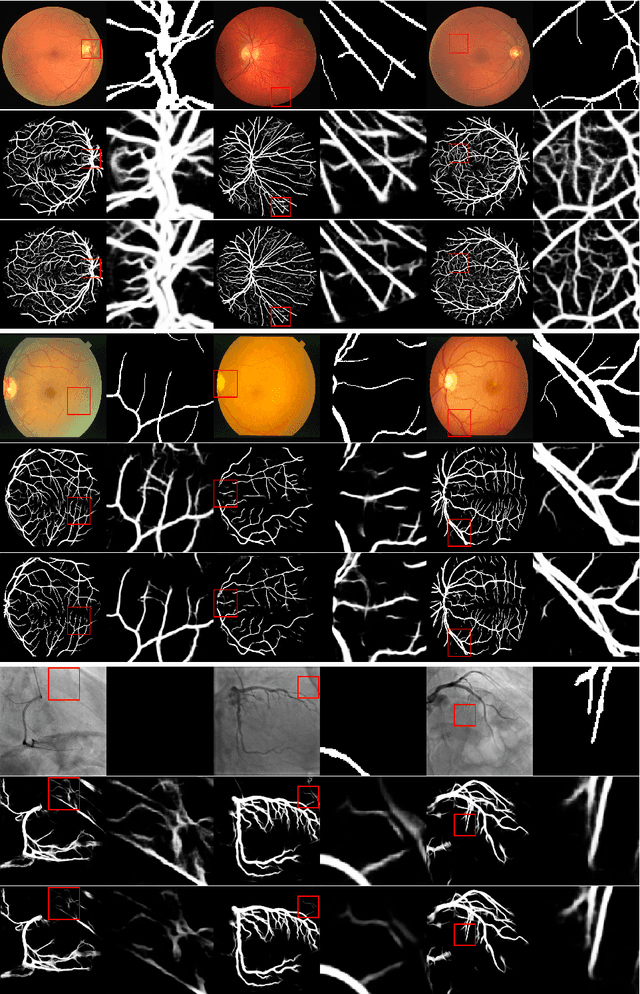

Deep Vessel Segmentation By Learning Graphical Connectivity

Jun 06, 2018

Abstract:We propose a novel deep-learning-based system for vessel segmentation. Existing methods using CNNs have mostly relied on local appearances learned on the regular image grid, without considering the graphical structure of vessel shape. To address this, we incorporate a graph convolutional network into a unified CNN architecture, where the final segmentation is inferred by combining the different types of features. The proposed method can be applied to expand any type of CNN-based vessel segmentation method to enhance the performance. Experiments show that the proposed method outperforms the current state-of-the-art methods on two retinal image datasets as well as a coronary artery X-ray angiography dataset.

Joint Weakly and Semi-Supervised Deep Learning for Localization and Classification of Masses in Breast Ultrasound Images

Oct 10, 2017

Abstract:We propose a framework for localization and classification of masses in breast ultrasound (BUS) images. In particular, we simultaneously use a weakly annotated dataset and a relatively small strongly annotated dataset to train a convolutional neural network detector. We have experimentally found that mass detectors trained with small, strongly annotated datasets are easily overfitted, whereas those trained with large, weakly annotated datasets present a non-trivial problem. To overcome these problems, we jointly use datasets with different characteristics in a hybrid manner. Consequently, a sophisticated weakly and semi-supervised training scenario is introduced with appropriate training loss selection. Experimental results show that the proposed method successfully localizes and classifies masses while requiring less effort in annotation work. The influences of each component in the proposed framework are also validated by conducting an ablative analysis. Although the proposed method is intended for masses in BUS images, it can also be applied as a general framework to train computer-aided detection and diagnosis systems for a wide variety of image modalities, target organs, and diseases.
