Shiqi Wei

Virtual Traffic Police: Large Language Model-Augmented Traffic Signal Control for Unforeseen Incidents

Jan 22, 2026

Abstract:Adaptive traffic signal control (TSC) has demonstrated strong effectiveness in managing dynamic traffic flows. However, conventional methods often struggle when unforeseen traffic incidents occur (e.g., accidents and road maintenance), which typically require labor-intensive and inefficient manual interventions by traffic police officers. Large Language Models (LLMs) appear to be a promising solution thanks to their remarkable reasoning and generalization capabilities. Nevertheless, existing works often propose to replace existing TSC systems with LLM-based systems, which can be (i) unreliable due to the inherent hallucinations of LLMs and (ii) costly due to the need for system replacement. To address the issues of existing works, we propose a hierarchical framework that augments existing TSC systems with LLMs, whereby a virtual traffic police agent at the upper level dynamically fine-tunes selected parameters of signal controllers at the lower level in response to real-time traffic incidents. To enhance domain-specific reliability in response to unforeseen traffic incidents, we devise a self-refined traffic language retrieval system (TLRS), whereby retrieval-augmented generation is employed to draw knowledge from a tailored traffic language database that encompasses traffic conditions and controller operation principles. Moreover, we devise an LLM-based verifier to update the TLRS continuously over the reasoning process. Our results show that LLMs can serve as trustworthy virtual traffic police officers that can adapt conventional TSC methods to unforeseen traffic incidents with significantly improved operational efficiency and reliability.
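
The retrieval step that the abstract describes can be pictured with a toy example. The sketch below is not the paper's TLRS: the database entries, the bag-of-words cosine scoring, and the names `retrieve` and `build_prompt` are all assumptions made for illustration, and a real system would likely use learned embeddings and an actual LLM call.

```python
import math
import re
from collections import Counter

# Hypothetical entries standing in for a "traffic language database".
DATABASE = [
    "Accident blocking one lane: extend green time on the affected approach.",
    "Road maintenance closure: reduce green time for the closed approach.",
    "Normal conditions: keep the controller's default parameters.",
]


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two token-count vectors (Counters)."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, database, k=2):
    """Return up to k database entries most similar to the query."""
    q = Counter(tokenize(query))
    scored = [(cosine(q, Counter(tokenize(doc))), doc) for doc in database]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in scored[:k] if score > 0]


def build_prompt(incident, database, k=2):
    """Assemble a prompt that places retrieved knowledge before the incident."""
    lines = ["Relevant knowledge:"]
    lines += [f"- {entry}" for entry in retrieve(incident, database, k)]
    lines.append(f"Incident report: {incident}")
    lines.append("Suggest parameter adjustments for the lower-level controller.")
    return "\n".join(lines)


if __name__ == "__main__":
    print(build_prompt("accident on the northbound lane", DATABASE, k=1))
```

The point of the design is that the language model only sees knowledge pulled from a curated store, which is one plausible way to limit hallucinated advice.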

KNSE: A Knowledge-aware Natural Language Inference Framework for Dialogue Symptom Status Recognition

May 26, 2023

Abstract:Symptom diagnosis in medical conversations aims to correctly extract both symptom entities and their status from the doctor-patient dialogue. In this paper, we propose a novel framework called KNSE for symptom status recognition (SSR), where SSR is formulated as a natural language inference (NLI) task. For each symptom mentioned in a dialogue window, we first generate knowledge about the symptom and a hypothesis about the status of the symptom, to form a (premise, knowledge, hypothesis) triplet. The BERT model is then used to encode the triplet, which is further processed by modules including utterance aggregation, self-attention, cross-attention, and GRU to predict the symptom status. Benefiting from the NLI formalization, the proposed framework can encode more informative prior knowledge to better localize and track symptom status, which can effectively improve the performance of symptom status recognition. Preliminary experiments on Chinese medical dialogue datasets show that KNSE outperforms previous competitive baselines and has advantages in cross-disease and cross-symptom scenarios.
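
To make the (premise, knowledge, hypothesis) triplet concrete, here is a minimal sketch of how such triplets might be assembled before encoding. The status labels, the hypothesis templates, the knowledge lookup, and the name `build_triplets` are assumptions for illustration only; the abstract does not specify how KNSE generates knowledge or hypotheses.

```python
from typing import Dict, List, NamedTuple


class Triplet(NamedTuple):
    premise: str      # the dialogue window, joined into one string
    knowledge: str    # background text about the symptom
    hypothesis: str   # a statement asserting one candidate status


# Hypothetical status labels and hypothesis templates.
STATUS_TEMPLATES = {
    "positive": "The patient has {symptom}.",
    "negative": "The patient does not have {symptom}.",
    "unknown": "It is unclear whether the patient has {symptom}.",
}


def build_triplets(
    window: List[str], symptom: str, knowledge_base: Dict[str, str]
) -> Dict[str, Triplet]:
    """Build one triplet per candidate status for a mentioned symptom."""
    premise = " ".join(window)
    # Fall back to an empty string when no knowledge is available.
    knowledge = knowledge_base.get(symptom, "")
    return {
        status: Triplet(premise, knowledge, template.format(symptom=symptom))
        for status, template in STATUS_TEMPLATES.items()
    }


if __name__ == "__main__":
    window = ["Doctor: Any cough?", "Patient: Yes, for three days."]
    kb = {"cough": "Cough is a reflex that clears the airways."}
    for status, triplet in build_triplets(window, "cough", kb).items():
        print(status, "->", triplet.hypothesis)
```

Under this framing, an NLI model would score each triplet, and the status whose hypothesis is most strongly entailed would be taken as the prediction.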

Learning long-term music representations via hierarchical contextual constraints

Feb 13, 2022

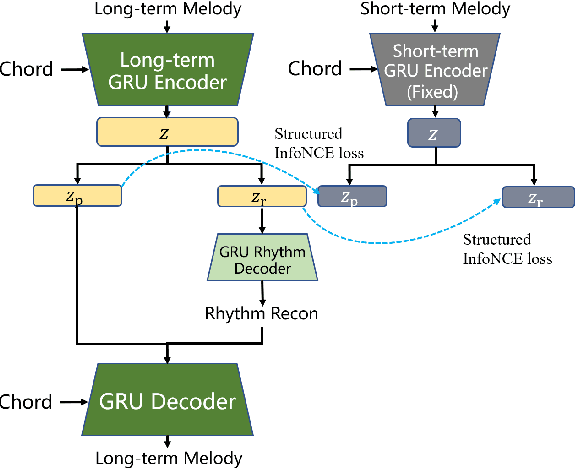

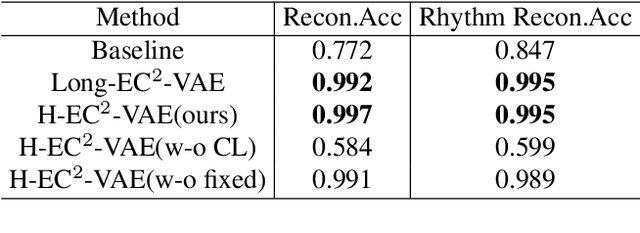

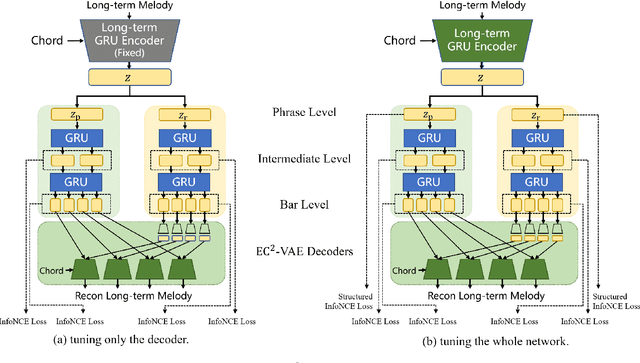

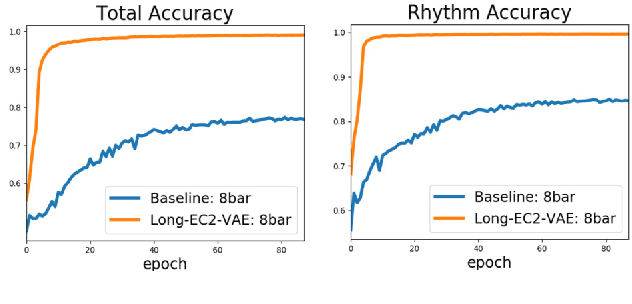

Abstract:Learning symbolic music representations, especially disentangled representations with probabilistic interpretations, has been shown to benefit both music understanding and generation. However, most models are only applicable to short-term music, while learning long-term music representations remains a challenging task. We have seen several studies attempting to learn hierarchical representations directly in an end-to-end manner, but these models have not been able to achieve the desired results and the training process is not stable. In this paper, we propose a novel approach to learn long-term symbolic music representations through contextual constraints. First, we use contrastive learning to pre-train a long-term representation by constraining its difference from the short-term representation (extracted by an off-the-shelf model). Then, we fine-tune the long-term representation by a hierarchical prediction model such that a good long-term representation (e.g., an 8-bar representation) can reconstruct the corresponding short-term ones (e.g., the 2-bar representations within the 8-bar range). Experiments show that our method stabilizes the training and the fine-tuning steps. In addition, the designed contextual constraints benefit both reconstruction and disentanglement, significantly outperforming the baselines.
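
The contrastive pre-training idea can be illustrated with a small loss function. The sketch below uses an InfoNCE-style loss, which is an assumption: the abstract does not name the exact objective. The vectors, the temperature value, and the name `info_nce` are likewise illustrative. The anchor plays the role of a long-term representation, the positive a short-term representation from the same passage, and the negatives short-term representations from other passages.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def info_nce(anchor, positive, negatives, temperature=0.1):
    """InfoNCE-style loss: low when the anchor is closest to the positive."""
    logits = [cosine(anchor, positive) / temperature]
    logits += [cosine(anchor, n) / temperature for n in negatives]
    # Log-sum-exp with the maximum subtracted for numerical stability.
    peak = max(logits)
    log_denominator = peak + math.log(sum(math.exp(x - peak) for x in logits))
    return log_denominator - logits[0]


if __name__ == "__main__":
    long_term = [1.0, 0.0]
    matching_short_term = [0.9, 0.1]
    other_short_terms = [[0.0, 1.0], [-1.0, 0.0]]
    print(info_nce(long_term, matching_short_term, other_short_terms))
```

Minimizing a loss of this shape pulls the long-term representation toward its own short-term counterparts and away from those of unrelated passages.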
