Mid-attribute speaker generation using optimal-transport-based interpolation of Gaussian mixture models

Oct 18, 2022 Aya Watanabe, Shinnosuke Takamichi, Yuki Saito, Detai Xin, Hiroshi Saruwatari

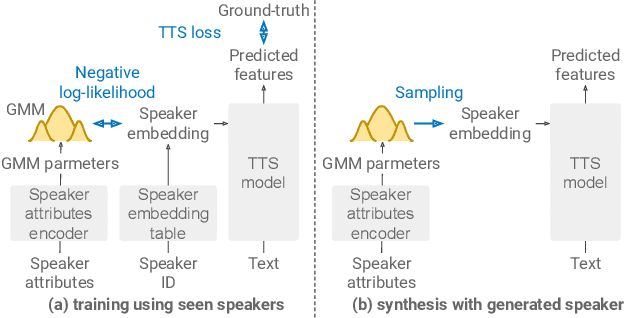

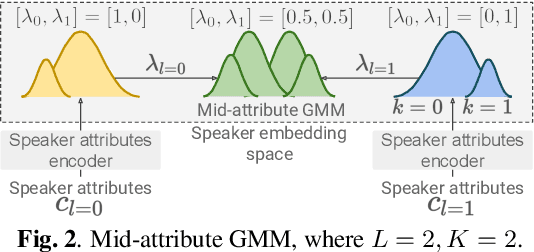

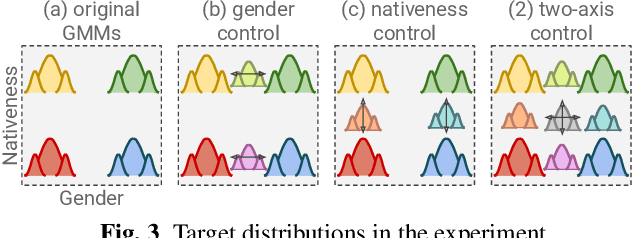

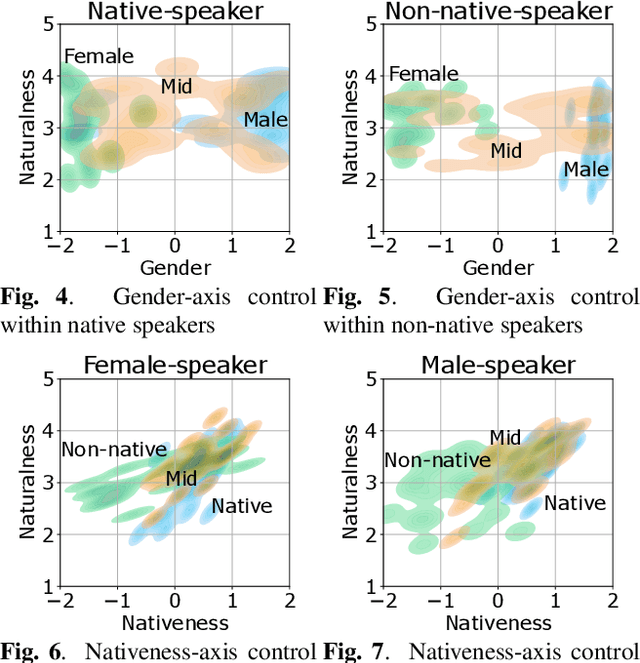

In this paper, we propose a method for intermediating multiple speakers' attributes and diversifying their voice characteristics in "speaker generation," an emerging task that aims to synthesize a nonexistent speaker's natural-sounding voice. The conventional TacoSpawn-based speaker generation method represents the distributions of speaker embeddings by Gaussian mixture models (GMMs) conditioned on speaker attributes. Although this method enables the sampling of various speakers from the speaker-attribute-aware GMMs, it is not yet clear whether the learned distributions can represent speakers with an intermediate attribute (i.e., mid-attribute). To this end, we propose an optimal-transport-based method that interpolates the learned GMMs to generate nonexistent speakers with mid-attribute (e.g., gender-neutral) voices. We empirically validate our method and evaluate the naturalness of synthetic speech and the controllability of two speaker attributes: gender and language fluency. The evaluation results show that our method can control the generated speakers' attributes by a continuous scalar value without statistically significant degradation of speech naturalness.
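As a rough illustration of the interpolation idea, the sketch below moves along the 2-Wasserstein geodesic between two single Gaussians with diagonal covariance, where both the mean and the standard deviation interpolate linearly in the scalar `t`. This is not the paper's implementation: interpolating full GMMs additionally requires a transport plan that couples mixture components, which is not shown here, and the function names and toy values are assumptions chosen for the example.

```python
import math


def interpolate_diag_gaussian(mean0, std0, mean1, std1, t):
    """Return (mean, std) of the Gaussian at position t in [0, 1] on the
    2-Wasserstein geodesic between two diagonal Gaussians.

    For diagonal covariances the geodesic is linear in both the mean and
    the per-dimension standard deviation.
    """
    if not 0.0 <= t <= 1.0:
        raise ValueError("t must lie in [0, 1]")
    mean_t = [(1.0 - t) * a + t * b for a, b in zip(mean0, mean1)]
    std_t = [(1.0 - t) * a + t * b for a, b in zip(std0, std1)]
    return mean_t, std_t


def w2_distance_diag(mean0, std0, mean1, std1):
    """2-Wasserstein distance between two diagonal Gaussians."""
    mean_term = sum((a - b) ** 2 for a, b in zip(mean0, mean1))
    std_term = sum((a - b) ** 2 for a, b in zip(std0, std1))
    return math.sqrt(mean_term + std_term)


# Hypothetical 2-D "attribute A" and "attribute B" embedding distributions.
mean_a, std_a = [0.0, 0.0], [1.0, 1.0]
mean_b, std_b = [2.0, 4.0], [3.0, 1.0]
mean_mid, std_mid = interpolate_diag_gaussian(mean_a, std_a, mean_b, std_b, 0.5)
```

With `t = 0.5` the resulting Gaussian lies halfway between the two endpoints in Wasserstein distance, which is the sense in which a single scalar can steer an attribute continuously.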

Spontaneous speech synthesis with linguistic-speech consistency training using pseudo-filled pauses

Oct 18, 2022 Yuta Matsunaga, Takaaki Saeki, Shinnosuke Takamichi, Hiroshi Saruwatari

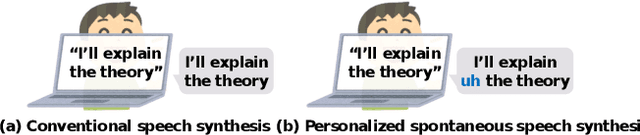

We propose a training method for spontaneous speech synthesis models that guarantees the consistency of linguistic parts of synthesized speech. Personalized spontaneous speech synthesis aims to reproduce the individuality of disfluency, such as filled pauses. Our prior model includes a filled-pause prediction model and synthesizes speech with filled pauses from text without filled pauses. However, inserting the filled pauses degrades the quality of the linguistic parts of the synthesized speech. This might be because filled-pause insertion tendencies differ between training and inference, and the synthesis model cannot represent connections between filled pauses and surrounding phonemes in inference. We therefore developed linguistic-speech consistency training, which guarantees the consistency of linguistic parts of synthetic speech with and without filled pauses. The proposed consistency training utilizes not only ground-truth filled pauses but also pseudo ones. Our experiments demonstrate that this method improves the naturalness of both the linguistic parts of the synthetic speech and the entire synthetic speech with predicted filled pauses.

Visual onoma-to-wave: environmental sound synthesis from visual onomatopoeias and sound-source images

Oct 17, 2022 Hien Ohnaka, Shinnosuke Takamichi, Keisuke Imoto, Yuki Okamoto, Kazuki Fujii, Hiroshi Saruwatari

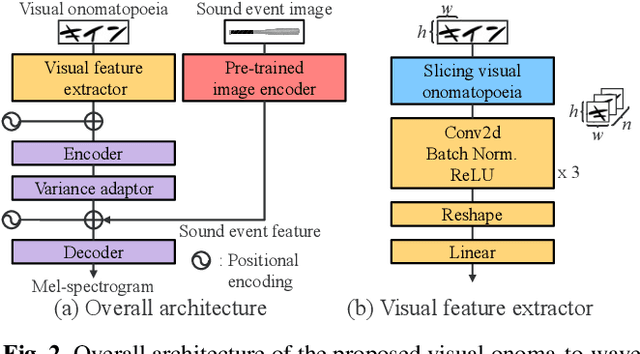

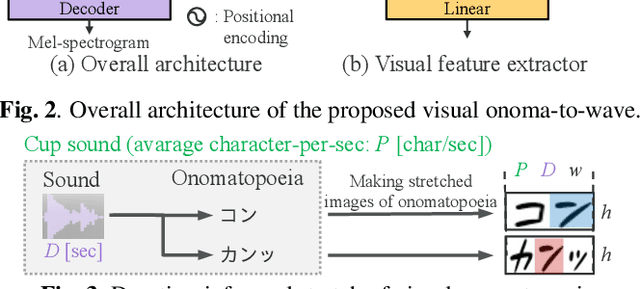

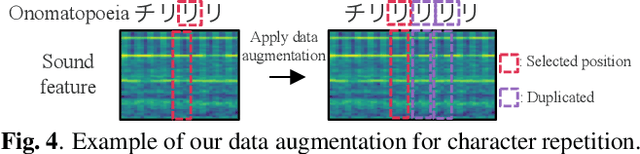

We propose a method for synthesizing environmental sounds from visually represented onomatopoeias and sound sources. An onomatopoeia is a word that imitates a sound structure, i.e., the text representation of sound. From this perspective, onoma-to-wave has been proposed to synthesize environmental sounds from the desired onomatopoeia texts. Onomatopoeias have another representation: visual-text representations of sounds in comics, advertisements, and virtual reality. A visual onomatopoeia (visual text of onomatopoeia) contains rich information that is not present in the text, such as a long-short duration of the image, so the use of this representation is expected to synthesize diverse sounds. Therefore, we propose visual onoma-to-wave for environmental sound synthesis from visual onomatopoeia. The method can transfer visual concepts of the visual text and sound-source image to the synthesized sound. We also propose a data augmentation method focusing on the repetition of onomatopoeias to enhance the performance of our method. An experimental evaluation shows that the methods can synthesize diverse environmental sounds from visual text and sound-source images.

Empirical Study Incorporating Linguistic Knowledge on Filled Pauses for Personalized Spontaneous Speech Synthesis

Oct 14, 2022 Yuta Matsunaga, Takaaki Saeki, Shinnosuke Takamichi, Hiroshi Saruwatari

We present a comprehensive empirical study for personalized spontaneous speech synthesis on the basis of linguistic knowledge. With the advent of voice cloning for reading-style speech synthesis, a new voice cloning paradigm for human-like and spontaneous speech synthesis is required. We, therefore, focus on personalized spontaneous speech synthesis that can clone both the individual's voice timbre and speech disfluency. Specifically, we deal with filled pauses, a major source of speech disfluency, which is known to play an important role in speech generation and communication in psychology and linguistics. To comparatively evaluate personalized filled pause insertion and non-personalized filled pause prediction methods, we developed a speech synthesis method with a non-personalized external filled pause predictor trained with a multi-speaker corpus. The results clarify the position-word entanglement of filled pauses, i.e., the necessity of precisely predicting positions for naturalness and the necessity of precisely predicting words for individuality on the evaluation of synthesized speech.

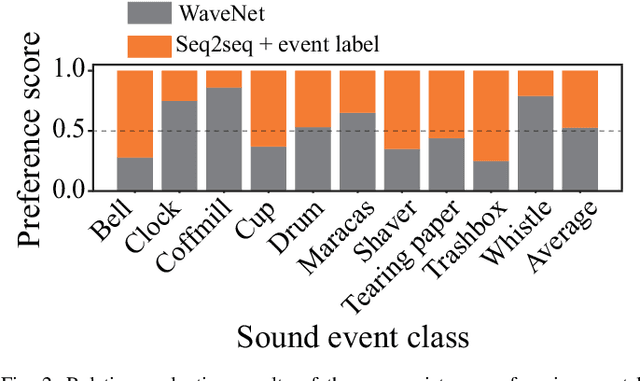

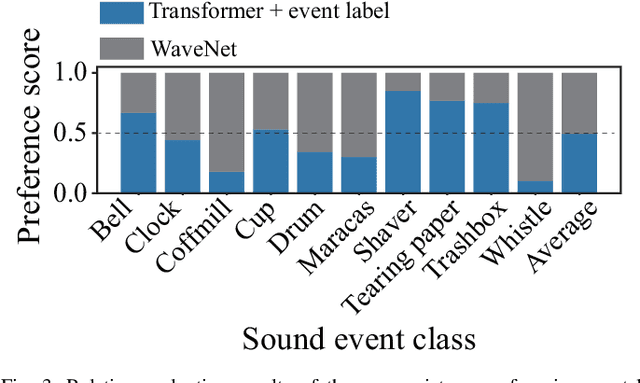

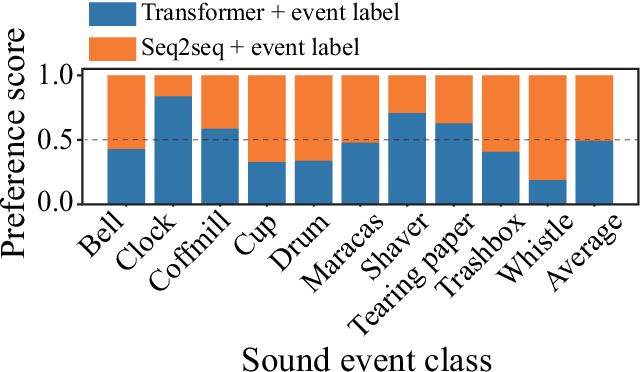

How Should We Evaluate Synthesized Environmental Sounds

Aug 16, 2022 Yuki Okamoto, Keisuke Imoto, Shinnosuke Takamichi, Takahiro Fukumori, Yoichi Yamashita

Although several methods of environmental sound synthesis have been proposed, there has been no discussion on how synthesized environmental sounds should be evaluated. Conventional studies have conducted either subjective or objective evaluations alone, and it is not clear what type of evaluation should be carried out. In this paper, we investigate how to evaluate synthesized environmental sounds. We also propose a subjective evaluation methodology to evaluate whether the synthesized sound appropriately represents the information input to the environmental sound synthesis system. In our experiments, we compare the proposed and conventional evaluation methods and show that the results of subjective evaluations tended to differ from those of objective evaluations. From these results, we conclude that it is necessary to conduct not only objective evaluation but also subjective evaluation.

Exploring the Effectiveness of Self-supervised Learning and Classifier Chains in Emotion Recognition of Nonverbal Vocalizations

Jun 21, 2022 Detai Xin, Shinnosuke Takamichi, Hiroshi Saruwatari

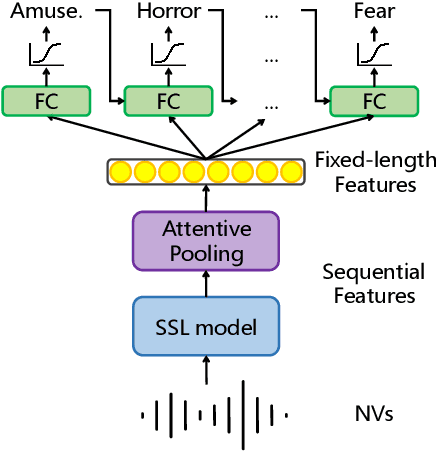

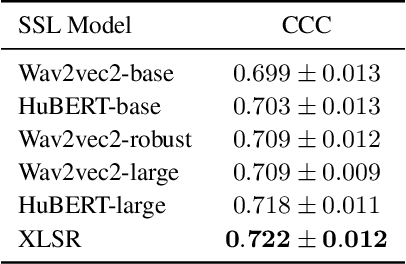

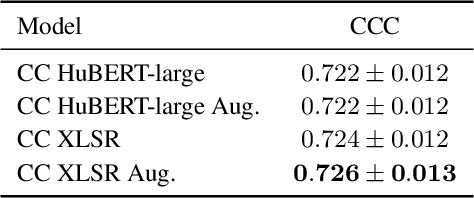

We present an emotion recognition system for nonverbal vocalizations (NVs) submitted to the ExVo Few-Shot track of the ICML Expressive Vocalizations Competition 2022. The proposed method uses self-supervised learning (SSL) models to extract features from NVs and uses a classifier chain to model the label dependency between emotions. Experimental results demonstrate that the proposed method can significantly improve the performance of this task compared to several baseline methods. Our proposed method obtained a mean concordance correlation coefficient (CCC) of 0.725 in the validation set and 0.739 in the test set, while the best baseline method only obtained 0.554 in the validation set. We publish our code at https://github.com/Aria-K-Alethia/ExVo to help others reproduce our experimental results.
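For readers unfamiliar with the metric, the following is a minimal sketch of the concordance correlation coefficient in its standard form, 2·cov(x, y) / (var(x) + var(y) + (mean(x) − mean(y))²), using population statistics. It is not taken from the authors' repository; the function name and the toy inputs are assumptions for illustration.

```python
def concordance_cc(pred, target):
    """Concordance correlation coefficient between two equal-length
    sequences, computed with population (divide-by-n) statistics."""
    n = len(pred)
    if n == 0 or n != len(target):
        raise ValueError("inputs must be non-empty and of equal length")
    mean_p = sum(pred) / n
    mean_t = sum(target) / n
    var_p = sum((p - mean_p) ** 2 for p in pred) / n
    var_t = sum((q - mean_t) ** 2 for q in target) / n
    cov = sum((p - mean_p) * (q - mean_t) for p, q in zip(pred, target)) / n
    denom = var_p + var_t + (mean_p - mean_t) ** 2
    if denom == 0.0:
        raise ValueError("CCC is undefined for two identical constant sequences")
    return 2.0 * cov / denom


# Toy example: predictions are perfectly correlated with the targets
# but shifted by a constant offset, which CCC penalizes.
ccc_shifted = concordance_cc([1.0, 2.0, 3.0], [2.0, 3.0, 4.0])
```

Unlike Pearson correlation, CCC drops below 1 when predictions are offset or rescaled relative to the targets, so it rewards agreement in value and not only in trend.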

Acoustic Modeling for End-to-End Empathetic Dialogue Speech Synthesis Using Linguistic and Prosodic Contexts of Dialogue History

Jun 16, 2022 Yuto Nishimura, Yuki Saito, Shinnosuke Takamichi, Kentaro Tachibana, Hiroshi Saruwatari

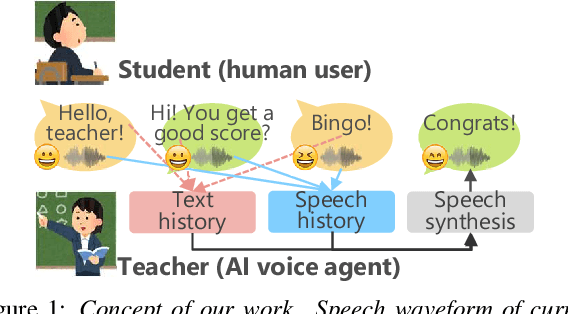

We propose an end-to-end empathetic dialogue speech synthesis (DSS) model that considers both the linguistic and prosodic contexts of dialogue history. Empathy is the active attempt by humans to get inside the interlocutor in dialogue, and empathetic DSS is a technology to implement this act in spoken dialogue systems. Our model is conditioned by the history of linguistic and prosody features for predicting appropriate dialogue context. As such, it can be regarded as an extension of the conventional linguistic-feature-based dialogue history modeling. To train the empathetic DSS model effectively, we investigate 1) a self-supervised learning model pretrained with large speech corpora, 2) a style-guided training using a prosody embedding of the current utterance to be predicted by the dialogue context embedding, 3) a cross-modal attention to combine text and speech modalities, and 4) a sentence-wise embedding to achieve fine-grained prosody modeling rather than utterance-wise modeling. The evaluation results demonstrate that 1) simply considering prosodic contexts of the dialogue history does not improve the quality of speech in empathetic DSS and 2) introducing style-guided training and sentence-wise embedding modeling achieves higher speech quality than that by the conventional method.

Speaking-Rate-Controllable HiFi-GAN Using Feature Interpolation

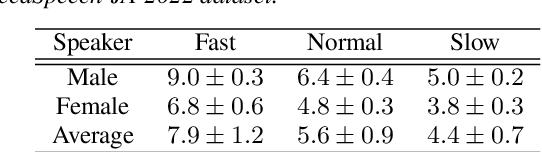

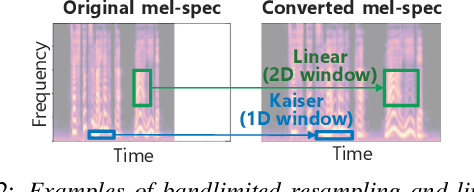

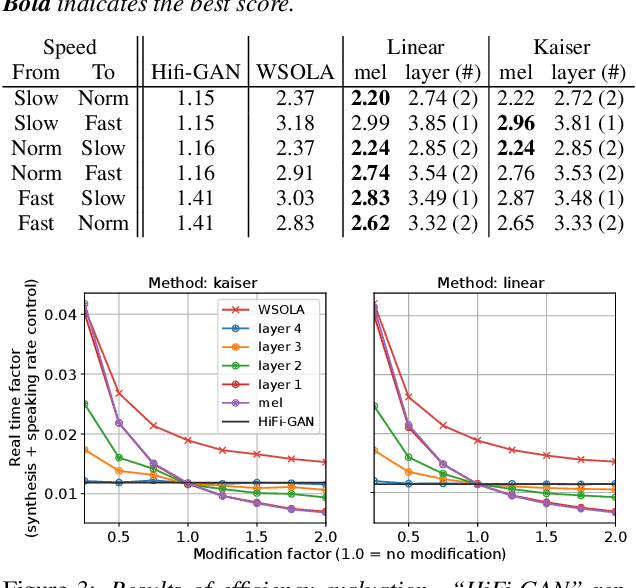

Apr 22, 2022 Detai Xin, Shinnosuke Takamichi, Takuma Okamoto, Hisashi Kawai, Hiroshi Saruwatari

This paper presents a speaking-rate-controllable HiFi-GAN neural vocoder. The original HiFi-GAN is a high-fidelity, computationally efficient, and tiny-footprint neural vocoder. We attempt to incorporate a speaking rate control function into HiFi-GAN to improve the accessibility of synthetic speech. The proposed method inserts a differentiable interpolation layer into the HiFi-GAN architecture. A signal resampling method and an image scaling method are implemented in the proposed method to warp the mel-spectrograms or hidden features of the neural vocoder. We also design and open-source a Japanese speech corpus containing three kinds of speaking rates to evaluate the proposed speaking rate control method. Experimental results of comprehensive objective and subjective evaluations demonstrate that 1) the proposed method outperforms a baseline time-scale modification algorithm in speech naturalness, 2) warping mel-spectrograms by image scaling obtained the best performance among all proposed methods, and 3) the proposed speaking rate control method can be incorporated into HiFi-GAN without losing computational efficiency.
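To make the warping idea concrete, here is a minimal sketch that stretches or compresses a frame sequence along the time axis by plain linear interpolation. It is only an illustration under stated assumptions: the paper's layer sits inside the vocoder and uses signal resampling or image scaling, whereas this pure-Python version, the name `warp_frames`, and the convention that `rate > 1` means faster speech are all hypothetical.

```python
def warp_frames(frames, rate):
    """Resample a [T][D] frame sequence to round(T / rate) frames using
    linear interpolation along time, with the first and last frames kept
    aligned. rate > 1 shortens the sequence; rate < 1 lengthens it.
    """
    if rate <= 0:
        raise ValueError("rate must be positive")
    if not frames:
        raise ValueError("frames must be non-empty")
    t_in = len(frames)
    t_out = max(1, round(t_in / rate))
    if t_in == 1 or t_out == 1:
        # Nothing to interpolate between: repeat (or keep) the first frame.
        return [list(frames[0]) for _ in range(t_out)]
    scale = (t_in - 1) / (t_out - 1)
    warped = []
    for i in range(t_out):
        pos = i * scale                 # fractional position in the input
        lo = min(int(pos), t_in - 2)    # left neighbour index
        w = pos - lo                    # weight of the right neighbour
        warped.append(
            [(1.0 - w) * a + w * b for a, b in zip(frames[lo], frames[lo + 1])]
        )
    return warped


# Toy one-dimensional "mel-spectrogram" with four frames.
mel = [[0.0], [1.0], [2.0], [3.0]]
slower = warp_frames(mel, 0.5)   # twice as many frames
faster = warp_frames(mel, 2.0)   # half as many frames
```

Because the output is a weighted sum of neighbouring input frames, the same operation is differentiable when expressed in an autodiff framework, which is what allows such a layer to be placed inside a neural vocoder.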

UTMOS: UTokyo-SaruLab System for VoiceMOS Challenge 2022

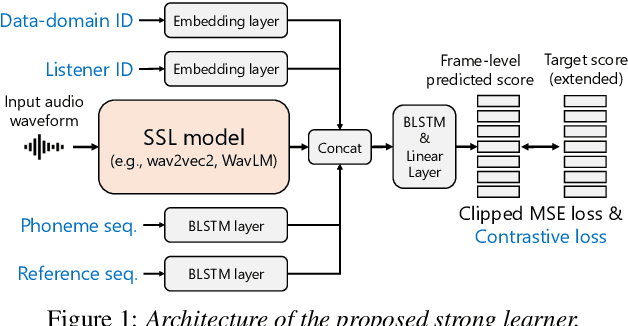

Apr 05, 2022 Takaaki Saeki, Detai Xin, Wataru Nakata, Tomoki Koriyama, Shinnosuke Takamichi, Hiroshi Saruwatari

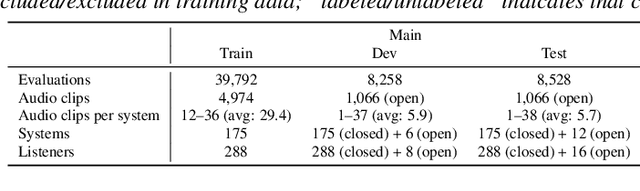

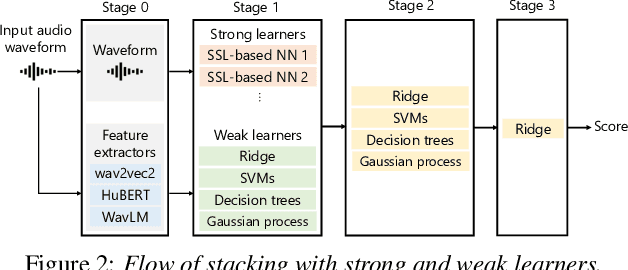

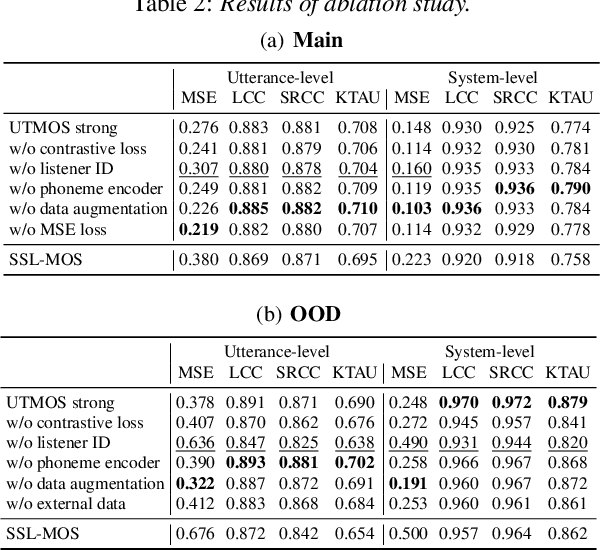

We present the UTokyo-SaruLab mean opinion score (MOS) prediction system submitted to VoiceMOS Challenge 2022. The challenge is to predict the MOS values of speech samples collected from previous Blizzard Challenges and Voice Conversion Challenges for two tracks: a main track for in-domain prediction and an out-of-domain (OOD) track for which there is less labeled data from different listening tests. Our system is based on ensemble learning of strong and weak learners. Strong learners incorporate several improvements to the previous fine-tuning models of self-supervised learning (SSL) models, while weak learners use basic machine-learning methods to predict scores from SSL features. In the Challenge, our system had the highest score on several metrics for both the main and OOD tracks. In addition, we conducted ablation studies to investigate the effectiveness of our proposed methods.

STUDIES: Corpus of Japanese Empathetic Dialogue Speech Towards Friendly Voice Agent

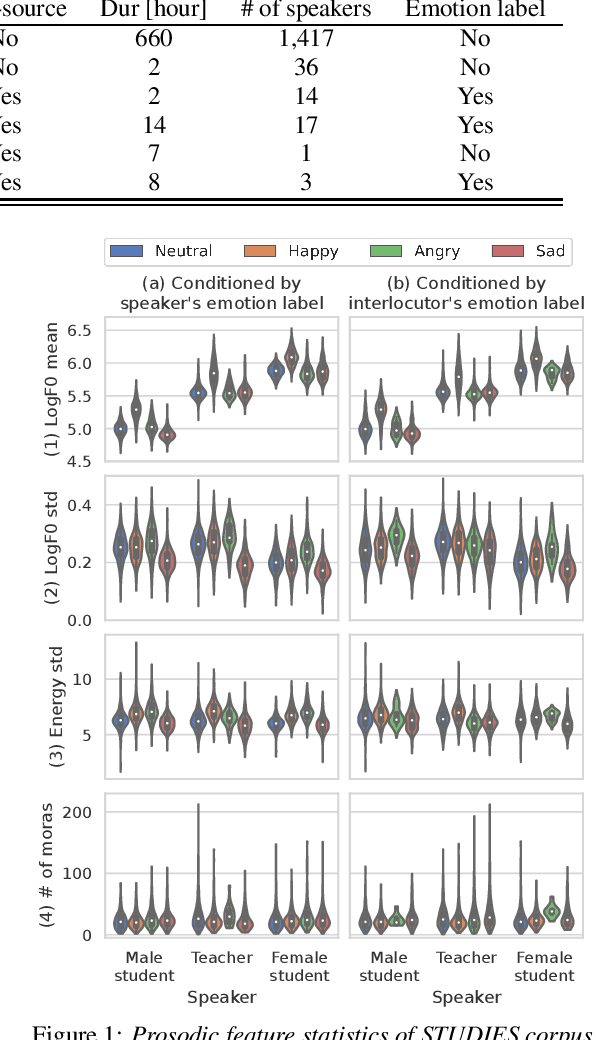

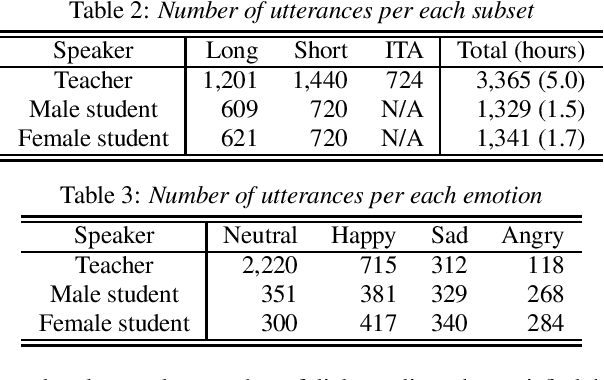

Mar 28, 2022 Yuki Saito, Yuto Nishimura, Shinnosuke Takamichi, Kentaro Tachibana, Hiroshi Saruwatari

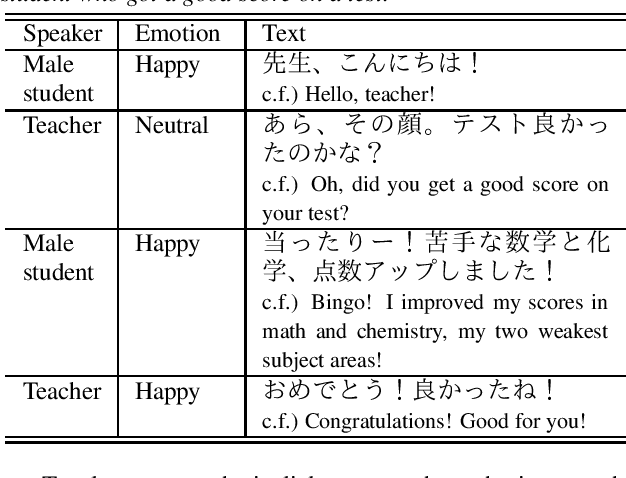

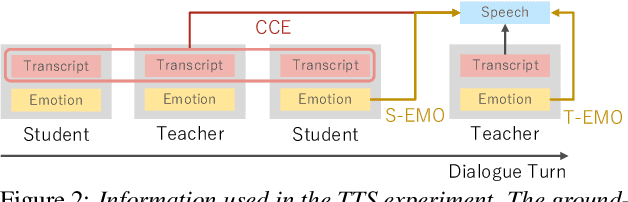

We present STUDIES, a new speech corpus for developing a voice agent that can speak in a friendly manner. Humans naturally control their speech prosody to empathize with each other. By incorporating this "empathetic dialogue" behavior into a spoken dialogue system, we can develop a voice agent that can respond to a user more naturally. We designed the STUDIES corpus to include a speaker who explicitly speaks with empathy for the interlocutor's emotion. We describe our methodology to construct an empathetic dialogue speech corpus and report the analysis results of the STUDIES corpus. We conducted a text-to-speech experiment as an initial investigation of how to develop a more natural voice agent that can tune its speaking style to the interlocutor's emotion. The results show that the use of the interlocutor's emotion label and a conversational context embedding can produce speech with the same degree of naturalness as that synthesized by using the agent's emotion label. Our project page of the STUDIES corpus is http://sython.org/Corpus/STUDIES.
