Sara Leary

Benchmarking Image Similarity Metrics for Novel View Synthesis Applications

Jun 14, 2025

Abstract: Traditional image similarity metrics are ineffective at evaluating the similarity between a real image of a scene and an artificially generated version of that viewpoint [6, 9, 13, 14]. Our research evaluates the effectiveness of a new, perceptual-based similarity metric, DreamSim [2], alongside three popular image similarity metrics, Structural Similarity (SSIM), Peak Signal-to-Noise Ratio (PSNR), and Learned Perceptual Image Patch Similarity (LPIPS) [18, 19], in novel view synthesis (NVS) applications. We create a corpus of artificially corrupted images to quantify the sensitivity and discriminative power of each image similarity metric. These tests reveal that traditional metrics are unable to effectively differentiate between images with minor pixel-level changes and those with substantial corruption, whereas DreamSim is more robust to minor defects and can effectively evaluate the high-level similarity of the image. Additionally, our results demonstrate that DreamSim provides a more effective and useful evaluation of render quality, especially for NVS renders in real-world use cases where slight rendering corruptions are common but do not affect image utility for human tasks.
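The abstract's claim about pixel-level metrics can be illustrated with a small, contrived sketch. It is not the authors' corpus or evaluation code: the striped toy image and the helper names `shift_right` and `occlude_left_half` are assumptions made purely for illustration. PSNR is computed from its standard definition, 10 · log10(MAX² / MSE), using only the Python standard library.

```python
import math

def mse(a, b):
    """Mean squared error between two equally sized grayscale images (lists of rows)."""
    total, count = 0.0, 0
    for row_a, row_b in zip(a, b):
        for pa, pb in zip(row_a, row_b):
            total += (pa - pb) ** 2
            count += 1
    return total / count

def psnr(a, b, max_value=255.0):
    """Peak Signal-to-Noise Ratio in dB; infinite when the images are identical."""
    err = mse(a, b)
    if err == 0:
        return math.inf
    return 10.0 * math.log10(max_value ** 2 / err)

def shift_right(img):
    """Hypothetical 'minor' corruption: shift each row one pixel right, repeating the left edge."""
    return [[row[0]] + row[:-1] for row in img]

def occlude_left_half(img, value=128):
    """Hypothetical 'substantial' corruption: overwrite the left half of every row with flat gray."""
    half = len(img[0]) // 2
    return [[value] * half + row[half:] for row in img]

# Toy 8x8 image of one-pixel-wide vertical stripes (0, 255, 0, 255, ...).
image = [[255 * (x % 2) for x in range(8)] for _ in range(8)]

shifted = shift_right(image)          # content preserved, moved by one pixel
occluded = occlude_left_half(image)   # half of the content destroyed

psnr_shifted = psnr(image, shifted)
psnr_occluded = psnr(image, occluded)

# On this toy input, the one-pixel shift scores worse (lower PSNR) than the occlusion.
print(f"shifted:  {psnr_shifted:.2f} dB")
print(f"occluded: {psnr_occluded:.2f} dB")
```

The striped image is deliberately adversarial for pixel-wise comparison, so the gap here is exaggerated relative to natural images. The sketch only shows the direction of the failure mode the abstract describes, where a misalignment that a human would barely notice is penalized more heavily than a loss of half the scene.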

APRICOT: A Dataset of Physical Adversarial Attacks on Object Detection

Dec 17, 2019

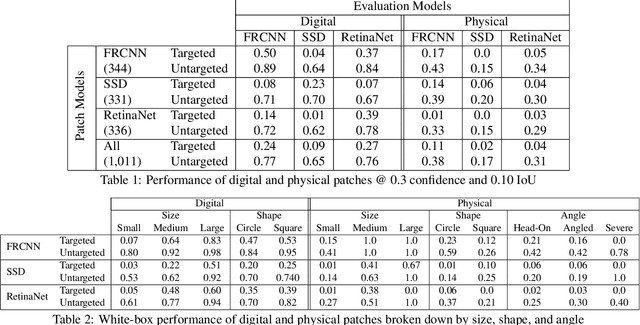

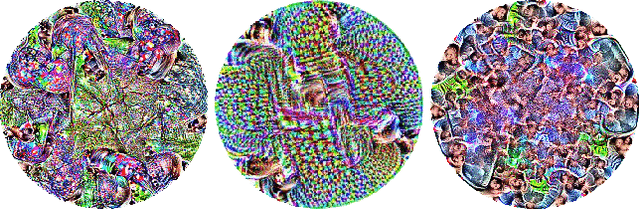

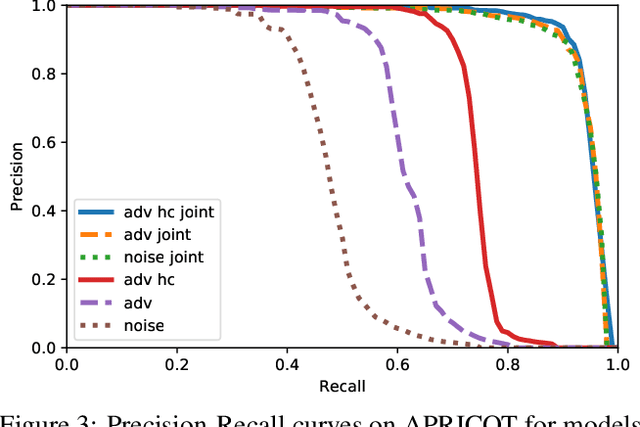

Abstract: Physical adversarial attacks threaten to fool object detection systems, but reproducible research on the real-world effectiveness of physical patches and how to defend against them requires a publicly available benchmark dataset. We present APRICOT, a collection of over 1,000 annotated photographs of printed adversarial patches in public locations. The patches target several object categories for three COCO-trained detection models, and the photos represent natural variation in position, distance, lighting conditions, and viewing angle. Our analysis suggests that maintaining adversarial robustness in uncontrolled settings is highly challenging, but it is still possible to produce targeted detections under white-box and sometimes black-box settings. We establish baselines for defending against adversarial patches through several methods, including a detector supervised with synthetic data and unsupervised methods such as kernel density estimation, Bayesian uncertainty, and reconstruction error. Our results suggest that adversarial patches can be effectively flagged, both in a high-knowledge, attack-specific scenario, and in an unsupervised setting where patches are detected as anomalies in natural images. This dataset and the described experiments provide a benchmark for future research on the effectiveness of and defenses against physical adversarial objects in the wild.
