Ruifeng Zhang

Zenith: Scaling up Ranking Models for Billion-scale Livestreaming Recommendation

Jan 29, 2026

Abstract: Accurately capturing feature interactions is essential in recommender systems, and recent trends show that scaling up model capacity could be a key driver for next-level predictive performance. While prior work has explored various model architectures to capture multi-granularity feature interactions, relatively little attention has been paid to efficient feature handling and to scaling model capacity without incurring excessive inference latency. In this paper, we address this gap by presenting Zenith, a scalable and efficient ranking architecture that learns complex feature interactions with minimal runtime overhead. Zenith is designed to handle a few high-dimensional Prime Tokens with Token Fusion and Token Boost modules, and it exhibits superior scaling laws compared to other state-of-the-art ranking methods, thanks to its improved token heterogeneity. Its real-world effectiveness is demonstrated by deploying the architecture to TikTok Live, a leading online livestreaming platform that attracts billions of users globally. Our A/B test shows that Zenith achieves +1.05% in online CTR AUC and -1.10% in Logloss, and realizes gains of +9.93% in Quality Watch Session / User and +8.11% in Quality Watch Duration / User.

An Incremental Phase Mapping Approach for X-ray Diffraction Patterns using Binary Peak Representations

Nov 08, 2022

Abstract: Despite the huge advancement in knowledge discovery and data mining techniques, the X-ray diffraction (XRD) analysis process has mostly remained untouched and still involves manual investigation, comparison, and verification. Due to the large volume of XRD samples from high-throughput XRD experiments, it has become impossible for domain scientists to process them manually. Recently, they have started leveraging standard clustering techniques to reduce the number of XRD pattern representations that require manual labeling and verification. Nevertheless, these standard clustering techniques do not handle problem-specific aspects such as peak shifting, adjacent peaks, background noise, and mixed phases, and hence produce incorrect composition-phase diagrams that complicate further steps. Here, we leverage data mining techniques along with domain expertise to handle these issues. In this paper, we introduce an incremental phase mapping approach based on binary peak representations using a new threshold-based fuzzy dissimilarity measure. The proposed approach first applies an incremental phase computation algorithm to discrete binary peak representations of XRD samples, followed by hierarchical clustering or manual merging of similar pure phases to obtain the final composition-phase diagram. We evaluate our method on the composition space of two ternary alloy systems: Co-Ni-Ta and Co-Ti-Ta. Our results are verified by domain scientists and closely resemble the manually computed ground-truth composition-phase diagrams. The proposed approach takes us closer to the goal of complete end-to-end automated XRD analysis.

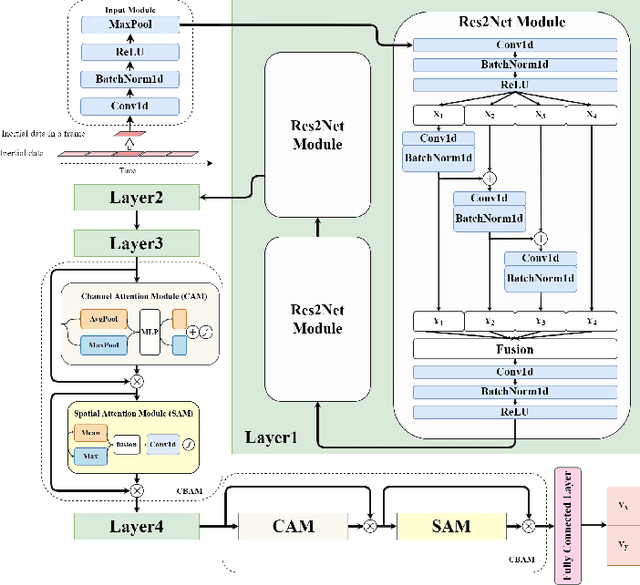

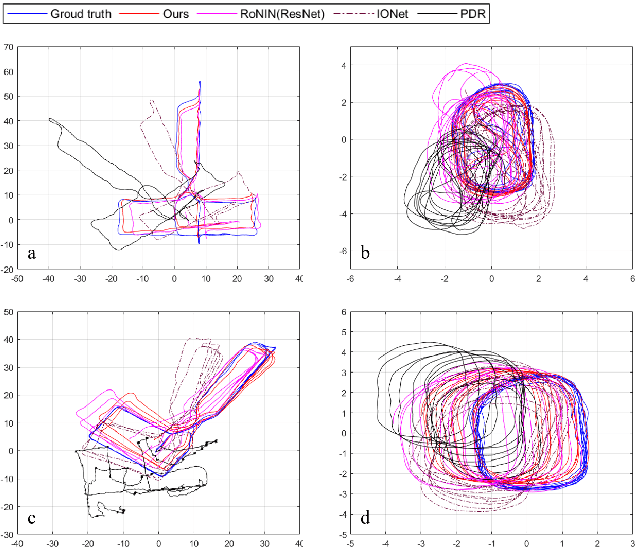

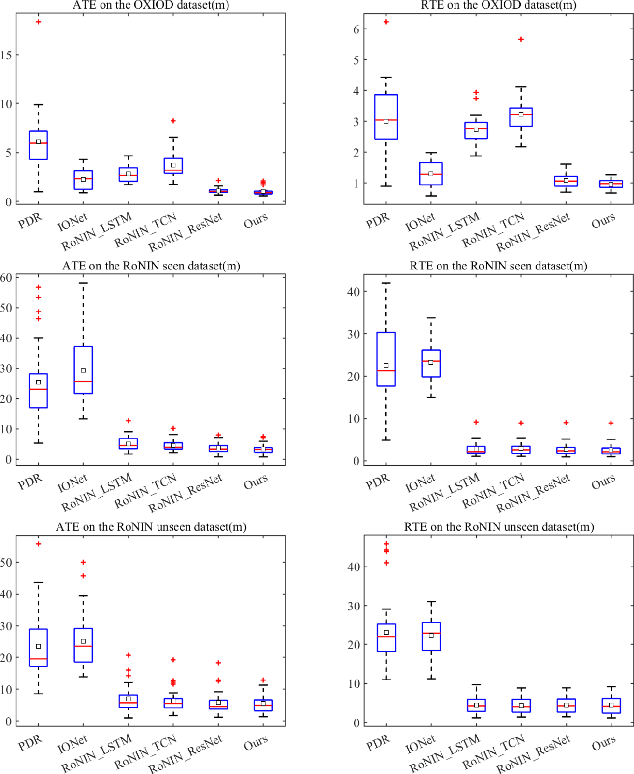

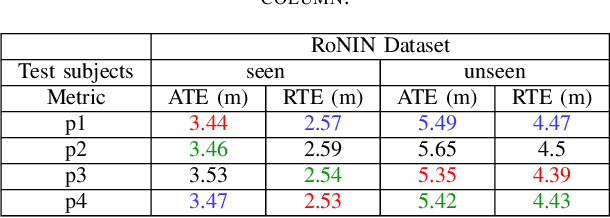

Deep Learning-based Inertial Odometry for Pedestrian Tracking using Attention Mechanism and Res2Net Module

May 20, 2022

Abstract: Pedestrian dead reckoning is a challenging task due to error accumulation in low-cost inertial sensors. Recent research has shown that deep learning methods can achieve impressive performance in handling this issue. In this letter, we propose inertial odometry using a deep learning-based velocity estimation method. A deep neural network based on Res2Net modules and two convolutional block attention modules is leveraged to restore the potential connection between the horizontal velocity vector and raw inertial data from a smartphone. Our network is trained using only fifty percent of the data in the public inertial odometry dataset (RoNIN). Then, it is validated on the RoNIN testing dataset and another public inertial odometry dataset (OXIOD). Compared with the traditional step-length and heading system-based algorithm, our approach decreases the absolute translation error (ATE) by 76%-86%. In addition, compared with the state-of-the-art deep learning method (RoNIN), our method improves the ATE by 6%-31.4%.
