Robert Porzel

Breathe with Me: Synchronizing Biosignals for User Embodiment in Robots

Dec 16, 2025

Abstract: Embodiment of users within robotic systems has been explored in human-robot interaction, most often in telepresence and teleoperation. In these applications, synchronized visuomotor feedback can evoke a sense of body ownership and agency, contributing to the experience of embodiment. We extend this work by employing embreathment, the representation of the user's own breath in real time, as a means for enhancing user embodiment experience in robots. In a within-subjects experiment, participants controlled a robotic arm, while its movements were either synchronized or non-synchronized with their own breath. Synchrony was shown to significantly increase body ownership, and was preferred by most participants. We propose the representation of physiological signals as a novel interoceptive pathway for human-robot interaction, and discuss implications for telepresence, prosthetics, collaboration with robots, and shared autonomy.

Can AI Explanations Make You Change Your Mind?

Aug 11, 2025

Abstract: In the context of AI-based decision support systems (DSS), explanations can help users to judge when to trust the AI's suggestion, and when to question it. In this way, human oversight can prevent AI errors and biased decision-making. However, this rests on the assumption that users will consider explanations in enough detail to be able to catch such errors. We conducted an online study on trust in explainable DSS, and were surprised to find that in many cases, participants spent little time on the explanation and did not always consider it in detail. We present an exploratory analysis of this data, investigating what factors impact how carefully study participants consider AI explanations, and how this in turn impacts whether they are open to changing their mind based on what the AI suggests.

An Ontological Model of User Preferences

Oct 29, 2023

Abstract: The notion of preferences plays an important role in many disciplines, including service robotics, which is concerned with scenarios in which robots interact with humans. These interactions can benefit from robots taking human preferences into account. This raises the issue of how preferences should be represented to support such preference-aware decision making. Several formal accounts of the notion of preferences exist. However, these approaches fall short of defining the nature and structure of the options that a robot has in a given situation. In this work, we thus investigate a formal model of preferences where options are non-atomic entities that are defined by the complex situations they bring about.

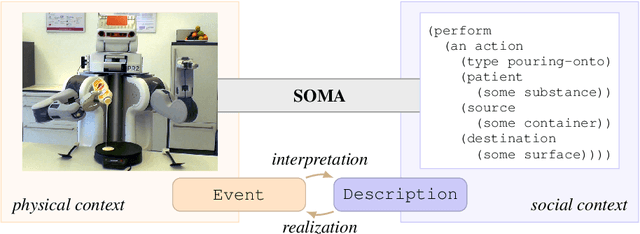

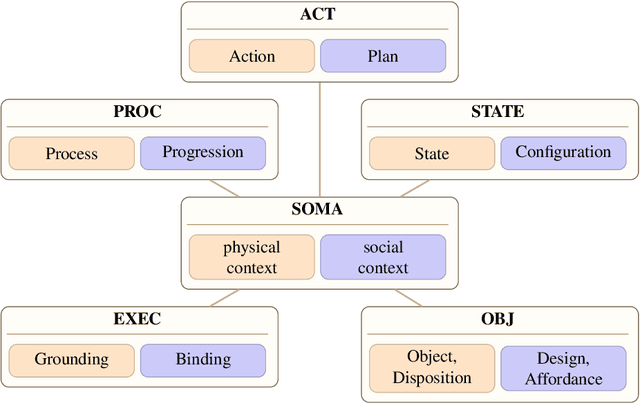

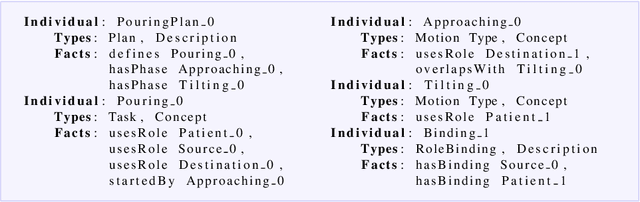

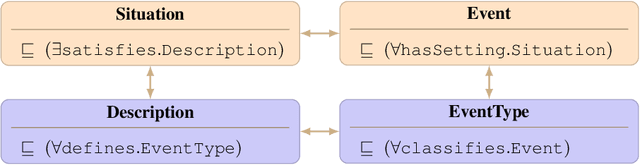

Foundations of the Socio-physical Model of Activities for Autonomous Robotic Agents

Nov 24, 2020

Abstract: In this paper, we present foundations of the Socio-physical Model of Activities (SOMA). SOMA represents both the physical and the social context of everyday activities. Such tasks seem trivial for humans; however, they pose severe problems for artificial agents. For starters, a natural language command requesting something will leave unspecified many pieces of information necessary for performing the task. Humans can solve such problems quickly because we reduce the search space by recourse to prior knowledge, such as a connected collection of plans that describe how certain goals can be achieved at various levels of abstraction. Rather than enumerating fine-grained physical contexts, SOMA sets out to include socially constructed knowledge about the functions of actions to achieve a variety of goals or the roles objects can play in a given situation. As the human cognition system is capable of generalizing experiences into abstract knowledge pieces applicable to novel situations, we argue that both physical and social context need to be modeled to tackle these challenges in a general manner. This is represented by the link between the physical and social context in SOMA, where relationships are established between occurrences and generalizations of them, which has been demonstrated in several use cases that validate SOMA.
