Riccardo De Maria

Redefining CX with Agentic AI: Minerva CQ Case Study

Sep 16, 2025

Abstract: Despite advances in AI for contact centers, customer experience (CX) continues to suffer from high average handling time (AHT), low first-call resolution, and poor customer satisfaction (CSAT). A key driver is the cognitive load on agents, who must navigate fragmented systems, troubleshoot manually, and frequently place customers on hold. Existing AI-powered agent-assist tools are often reactive, driven by static rules, simple prompting, or retrieval-augmented generation (RAG) without deeper contextual reasoning. We introduce Agentic AI: goal-driven, autonomous, tool-using systems that proactively support agents in real time. Unlike conventional approaches, Agentic AI identifies customer intent, triggers modular workflows, maintains evolving context, and adapts dynamically to conversation state. This paper presents a case study of Minerva CQ, a real-time Agent Assist product deployed in voice-based customer support. Minerva CQ integrates real-time transcription, intent and sentiment detection, entity recognition, contextual retrieval, dynamic customer profiling, and partial conversational summaries, enabling proactive workflows and continuous context-building. Deployed in live production, Minerva CQ acts as an AI co-pilot, delivering measurable improvements in agent efficiency and customer experience across multiple deployments.

CyberBOT: Towards Reliable Cybersecurity Education via Ontology-Grounded Retrieval Augmented Generation

Apr 01, 2025

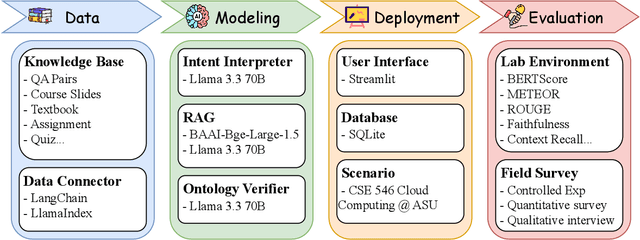

Abstract: Advancements in large language models (LLMs) have enabled the development of intelligent educational tools that support inquiry-based learning across technical domains. In cybersecurity education, where accuracy and safety are paramount, systems must go beyond surface-level relevance to provide information that is both trustworthy and domain-appropriate. To address this challenge, we introduce CyberBOT, a question-answering chatbot that leverages a retrieval-augmented generation (RAG) pipeline to incorporate contextual information from course-specific materials and validate responses using a domain-specific cybersecurity ontology. The ontology serves as a structured reasoning layer that constrains and verifies LLM-generated answers, reducing the risk of misleading or unsafe guidance. CyberBOT has been deployed in a large graduate-level course at Arizona State University (ASU), where more than one hundred students actively engage with the system through a dedicated web-based platform. Computational evaluations in lab environments highlight the potential capacity of CyberBOT, and a forthcoming field study will evaluate its pedagogical impact. By integrating structured domain reasoning with modern generative capabilities, CyberBOT illustrates a promising direction for developing reliable and curriculum-aligned AI applications in specialized educational contexts.