Raymond Veldhuis

Toward Face Biometric De-identification using Adversarial Examples

Feb 07, 2023

Abstract:The remarkable success of face recognition (FR) has endangered the privacy of internet users, particularly on social media. Recently, researchers have turned to adversarial examples as a countermeasure. In this paper, we assess the effectiveness of using two widely known adversarial methods (BIM and ILLC) for de-identifying personal images. Contrary to previous claims in the literature, we found that it is not easy to achieve a high protection success rate (suppressing the identification rate) with adversarial perturbations that are imperceptible to the human visual system. Finally, we found that the transferability of adversarial examples is highly affected by the training parameters of the network with which they are generated.
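As a rough illustration of the kind of iterative attack the abstract refers to, the sketch below shows the general shape of a BIM-style update: repeated small signed-gradient steps, each followed by clipping to an eps-ball around the original input and to the valid pixel range. The function names, the toy linear loss, and the parameter values are assumptions made for illustration; this is not the paper's experimental setup, and a real attack would take gradients from a face recognition network.

```python
def sign(v):
    """Return -1, 0, or 1 depending on the sign of v."""
    return (v > 0) - (v < 0)


def bim(x, grad_fn, eps, alpha, steps):
    """BIM-style iterative attack on a flat list of pixel values in [0, 1].

    grad_fn is a hypothetical callable returning the loss gradient with
    respect to the input; here it stands in for backpropagation through a model.
    """
    x_adv = list(x)
    for _ in range(steps):
        grad = grad_fn(x_adv)
        # Small step in the direction that increases the loss.
        x_adv = [xi + alpha * sign(gi) for xi, gi in zip(x_adv, grad)]
        # Stay within eps of the original pixel and inside [0, 1].
        x_adv = [
            min(max(xa, x0 - eps, 0.0), x0 + eps, 1.0)
            for xa, x0 in zip(x_adv, x)
        ]
    return x_adv


# Toy loss L(x) = w . x, whose gradient is simply w (assumption for the demo).
w = [1.0, -2.0, 0.0]
x = [0.5, 0.5, 0.5]
x_adv = bim(x, lambda _: w, eps=0.03, alpha=0.01, steps=10)
```

The `eps` bound is what controls perceptibility: a smaller value keeps the perturbation less visible but, as the abstract notes, makes a high protection success rate harder to reach.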

Memory-free Online Change-point Detection: A Novel Neural Network Approach

Jul 08, 2022

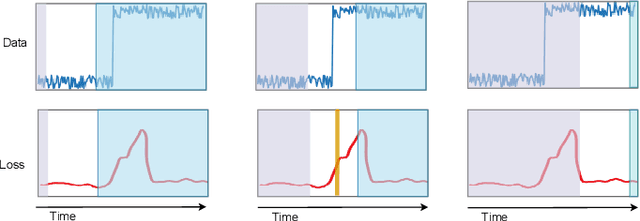

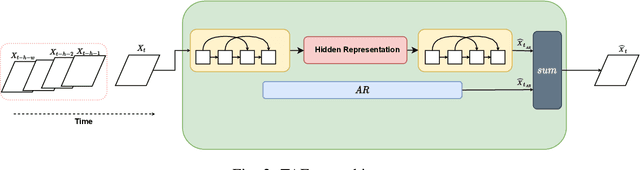

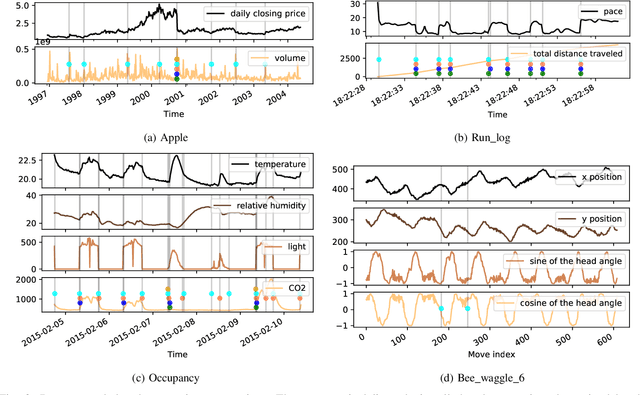

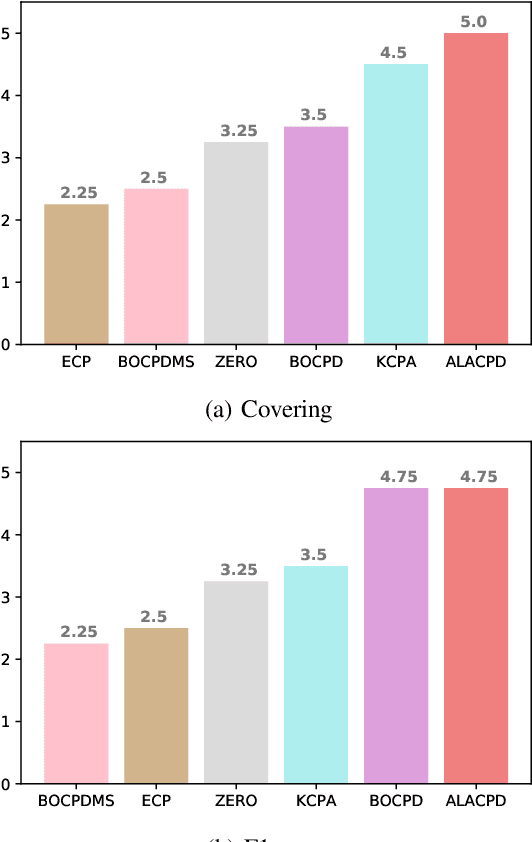

Abstract:Change-point detection (CPD), which detects abrupt changes in the data distribution, is recognized as one of the most significant tasks in time series analysis. Despite the extensive literature on offline CPD, unsupervised online CPD still suffers from major challenges, including scalability, hyperparameter tuning, and learning constraints. To mitigate some of these challenges, in this paper, we propose a novel deep learning approach for unsupervised online CPD from multi-dimensional time series, named Adaptive LSTM-Autoencoder Change-Point Detection (ALACPD). ALACPD exploits an LSTM-autoencoder-based neural network to perform unsupervised online CPD. It continuously adapts to the incoming samples without keeping the previously received input, thus being memory-free. We perform an extensive evaluation on several real-world time series CPD benchmarks. We show that ALACPD, on average, ranks first among state-of-the-art CPD algorithms in terms of quality of the time series segmentation, and it is on par with the best performer in terms of the accuracy of the estimated change-points. The implementation of ALACPD is available online on GitHub: https://github.com/zahraatashgahi/ALACPD

A Face Recognition System's Worst Morph Nightmare, Theoretically

Nov 30, 2021

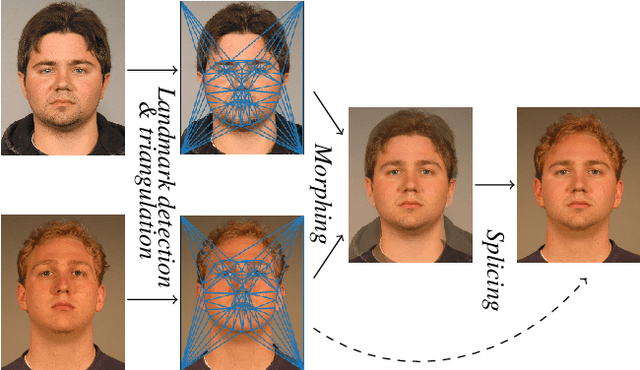

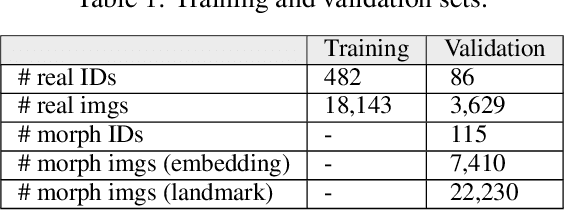

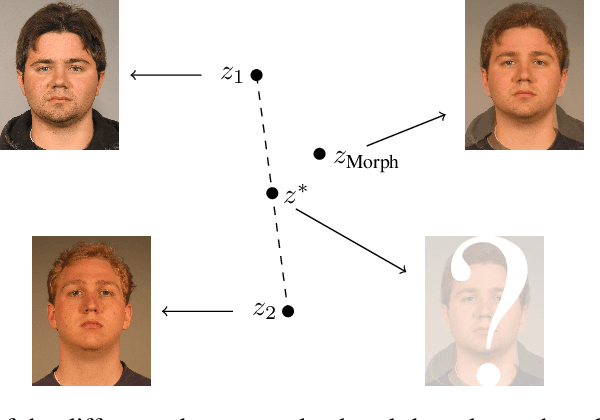

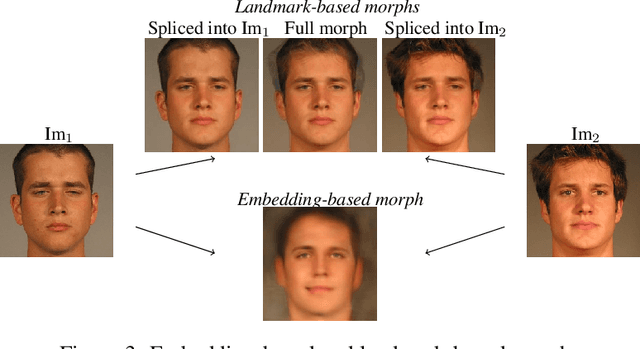

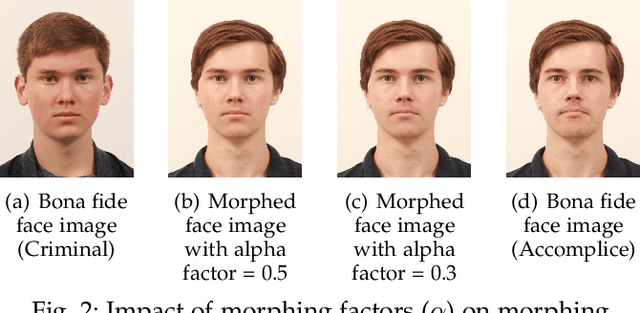

Abstract:It has been shown that Face Recognition Systems (FRSs) are vulnerable to morphing attacks, but most research focusses on landmark-based morphs. A second method for generating morphs uses Generative Adversarial Networks, which results in convincingly real facial images that can be almost as challenging for FRSs as landmark-based attacks. We propose a method to create a third, different type of morph, that has the advantage of being easier to train. We introduce the theoretical concept of worst-case morphs, which are those morphs that are most challenging for a fixed FRS. For a set of images and corresponding embeddings in an FRS's latent space, we generate images that approximate these worst-case morphs using a mapping from embedding space back to image space. While the resulting images are not yet as challenging as other morphs, they can provide valuable information in future research on Morphing Attack Detection (MAD) methods and on weaknesses of FRSs. Methods for MAD need to be validated on more varied morph databases. Our proposed method contributes to achieving such variation.
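One way to make the worst-case idea concrete, under assumptions the abstract does not state, is to suppose that the FRS compares unit-length embeddings by angle. Under that assumption, the embedding that is simultaneously as close as possible to two identities is the normalized midpoint of their embeddings. The sketch below computes that target point only; the function names are hypothetical, and it leaves out the mapping from embedding space back to image space that the paper relies on.

```python
import math


def normalize(v):
    """Scale a vector to unit length (fails for the zero vector)."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def angle(u, v):
    """Angle in radians between two unit vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return math.acos(max(-1.0, min(1.0, dot)))


def worst_case_embedding(e1, e2):
    """Normalized midpoint of two unit embeddings.

    Assumes angular distance; undefined when e1 == -e2.
    """
    return normalize([a + b for a, b in zip(e1, e2)])


e1 = normalize([1.0, 0.0, 0.0])
e2 = normalize([0.0, 1.0, 0.0])
m = worst_case_embedding(e1, e2)
# m is equally far (in angle) from both identities.
```

An image whose embedding lands at `m` would be as hard as possible for this hypothetical FRS to separate from either contributing identity, which is the sense in which such a morph is "worst-case" for a fixed system.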

Quick and Robust Feature Selection: the Strength of Energy-efficient Sparse Training for Autoencoders

Dec 01, 2020

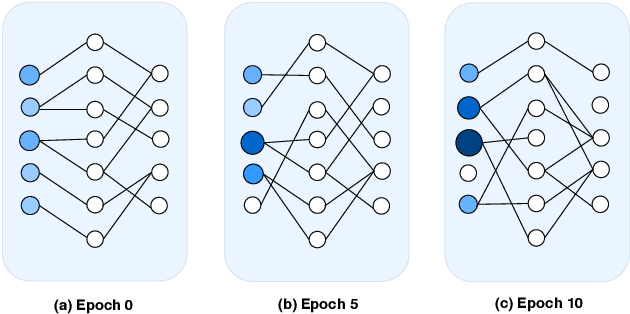

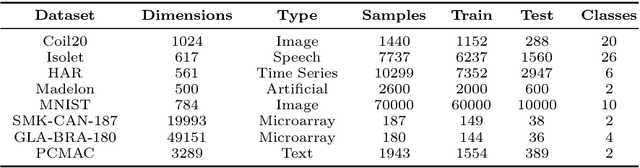

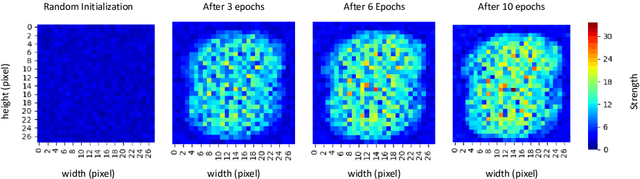

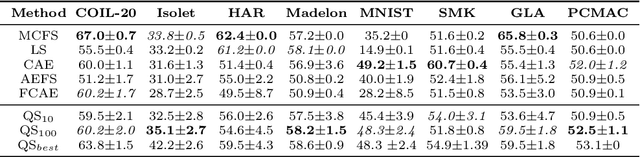

Abstract:Major complications arise from the recent increase in the amount of high-dimensional data, including high computational costs and memory requirements. Feature selection, which identifies the most relevant and informative attributes of a dataset, has been introduced as a solution to this problem. Most of the existing feature selection methods are computationally inefficient; inefficient algorithms lead to high energy consumption, which is not desirable for devices with limited computational and energy resources. In this paper, a novel and flexible method for unsupervised feature selection is proposed. This method, named QuickSelection, introduces the strength of the neuron in sparse neural networks as a criterion to measure the feature importance. This criterion, blended with sparsely connected denoising autoencoders trained with the sparse evolutionary training procedure, derives the importance of all input features simultaneously. We implement QuickSelection in a purely sparse manner as opposed to the typical approach of using a binary mask over connections to simulate sparsity. It results in a considerable speed increase and memory reduction. When tested on several benchmark datasets, including five low-dimensional and three high-dimensional datasets, the proposed method is able to achieve the best trade-off of classification and clustering accuracy, running time, and maximum memory usage, among widely used approaches for feature selection. Besides, our proposed method requires the least amount of energy among the state-of-the-art autoencoder-based feature selection methods.
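The strength criterion mentioned in the abstract can be sketched as follows, assuming that the strength of an input neuron is the sum of the absolute weights of its outgoing connections and that features are ranked by that value. The weight matrix and function names are made up for illustration. A dense nested list is used only for readability, whereas the abstract describes a purely sparse implementation.

```python
def neuron_strength(weights):
    """Strength of each input neuron.

    weights[i][j] is the weight from input feature i to hidden neuron j,
    with 0.0 standing in for an absent connection (dense layout assumed
    here for readability only).
    """
    return [sum(abs(w) for w in row) for row in weights]


def select_features(weights, k):
    """Return the indices of the k input features with the highest strength."""
    strength = neuron_strength(weights)
    ranked = sorted(range(len(strength)), key=lambda i: strength[i], reverse=True)
    return ranked[:k]


# Hypothetical trained weights: 4 input features, 3 hidden neurons.
weights = [
    [0.9, 0.0, -0.4],
    [0.0, 0.1, 0.0],
    [-0.7, 0.0, 0.8],
    [0.0, 0.0, 0.05],
]
top = select_features(weights, 2)
```

Because the strengths of all inputs come from a single pass over the first-layer weights, every feature is scored at once, which matches the abstract's claim that importance is derived for all input features simultaneously.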

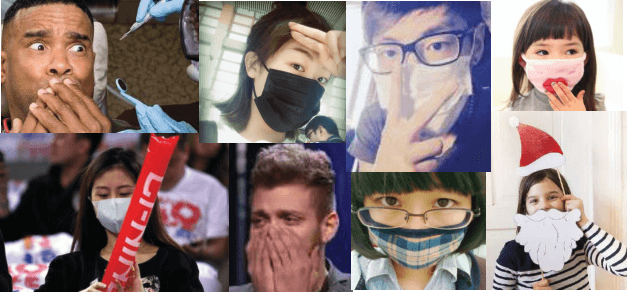

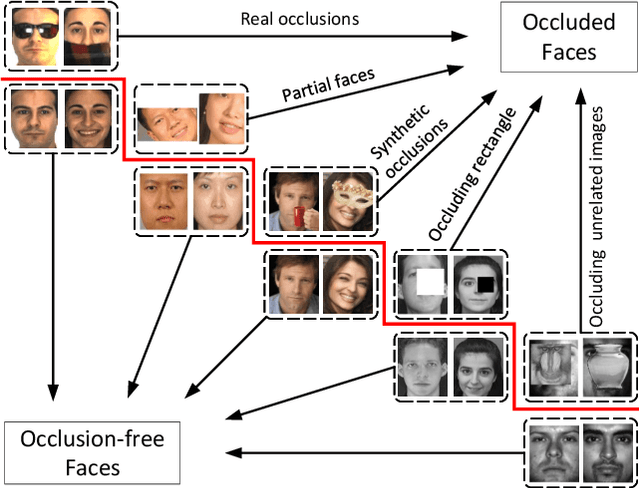

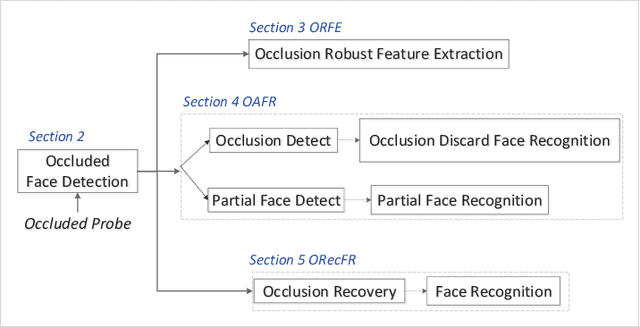

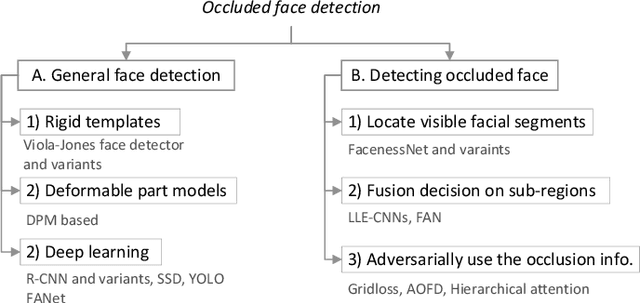

A survey of face recognition techniques under occlusion

Jun 19, 2020

Abstract:The limited capacity to recognize faces under occlusions is a long-standing problem that presents a unique challenge for face recognition systems and even for humans. The problem regarding occlusion is less covered by research when compared to other challenges such as pose variation, different expressions, etc. Nevertheless, occluded face recognition is imperative to exploit the full potential of face recognition for real-world applications. In this paper, we restrict the scope to occluded face recognition. First, we explore what the occlusion problem is and what inherent difficulties can arise. As a part of this review, we introduce face detection under occlusion, a preliminary step in face recognition. Second, we present how existing face recognition methods cope with the occlusion problem and classify them into three categories, which are 1) occlusion robust feature extraction approaches, 2) occlusion aware face recognition approaches, and 3) occlusion recovery based face recognition approaches. Furthermore, we analyze the motivations, innovations, pros and cons, and the performance of representative approaches for comparison. Finally, future challenges and method trends of occluded face recognition are thoroughly discussed.

Morphing Attack Detection -- Database, Evaluation Platform and Benchmarking

Jun 16, 2020

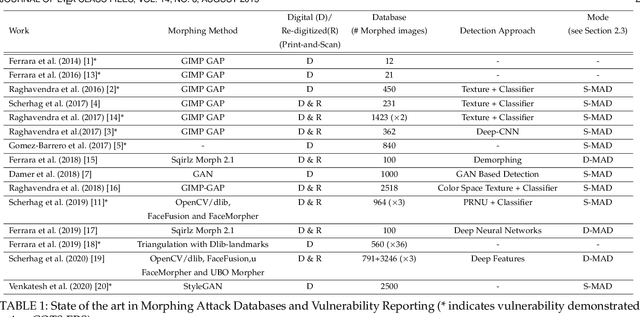

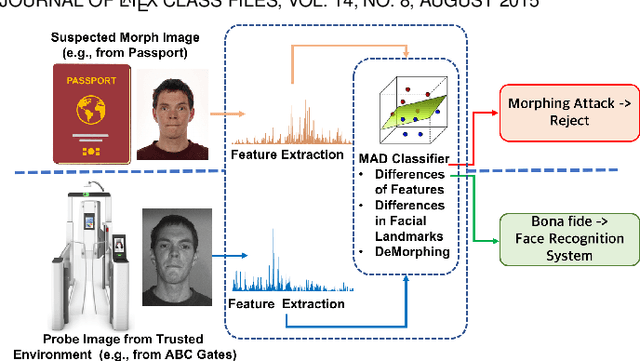

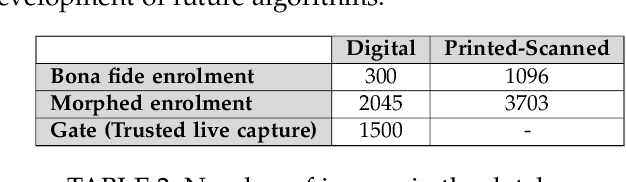

Abstract:Morphing attacks pose a severe threat to Face Recognition Systems (FRS). Despite the number of advancements reported in recent works, we note serious open issues that are not addressed. Morphing Attack Detection (MAD) algorithms are often prone to generalization challenges as they are database dependent. The existing databases, mostly of a semi-public nature, lack diversity in terms of ethnicity, morphing processes and post-processing pipelines. Further, they do not reflect a realistic operational scenario for Automated Border Control (ABC) and do not provide a basis to test MAD on unseen data, in order to benchmark the robustness of algorithms. In this work, we present a new sequestered dataset for facilitating the advancement of MAD, where the algorithms can be tested on unseen data in an effort to better generalize. The newly constructed dataset consists of facial images from 150 subjects from various ethnicities, age-groups and both genders. In order to challenge the existing MAD algorithms, the morphed images are created with careful subject pre-selection and further post-processed to remove the morphing artifacts. The images are also printed and scanned to remove all digital cues and to simulate a realistic challenge for MAD algorithms. Further, we present a new online evaluation platform to test algorithms on sequestered data. With the platform we can benchmark the morph detection performance and study the generalization ability. This work also presents a detailed analysis on various subsets of sequestered data and outlines open challenges for future directions in MAD research.

Predicting Face Recognition Performance Using Image Quality

Oct 24, 2015

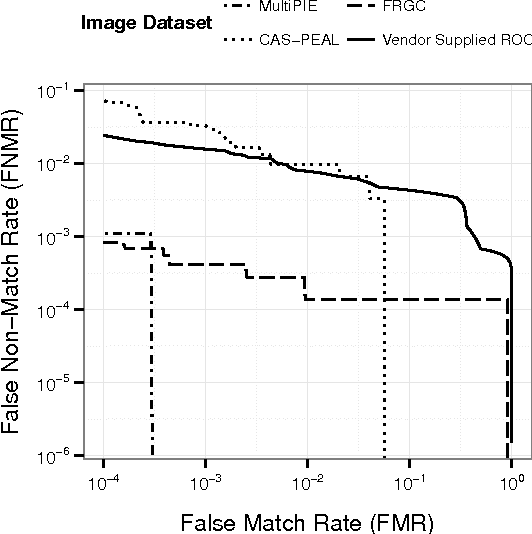

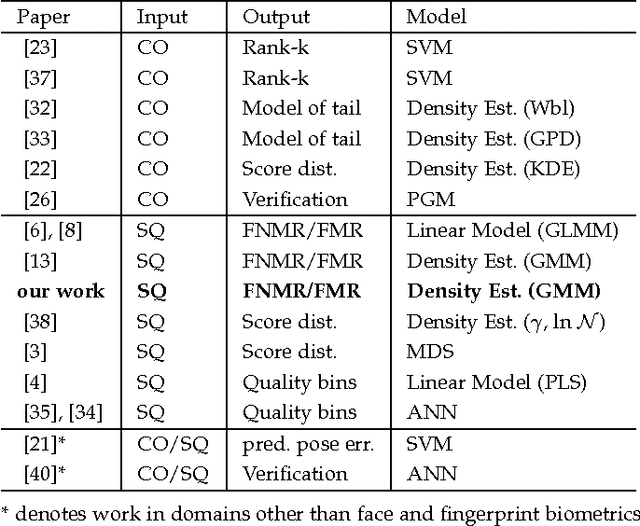

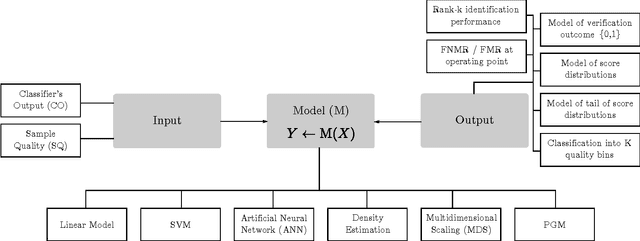

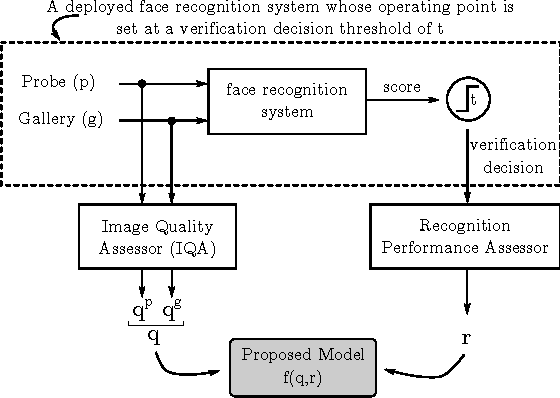

Abstract:This paper proposes a data driven model to predict the performance of a face recognition system based on image quality features. We model the relationship between image quality features (e.g. pose, illumination, etc.) and recognition performance measures using a probability density function. To address the issue of limited nature of practical training data inherent in most data driven models, we have developed a Bayesian approach to model the distribution of recognition performance measures in small regions of the quality space. Since the model is based solely on image quality features, it can predict performance even before the actual recognition has taken place. We evaluate the performance predictive capabilities of the proposed model for six face recognition systems (two commercial and four open source) operating on three independent data sets: MultiPIE, FRGC and CAS-PEAL. Our results show that the proposed model can accurately predict performance using an accurate and unbiased Image Quality Assessor (IQA). Furthermore, our experiments highlight the impact of the unaccounted quality space -- the image quality features not considered by IQA -- in contributing to performance prediction errors.

Can Facial Uniqueness be Inferred from Impostor Scores?

Oct 23, 2013

Abstract:In Biometrics, facial uniqueness is commonly inferred from impostor similarity scores. In this paper, we show that such uniqueness measures are highly unstable in the presence of image quality variations like pose, noise and blur. We also experimentally demonstrate the instability of a recently introduced impostor-based uniqueness measure of [Klare and Jain 2013] when subject to poor quality facial images.
